[MindSpore易点通] How to Save Models for Checkpoint Comparison, and How to Use the Print Operator

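1 Saving model checkpoints for comparison

After source code from another framework is migrated to MindSpore, an accuracy problem can be investigated by comparing the models from the epoch with the highest validation accuracy.

The scenario here: the same network exists as MindSpore code and as PyTorch code, and the MindSpore validation accuracy is lower than the PyTorch one. The steps below describe how to compare the models saved at the epoch with the highest validation accuracy in order to locate the problem.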

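1.1 Preparation

During training, save the PyTorch model and the MindSpore model at every epoch.

- PyTorch setup (see the sample code for the complete script):

import os
import torch

model.eval()
if not os.path.isdir('checkpoint'):
    os.mkdir('checkpoint')
# File name pattern: <epoch>_<validation accuracy>_resnet50.pth
torch.save(model.state_dict(), './checkpoint/' + str(epoch) +
           "_" + str(my_val_accuracy) + '_resnet50.pth')

- MindSpore setup (see the attachment for the complete script):

from mindspore.train.callback import CheckpointConfig, ModelCheckpoint

config_ck = CheckpointConfig(save_checkpoint_steps=steps_per_epoch_train, keep_checkpoint_max=epoch_max)
ckpoint_cb = ModelCheckpoint(prefix="train_resnet_cifar10", directory="./", config=config_ck)

Here save_checkpoint_steps is the interval, in steps, between two saved checkpoints; it is set to the number of steps in one epoch so that one checkpoint is saved per epoch. keep_checkpoint_max is the maximum number of checkpoints to keep.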
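The relation between the save interval and the epoch length can be sketched as follows. The numbers used (50,000 training images, batch size 128, last partial batch kept) are assumptions for illustration, and `steps_per_epoch` is a hypothetical helper; in a real script the value would normally be read from the dataset object.

```python
import math

def steps_per_epoch(num_samples: int, batch_size: int, drop_remainder: bool = False) -> int:
    """Number of training steps in one epoch of a batched dataset."""
    if drop_remainder:
        # The last, incomplete batch is discarded.
        return num_samples // batch_size
    # The last, incomplete batch still counts as one step.
    return math.ceil(num_samples / batch_size)

# Assumed values: 50,000 training images and a batch size of 128.
steps_per_epoch_train = steps_per_epoch(50000, 128)
print(steps_per_epoch_train)  # 391
```

With these assumed values, passing the result as save_checkpoint_steps gives one checkpoint per epoch.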

1.2 Printing the model output

Print the models saved at the epoch with the highest validation accuracy, once for MindSpore and once for PyTorch.
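For the PyTorch side, the checkpoint file names carry the epoch and the validation accuracy, so the best epoch can be picked from the names alone. The sketch below assumes the `<epoch>_<accuracy>_resnet50.pth` pattern used when saving; `parse_checkpoint_name` and `best_checkpoint` are hypothetical helpers and the file names are made up.

```python
import os
from typing import Iterable, Optional, Tuple

SUFFIX = "_resnet50.pth"

def parse_checkpoint_name(filename: str) -> Optional[Tuple[int, float]]:
    """Return (epoch, accuracy) parsed from '<epoch>_<accuracy>_resnet50.pth', or None."""
    base = os.path.basename(filename)
    if not base.endswith(SUFFIX):
        return None
    epoch_str, _, acc_str = base[: -len(SUFFIX)].partition("_")
    try:
        return int(epoch_str), float(acc_str)
    except ValueError:
        return None

def best_checkpoint(filenames: Iterable[str]) -> Optional[str]:
    """Pick the file with the highest accuracy; ties go to the later epoch."""
    best_name, best_key = None, None
    for name in filenames:
        parsed = parse_checkpoint_name(name)
        if parsed is None:
            continue  # skip files that do not follow the pattern
        epoch, acc = parsed
        key = (acc, epoch)
        if best_key is None or key > best_key:
            best_name, best_key = name, key
    return best_name

# Made-up file names for illustration only.
names = ["1_55.2_resnet50.pth", "2_71.9_resnet50.pth", "3_68.4_resnet50.pth", "notes.txt"]
print(best_checkpoint(names))  # 2_71.9_resnet50.pth
```

In practice the list of names could come from `os.listdir('checkpoint')`.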

- Load and print the MindSpore model:

from mindspore import load_checkpoint

param_dict = load_checkpoint("./train_mindspore/train_resnet_cifar10_1-162_391.ckpt")
for key in param_dict.keys():
    print(key, param_dict[key].data.asnumpy())

The output is as follows:

conv1.weight [[[[-0.06971329 -0.00755827 -0.02128288]
   [-0.11358283 0.0838231 0.05153372]
   [-0.16815324 0.21193054 0.2659021 ]]
...

- Load and print the PyTorch model:

import torch

# The file holds the state_dict saved during training, so this prints an OrderedDict of tensors.
model = torch.load('./checkpoint/174_0_resnet50.pth')
print(model)

The output is as follows:

OrderedDict([('conv1.weight', tensor([[[[ 0.0798, 0.2771, -0.0230],
          [-0.4563, -0.4013, 0.2395],
          [-0.0804, 0.2683, 0.2198]],
...

1.3 Comparing the model outputs

Compare the printed items one by one. If an item is inconsistent between the two frameworks, analyze the corresponding operator; it can be looked up in the MindSpore API documentation.
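Because the two models are trained independently, their weights are not expected to match element by element. One possible way to make the comparison systematic is to compare summary statistics per parameter. The sketch below assumes every tensor has already been flattened to a plain list of floats (for example with `.asnumpy().ravel().tolist()` on the MindSpore side and `.numpy().ravel().tolist()` on the PyTorch side); `summarize`, `compare_params` and the threshold of 5 are hypothetical choices, and the numbers are toy data.

```python
import math
from typing import Dict, List, Sequence

def summarize(values: Sequence[float]) -> Dict[str, float]:
    """Mean, standard deviation and largest magnitude of a flat list of numbers."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    return {"mean": mean, "std": math.sqrt(var), "max_abs": max(abs(v) for v in values)}

def compare_params(ms_params: Dict[str, Sequence[float]],
                   pt_params: Dict[str, Sequence[float]],
                   ratio_threshold: float = 5.0) -> List[str]:
    """Names of shared parameters whose standard deviations differ by more than the threshold."""
    suspicious = []
    for name in sorted(set(ms_params) & set(pt_params)):
        ms_std = summarize(ms_params[name])["std"]
        pt_std = summarize(pt_params[name])["std"]
        lo, hi = sorted((ms_std, pt_std))
        if hi == 0.0:
            continue  # both constant, nothing to compare
        if lo == 0.0 or hi / lo > ratio_threshold:
            suspicious.append(name)
    return suspicious

# Toy data for illustration only.
ms_params = {"conv1.weight": [0.01, -0.01, 0.02, -0.02], "linear.bias": [0.0, 0.1]}
pt_params = {"conv1.weight": [0.1, -0.1, 0.2, -0.2], "linear.bias": [0.0, 0.1]}
print(compare_params(ms_params, pt_params))  # ['conv1.weight']
```

Only names present in both dictionaries are compared. Parameter names can differ between the frameworks (MindSpore's BatchNorm uses gamma and beta where PyTorch uses weight and bias), so a name mapping may be needed first.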

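2 Using the Print operator

MindSpore's own Print operator prints the Tensor or string information passed to it. It is used like any other operator: declare it in the network's __init__ and call it in construct.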

2.1 Printing forward outputs

A simple example of printing a forward output with the Print operator:

import mindspore.nn as nn
from mindspore.ops import operations as P

class ResNet(nn.Cell):
    def __init__(self, num_blocks, num_classes=10):
        super(ResNet, self).__init__()
        # _conv2d: convolution helper (see the definition in section 2.4)
        self.conv1 = _conv2d(3, 64, kernel_size=3, stride=1, padding=1)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU()
        self.print = P.Print()

    def construct(self, x_input):
        x_input = self.conv1(x_input)
        # Print the forward output of the first convolution
        self.print('output_conv1:', x_input)
        out = self.relu(x_input)
        return out

The output is as follows:

output_conv1:
Tensor(shape=[1, 64, 32, 32], dtype=Float32, value=
[[[[ 2.72979736e-02 5.00488281e-02 9.80834961e-02 ... 1.38671875e-01 1.46850586e-01 5.52673340e-02]
   [ 1.49291992e-01 1.49658203e-01 1.94824219e-01 ... 3.98193359e-01 3.90380859e-01 1.97875977e-01]
   [ 7.49511719e-02 7.70263672e-02 1.22924805e-01 ... 2.86865234e-01 2.58056641e-01 1.22192383e-01]
...

2.2 Printing model weights

A simple example of printing model weights with the Print operator:

class ResNet(nn.Cell):
    def __init__(self, num_blocks, num_classes=10):
        super(ResNet, self).__init__()
        self.conv1 = _conv2d(3, 64, kernel_size=3, stride=1, padding=1)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU()
        self.print = P.Print()

    def construct(self, x_input):
        x_input = self.conv1(x_input)
        # Print the weight parameter of the first convolution
        self.print("self.conv1.weight:", self.conv1.weight)
        out = self.bn1(x_input)
        out = self.relu(out)
        return out

The output is as follows:

self.conv1.weight:
Tensor(shape=[64, 3, 3, 3], dtype=Float32, value=
[[[[ 1.87883265e-02 8.28264281e-02 3.95536423e-02]
   [ 1.72755457e-02 -2.93852817e-02 5.61546721e-02]
   [-2.40226928e-02 1.50793493e-01 1.78463876e-01]]
...

2.3 Printing backward gradients

A simple example of printing a backward gradient with the Print operator:

class ResNet(nn.Cell):
    def __init__(self, num_blocks, num_classes=10):
        super(ResNet, self).__init__()
        self.in_planes = 64
        self.conv1 = _conv2d(3, 64, kernel_size=3, stride=1, padding=1)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU()
        self.print = P.Print()
        # InsertGradientOf is an identity in the forward pass; in the backward
        # pass it calls save_gradient with the gradient flowing through it.
        self.print_grad = P.InsertGradientOf(self.save_gradient)

    def save_gradient(self, dout):
        # dout is the gradient with respect to the conv1 output
        self.print("out_grad_conv1", dout)
        return dout

    def construct(self, x_input):
        x_input = self.conv1(x_input)
        # Route the activation through print_grad so the hook sits on the gradient path
        x_input = self.print_grad(x_input)
        out = self.bn1(x_input)
        out = self.relu(out)
        return out

The output is as follows:

out_grad_conv1
Tensor(shape=[128, 64, 32, 32], dtype=Float32, value=
[[[[-3.97216797e-01 4.74853516e-02 2.53417969e-01 ... 5.80749512e-02 7.40356445e-02 2.26806641e-01]
   [-1.38867188e+00 -1.12890625e+00 -7.66601562e-01 ... -7.64160156e-01 -8.21777344e-01 -2.39013672e-01]
   [-1.13964844e+00 -9.60449219e-01 -6.69921875e-01 ... -9.59472656e-01 -1.27050781e+00 -8.31054688e-01]
...

2.4 A concrete example

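The following uses a piece of problematic MindSpore code as an example to show how to locate a problem by printing outputs with the Print operator.

Symptom: the MindSpore validation accuracy is lower than the PyTorch one

Validation accuracy: MindSpore: 91.96%; PyTorch: 92.58%.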

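Preparation

To make sure the PyTorch and MindSpore networks receive the same input images, modify the MindSpore code as follows and make the corresponding changes in the PyTorch code.

(1) Set shuffle to False for the training dataset.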


(2) In the data transforms, comment out the random augmentations:

transform_data = C.Compose([
    # CV.RandomCrop((32, 32), (4, 4, 4, 4)),
    # CV.RandomHorizontalFlip(),
    CV.Rescale(rescale, shift),
    CV.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010)),
    CV.HWC2CHW()])

Measure: print the forward output layer by layer with Print and compare the orders of magnitude

To print the output of every layer, add self.print = P.Print() in __init__. The main network prints its outputs as follows:

class ResNet(nn.Cell):
    def __init__(self, num_blocks, num_classes=10):
        super(ResNet, self).__init__()
        self.in_planes = 64
        self.conv1 = nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1, pad_mode='pad', weight_init='Uniform')
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU()
        self.layer1 = self._make_layer(64, num_blocks[0], stride=1)
        self.layer2 = self._make_layer(128, num_blocks[1], stride=2)
        self.layer3 = self._make_layer(256, num_blocks[2], stride=2)
        self.layer4 = self._make_layer(512, num_blocks[3], stride=2)
        self.avgpool2d = nn.AvgPool2d(kernel_size=4, stride=4)
        self.reshape = mindspore.ops.Reshape()
        self.linear = nn.Dense(2048, num_classes)
        self.print = P.Print()

    def _make_layer(self, planes, num_blocks, stride):
        # Only the first block of a stage uses the given stride
        strides = [stride] + [1]*(num_blocks-1)
        layers = []
        for stride in strides:
            layers.append(ResidualBlock(self.in_planes, planes, stride))
            self.in_planes = EXPANSION*planes
        return nn.SequentialCell(*layers)

    def construct(self, x_input):
        x_input = self.conv1(x_input)
        self.print('output_conv1:', x_input)
        out = self.bn1(x_input)
        out = self.relu(out)
        self.print('output_relu:', out)
        out = self.layer1(out)
        self.print('output_layer1:', out)
        out = self.layer2(out)
        self.print('output_layer2:', out)
        out = self.layer3(out)
        self.print('output_layer3:', out)
        out = self.layer4(out)
        self.print('output_layer4:', out)
        out = self.avgpool2d(out)
        self.print('output_avgpool2d:', out)
        out = self.reshape(out, (out.shape[0], 2048))
        out = self.linear(out)
        self.print('output_linear:', out)
        return out

Comparing the outputs for the first image shows that the order of magnitude of output_conv1 (the convolution output) differs between the two frameworks.
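The order-of-magnitude check described above can be sketched in a few lines. This is only an illustration under stated assumptions: each printed layer output has been flattened to a list of floats, `order_of_magnitude` and `first_magnitude_mismatch` are hypothetical helpers, and the numbers are toy values, not taken from a real run.

```python
import math
from typing import Dict, Optional, Sequence

def order_of_magnitude(values: Sequence[float]) -> int:
    """Decimal exponent of the mean absolute value (for example 0.03 gives -2)."""
    mean_abs = sum(abs(v) for v in values) / len(values)
    if mean_abs == 0.0:
        raise ValueError("all values are zero")
    return math.floor(math.log10(mean_abs))

def first_magnitude_mismatch(ms_outputs: Dict[str, Sequence[float]],
                             pt_outputs: Dict[str, Sequence[float]]) -> Optional[str]:
    """Name of the first layer, in insertion order, whose magnitudes differ, else None."""
    for name, ms_values in ms_outputs.items():
        if name not in pt_outputs:
            continue
        if order_of_magnitude(ms_values) != order_of_magnitude(pt_outputs[name]):
            return name
    return None

# Toy numbers for illustration only.
ms_outputs = {"output_conv1": [0.02, -0.03, 0.05], "output_relu": [0.2, 0.0, 0.5]}
pt_outputs = {"output_conv1": [0.2, -0.3, 0.5], "output_relu": [0.3, 0.0, 0.4]}
print(first_magnitude_mismatch(ms_outputs, pt_outputs))  # output_conv1
```

Checking layers in network order matters: the first mismatching layer is the one to inspect, since later layers inherit the difference. Comparing floored exponents is coarse (0.09 and 0.11 fall into different buckets), so a flagged layer is a hint to look closer, not a verdict.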

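Problem 1: convolution weight initialization is inconsistent

Comparing the convolution arguments in MindSpore and PyTorch shows that the convolution weights are initialized differently.

In MindSpore, the weights are initialized with the normal method by default: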

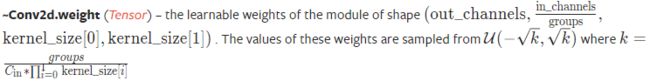

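class mindspore.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, pad_mode="same", padding=0, dilation=1, group=1, has_bias=False, weight_init="normal", bias_init="zeros", data_format="NCHW")

PyTorch, by contrast, uses a different default for nn.Conv2d: the weights are drawn from a uniform distribution whose bound depends on the fan-in of the layer.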
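To see why the two defaults produce outputs of different magnitude, the sketch below works out the numbers for the first 3x3 convolution on RGB input. It relies on PyTorch's default convolution initialization being kaiming_uniform_ with a = sqrt(5), and it assumes that MindSpore's "normal" initializer uses sigma = 0.01; treat that sigma as an assumption to verify against the installed version. `pytorch_default_conv_bound` is a hypothetical helper.

```python
import math

def pytorch_default_conv_bound(in_channels: int, kernel_size: int, groups: int = 1) -> float:
    """Bound b of the U(-b, b) weight init that PyTorch applies to nn.Conv2d by default."""
    fan_in = (in_channels // groups) * kernel_size * kernel_size
    gain = math.sqrt(2.0 / (1.0 + 5.0))  # kaiming_uniform_ with a = sqrt(5)
    std = gain / math.sqrt(fan_in)
    return math.sqrt(3.0) * std          # simplifies to 1 / sqrt(fan_in)

bound = pytorch_default_conv_bound(3, 3)  # fan_in = 3 * 3 * 3 = 27
uniform_std = bound / math.sqrt(3.0)      # standard deviation of U(-b, b)
normal_sigma = 0.01                       # ASSUMED sigma of MindSpore's "normal" initializer
print(round(bound, 4), round(uniform_std, 4), round(uniform_std / normal_sigma, 1))
# 0.1925 0.1111 11.1
```

Under these assumptions the PyTorch weights have a standard deviation roughly ten times larger, which is consistent with the convolution outputs differing by about one order of magnitude.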

Solution: change the convolution weight initialization in MindSpore to match PyTorch

Change the convolution helper to the following form:

import math
import mindspore
import mindspore.nn as nn

def _conv2d(in_channel, out_channel, kernel_size, stride=1, padding=0):
    # Uniform(scale) samples from U(-scale, scale); scale = 1 / sqrt(fan_in)
    scale = math.sqrt(1/(in_channel*kernel_size*kernel_size))
    if padding == 0:
        return nn.Conv2d(in_channel, out_channel, kernel_size=kernel_size,
                         stride=stride, padding=padding, pad_mode='same',
                         weight_init=mindspore.common.initializer.Uniform(scale=scale))
    else:
        return nn.Conv2d(in_channel, out_channel, kernel_size=kernel_size,
                         stride=stride, padding=padding, pad_mode='pad',
                         weight_init=mindspore.common.initializer.Uniform(scale=scale))

Problem 2: weight and bias initialization in the fully connected layer is inconsistent

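Following problem 1, check whether other operators have a similar issue. It turns out that the weight and bias initialization of nn.Dense in MindSpore differs from that of nn.Linear in PyTorch.

Solution: change the weight and bias initialization of the fully connected layer in MindSpore to match PyTorch

Replace the direct use of nn.Dense in MindSpore with the following helper:

def _dense(in_channel, out_channel):
    # Same bound for weight and bias: 1 / sqrt(in_channel)
    scale = math.sqrt(1/in_channel)
    return nn.Dense(in_channel, out_channel,
                    weight_init=mindspore.common.initializer.Uniform(scale=scale),
                    bias_init=mindspore.common.initializer.Uniform(scale=scale))

After updating the code, train again from scratch and print the forward outputs layer by layer.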
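As a quick arithmetic check, the scale used by `_dense` can be compared with the bounds of PyTorch's default nn.Linear initialization (kaiming_uniform_ with a = sqrt(5) for the weight, and U(-1/sqrt(fan_in), 1/sqrt(fan_in)) for the bias). `linear_init_bounds` is a hypothetical helper, and 2048 is the input width of the final layer in the network above.

```python
import math
from typing import Tuple

def linear_init_bounds(in_features: int) -> Tuple[float, float]:
    """(weight_bound, bias_bound) of PyTorch's default nn.Linear initialization."""
    # kaiming_uniform_ bound: sqrt(6 / ((1 + a^2) * fan_in)) with a^2 = 5
    weight_bound = math.sqrt(6.0 / ((1.0 + 5.0) * in_features))
    bias_bound = 1.0 / math.sqrt(in_features)
    return weight_bound, bias_bound

scale = math.sqrt(1 / 2048)  # value computed inside _dense(2048, num_classes)
weight_bound, bias_bound = linear_init_bounds(2048)
print(math.isclose(scale, weight_bound), math.isclose(scale, bias_bound))  # True True
```

Both bounds reduce to 1/sqrt(in_features), which is why a single scale serves for weight_init and bias_init.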

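Result

The forward outputs of all operators now have the same order of magnitude.

The MindSpore validation accuracy improves and is now higher than the PyTorch one.

Validation accuracy: MindSpore: 92.98%; PyTorch: 92.58%.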