CMT

- 概要

- 介绍

-

- transformer存在的问题

- CMT中块的设计

- 相关工作

-

- 方法

-

- 代码解析

-

概要

CNN捕获局部信息,Transformer来捕获全局信息

介绍

transformer存在的问题

- 将图片打成patch,会忽略图片内部潜在的2D结构和空间局部信息。

- transformer的块输出和输入大小固定,难以显示提取多尺度特征和低分辨率的特征。

- 计算复杂度太高。自注意力的计算与输入图片的大小成二次复杂度。

CMT中块的设计

- CMT块中的局部感知单元(LPU)和反向残差前馈网络(IRFFN)可以帮助捕获中间特征内的局部和全局结构信息,并提高网络的表示能力。

相关工作

CNN

| 网络 |

特点 |

| LeNet |

手写数字识别 |

| AlexNet & VGGNet & GoogleNet & InceptionNet |

ImageNet大赛 |

| ResNet |

泛化性增强 |

| SENet |

自适应地重新校准通道特征响应 |

Vision Transformer

| 网络 |

特点 |

| ViT |

将NLP中的Transformer引入到CV领域 |

| DeiT |

蒸馏的方式,使用teacher model来指导Vision Transformer的训练 |

| T2T-ViT |

递归的将相邻的tokens聚合成为一个,transformer对visual tokens进行建模 |

| TNT |

outer block建模patch embedding 之间的关系,inner block建模pixel embedding之间的关系 |

| PVT |

将金字塔结构引入到 ViT 中,可以为各种像素级密集预测任务生成多尺度特征图。 |

| CPVT和CvT |

cnn引入到transformer之中 |

方法

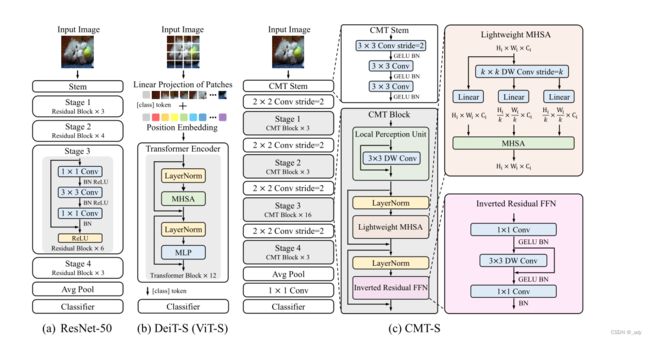

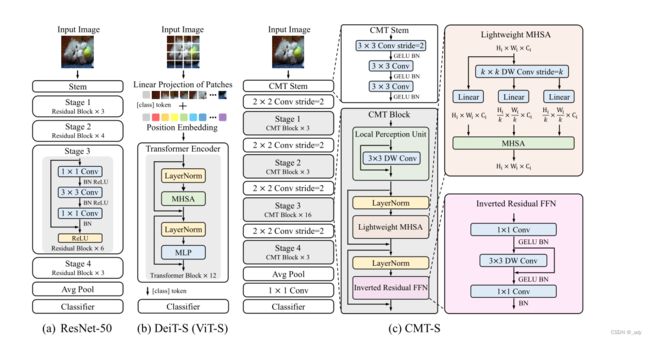

整体架构

- CMT stem(减小图片大小,提取本地信息)

- Conv Stride(用来减少feature map,增大channel)

- CMT block(捕获全局和局部关系)

CMT Block

LPU

- 位置编码会破坏卷积中的平移不变性,忽略了patch之间的局部信息和patch内部的结构信息。

- LPU来缓解这个问题。

LMHSA

- 使用深度卷积来减少KV的大小,加入相对位置偏置,构成了轻量级的自注意力计算。

IRFFN

- 深度卷积增强局部信息的提取,残差结构来促进梯度的传播能力。

代码解析

LPU

class LocalPerceptionUint(nn.Module):

def __init__(self, dim, act=False):

super(LocalPerceptionUint, self).__init__()

self.act = act

self.conv_3x3_dw = ConvDW3x3(dim)

if self.act:

self.actation = nn.Sequential(

nn.GELU(),

nn.BatchNorm2d(dim)

)

def forward(self, x):

if self.act:

out = self.actation(self.conv_3x3_dw(x))

return out

else:

out = self.conv_3x3_dw(x)

return out

IRFFN

class InvertedResidualFeedForward(nn.Module):

def __init__(self, dim, dim_ratio=4.):

super(InvertedResidualFeedForward, self).__init__()

output_dim = int(dim_ratio * dim)

self.conv1x1_gelu_bn = ConvGeluBN(

in_channel=dim,

out_channel=output_dim,

kernel_size=1,

stride_size=1,

padding=0

)

self.conv3x3_dw = ConvDW3x3(dim=output_dim)

self.act = nn.Sequential(

nn.GELU(),

nn.BatchNorm2d(output_dim)

)

self.conv1x1_pw = nn.Sequential(

nn.Conv2d(output_dim, dim, 1, 1, 0),

nn.BatchNorm2d(dim)

)

def forward(self, x):

x = self.conv1x1_gelu_bn(x)

out = x + self.act(self.conv3x3_dw(x))

out = self.conv1x1_pw(out)

return out

LMHSA

class LightMutilHeadSelfAttention(nn.Module):

"""calculate the self attention with down sample the resolution for k, v, add the relative position bias before softmax

Args:

dim (int) : features map channels or dims

num_heads (int) : attention heads numbers

relative_pos_embeeding (bool) : relative position embeeding

no_distance_pos_embeeding (bool): no_distance_pos_embeeding

features_size (int) : features shape

qkv_bias (bool) : if use the embeeding bias

qk_scale (float) : qk scale if None use the default

attn_drop (float) : attention dropout rate

proj_drop (float) : project linear dropout rate

sr_ratio (float) : k, v resolution downsample ratio

Returns:

x : LMSA attention result, the shape is (B, H, W, C) that is the same as inputs.

"""

def __init__(self, dim, num_heads=8, features_size=56,

relative_pos_embeeding=False, no_distance_pos_embeeding=False, qkv_bias=False, qk_scale=None,

attn_drop=0., proj_drop=0., sr_ratio=1.):

super(LightMutilHeadSelfAttention, self).__init__()

assert dim % num_heads == 0, f"dim {dim} should be divided by num_heads {num_heads}"

self.dim = dim

self.num_heads = num_heads

head_dim = dim // num_heads

self.scale = qk_scale or head_dim ** -0.5

self.relative_pos_embeeding = relative_pos_embeeding

self.no_distance_pos_embeeding = no_distance_pos_embeeding

self.features_size = features_size

self.q = nn.Linear(dim, dim, bias=qkv_bias)

self.kv = nn.Linear(dim, dim*2, bias=qkv_bias)

self.attn_drop = nn.Dropout(attn_drop)

self.proj = nn.Linear(dim, dim)

self.proj_drop = nn.Dropout(proj_drop)

self.softmax = nn.Softmax(dim=-1)

self.sr_ratio = sr_ratio

if sr_ratio > 1:

self.sr = nn.Conv2d(dim, dim, kernel_size=sr_ratio, stride=sr_ratio)

self.norm = nn.LayerNorm(dim)

if self.relative_pos_embeeding:

self.relative_indices = generate_relative_distance(self.features_size)

self.position_embeeding = nn.Parameter(torch.randn(2 * self.features_size - 1, 2 * self.features_size - 1))

elif self.no_distance_pos_embeeding:

self.position_embeeding = nn.Parameter(torch.randn(self.features_size ** 2, self.features_size ** 2))

else:

self.position_embeeding = None

if self.position_embeeding is not None:

trunc_normal_(self.position_embeeding, std=0.2)

def forward(self, x):

B, C, H, W = x.shape

N = H*W

x_q = rearrange(x, 'B C H W -> B (H W) C')

q = self.q(x_q).reshape(B, N, self.num_heads, C // self.num_heads).permute(0, 2, 1, 3)

if self.sr_ratio > 1:

x_reduce_resolution = self.sr(x)

x_kv = rearrange(x_reduce_resolution, 'B C H W -> B (H W) C ')

x_kv = self.norm(x_kv)

else:

x_kv = rearrange(x, 'B C H W -> B (H W) C ')

kv_emb = rearrange(self.kv(x_kv), 'B N (dim h l ) -> l B h N dim', h=self.num_heads, l=2)

k, v = kv_emb[0], kv_emb[1]

attn = (q @ k.transpose(-2, -1)) * self.scale

q_n, k_n = q.shape[1], k.shape[2]

if self.relative_pos_embeeding:

attn = attn + self.position_embeeding[self.relative_indices[:, :, 0], self.relative_indices[:, :, 1]][:, :k_n]

elif self.no_distance_pos_embeeding:

attn = attn + self.position_embeeding[:, :k_n]

attn = self.softmax(attn)

attn = self.attn_drop(attn)

x = (attn @ v).transpose(1, 2).reshape(B, N, C)

x = self.proj(x)

x = self.proj_drop(x)

x = rearrange(x, 'B (H W) C -> B C H W ', H=H, W=W)

return x