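YOLO-Fastestv2: Training on Your Own Dataset

Introduction to YOLO-Fastestv2

YOLO-Fastestv2:

Simple, fast, compact, and easy to port;

Low resource usage, strong single-core performance, and lower power consumption;

Faster and smaller: trades a 0.3% loss in accuracy for 30% faster inference and 25% fewer parameters;

Fast to train, with modest compute requirements;

Training workflow:

I. Set up the environment

1. Clone the code from GitHub to your local machine by running the following in a terminal: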

git clone https://github.com/dog-qiuqiu/Yolo-FastestV2.git

2. Change into the Yolo-FastestV2 directory:

cd Yolo-FastestV2

3. Install the required Python dependencies:

# pip3 install -r requirements.txt

pip3 install -r requirements.txt -i https://pypi.mirrors.ustc.edu.cn/simple # use a mirror in China, which is faster there

II. Prepare the data

1. Collect the images to be used for detection and annotate them with labelme:

Example labelme annotation:

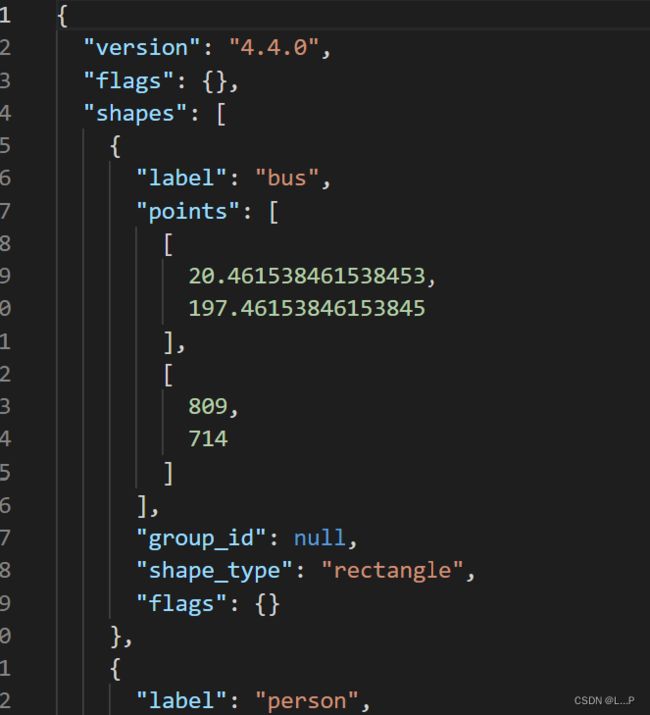

Result: the images together with their corresponding annotation JSON files.
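To make the input of the next step concrete, here is a minimal sketch of reading boxes out of a labelme annotation. The sample JSON is trimmed down and its values are made up for illustration; `list_boxes` is a hypothetical helper, not part of labelme or of the repository. It only relies on the `shapes`, `label`, and `points` fields that the conversion script also reads.

```python
import json

# Hypothetical, trimmed-down labelme annotation; all values are made up.
sample = """
{
  "shapes": [
    {"label": "bus", "points": [[50.0, 80.0], [400.0, 300.0]], "shape_type": "rectangle"},
    {"label": "person", "points": [[420.0, 120.0], [470.0, 290.0]], "shape_type": "rectangle"}
  ],
  "imagePath": "0001.jpg",
  "imageHeight": 480,
  "imageWidth": 640
}
"""


def list_boxes(annotation):
    """Return (label, xmin, ymin, xmax, ymax) for every shape in a labelme dict."""
    boxes = []
    for shape in annotation["shapes"]:
        xs = [p[0] for p in shape["points"]]
        ys = [p[1] for p in shape["points"]]
        boxes.append((shape["label"], min(xs), min(ys), max(xs), max(ys)))
    return boxes


annotation = json.loads(sample)
boxes = list_boxes(annotation)
for box in boxes:
    print(box)
```

Taking the minimum and maximum over all points means the same code also yields a bounding box for polygon shapes.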

2. Convert the JSON files into the txt label files that YOLO-Fastestv2 (YOLOv5 format) needs for training:

import json
import os

import cv2
import numpy as np

# 1. Names of the annotated classes (a label's index in this list is its class id)
classes = ["bus", "person"]

# Directory that holds the images and their labelme JSON files
labelme_path = "./images"

# Collect the image files to convert
files = []
for file in os.listdir(labelme_path):
    if file.split(".")[-1] in ["jpg", "png", "bmp", "jpeg", "tif"]:
        files.append(file)


def convert(size, box):
    """Convert a pixel box (xmin, xmax, ymin, ymax) to normalized (x, y, w, h).

    size is (image width, image height).
    """
    dw = 1. / (size[0])
    dh = 1. / (size[1])
    # Box centre in pixels, shifted by one pixel as in the original code
    x = (box[0] + box[1]) / 2.0 - 1
    y = (box[2] + box[3]) / 2.0 - 1
    w = box[1] - box[0]
    h = box[3] - box[2]
    # Normalize by the image size
    x = x * dw
    w = w * dw
    y = y * dh
    h = h * dh
    return x, y, w, h


def yolo_json2txt(filex):
    """Write one txt label file per image plus the train/val path lists."""
    os.makedirs('./tmp/', exist_ok=True)
    with open('./tmp/train.txt', 'w') as list_file_train, \
            open('./tmp/val.txt', 'w') as list_file_val:
        for i, imagePath in enumerate(filex):
            json_file_ = os.path.splitext(imagePath)[0]
            json_filename = os.path.join(labelme_path, json_file_ + ".json")
            if not os.path.exists(json_filename):
                # No annotation for this image: report it and skip
                print(json_filename)
                continue
            with open(json_filename, "r", encoding="utf-8") as f:
                json_file = json.load(f)

            # Every fifth image goes to the val list, the rest to the train list
            # (one absolute image path per line)
            image_file = os.path.abspath(os.path.join(labelme_path, imagePath))
            if i % 5 == 0:
                list_file_val.write('%s\n' % image_file)
            else:
                list_file_train.write('%s\n' % image_file)

            height, width, channels = cv2.imread(image_file).shape
            # The label file is written next to the image, with the same base name
            label_filename = os.path.join(labelme_path, json_file_ + ".txt")
            with open(label_filename, 'w') as out_file:
                for multi in json_file["shapes"]:
                    points = np.array(multi["points"])
                    # Bounding box of the annotated points, clamped at 0
                    xmin = max(min(points[:, 0]), 0)
                    xmax = max(max(points[:, 0]), 0)
                    ymin = max(min(points[:, 1]), 0)
                    ymax = max(max(points[:, 1]), 0)
                    label = multi["label"]
                    # Skip degenerate boxes
                    if xmax <= xmin or ymax <= ymin:
                        continue
                    cls_id = classes.index(label)
                    b = (float(xmin), float(xmax), float(ymin), float(ymax))
                    bb = convert((width, height), b)
                    out_file.write(str(cls_id) + " " + " ".join([str(a) for a in bb]) + '\n')
                    print(json_filename, xmin, ymin, xmax, ymax, cls_id)


if __name__ == '__main__':
    yolo_json2txt(files)
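As a worked example of the normalization done by `convert`, the sketch below applies the same arithmetic (including the one-pixel shift of the centre) to a made-up box in a 640×480 image and then maps the result back to pixels as a sanity check. `to_yolo` and `to_pixels` are hypothetical names used only for this illustration.

```python
def to_yolo(img_w, img_h, xmin, xmax, ymin, ymax):
    """Pixel box -> normalized (x_center, y_center, width, height)."""
    x = ((xmin + xmax) / 2.0 - 1) / img_w
    y = ((ymin + ymax) / 2.0 - 1) / img_h
    w = (xmax - xmin) / img_w
    h = (ymax - ymin) / img_h
    return x, y, w, h


def to_pixels(img_w, img_h, x, y, w, h):
    """Inverse of to_yolo: normalized box -> (xmin, xmax, ymin, ymax) in pixels."""
    cx = x * img_w + 1
    cy = y * img_h + 1
    bw = w * img_w
    bh = h * img_h
    return cx - bw / 2.0, cx + bw / 2.0, cy - bh / 2.0, cy + bh / 2.0


# Made-up box: x from 50 to 400, y from 80 to 300, in a 640x480 image
yolo_box = to_yolo(640, 480, 50, 400, 80, 300)
pixel_box = to_pixels(640, 480, *yolo_box)

print("normalized:", ["%.4f" % v for v in yolo_box])
print("back to pixels:", ["%.1f" % v for v in pixel_box])
```

All four normalized values fall between 0 and 1, so the label does not depend on the resolution the image is later resized to.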

Result:

Path list files for the training and test sets (tmp/train.txt and tmp/val.txt):
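Besides the two path lists, the conversion script writes one txt label file per image, each line holding `class_id x y w h` with normalized coordinates. Before training it can be worth checking those files; the following is a minimal sketch of such a check. `check_label_lines` is a hypothetical helper, and the sample label text is made up.

```python
def check_label_lines(text, num_classes):
    """Return a list of problems found in YOLO-style label text (empty if none)."""
    problems = []
    for n, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # ignore blank lines
        parts = line.split()
        if len(parts) != 5:
            problems.append("line %d: expected 5 fields, got %d" % (n, len(parts)))
            continue
        try:
            cls_id = int(parts[0])
            values = [float(p) for p in parts[1:]]
        except ValueError:
            problems.append("line %d: non-numeric field" % n)
            continue
        if not 0 <= cls_id < num_classes:
            problems.append("line %d: class id %d out of range" % (n, cls_id))
        if any(v < 0.0 or v > 1.0 for v in values):
            problems.append("line %d: coordinate outside [0, 1]" % n)
    return problems


# Made-up label text for a two-class dataset
good = "0 0.35 0.39375 0.546875 0.458333\n1 0.69 0.425 0.078 0.354\n"
bad = "2 0.5 0.5 0.2 0.2\n0 1.4 0.5 0.2 0.2\n"

good_problems = check_label_lines(good, num_classes=2)
bad_problems = check_label_lines(bad, num_classes=2)
print(good_problems)
print(bad_problems)
```

In practice the text would come from reading each label file that sits next to an image listed in tmp/train.txt or tmp/val.txt.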

III. Start training

1. Set the dataset paths (in the data/coco.data file):

[name]

model_name=coco

[train-configure]

epochs=40

steps=25,35

batch_size=512

subdivisions=1

learning_rate=0.001

[model-configure]

pre_weights=

classes=2

width=352

height=352

anchor_num=3

anchors=12.64,19.39, 37.88,51.48, 55.71,138.31, 126.91,78.23, 131.57,214.55, 279.92,258.87

[data-configure]

train=tmp/train.txt

val=tmp/val.txt

names=./data/coco.names
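Because the file above follows an INI-like layout, its values can be inspected with Python's standard `configparser`. This is only a sketch for checking the settings by hand; it is not the loader the repository uses, and the embedded text is a copy of the configuration shown above.

```python
import configparser

# Copy of the configuration shown above
DATA_TEXT = """
[name]
model_name=coco
[train-configure]
epochs=40
steps=25,35
batch_size=512
subdivisions=1
learning_rate=0.001
[model-configure]
pre_weights=
classes=2
width=352
height=352
anchor_num=3
anchors=12.64,19.39, 37.88,51.48, 55.71,138.31, 126.91,78.23, 131.57,214.55, 279.92,258.87
[data-configure]
train=tmp/train.txt
val=tmp/val.txt
names=./data/coco.names
"""

parser = configparser.ConfigParser()
parser.read_string(DATA_TEXT)

train_cfg = parser["train-configure"]
model_cfg = parser["model-configure"]

# The anchors are a flat list of width,height pairs
values = [float(v) for v in model_cfg["anchors"].split(",")]
anchors = list(zip(values[0::2], values[1::2]))

print("classes:", model_cfg.getint("classes"))
print("input size:", model_cfg.getint("width"), "x", model_cfg.getint("height"))
print("batch size:", train_cfg.getint("batch_size"))
print("anchor pairs:", anchors)
```

For a custom dataset, `classes` should match the length of the `classes` list in the conversion script (2 here, for "bus" and "person"), and `train` and `val` should point at the generated path lists.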

2. Training-related commands

python3 genanchors.py --traintxt ./tmp/train.txt # compute anchors suited to the dataset, then replace the anchors value in coco.data with the output

python3 train.py --data data/coco.data # start training

python3 test.py --data data/coco.data --weights weights/coco-40-epoch-0.994063ap-model.pth --img ./image # test the trained model

python3 pytorch2onnx.py --data data/coco.data --weights weights/coco-40-epoch-0.994063ap-model.pth --output yolo-fastestv2.onnx # convert the model to ONNX

python3 -m onnxsim yolo-fastestv2.onnx yolo-fastestv2-sim3.onnx # simplify the ONNX model; it can then be converted to ncnn via "https://convertmodel.com/#outputFormat=ncnn" and deployed on Android
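To give some intuition for the anchor step in the commands above: dataset-specific anchors are commonly estimated by running k-means over the widths and heights of the labelled boxes, using IoU as the similarity measure. The sketch below illustrates that general idea in pure Python. It is not the code of genanchors.py, and the box sizes are toy values made up for the example.

```python
import random


def iou_wh(box, anchor):
    """IoU of two (width, height) boxes that share the same top-left corner."""
    inter = min(box[0], anchor[0]) * min(box[1], anchor[1])
    union = box[0] * box[1] + anchor[0] * anchor[1] - inter
    return inter / union


def kmeans_anchors(boxes, k, iterations=50, seed=0):
    """Cluster (width, height) pairs into k anchors, sorted by area."""
    rng = random.Random(seed)
    anchors = rng.sample(boxes, k)  # start from k randomly chosen boxes
    for _ in range(iterations):
        # Assign every box to the anchor it overlaps most
        clusters = [[] for _ in range(k)]
        for box in boxes:
            best = max(range(k), key=lambda i: iou_wh(box, anchors[i]))
            clusters[best].append(box)
        # Move every anchor to the mean size of its cluster
        new_anchors = []
        for i, members in enumerate(clusters):
            if members:
                w = sum(m[0] for m in members) / len(members)
                h = sum(m[1] for m in members) / len(members)
                new_anchors.append((w, h))
            else:
                new_anchors.append(anchors[i])  # keep the anchor of an empty cluster
        if new_anchors == anchors:
            break  # converged
        anchors = new_anchors
    return sorted(anchors, key=lambda a: a[0] * a[1])


# Toy box sizes in pixels: three small objects and three large ones
sample_boxes = [(10, 20), (12, 22), (11, 19), (100, 200), (110, 190), (105, 210)]
anchors = kmeans_anchors(sample_boxes, k=2)
print(anchors)
```

The configuration shown earlier lists six width,height pairs, so the same idea would be run with `k=6` on the box sizes of the real dataset.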

---------To be updated--------------