[PyTorch Notes] Part 8: Linear Layers and Other Layers

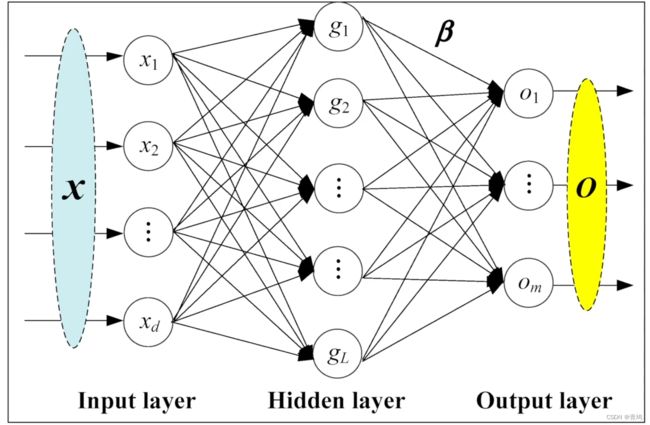

1. Neural networks

① What a neural network learns during training is the coefficients of its functions: the weights $K_k$ and the offset $d$.
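As a minimal sketch of what these coefficients look like in PyTorch: `nn.Linear` computes `y = x @ W.T + b`, and its `weight` and `bias` are the trainable parameters. Reading `weight` as $K_k$ and `bias` as $d$ is my interpretation of the note above, and the sizes 4 and 2 are arbitrary values chosen for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Illustrative sizes only: 4 input features, 2 output features.
layer = nn.Linear(in_features=4, out_features=2)

# The trainable parameters are a weight matrix and a bias vector.
param_shapes = {name: tuple(p.shape) for name, p in layer.named_parameters()}
print(param_shapes)

# The layer output equals the hand-written affine map x @ W.T + b.
x = torch.randn(3, 4)
manual = x @ layer.weight.T + layer.bias
matches = torch.allclose(layer(x), manual, atol=1e-6)
print(matches)
```

Training adjusts exactly these two tensors; nothing else in the layer changes.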

2. Flattening

    import torch
    import torchvision
    from torch.utils.data import DataLoader

    # CIFAR-10 test split, with each image converted to a tensor
    dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)
    dataloader = DataLoader(dataset, batch_size=64)

    for data in dataloader:
        imgs, targets = data
        print(imgs.shape)
        # Flatten the whole batch into a single row; -1 lets PyTorch infer the length
        output = torch.reshape(imgs, (1, 1, 1, -1))
        print(output.shape)

Output (truncated):

Files already downloaded and verified

torch.Size([64, 3, 32, 32])

torch.Size([1, 1, 1, 196608])

...

torch.Size([64, 3, 32, 32])

torch.Size([1, 1, 1, 196608])

torch.Size([16, 3, 32, 32])

torch.Size([1, 1, 1, 49152])
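The numbers in this output can be checked by hand: 64 × 3 × 32 × 32 = 196608, and the CIFAR-10 test split has 10000 images, so the final batch holds 10000 mod 64 = 16 images, giving 16 × 3 × 32 × 32 = 49152. The sketch below reproduces the shapes with a random tensor standing in for a real batch (an assumption, so no download is needed). It also shows `torch.flatten(imgs, start_dim=1)`, which is not used in these notes but keeps the batch dimension and flattens each image separately.

```python
import torch

# Random stand-in for one CIFAR-10 batch; same shape as in the notes.
imgs = torch.rand(64, 3, 32, 32)

# The notes' approach: the whole batch becomes one long row.
whole_batch = torch.reshape(imgs, (1, 1, 1, -1))

# Alternative (not from the notes): flatten each image on its own.
per_image = torch.flatten(imgs, start_dim=1)

print(whole_batch.shape)  # torch.Size([1, 1, 1, 196608])
print(per_image.shape)    # torch.Size([64, 3072])

# Size of the final, incomplete batch of the 10000-image test split.
last_batch_size = 10000 % 64
print(last_batch_size, last_batch_size * 3 * 32 * 32)  # 16 49152
```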

3. Linear layer

    import torch
    import torchvision
    from torch import nn
    from torch.nn import Linear
    from torch.utils.data import DataLoader
    from torch.utils.tensorboard import SummaryWriter

    dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)
    # drop_last=True discards the final batch of 16 images, so every batch flattens to 196608 values
    dataloader = DataLoader(dataset, batch_size=64, drop_last=True)

    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            # 196608 = 64 * 3 * 32 * 32 input features, 10 output features
            self.linear1 = Linear(196608, 10)

        def forward(self, input):
            output = self.linear1(input)
            return output

    tudui = Tudui()
    writer = SummaryWriter("logs")
    step = 0

    for data in dataloader:
        imgs, targets = data
        print(imgs.shape)
        writer.add_images("input", imgs, step)
        output = torch.reshape(imgs, (1, 1, 1, -1))  # Method 1: flatten with reshape
        print(output.shape)
        output = tudui(output)
        print(output.shape)
        writer.add_images("output", output, step)
        step = step + 1

    # Flush pending events to disk
    writer.close()

Output (truncated):

Files already downloaded and verified

torch.Size([64, 3, 32, 32])

torch.Size([1, 1, 1, 196608])

torch.Size([1, 1, 1, 10])

...

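In the Anaconda prompt, activate the py3.6.3 environment and run the command tensorboard --logdir=C:\Users\qj\CV\logs. Copy the URL it prints into the browser's address bar and press Enter to view the logs in TensorBoard.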
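Coming back to the linear layer itself: the model in this section flattens an entire batch into one vector, so it produces a single 10-value output per batch and only works when every batch has exactly 64 images (hence `drop_last=True`). A common alternative, sketched below and not taken from these notes, flattens each image separately with `nn.Flatten` and uses 3 × 32 × 32 = 3072 input features. The class name `PerImageLinear` is hypothetical, and a random tensor stands in for real data.

```python
import torch
from torch import nn


class PerImageLinear(nn.Module):  # hypothetical name, for illustration only
    def __init__(self):
        super().__init__()
        self.flatten = nn.Flatten()                # (N, 3, 32, 32) -> (N, 3072)
        self.linear1 = nn.Linear(3 * 32 * 32, 10)  # one 10-value output per image

    def forward(self, x):
        return self.linear1(self.flatten(x))


model = PerImageLinear()

full_batch = torch.rand(64, 3, 32, 32)  # stand-in for a full batch
last_batch = torch.rand(16, 3, 32, 32)  # stand-in for the final, smaller batch

out_full = model(full_batch)
out_last = model(last_batch)
print(out_full.shape)  # torch.Size([64, 10])
print(out_last.shape)  # torch.Size([16, 10])
```

Because the batch dimension is preserved, this variant handles the final batch of 16 without needing `drop_last=True`.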

4. Custom layer

    import torch
    import torchvision
    from torch import nn
    from torch.nn import Linear
    from torch.utils.data import DataLoader
    from torch.utils.tensorboard import SummaryWriter

    dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)
    dataloader = DataLoader(dataset, batch_size=64, drop_last=True)

    class MyModule(nn.Module):
        def __init__(self):
            # Fixed: the original called super(Tudui, self), which names the wrong class
            super().__init__()
            self.linear1 = Linear(196608, 10)

        def forward(self, input):
            output = self.linear1(input)
            return output

    myModule = MyModule()
    writer = SummaryWriter("logs")
    step = 0

    for data in dataloader:
        imgs, targets = data
        print(imgs.shape)
        writer.add_images("input", imgs, step)
        output = torch.flatten(imgs)  # Method 2: flatten into a 1-D tensor
        print(output.shape)
        output = myModule(output)
        print(output.shape)
        step = step + 1

    # Flush pending events to disk
    writer.close()

Output (truncated):

Files already downloaded and verified

torch.Size([64, 3, 32, 32])

torch.Size([196608])

torch.Size([10])

...
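The two flattening methods differ only in the shape they hand to the linear layer: `nn.Linear` acts on the last dimension and leaves any leading dimensions alone, which is why method 1 gives `[1, 1, 1, 10]` and method 2 gives `[10]`. The sketch below checks this on a deliberately small stand-in (48 features instead of 196608, an assumption made to keep it cheap to run) and confirms that both paths produce the same ten numbers.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Small stand-in sizes: a "batch" of 4 tiny images, 4 * 3 * 2 * 2 = 48 values.
imgs = torch.rand(4, 3, 2, 2)
linear = nn.Linear(48, 10)

# Method 1: reshape to (1, 1, 1, 48); the layer maps the last dim to 10.
out_reshape = linear(torch.reshape(imgs, (1, 1, 1, -1)))

# Method 2: flatten to (48,); the layer returns a 1-D tensor of 10 values.
out_flatten = linear(torch.flatten(imgs))

print(out_reshape.shape)  # torch.Size([1, 1, 1, 10])
print(out_flatten.shape)  # torch.Size([10])

# Same values either way; only the leading dimensions differ.
same_values = torch.allclose(out_reshape.reshape(-1), out_flatten, atol=1e-6)
print(same_values)
```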