[Reinforcement Learning] Automated Learning of Atari Games (For Reference Only)

Problem description:

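The code in Chapter 8 of the reference book, "Atari Games with Deep Q Network", no longer runs as printed because the libraries have changed too much across versions, so I modified the source code directly to get the program to run. My ability is limited, though, so the code in this post does nothing more than run.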

Code:

'''Building an agent to play Atari games'''

import numpy as np

import gym

import tensorflow as tf

from tensorflow.keras.layers import LSTM

from collections import deque,Counter

from tensorflow import keras

# Define the preprocess_observation function, which preprocesses the input
# game screen: it shrinks the image and converts it to greyscale.

color = np.array([210,164,74]).mean()

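def preprocess_observation(obs):
    # Crop and downsample the image: every second row of rows 1..175 and
    # every second column, which gives 88 x 80 pixels
    img = obs[1:176:2, ::2]

    # Convert the image to greyscale
    img = img.mean(axis=2)

    # Next we normalize the image from -1 to +1
    # (subtracting a further 1 here would shift the range to about -2..0)
    img = (img - 128) / 128

    return img.reshape(88,80,1)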
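As a quick sanity check of the cropping and normalization above, the following sketch applies the same slicing to a synthetic frame. The 210 x 160 x 3 frame shape and the random pixel values are assumptions standing in for a real observation from `env.reset()`, and `preprocess_frame` is a hypothetical name used only here. Note that `(img - 128) / 128` on its own already maps pixel values 0..255 to roughly -1..+1; subtracting a further 1 would shift the range to about -2..0.

```python
import numpy as np

def preprocess_frame(obs):
    """Crop, downsample, greyscale and rescale one RGB frame (sketch)."""
    img = obs[1:176:2, ::2]          # rows 1, 3, ..., 175 and every 2nd column
    img = img.mean(axis=2)           # average the colour channels -> greyscale
    img = (img - 128) / 128          # 0..255 -> about -1..+1
    return img.reshape(88, 80, 1)

# Synthetic stand-in for an Atari frame (assumed shape: 210 x 160 x 3, uint8)
rng = np.random.default_rng(0)
frame = rng.integers(0, 256, size=(210, 160, 3), dtype=np.uint8)

state = preprocess_frame(frame)
print(state.shape, state.min() >= -1.0, state.max() < 1.0)
```

The reshape to `(88, 80, 1)` only works because the slice keeps exactly 88 rows and 80 columns, so a frame of a different size would need different crop bounds.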


env = gym.make("MsPacman-v0")

n_outputs = env.action_space.n

# env.render()

# print(n_outputs)

#define a q_network function for building our Q network. The input to our Q network will be the game state X.

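# The network is built in TF1-style graph mode, so eager execution is turned
# off once, before any graph or tensor is created.
tf.compat.v1.disable_eager_execution()

tf.compat.v1.reset_default_graph()

def q_network(X,name_scope):
    # X is the batch of game states with shape (None, 88, 80, 1), for example
    # X = tf.compat.v1.placeholder(tf.float32, shape=(None, 88, 80, 1))

    # Initialize layers
    initializer = tf.keras.initializers.VarianceScaling()

    with tf.compat.v1.variable_scope(name_scope) as scope:
        # NOTE: these layers are only constructed; they are never applied to X,
        # so they create no variables and compute nothing.
        layer_1 = LSTM(units=10, kernel_initializer=initializer)
        flat = tf.keras.layers.Flatten()
        fc = tf.keras.layers.Dense(units=10, kernel_initializer=initializer)

        # Trainable variables created in this scope, keyed by their name
        # relative to the scope (empty here, since no layer is ever built)
        vars = {v.name[len(scope.name):]: v for v in tf.compat.v1.get_collection(key=tf.compat.v1.GraphKeys.TRAINABLE_VARIABLES, scope=scope.name)}

        # Placeholder constant standing in for the Q-values; a working network
        # would return one Q-value per action (n_outputs values) here.
        output = 32.0

    return vars, output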
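For comparison, a Q-network is expected to return one Q-value per action for each input state rather than a single constant. The sketch below shows that input/output contract with a tiny fully connected network written in plain NumPy. It is not the architecture from the book: the hidden size of 10 mirrors the `units = 10` used above, while `n_actions = 9`, the initialization scale and the names `init_dense` and `q_values` are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_dense(n_in, n_out):
    """Variance-scaling style init: zero-mean weights with std = sqrt(1 / n_in)."""
    w = rng.normal(0.0, np.sqrt(1.0 / n_in), size=(n_in, n_out))
    b = np.zeros(n_out)
    return w, b

n_actions = 9                      # assumed number of discrete actions
state_shape = (88, 80, 1)          # shape produced by preprocess_observation
n_inputs = int(np.prod(state_shape))

params = {
    "hidden": init_dense(n_inputs, 10),
    "output": init_dense(10, n_actions),
}

def q_values(states, params):
    """Map a batch of states (N, 88, 80, 1) to Q-values of shape (N, n_actions)."""
    x = states.reshape(len(states), -1)        # flatten each state
    w, b = params["hidden"]
    h = np.maximum(x @ w + b, 0.0)             # ReLU hidden layer
    w, b = params["output"]
    return h @ w + b                           # linear output, one value per action

batch = rng.uniform(-1.0, 1.0, size=(4, *state_shape))
q = q_values(batch, params)
greedy_actions = q.argmax(axis=1)              # action with the highest Q-value
print(q.shape, greedy_actions)
```

The greedy action for each state is simply the index of its largest Q-value, which is the value an epsilon-greedy policy would receive as its `action` argument.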

#define an epsilon_greedy function

epsilon = 0.5

eps_min = 0.005

eps_max = 1.0

eps_decay_steps = 500000
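The parameters above describe a linear decay of epsilon from `eps_max` to `eps_min` over `eps_decay_steps` steps. A minimal sketch of that schedule and of epsilon-greedy action selection follows; `n_actions = 9`, the seeded generator and the helper names `epsilon_at` and `choose_action` are assumptions for illustration.

```python
import numpy as np

eps_min = 0.005
eps_max = 1.0
eps_decay_steps = 500000
n_actions = 9                      # assumed number of discrete actions

rng = np.random.default_rng(0)

def epsilon_at(step):
    """Linearly decayed epsilon, clipped at eps_min once the decay has finished."""
    return max(eps_min, eps_max - (eps_max - eps_min) * step / eps_decay_steps)

def choose_action(greedy_action, step):
    """Return a random action with probability epsilon, else the greedy action."""
    if rng.random() < epsilon_at(step):
        return int(rng.integers(n_actions))
    return greedy_action

for step in (0, 250000, 500000, 1000000):
    print(step, epsilon_at(step))
```

Early in training the agent therefore acts almost entirely at random, and after `eps_decay_steps` steps it follows the network's greedy choice nearly all of the time.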

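def epsilon_greedy(action,step):
    # Linearly decay epsilon from eps_max to eps_min over eps_decay_steps steps
    epsilon = max(eps_min,eps_max - (eps_max -eps_min) * step / eps_decay_steps)
    # Explore with probability epsilon, otherwise keep the greedy action
    if np.random.rand() < epsilon:
        return np.random.randint(n_outputs)
    else:
        return action

# [Gap: everything between the "<" of the comparison above and the ">" of the
# comparison below was lost when the page was extracted. The lost part
# includes the definition of sample_memories and the start of the training
# loop.]

        # Only the tail of this condition survives; comparing global_step is
        # assumed from the matching test in the weight-copy step further down.
        if global_step > start_steps: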

            # sample experience
            o_obs, o_act, o_next_obs, o_rew, o_done = sample_memories(batch_size)

            # states
            o_obs = [x for x in o_obs]

            # next states
            o_next_obs = [x for x in o_next_obs]

            # next actions
            # (the original evaluated the main network on the next states;
            # here the output object is used directly)
            #next_act = mainQ_outputs.eval(feed_dict={X:o_next_obs, in_training_mode:False})
            next_act = mainQ_outputs

            # reward
            # (the original Q-learning target is kept commented out; the lines
            # below replace it with constants so that the script runs, which
            # means the target no longer depends on the sampled experience)
            #y_batch = o_rew + discount_factor * np.max(next_act, axis=-1) * (1-o_done)
            o_rew = o_rew.astype(int)
            o_done = o_done.astype(int)
            o_rew = 0
            o_done = 0
            # int() truncates a fractional discount factor to 0
            discount_factor = int(discount_factor)
            next_act = 32.0
            y_batch = (discount_factor * next_act)

            # merge all summaries and write to the file
            #mrg_summary = merge_summary.eval(feed_dict={X:o_obs, y:np.expand_dims(y_batch, axis=-1), X_action:o_act, in_training_mode:False})
            mrg_summary = merge_summary
            #file_writer.add_summary(mrg_summary, global_step)

            # now we train the network and calculate loss
            # (disabled here, so no weights are updated)
            #train_loss, _ = sess.run([loss, training_op], feed_dict={X:o_obs, y:np.expand_dims(y_batch, axis=-1), X_action:o_act, in_training_mode:True})
            #episodic_loss.append(train_loss)

        # after some interval we copy our main Q network weights to target Q network
        if (global_step+1) % copy_steps == 0 and global_step > start_steps:
            copy_target_to_main.run()

        obs = next_obs
        epoch += 1
        global_step += 1
        episodic_reward += reward

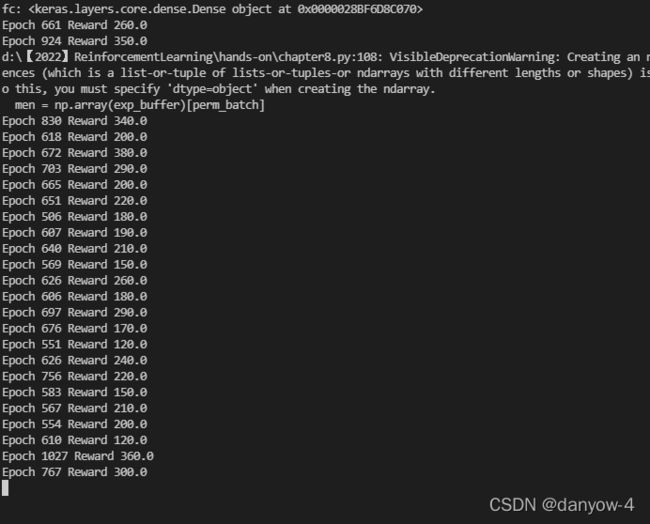

print('Epoch', epoch, 'Reward', episodic_reward,) 实现截图:
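The training step in the listing samples a batch of stored transitions, has a commented-out target of the form `o_rew + discount_factor * np.max(next_act, axis=-1) * (1-o_done)`, and periodically copies weights between the two networks. The sketch below walks through those three pieces with NumPy only. The buffer contents, `discount_factor = 0.9`, the batch size, the 2-action toy problem and the stand-in `fake_q_values` function are all assumptions for illustration; in a real agent the Q-values would come from a trained network.

```python
import random
from collections import deque

import numpy as np

random.seed(0)
discount_factor = 0.9              # assumed value for illustration
n_actions = 2                      # toy action space (assumption)

# Replay buffer of (state, action, next_state, reward, done) tuples
replay = deque(maxlen=1000)
for i in range(20):
    state = np.full(3, float(i))
    next_state = np.full(3, float(i + 1))
    replay.append((state, i % n_actions, next_state, 1.0, i == 19))

def sample_batch(batch_size):
    """Uniformly sample transitions and stack each field into an array."""
    batch = random.sample(list(replay), batch_size)
    states, actions, next_states, rewards, dones = map(np.array, zip(*batch))
    return states, actions, next_states, rewards, dones.astype(float)

def fake_q_values(states):
    """Stand-in for a network: Q(s, a) = mean(s) + a, shape (N, n_actions)."""
    return states.mean(axis=1, keepdims=True) + np.arange(n_actions)

states, actions, next_states, rewards, dones = sample_batch(8)

# Q-learning target: bootstrap from the best next action unless the episode ended
next_q = fake_q_values(next_states)
y_batch = rewards + discount_factor * next_q.max(axis=-1) * (1.0 - dones)

# Periodic sync: give the target network its own copy of the main weights
main_weights = {"w": np.ones((3, n_actions)), "b": np.zeros(n_actions)}
target_weights = {name: value.copy() for name, value in main_weights.items()}

print(y_batch.shape)
```

Multiplying by `(1.0 - dones)` removes the bootstrapped term for terminal transitions, so their target is just the reward. Copying the arrays rather than sharing them keeps the target weights fixed while the main weights continue to change.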

Reference:

Hands-On Reinforcement Learning with Python: Master Reinforcement and Deep Reinforcement Learning Using OpenAI Gym and TensorFlow