Andrew Ng's Machine Learning course: exercise ex2

ex2: Logistic regression

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import scipy.optimize as opt  # optimization routines

from sklearn.metrics import classification_report

from sklearn import linear_model

SciPy optimization routines: scipy.optimize.fmin_tnc() / minimize()

See: https://www.cnblogs.com/tongtong123/p/10634716.html
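As a quick illustration of the `minimize()` interface, here is a minimal sketch that minimizes a toy quadratic with the TNC method. The functions `quadratic` and `quadratic_grad` are hypothetical helpers invented for this example; only `opt.minimize` and its `fun`, `x0`, `method`, and `jac` arguments come from SciPy.

```python
import numpy as np
import scipy.optimize as opt

def quadratic(p):
    # Toy objective (hypothetical): minimum at p = (3, -1)
    return (p[0] - 3.0) ** 2 + (p[1] + 1.0) ** 2

def quadratic_grad(p):
    # Analytic gradient of the toy objective
    return np.array([2.0 * (p[0] - 3.0), 2.0 * (p[1] + 1.0)])

result = opt.minimize(fun=quadratic, x0=np.zeros(2), method='TNC', jac=quadratic_grad)
best_point = result.x  # the optimizer's solution lives in the .x attribute
print(best_point)
```

The same pattern is used later for logistic regression: the cost function goes in `fun`, its gradient in `jac`, and extra data is passed through `args`.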

sklearn.metrics.classification_report

See: https://blog.csdn.net/kancy110/article/details/74937469
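The argument order of `classification_report` matters: scikit-learn's signature is `classification_report(y_true, y_pred)`. The sketch below uses small made-up label lists (not the exercise data) to show that swapping the two arguments interchanges precision and recall.

```python
from sklearn.metrics import classification_report

# Made-up labels for illustration only
y_true = [0, 0, 0, 1, 1]
y_pred = [0, 0, 1, 1, 1]

# Correct order: true labels first, predictions second
report = classification_report(y_true, y_pred, output_dict=True)

# Swapped order: precision and recall trade places
swapped = classification_report(y_pred, y_true, output_dict=True)

print(report['0']['precision'], report['0']['recall'])
print(swapped['0']['precision'], swapped['0']['recall'])
```

With `output_dict=True` the report is returned as a nested dictionary keyed by the label strings, which is convenient for checking individual numbers.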

# Load the raw data
def raw_data(path):
    # Column names are supplied explicitly through names=
    data = pd.read_csv(path, names=['exam1', 'exam2', 'admit'])
    return data

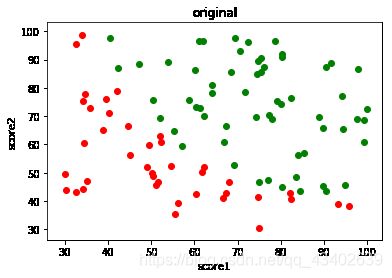

# Plot the raw data
# The two outcomes are drawn in different colors
def draw_data(data):
    accept = data[data.admit == 1]
    refuse = data[data.admit == 0]
    # Equivalent selection with isin():
    # accept = data[data['admit'].isin([1])]
    # refuse = data[data['admit'].isin([0])]
    plt.scatter(accept.exam1, accept.exam2, c='g', label='admit')
    plt.scatter(refuse.exam1, refuse.exam2, c='r', label='not admit')
    plt.title('admission')
    plt.xlabel('score1')
    plt.ylabel('score2')
    plt.legend()  # display the labels passed to scatter()
    return plt

Using the pandas isin() function and its inverse

https://blog.csdn.net/weixin_44056331/article/details/89461816
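A minimal sketch of `isin()` and its inverse, using a tiny made-up DataFrame with the same column names as the exercise data. pandas has no dedicated "not in" method; the inverse is obtained by negating the boolean mask with `~`.

```python
import pandas as pd

# Made-up rows for illustration; column names follow the exercise
toy = pd.DataFrame({
    'exam1': [30.0, 60.0, 80.0],
    'exam2': [40.0, 85.0, 75.0],
    'admit': [0, 1, 1],
})

accept = toy[toy['admit'].isin([1])]    # rows whose admit value is in the list
refuse = toy[~toy['admit'].isin([1])]   # inverse: rows whose admit value is NOT in the list

print(accept)
print(refuse)
```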

# Sigmoid function
def sigmoid(z):
    return 1 / (1 + np.exp(-z))  # np.exp(-z) is e raised to the power -z
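A short sanity check of the sigmoid, sketched under the assumption that SciPy is available: `scipy.special.expit` is SciPy's built-in logistic sigmoid and can serve as a reference. For very large negative inputs, `np.exp(-z)` may overflow and emit a RuntimeWarning, which `expit` avoids.

```python
import numpy as np
from scipy.special import expit  # SciPy's built-in logistic sigmoid

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

z = np.array([-5.0, 0.0, 5.0])
values = sigmoid(z)

# sigmoid(0) is 0.5, and sigmoid(-z) equals 1 - sigmoid(z)
symmetric = np.allclose(sigmoid(-z), 1 - sigmoid(z))

# The hand-written version agrees with the library implementation
matches_expit = np.allclose(values, expit(z))
print(values, symmetric, matches_expit)
```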

# Cost function (mean cross-entropy over the m samples)
def cost_function(theta, x, y):
    m = x.shape[0]
    h = sigmoid(x.dot(theta))  # predicted probabilities
    j = -(y.dot(np.log(h)) + (1 - y).dot(np.log(1 - h))) / m
    return j
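Two properties of this cost are easy to verify numerically. With theta set to zeros, every prediction is 0.5, so the cost equals ln 2 ≈ 0.6931 regardless of the data. With theta set to ones and inputs on the scale of exam scores, the sigmoid saturates at 1 and log(1 - h) is no longer finite. The sketch below uses synthetic stand-in data, not ex2data1.txt.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def cost_function(theta, x, y):
    m = x.shape[0]
    h = sigmoid(x.dot(theta))
    return -(y.dot(np.log(h)) + (1 - y).dot(np.log(1 - h))) / m

# Synthetic stand-in data (hypothetical): bias column plus two "scores" in [30, 100)
rng = np.random.default_rng(0)
x = np.c_[np.ones(8), rng.uniform(30, 100, size=(8, 2))]
y = np.array([0, 1, 1, 0, 1, 0, 1, 1])

# theta = 0 gives h = 0.5 everywhere, so the cost is ln 2
cost_at_zero = cost_function(np.zeros(3), x, y)

# theta = 1 saturates the sigmoid; silence the expected log(0) warnings
with np.errstate(divide='ignore', invalid='ignore'):
    cost_at_ones = cost_function(np.ones(3), x, y)

print(cost_at_zero, cost_at_ones)
```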

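# Only the partial derivatives are computed here; a scipy.optimize routine finds the optimum later.
# Despite its name, this function returns the gradient and performs no descent steps.
def gradient_descent(theta, x, y):
    m = x.shape[0]
    # Divide by m so the gradient matches cost_function, which averages over the m samples
    return (sigmoid(x.dot(theta)) - y).T.dot(x) / m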
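Before handing an analytic gradient to an optimizer, it can be compared against finite differences with `scipy.optimize.check_grad`. This is a minimal sketch on synthetic data; the function name `gradient` and the data are assumptions made for the example. It also assumes the gradient is averaged over m, so that it is the derivative of a cost that divides by m; a gradient without that factor fails the check.

```python
import numpy as np
import scipy.optimize as opt

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def cost_function(theta, x, y):
    m = x.shape[0]
    h = sigmoid(x.dot(theta))
    return -(y.dot(np.log(h)) + (1 - y).dot(np.log(1 - h))) / m

def gradient(theta, x, y):
    # Gradient of the averaged cost: X^T (h - y) / m
    m = x.shape[0]
    return (sigmoid(x.dot(theta)) - y).dot(x) / m

def unscaled_gradient(theta, x, y):
    # Same expression without the 1/m factor, for comparison
    return (sigmoid(x.dot(theta)) - y).dot(x)

# Synthetic data (hypothetical)
rng = np.random.default_rng(1)
x = np.c_[np.ones(20), rng.normal(size=(20, 2))]
y = rng.integers(0, 2, size=20)
theta0 = np.array([0.1, -0.2, 0.3])

# check_grad returns the norm of (analytic gradient - finite-difference gradient)
grad_error = opt.check_grad(cost_function, gradient, theta0, x, y)
unscaled_error = opt.check_grad(cost_function, unscaled_gradient, theta0, x, y)
print(grad_error, unscaled_error)
```

A small `grad_error` suggests the analytic gradient is consistent with the cost; the much larger `unscaled_error` shows what a missing 1/m looks like.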

# Prediction function
def predict(theta, x):
    h = sigmoid(x.dot(theta))
    # Classify as 1 when the predicted probability is at least 0.5
    return [1 if p >= 0.5 else 0 for p in h]

# Decision boundary
def boundary(theta, data):
    x1 = np.arange(20, 100, 0.01)  # range of x1
    # The boundary is the line theta[0] + theta[1]*x1 + theta[2]*x2 = 0, solved for x2
    x2 = (theta[0] + theta[1] * x1) / -theta[2]
    plt = draw_data(data)
    plt.plot(x1, x2)
    plt.show()
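The boundary formula follows from setting the linear score to zero, which is where the sigmoid equals 0.5. A small numeric check, using made-up parameter values rather than the fitted ones:

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

# Hypothetical parameters, chosen only for illustration
theta = np.array([-25.0, 0.2, 0.2])

x1 = np.array([30.0, 60.0, 90.0])
x2 = (theta[0] + theta[1] * x1) / -theta[2]   # same formula as in boundary()

# Every point on the line has a linear score of 0, hence a probability of 0.5
z = theta[0] + theta[1] * x1 + theta[2] * x2
prob = sigmoid(z)
print(x2, prob)
```

Points above the line get a positive score (predicted 1) when `theta[2]` is positive, and points below get a negative score (predicted 0).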

def main():
    data = raw_data('ex2data1.txt')
    plt = draw_data(data)
    plt.title('original')
    plt.show()
    data.insert(0, 'Ones', 1)
    # Equivalent manual construction of the design matrix:
    # x1 = data['exam1']
    # x2 = data['exam2']
    # x = np.c_[np.ones(x1.shape[0]), x1, x2]
    # Same data preparation steps as in ex1
    cols = data.shape[1]
    x = data.iloc[:, :cols-1]
    y = data.admit  # data['admit'] works as well
    # Do not initialize theta to ones: the exam scores are large, so x.dot(theta) is large,
    # sigmoid saturates at 1, and log(1 - h(x)) becomes log(0), which is undefined
    theta = np.zeros(x.shape[1])
    # print(cost_function(theta, x, y))  # 0.6931471805599453
    # theta = opt.fmin_tnc(func=cost_function, x0=theta, fprime=gradient_descent, args=(x, y))
    result = opt.minimize(fun=cost_function, x0=theta, args=(x, y), method='TNC', jac=gradient_descent)
    theta = result.x  # x is the array of optimal parameters returned by opt.minimize
    # print(cost_function(theta, x, y))
    # classification_report expects the true labels first, then the predictions
    print(classification_report(y, predict(theta, x)))
    model = linear_model.LogisticRegression()
    model.fit(x, y)
    print(model.score(x, y))
    boundary(theta, data)


main()

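             precision    recall  f1-score   support

          0       0.85      0.87      0.86        39
          1       0.92      0.90      0.91        61

avg / total       0.89      0.89      0.89       100

Note: this report was recorded from the call classification_report(predict(theta,x),y), which passes the predictions first. scikit-learn's signature is classification_report(y_true, y_pred), so in the table above the precision and recall columns are interchanged and the support column counts predicted labels rather than true labels.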

0.91
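The last number is the value returned by `model.score`, which for scikit-learn classifiers is the mean accuracy on the given data. The sketch below checks that against a manual computation on synthetic data (not ex2data1.txt). Note that `LogisticRegression` fits its own intercept and applies L2 regularization by default, so its coefficients will generally differ from an unregularized fit.

```python
import numpy as np
from sklearn import linear_model

# Synthetic, linearly separable stand-in data (hypothetical)
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(int)

model = linear_model.LogisticRegression()
model.fit(X, y)

accuracy = model.score(X, y)                       # mean accuracy reported by scikit-learn
manual_accuracy = np.mean(model.predict(X) == y)   # fraction of correct predictions
print(accuracy, manual_accuracy)
```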

Reference blog:

https://blog.csdn.net/zy1337602899/article/details/84777396