PyTorch fine-tuning code walkthrough

I recently ran the deep-network fine-tuning code from Dr. Wang Jindong's transfer learning handbook (迁移学习简明手册), and these are my notes on it.

Source: open-source GitHub link

The code is organized roughly as follows:

1. Dataset: Office-31 (load data)

2. Model: AlexNet or ResNet-50 (load model)

3. Optimizer setup

4. Model training

5. Model testing

Let's go through it module by module:

1. load data

    # -*- coding: utf-8 -*-
    import os

    import torch
    from torchvision import datasets, transforms


    def load_data(root_path, dir, batch_size, phase):
        transform_dict = {
            'src': transforms.Compose([
                # Crop a random region of the image, then resize the crop to 224x224
                transforms.RandomResizedCrop(224),
                # Flip the PIL image horizontally with the given probability (default 0.5)
                transforms.RandomHorizontalFlip(),
                transforms.ToTensor(),
                # Normalize each channel with the ImageNet mean and std
                transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                     std=[0.229, 0.224, 0.225]),
            ]),
            'tar': transforms.Compose([
                # With an int argument, Resize scales the shorter side to 224
                transforms.Resize(224),
                transforms.ToTensor(),
                transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                     std=[0.229, 0.224, 0.225]),
            ])}
        data = datasets.ImageFolder(root=os.path.join(root_path, dir), transform=transform_dict[phase])
        # A DataLoader combines a dataset with a sampler and can load data
        # with several worker processes.
        # During training it splits the data into mini-batches and yields one
        # batch per iteration until the whole dataset has been consumed.
        data_loader = torch.utils.data.DataLoader(data, batch_size=batch_size, shuffle=True, drop_last=False, num_workers=4)
        return data_loader


    def load_train(root_path, dir, batch_size, phase):
        transform_dict = {
            'src': transforms.Compose([
                transforms.RandomResizedCrop(224),
                transforms.RandomHorizontalFlip(),
                transforms.ToTensor(),
                transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                     std=[0.229, 0.224, 0.225]),
            ]),
            'tar': transforms.Compose([
                transforms.Resize(224),
                transforms.ToTensor(),
                transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                     std=[0.229, 0.224, 0.225]),
            ])}
        data = datasets.ImageFolder(root=os.path.join(root_path, dir), transform=transform_dict[phase])
        # Random 80/20 split into a training subset and a validation subset.
        # Both subsets wrap the same dataset object, so they share its transform.
        train_size = int(0.8 * len(data))
        test_size = len(data) - train_size
        data_train, data_val = torch.utils.data.random_split(data, [train_size, test_size])
        train_loader = torch.utils.data.DataLoader(data_train, batch_size=batch_size, shuffle=True, drop_last=False, num_workers=4)
        val_loader = torch.utils.data.DataLoader(data_val, batch_size=batch_size, shuffle=True, drop_last=False, num_workers=4)
        return train_loader, val_loader

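Analysis:

This code is saved as data_loader.py. It reads the images from disk, splits the source domain into training and validation sets, and applies the preprocessing transforms. This part is fairly boilerplate, so when fine-tuning other deep networks it can usually be copied as is.

torch.utils.data.DataLoader() is the bridge between the dataset and the model: it wraps the dataset and feeds it to the model batch by batch.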
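To make the splitting and batching concrete, here is a minimal sketch on synthetic data. The `TensorDataset` with 10 samples is a hypothetical stand-in for the `ImageFolder` used in data_loader.py, and `num_workers=0` is chosen only to keep this toy example in a single process.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset, random_split

# Hypothetical stand-in for the image folder: 10 samples, 3 features each.
features = torch.arange(30, dtype=torch.float32).reshape(10, 3)
labels = torch.arange(10) % 2
dataset = TensorDataset(features, labels)

# Same 80/20 arithmetic as in load_train.
train_size = int(0.8 * len(dataset))
val_size = len(dataset) - train_size
train_set, val_set = random_split(dataset, [train_size, val_size])

# num_workers=0 keeps loading in the main process for this tiny example.
train_loader = DataLoader(train_set, batch_size=4, shuffle=True,
                          drop_last=False, num_workers=0)

# Each iteration yields one (inputs, labels) mini-batch.
batch_sizes = [inputs.size(0) for inputs, _ in train_loader]
print(len(train_set), len(val_set), batch_sizes)  # 8 2 [4, 4]
```

Which samples end up in each subset depends on the random seed, but the subset sizes and batch sizes do not.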

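2. load model

    # Excerpt from finetune_office31.py; torch, torchvision and args come from the full script.
    def load_model(name='alexnet'):
        if name == 'alexnet':
            # Newer torchvision releases prefer the weights= argument over pretrained=True
            model = torchvision.models.alexnet(pretrained=True)
            n_features = model.classifier[6].in_features
            # The new fully connected layer takes the input size of the pretrained
            # network's last layer and outputs args.n_class classes (31 for Office-31)
            fc = torch.nn.Linear(n_features, args.n_class)
            model.classifier[6] = fc
        elif name == 'resnet':
            model = torchvision.models.resnet50(pretrained=True)
            n_features = model.fc.in_features
            fc = torch.nn.Linear(n_features, args.n_class)
            model.fc = fc
            # Initialize the new layer: small Gaussian weights, constant bias
            model.fc.weight.data.normal_(0, 0.005)
            model.fc.bias.data.fill_(0.1)
        else:
            raise ValueError('Unknown model name: {}'.format(name))
        return model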

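Analysis:

Only two networks are offered here. Once you have picked one, the pretrained weights can be downloaded directly through torchvision. In general you would freeze the first n layers when fine-tuning. Note that the code above does not freeze anything: it only replaces the last fully connected layer with a new one whose input size is the in_features of the original layer and whose output size is the number of classes in your own dataset. The pretrained layers keep training, at a lower learning rate than the new layer (see the optimizer section).

Note that layer names differ slightly from network to network (classifier[6] for AlexNet, fc for ResNet-50), so this code cannot be copied blindly.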
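If you do want to freeze the pretrained layers, one common approach is to set `requires_grad = False` on them before attaching the new head. The sketch below is not part of the original script; the small `nn.Sequential` model is a hypothetical stand-in for a pretrained network, used so the example runs without downloading weights.

```python
import torch.nn as nn

# Hypothetical stand-in for a pretrained network: a backbone plus a final layer.
model = nn.Sequential(
    nn.Linear(8, 16),   # "pretrained" backbone layer
    nn.ReLU(),
    nn.Linear(16, 10),  # original head with 10 outputs
)

# Freeze every existing parameter so that no gradients are computed for it.
for param in model.parameters():
    param.requires_grad = False

# Replace the head; a freshly created layer is trainable by default.
n_features = model[2].in_features
model[2] = nn.Linear(n_features, 31)  # 31 classes, as in Office-31

trainable = [name for name, p in model.named_parameters() if p.requires_grad]
print(trainable)  # ['2.weight', '2.bias']
```

With this setup you would typically hand only the trainable parameters to the optimizer, for example `filter(lambda p: p.requires_grad, model.parameters())`.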

3. Optimizer setup

    # Excerpt from finetune_office31.py; optim, args and the module-level
    # variable model come from the full script.
    def get_optimizer(model_name):
        learning_rate = args.lr
        if model_name == 'alexnet':
            param_group = [
                {'params': model.features.parameters(), 'lr': learning_rate}]
            for i in range(6):
                param_group += [{'params': model.classifier[i].parameters(),
                                 'lr': learning_rate}]
            # The last layer gets a 10x higher learning rate
            param_group += [{'params': model.classifier[6].parameters(),
                             'lr': learning_rate * 10}]
        elif model_name == 'resnet':
            param_group = []
            for k, v in model.named_parameters():
                # k is the parameter name (a string); check whether it contains 'fc'
                if 'fc' not in k:
                    param_group += [{'params': v, 'lr': learning_rate}]
                else:
                    param_group += [{'params': v, 'lr': learning_rate * 10}]
        optimizer = optim.SGD(param_group, momentum=args.momentum)
        return optimizer

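Analysis:

This sets the learning rate for each group of parameters. Note that the new last layer uses a learning rate 10 times that of the pretrained layers, which are not frozen here but simply updated more slowly.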
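The per-layer learning rates rely on the parameter-group feature of `torch.optim`. As a minimal sketch, assuming a hypothetical two-layer model in place of AlexNet or ResNet-50, the groups can be built and inspected like this:

```python
import torch.nn as nn
import torch.optim as optim

# Hypothetical stand-in: model[0] plays the pretrained part, model[2] the new head.
model = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 31))

base_lr = 1e-4
param_groups = [
    {'params': model[0].parameters(), 'lr': base_lr},       # pretrained layers
    {'params': model[2].parameters(), 'lr': base_lr * 10},  # new last layer
]

# Options given to the constructor (momentum here) apply to every group.
optimizer = optim.SGD(param_groups, momentum=0.9)

lrs = [group['lr'] for group in optimizer.param_groups]
print(lrs)
```

Each entry of `optimizer.param_groups` keeps its own `lr`, which is also what the `lr_schedule` function in the full script overwrites when it adjusts the learning rate.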

4.模型训练

def finetune(model, dataloaders, optimizer):

since = time.time()

best_acc = 0

criterion = nn.CrossEntropyLoss()

stop = 0

for epoch in range(1, args.n_epoch + 1):

stop += 1

# You can uncomment this line for scheduling learning rate

# lr_schedule(optimizer, epoch)

#循环

#训练集,测试集,验证集

for phase in ['src', 'val', 'tar']:

if phase == 'src':

model.train()

else:

model.eval()

total_loss, correct = 0, 0

#一批一批算 batch_size

for inputs, labels in dataloaders[phase]:

inputs, labels = inputs.to(DEVICE), labels.to(DEVICE)

# 把梯度置零,也就是把loss关于weight的导数变成0

optimizer.zero_grad()

with torch.set_grad_enabled(phase == 'src'):

outputs = model(inputs)

loss = criterion(outputs, labels)

preds = torch.max(outputs, 1)[1]

if phase == 'src':

loss.backward()

# 更新所有的参数,一旦梯度被如backward()之类的函数计算好后,我们就可以调用这个函数

optimizer.step()

total_loss += loss.item() * inputs.size(0)

correct += torch.sum(preds == labels.data)

#存储一次训练的平均loss和acc

epoch_loss = total_loss / len(dataloaders[phase].dataset)

epoch_acc = correct.double() / len(dataloaders[phase].dataset)

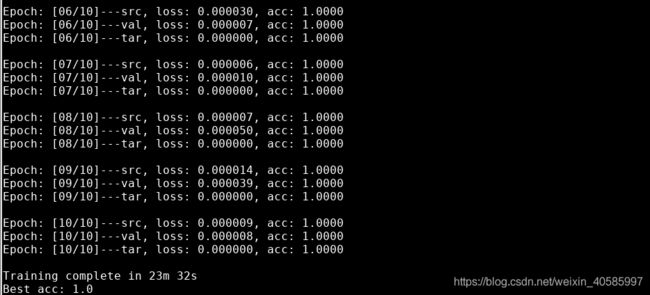

print('Epoch: [{:02d}/{:02d}]---{}, loss: {:.6f}, acc: {:.4f}'.format(epoch, args.n_epoch, phase, epoch_loss,

epoch_acc))

if phase == 'val' and epoch_acc > best_acc:

stop = 0

best_acc = epoch_acc

torch.save(model.state_dict(), 'model.pkl')

#如果20个训练还没有提高准确率,则跳出循环

if stop >= args.early_stop:

break

print()

model.load_state_dict(torch.load('model.pkl'))

#使用训练好的的模型测试

acc_test = test(model, dataloaders['tar'])

time_pass = time.time() - since

print('Training complete in {:.0f}m {:.0f}s'.format(time_pass // 60, time_pass % 60))

return model, acc_test

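Analysis:

The arguments here are model, dataloaders and optimizer, i.e. the results of the three previous parts all come together in this function.

Note that the training phase runs slightly differently from the validation and test phases: the training phase calls loss.backward() and optimizer.step(), while the validation and test phases only run the forward pass to compute the loss and accuracy.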
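The difference between the phases comes down to `model.train()` / `model.eval()` and `torch.set_grad_enabled`. Here is a minimal sketch of that switch, assuming a hypothetical linear model and random inputs in place of the real network and data:

```python
import torch
import torch.nn as nn

model = nn.Linear(4, 3)  # hypothetical stand-in for the fine-tuned network
criterion = nn.CrossEntropyLoss()
inputs = torch.randn(5, 4)
labels = torch.tensor([0, 1, 2, 1, 0])

tracked = {}
for phase in ['src', 'val']:
    if phase == 'src':
        model.train()
    else:
        model.eval()
    # Gradients are tracked only while phase == 'src'.
    with torch.set_grad_enabled(phase == 'src'):
        loss = criterion(model(inputs), labels)
    tracked[phase] = loss.requires_grad
    if phase == 'src':
        loss.backward()  # only possible because the graph was recorded

print(tracked)  # {'src': True, 'val': False}
```

In the `val` phase the loss carries no graph, so calling `backward()` on it would fail; this is why the script guards the backward pass and the optimizer step with `if phase == 'src'`.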

The full code of finetune_office31.py is attached below; use it together with data_loader.py from the first part.

    # -*- coding: utf-8 -*-
    from __future__ import print_function

    import argparse
    import time

    import numpy as np
    import torch
    import torch.nn as nn
    import torch.optim as optim
    import torchvision

    import data_loader

    # Command setting
    parser = argparse.ArgumentParser(description='Finetune')
    parser.add_argument('--model', type=str, default='resnet')
    parser.add_argument('--batchsize', type=int, default=64)
    parser.add_argument('--src', type=str, default='amazon')
    parser.add_argument('--tar', type=str, default='webcam')
    parser.add_argument('--n_class', type=int, default=31)
    parser.add_argument('--lr', type=float, default=1e-4)
    parser.add_argument('--n_epoch', type=int, default=100)
    parser.add_argument('--momentum', type=float, default=0.9)
    # Note: --decay is parsed but never passed to the optimizer in this script
    parser.add_argument('--decay', type=float, default=5e-4)
    parser.add_argument('--data', type=str, default='Your dataset folder')
    parser.add_argument('--early_stop', type=int, default=20)
    args = parser.parse_args()

    # Parameter setting
    # Hard-coded to the third GPU; change to 'cuda:0' or 'cpu' to match your machine
    DEVICE = torch.device('cuda:2')
    BATCH_SIZE = {'src': int(args.batchsize), 'tar': int(args.batchsize)}


    # Select the model
    def load_model(name='alexnet'):
        if name == 'alexnet':
            # Newer torchvision releases prefer the weights= argument over pretrained=True
            model = torchvision.models.alexnet(pretrained=True)
            n_features = model.classifier[6].in_features
            # The new fully connected layer takes the input size of the pretrained
            # network's last layer and outputs args.n_class classes (31 for Office-31)
            fc = torch.nn.Linear(n_features, args.n_class)
            model.classifier[6] = fc
        elif name == 'resnet':
            model = torchvision.models.resnet50(pretrained=True)
            n_features = model.fc.in_features
            fc = torch.nn.Linear(n_features, args.n_class)
            model.fc = fc
            # Initialize the new layer: small Gaussian weights, constant bias
            model.fc.weight.data.normal_(0, 0.005)
            model.fc.bias.data.fill_(0.1)
        else:
            raise ValueError('Unknown model name: {}'.format(name))
        return model


    # Build the optimizer (uses the module-level variable model created in __main__)
    def get_optimizer(model_name):
        learning_rate = args.lr
        if model_name == 'alexnet':
            param_group = [
                {'params': model.features.parameters(), 'lr': learning_rate}]
            for i in range(6):
                param_group += [{'params': model.classifier[i].parameters(),
                                 'lr': learning_rate}]
            # The last layer gets a 10x higher learning rate
            param_group += [{'params': model.classifier[6].parameters(),
                             'lr': learning_rate * 10}]
        elif model_name == 'resnet':
            param_group = []
            for k, v in model.named_parameters():
                # k is the parameter name (a string); check whether it contains 'fc'
                if 'fc' not in k:
                    param_group += [{'params': v, 'lr': learning_rate}]
                else:
                    param_group += [{'params': v, 'lr': learning_rate * 10}]
        optimizer = optim.SGD(param_group, momentum=args.momentum)
        return optimizer


    # Schedule learning rate
    # This part can be ignored on a first run; it is useful for later tuning
    def lr_schedule(optimizer, epoch):
        def lr_decay(LR, n_epoch, e):
            return LR / (1 + 10 * e / n_epoch) ** 0.75

        # Adjust the learning rate of every parameter group;
        # the last group (the new layer) keeps its 10x factor
        for i in range(len(optimizer.param_groups)):
            if i < len(optimizer.param_groups) - 1:
                optimizer.param_groups[i]['lr'] = lr_decay(
                    args.lr, args.n_epoch, epoch)
            else:
                optimizer.param_groups[i]['lr'] = lr_decay(
                    args.lr, args.n_epoch, epoch) * 10


    def test(model, target_test_loader):
        model.eval()
        correct = 0
        criterion = torch.nn.CrossEntropyLoss()
        len_target_dataset = len(target_test_loader.dataset)
        # Not every operation in PyTorch needs a computation graph (the record
        # of the computation that makes gradient back-propagation possible).
        # Operations on tensors that require gradients build one by default;
        # inside `with torch.no_grad():` no graph is built.
        with torch.no_grad():
            for data, target in target_test_loader:
                data, target = data.to(DEVICE), target.to(DEVICE)
                s_output = model(data)
                loss = criterion(s_output, target)
                pred = torch.max(s_output, 1)[1]
                correct += torch.sum(pred == target)
        acc = correct.double() / len(target_test_loader.dataset)
        return acc


    """
    torch.max(input, dim)

    Input:
        input is a tensor; here it is the raw model output (logits), one row per sample
        dim is the dimension to reduce: 0 takes the maximum of each column,
        1 takes the maximum of each row

    Output:
        The function returns two tensors: the first holds the maximum value of
        each row, the second holds the index of that maximum. The index is the
        predicted class, which is why the code keeps only element [1].
    """


    def finetune(model, dataloaders, optimizer):
        since = time.time()
        best_acc = 0
        criterion = nn.CrossEntropyLoss()
        stop = 0
        for epoch in range(1, args.n_epoch + 1):
            stop += 1
            # You can uncomment this line for scheduling learning rate
            # lr_schedule(optimizer, epoch)
            # Loop over the three phases: source training set ('src'),
            # source validation set ('val') and target test set ('tar')
            for phase in ['src', 'val', 'tar']:
                if phase == 'src':
                    model.train()
                else:
                    model.eval()
                total_loss, correct = 0, 0
                # Process the data one mini-batch at a time
                for inputs, labels in dataloaders[phase]:
                    inputs, labels = inputs.to(DEVICE), labels.to(DEVICE)
                    # Reset the gradients accumulated from the previous step
                    optimizer.zero_grad()
                    # Only track gradients in the training phase
                    with torch.set_grad_enabled(phase == 'src'):
                        outputs = model(inputs)
                        loss = criterion(outputs, labels)
                    preds = torch.max(outputs, 1)[1]
                    if phase == 'src':
                        loss.backward()
                        # Update the parameters using the gradients computed by backward()
                        optimizer.step()
                    total_loss += loss.item() * inputs.size(0)
                    correct += torch.sum(preds == labels.data)
                # Average loss and accuracy over this phase for the current epoch
                epoch_loss = total_loss / len(dataloaders[phase].dataset)
                epoch_acc = correct.double() / len(dataloaders[phase].dataset)
                print('Epoch: [{:02d}/{:02d}]---{}, loss: {:.6f}, acc: {:.4f}'.format(epoch, args.n_epoch, phase, epoch_loss,
                                                                                      epoch_acc))
                if phase == 'val' and epoch_acc > best_acc:
                    stop = 0
                    best_acc = epoch_acc
                    torch.save(model.state_dict(), 'model.pkl')
            # Stop early if the validation accuracy has not improved for
            # args.early_stop consecutive epochs (20 by default)
            if stop >= args.early_stop:
                break
            print()
        model.load_state_dict(torch.load('model.pkl'))
        # Test the best saved model on the target domain
        acc_test = test(model, dataloaders['tar'])
        time_pass = time.time() - since
        print('Training complete in {:.0f}m {:.0f}s'.format(time_pass // 60, time_pass % 60))
        return model, acc_test


    if __name__ == '__main__':
        torch.manual_seed(10)
        # Load data
        root_dir = args.data
        domain = {'src': str(args.src), 'tar': str(args.tar)}
        dataloaders = {}
        dataloaders['tar'] = data_loader.load_data(root_dir, domain['tar'], BATCH_SIZE['tar'], 'tar')
        dataloaders['src'], dataloaders['val'] = data_loader.load_train(root_dir, domain['src'], BATCH_SIZE['src'], 'src')
        # Load model
        model_name = str(args.model)
        model = load_model(model_name).to(DEVICE)
        # The original printed the size of the 'val' split next to the target
        # name; the target loader is used here so the count matches its label
        print('Source: {} ({}), target: {} ({}), model: {}'.format(
            domain['src'], len(dataloaders['src'].dataset), domain['tar'], len(dataloaders['tar'].dataset), model_name))
        optimizer = get_optimizer(model_name)
        # finetune returns the best model and its accuracy on the target domain
        model_best, best_acc = finetune(model, dataloaders, optimizer)
        print('Best acc: {}'.format(best_acc))
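The `lr_schedule` function in the listing is commented out by default, so it helps to see what its decay formula does before enabling it. The sketch below re-implements only the inner `lr_decay` formula as a standalone function; the argument names `base_lr` and `epoch` are renamed here for readability, and the values 1e-4 and 100 are the script's default `--lr` and `--n_epoch`.

```python
def lr_decay(base_lr, n_epoch, epoch):
    """Same formula as the inner lr_decay helper in the listing."""
    return base_lr / (1 + 10 * epoch / n_epoch) ** 0.75


base_lr, n_epoch = 1e-4, 100

# Learning rate of the pretrained layers at a few epochs;
# the new last layer would use 10x these values.
schedule = [lr_decay(base_lr, n_epoch, epoch) for epoch in (0, 25, 50, 75, 100)]
for epoch, lr in zip((0, 25, 50, 75, 100), schedule):
    print('epoch {:3d}: lr = {:.3e}'.format(epoch, lr))
```

The rate starts at the base value and shrinks smoothly as training progresses, so later epochs take smaller steps.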
