MNIST Classification with Principal Component Analysis (PCA) and a Multilayer Perceptron (MLP)

"""

Created on Wed Jan 5 09:17:06 2022

@author: wzy

"""

import numpy as np

from sklearn.decomposition import PCA

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

from torchvision import datasets,transforms

from torch.utils.data import Dataset

batch_size=200

learning_rate=0.001

epochs=20

n_features = 10

class MyDataset(Dataset):
    """Wraps the PCA-reduced features and their labels as a Dataset."""

    def __init__(self, datas, labels):
        self.datas = datas
        self.labels = labels

    def __getitem__(self, index):
        img = self.datas[index]
        target = self.labels[index]
        return img, target

    def __len__(self):
        return len(self.datas)

train_dataset = datasets.MNIST('./data', train=True, download=True,
                               transform=transforms.Compose([
                                   transforms.ToTensor(),
                                   transforms.Normalize((0.1,), (0.1,))
                               ]))

test_dataset = datasets.MNIST('./data', train=False, download=True,
                              transform=transforms.Compose([
                                  transforms.ToTensor(),
                                  transforms.Normalize((0.1,), (0.1,))
                              ]))

def pca_mnist(train_dataset, test_dataset, n_features):
    """Reduce flattened MNIST images to n_features PCA components."""
    # Flatten each 28x28 image into a 784-dimensional vector.
    dataset_train = np.array([data[0].flatten().numpy() for data in train_dataset])
    dataset_test = np.array([data[0].flatten().numpy() for data in test_dataset])

    # Fit PCA on the training and test images together, then project both.
    pca = PCA(n_components=n_features)
    dataset_all = np.concatenate((dataset_train, dataset_test), axis=0)
    pca.fit(dataset_all)
    data_all = pca.transform(dataset_all)
    # Cast to float32 so the features match the dtype of the nn.Linear weights.
    data_all = torch.from_numpy(data_all).float()

    # Split the projected data back into training and test parts.
    train_datas = data_all[:len(dataset_train)]
    test_datas = data_all[len(dataset_train):]

    train_labels = np.array([data[1] for data in train_dataset])
    train_labels = torch.tensor(train_labels)
    test_labels = np.array([data[1] for data in test_dataset])
    test_labels = torch.tensor(test_labels)

    return train_datas, train_labels, test_datas, test_labels

train_datas,train_labels,test_datas,test_labels = pca_mnist(train_dataset,test_dataset,n_features)

train_dataset = MyDataset(train_datas,train_labels)

test_dataset = MyDataset(test_datas,test_labels)

train_loader = torch.utils.data.DataLoader(
    train_dataset, batch_size=batch_size, shuffle=True)

test_loader = torch.utils.data.DataLoader(
    test_dataset, batch_size=batch_size, shuffle=False)

class MLP(nn.Module):
    """Three-layer perceptron: n_features -> 200 -> 100 -> 10 class scores."""

    def __init__(self, n_features):
        super(MLP, self).__init__()
        self.flatten = nn.Flatten()
        self.linear1 = nn.Linear(n_features, 200)
        self.linear2 = nn.Linear(200, 100)
        self.linear3 = nn.Linear(100, 10)

    def forward(self, x):
        x = self.flatten(x)
        x = self.linear1(x)
        x = F.relu(x)
        x = self.linear2(x)
        x = F.relu(x)
        # Return raw logits; nn.CrossEntropyLoss applies log-softmax itself.
        x = self.linear3(x)
        return x

net = MLP(n_features)

optimizer = optim.SGD(net.parameters(), lr=learning_rate)

loss_fn = nn.CrossEntropyLoss()

for epoch in range(epochs):
    # Train for one epoch.
    for batch_idx, (data, target) in enumerate(train_loader):
        cal_data = net(data)
        loss = loss_fn(cal_data, target.long())

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        if batch_idx % 25 == 0:
            print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                epoch + 1, batch_idx * len(data), len(train_loader.dataset),
                100. * batch_idx / len(train_loader), loss.item()))

    # Evaluate on the test set after each epoch; no gradients are needed.
    test_loss = 0
    correct = 0
    with torch.no_grad():
        for data, target in test_loader:
            logits = net(data)
            test_loss += loss_fn(logits, target.long()).item()
            pred = logits.argmax(dim=1)
            correct += pred.eq(target).sum().item()

    # loss_fn returns a per-batch mean, so average over the number of batches.
    test_loss /= len(test_loader)

    print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
        test_loss, correct, len(test_loader.dataset),
        100. * correct / len(test_loader.dataset)))
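A side note on the PCA step: `pca_mnist` fits the projection on the training and test images together. A common alternative is to learn the projection from the training images only and then apply it unchanged to the test images, so that no test information enters the features. The sketch below illustrates that idea with plain NumPy and random data standing in for MNIST. The helpers `fit_pca` and `apply_pca` and the array shapes are assumptions made for illustration and are not part of the script above.

```python
import numpy as np


def fit_pca(train, n_components):
    """Return the training mean and the top principal directions."""
    mean = train.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(train - mean, full_matrices=False)
    return mean, vt[:n_components]


def apply_pca(data, mean, components):
    """Project data onto the directions learned from the training set."""
    return (data - mean) @ components.T


rng = np.random.default_rng(0)
# Random stand-ins for flattened images (hypothetical shapes, not MNIST).
train = rng.normal(size=(500, 64)).astype(np.float32)
test = rng.normal(size=(100, 64)).astype(np.float32)

# Learn the projection from the training data only, then reuse it.
mean, components = fit_pca(train, n_components=10)
train_feats = apply_pca(train, mean, components)
test_feats = apply_pca(test, mean, components)
print(train_feats.shape, test_feats.shape)
```

With scikit-learn the same pattern would be `pca.fit(dataset_train)` followed by `pca.transform(...)` on each split separately. Whether this changes the accuracy noticeably here has not been measured.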

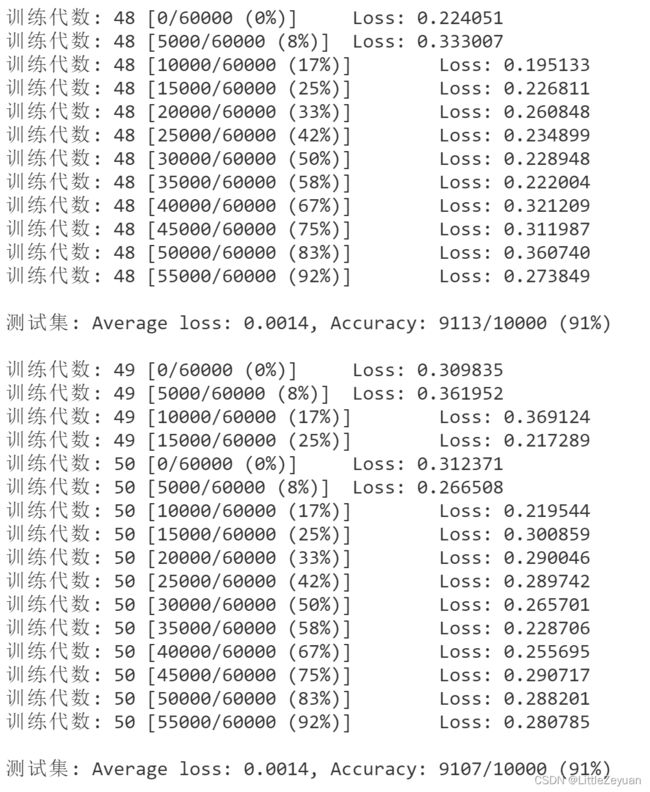

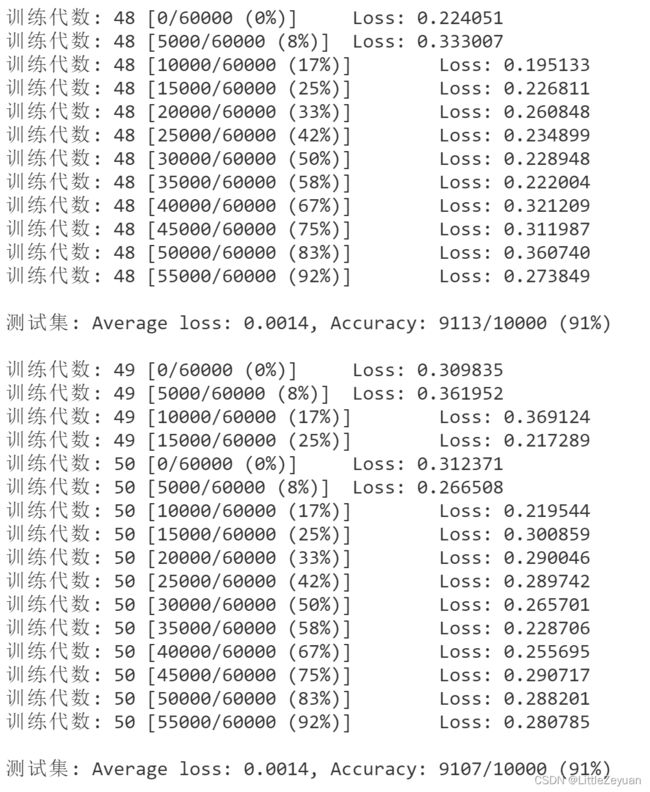

The final run results are shown in the two screenshots above.
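When reading the reported average loss, it helps to remember that `nn.CrossEntropyLoss` averages over the batch by default (`reduction='mean'`). Summing those per-batch values and dividing by the number of batches gives the mean loss per sample, whereas dividing by the number of samples gives a value smaller by a factor of the batch size. The small sketch below shows the difference with made-up per-sample losses. All numbers are hypothetical and only illustrate the arithmetic.

```python
# Hypothetical per-sample losses for 6 samples, split into batches of 2.
per_sample = [0.2, 0.4, 1.0, 0.6, 0.3, 0.5]
batch_size = 2
batches = [per_sample[i:i + batch_size]
           for i in range(0, len(per_sample), batch_size)]

# What a mean-reduced loss function would return for each batch.
batch_means = [sum(b) / len(b) for b in batches]

true_mean = sum(per_sample) / len(per_sample)
# Dividing the summed batch means by the number of batches recovers the mean.
avg_over_batches = sum(batch_means) / len(batch_means)
# Dividing by the number of samples shrinks the result by the batch size.
avg_over_samples = sum(batch_means) / len(per_sample)

print(true_mean, avg_over_batches, avg_over_samples)
```

The two averages agree exactly only when every batch has the same size, which holds for the 10,000 MNIST test images with a batch size of 200.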