数据增强-albumentations与imgaug使用方法

目标检测过程中常遇见目标种类或者数量不足的情况,在此情况下,我们常常使用数据增强的策略去扩充数据,以获取更高的检测精度。

如下介绍两个数据增强的常用库

1、imgaug库

(1)安装

直接安装pypi最新的版本

pip install imgaug

如果遇见Shapely导致的错误,则进行如下操作:

pip install six numpy scipy Pillow matplotlib scikit-image opencv-python imageio

pip install --no-dependencies imgaug

当然你也可以直接使用github仓库的release版本进行安装,如下:

pip install six numpy scipy Pillow matplotlib scikit-image opencv-python imageio

pip install --no-dependencies imgaug

当然,要想使用imgaug里面的augmenters库,需要额外安装imagecorruptions包:

pip install imagecorruptions

(2)使用方法

以目标检测自动变化生成对应的增强图片/bbox/xml为例说明

注意:针对crop或者平移或者空间变换等操作,引起bbox超出原图的,需要使用如下操作,对结果进行变换:

bbs_aug_clip = bbs_aug.remove_out_of_image().clip_out_of_image()

import imageio

import imgaug as ia

import imgaug.augmenters as iaa

from imgaug.augmentables.bbs import BoundingBox, BoundingBoxesOnImage

import os

from xml.dom.minidom import Document

import xml.etree.ElementTree as ET

from tqdm import tqdm

ia.seed(42)

def generate_xml(save_path, img_info):

doc = Document()

DOCUMENT = doc.createElement('annotation')

floder = doc.createElement('folder')

floder_text = doc.createTextNode(img_info["folder"])

floder.appendChild(floder_text)

DOCUMENT.appendChild(floder)

doc.appendChild(DOCUMENT)

filename = doc.createElement('filename')

filename_text = doc.createTextNode(img_info["filename"]) # filename:

filename.appendChild(filename_text)

DOCUMENT.appendChild(filename)

doc.appendChild(DOCUMENT)

path = doc.createElement('path')

path_text = doc.createTextNode(img_info["img_path"]) # path:

path.appendChild(path_text)

DOCUMENT.appendChild(path)

doc.appendChild(DOCUMENT)

source = doc.createElement('source')

database = doc.createElement('database')

database_text = doc.createTextNode('Unknow')

database.appendChild(database_text)

source.appendChild(database)

DOCUMENT.appendChild(source)

doc.appendChild(DOCUMENT)

size = doc.createElement('size')

width = doc.createElement('width')

width_text = doc.createTextNode(str(img_info["img_shape"][1]))

width.appendChild(width_text)

size.appendChild(width)

height = doc.createElement('height')

height_text = doc.createTextNode(str(img_info["img_shape"][0]))

height.appendChild(height_text)

size.appendChild(height)

depth = doc.createElement('depth')

depth_text = doc.createTextNode(str(img_info["img_shape"][2]))

depth.appendChild(depth_text)

size.appendChild(depth)

DOCUMENT.appendChild(size)

segmented = doc.createElement('segmented')

segmented_text = doc.createTextNode('0')

segmented.appendChild(segmented_text)

DOCUMENT.appendChild(segmented)

doc.appendChild(DOCUMENT)

objectes = img_info["object"]

if len(objectes) == 0:

return

for obj in objectes:

_object = doc.createElement('object')

name = doc.createElement('name')

name_text = doc.createTextNode(obj["category"])

name.appendChild(name_text)

_object.appendChild(name)

pose = doc.createElement('pose')

pose_text = doc.createTextNode('Unspecified')

pose.appendChild(pose_text)

_object.appendChild(pose)

truncated = doc.createElement('truncated')

truncated_text = doc.createTextNode('0')

truncated.appendChild(truncated_text)

_object.appendChild(truncated)

truncated = doc.createElement('difficult')

truncated_text = doc.createTextNode('0')

truncated.appendChild(truncated_text)

_object.appendChild(truncated)

bndbox = doc.createElement('bndbox')

xmin = doc.createElement('xmin')

xmin_text = doc.createTextNode(str(int(obj["bbox"][0])))

xmin.appendChild(xmin_text)

bndbox.appendChild(xmin)

ymin = doc.createElement('ymin')

ymin_text = doc.createTextNode(str(int(obj["bbox"][1])))

ymin.appendChild(ymin_text)

bndbox.appendChild(ymin)

xmax = doc.createElement('xmax')

xmax_text = doc.createTextNode(str(int(obj["bbox"][2])))

xmax.appendChild(xmax_text)

bndbox.appendChild(xmax)

ymax = doc.createElement('ymax')

ymax_text = doc.createTextNode(str(int(obj["bbox"][3])))

ymax.appendChild(ymax_text)

bndbox.appendChild(ymax)

_object.appendChild(bndbox)

DOCUMENT.appendChild(_object)

f = open(save_path, 'w')

doc.writexml(f, indent='\t', newl='\n', addindent='\t')

f.close()

def parse_xml(xml_path):

dict_info = {'cat': [], 'bboxes': [], 'box_wh': [], 'whd': []}

tree = ET.parse(xml_path)

root = tree.getroot()

whd = root.find('size')

whd = [int(whd.find('width').text), int(whd.find('height').text), int(whd.find('depth').text)]

for obj in root.findall('object'):

cat = str(obj.find('name').text)

bbox = obj.find('bndbox')

x1, y1, x2, y2 = [int(bbox.find('xmin').text),

int(bbox.find('ymin').text),

int(bbox.find('xmax').text),

int(bbox.find('ymax').text)]

b_w = x2 - x1 + 1

b_h = y2 - y1 + 1

dict_info['cat'].append(cat)

dict_info['bboxes'].append([x1, y1, x2, y2])

dict_info['box_wh'].append([b_w, b_h])

dict_info['whd'].append(whd)

return dict_info

def get_bbox(xml):

box_res = []

tree = ET.parse(xml)

root = tree.getroot()

for obj in root.findall('object'):

name = obj.find('name').text

for box in obj.findall('bndbox'):

xmin = int(box.find('xmin').text)

ymin = int(box.find('ymin').text)

xmax = int(box.find('xmax').text)

ymax = int(box.find('ymax').text)

box_res.append(BoundingBox(x1=xmin, y1=ymin, x2=xmax, y2=ymax, label=name))

return box_res

def get_all_files(img_root):

img_paths = []

img_names = []

for root, _, files in os.walk(img_root):

if files is not None:

for file in files:

if file.endswith('xml'):

img_paths.append(os.path.join(root, file))

img_names.append(file)

return img_paths, img_names

if __name__ == '__main__':

img_root = r'D:\Users\User\Desktop\k\defect\img'

save_path = r'D:\Users\User\Desktop\k\defect\img_aug'

os.makedirs(save_path, exist_ok=True)

xmls, _ = get_all_files(img_root)

for xml_path in tqdm(xmls):

jpg_path = xml_path[:-4] + '.jpg'

filename = os.path.split(jpg_path)[-1]

if os.path.exists(jpg_path):

box = get_bbox(xml_path)

img = imageio.imread(jpg_path)

bbs = BoundingBoxesOnImage(box, img.shape)

image_before = bbs.draw_on_image(img, size=2)

# 图像的transform方式

seq = iaa.Sequential([

iaa.Multiply((1.2, 1.5)),

# iaa.Affine(translate_px={"x": 50, "y": 60}, scale=(0.5, 0.7)),

iaa.AdditiveGaussianNoise(scale=0.1 * 255),

iaa.Rotate(rotate=(-10, 10))

], random_order=True)

image_aug, bbs_aug = seq(image=img, bounding_boxes=bbs)

bbs_aug_clip = bbs_aug.remove_out_of_image().clip_out_of_image() # clip box out of img

if len(bbs_aug_clip) > 0:

parse_info = parse_xml(xml_path)

img_info = {

'img_shape': parse_info['whd'][0],

'folder': os.path.split(xml_path)[0],

'filename': filename,

'img_path': jpg_path,

'object': [{'category': b.label, 'bbox': [b.x1, b.y1, b.x2, b.y2]} for b in

bbs_aug_clip.bounding_boxes]}

generate_xml(os.path.join(save_path, os.path.split(xml_path)[-1]), img_info)

imageio.imwrite(os.path.join(save_path, filename), image_aug)

else:

print('bboxes are out of boundry, {}'.format(jpg_path))

2、albumentations库

(1)安装

直接使用pip安装即可

pip install albumentations

(2)使用方法

直接对图像本身进行增强

import random

import cv2

from matplotlib import pyplot as plt

import albumentations as A

def visualize(image):

plt.figure(figsize=(10, 10))

plt.axis('off')

plt.imshow(image)

if __name__ == '__main__':

image = cv2.imread(

r'D:\personal\model_and_code\augmentation\albumentations_examples-master\images\original_parrot.jpg')

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB) # albu需要的类型为RGB, CV获取的为BGR

visualize(image)

transform = A.Compose([

A.RandomRotate90(),

A.Flip(),

A.Transpose(),

A.OneOf([

A.IAAAdditiveGaussianNoise(),

A.GaussNoise(),

], p=0.2),

A.OneOf([

A.MotionBlur(p=.2),

A.MedianBlur(blur_limit=3, p=0.1),

A.Blur(blur_limit=3, p=0.1),

], p=0.2),

A.ShiftScaleRotate(shift_limit=0.0625, scale_limit=0.2, rotate_limit=45, p=0.2),

A.OneOf([

A.OpticalDistortion(p=0.3),

A.GridDistortion(p=.1),

A.IAAPiecewiseAffine(p=0.3),

], p=0.2),

A.OneOf([

A.CLAHE(clip_limit=2),

A.IAASharpen(),

A.IAAEmboss(),

A.RandomBrightnessContrast(),

], p=0.3),

A.HueSaturationValue(p=0.3),

])

random.seed(7)

augmented_image = transform(image=image)['image']

visualize(augmented_image)

plt.show()

效果如下:

对于目标检测带有bbox的样本,需要注意:

1、bbox类型

常见的有pascal_voc/coco/yolo格式的数据格式:

pascal_voc:[xmin, ymin, xmax,ymax]

coco:[xmin, ymin, width, height]

yolo:[center_x,centen_y,width,height]

albu库均支持,使用变换的时候需要进行进行指定

如下:更改format的类型

import albumentations as A

import cv2

transform = A.Compose([

A.RandomCrop(width=450, height=450),

A.HorizontalFlip(p=0.5),

A.RandomBrightnessContrast(p=0.2),

], bbox_params=A.BboxParams(format='coco', min_area=1024, min_visibility=0.1, label_fields=['class_labels']))

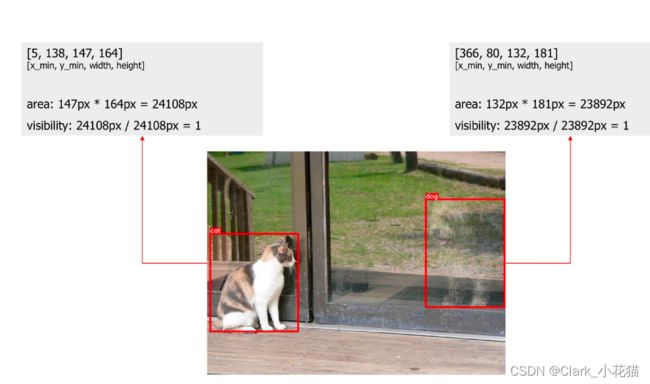

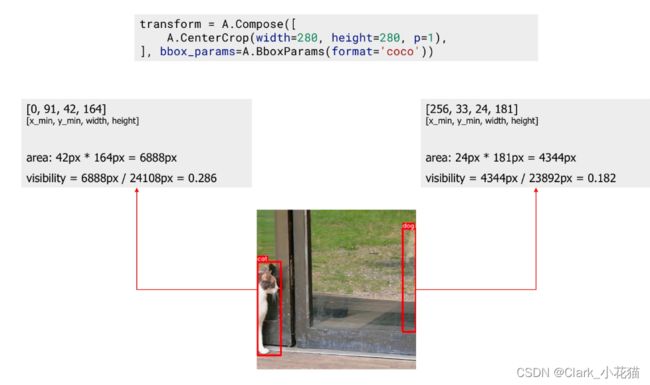

2、min_area与min_visibility

min_area: 该值是一个pixels的数值,表示的是框的长和宽的乘积,如果变换后(框的长和宽的乘积)的数值小于min_area,albu库会直接丢掉该box,返回值中将不存在该box

min_visibility:该值是一个属于0-1之间的数值,表示变换之后的box的面积和变换之前的box的面积的比值,如果该比值低于设定值,则会被丢弃,返回值将不存在该框。

示例图如下:

(3)传递图片和框进行增强并返回

bboxes = [

[23, 74, 295, 388],

[377, 294, 252, 161],

[333, 421, 49, 49],

]

class_labels = ['cat', 'dog', 'parrot']

class_categories = ['animal', 'animal', 'item']

transform = A.Compose([

A.RandomCrop(width=450, height=450),

A.HorizontalFlip(p=0.5),

A.RandomBrightnessContrast(p=0.2),

], bbox_params=A.BboxParams(format='coco', label_fields=['class_labels', 'class_categories'])))

transformed = transform(image=image, bboxes=bboxes, class_labels=class_labels, class_categories=class_categories)

transformed_image = transformed['image']

transformed_bboxes = transformed['bboxes']

transformed_class_labels = transformed['class_labels']

transformed_class_categories = transformed['class_categories']

如果有问题,请批评指正

–END–