Deformable Convolutional Networks v2 可变形卷积v2版翻译

Abstract

The superior performance of Deformable Convolutional Networks arises from its ability to adapt to the geometric variations of objects.

可变形卷积网络的优越性能源于其对目标几何变化的适应能力。

Through an examination of its adaptive behavior, we observe that while the spatial support for its neural features conforms more closely than regular ConvNets to object structure, this support may nevertheless extend well beyond the region of interest, causing features to be influenced by irrelevant image content.

通过对其自适应行为的考察,我们发现,虽然其神经特征的空间支持比常规的ConvNets更贴合对象结构,但这种支持仍可能远远超出感兴趣的区域,导致特征受到不相关图像内容的影响。

To address this problem, we present a reformulation of Deformable ConvNets that improves its ability to focus on pertinent image regions, through increased modeling power and stronger training.

为了解决这个问题,我们对可变形卷积网络进行了重新设计,通过增强建模能力和更强的训练,提高了它聚焦于相关图像区域的能力。

The modeling power is enhanced through a more comprehensive integration of deformable convolution within the network, and by introducing a modulation mechanism that expands the scope of deformation modeling.

通过在网络内部对可变形卷积进行更全面的集成,引入扩展变形建模范围的调制机制,增强了建模能力。

To effectively harness this enriched modeling capability, we guide network training via a proposed feature mimicking scheme that helps the network to learn features that reflect the object focus and classification power of RCNN features.

为了有效地利用这种丰富的建模能力,我们提出了一种特征模仿方案来指导网络训练,帮助网络学习反映RCNN特征的对象焦点和分类能力的特征。

With the proposed contributions, this new version of Deformable ConvNets yields significant performance gains over the original model and produces leading results on the COCO benchmark for object detection and instance segmentation.

借助上述贡献,新版本的可变形卷积网络相比原始模型获得了显著的性能提升,并在COCO目标检测和实例分割基准上取得了领先的结果。

1. Introduction

Geometric variations due to scale, pose, viewpoint and part deformation present a major challenge in object recognition and detection.

由于尺度、姿态、视点和部件形变等因素引起的几何变化是物体识别和检测的主要挑战。

The current state-of-the-art method for addressing this issue is Deformable Convolutional Networks (DCNv1) [8], which introduces two modules that aid CNNs in modeling such variations.

目前解决这一问题的最先进的方法是可变形卷积网络(DCNv1)[8],它引入了两个模块来帮助CNNs对这种变化进行建模。

One of these modules is deformable convolution, in which the grid sampling locations of standard convolution are each offset by displacements learned with respect to the preceding feature maps.

其中一个模块是可变形卷积(deformable convolution),其中标准卷积的网格采样位置通过相对于前面的特征图所学习到的位移来进行偏移

The other is deformable RoIpooling, where offsets are learned for the bin positions in RoIpooling [16].

另一个是可变形的RoIpooling,在这里可以学习RoIpooling[16]中bin位置的偏移量。

The incorporation of these modules into a neural network gives it the ability to adapt its feature representation to the configuration of an object, specifically by deforming its sampling and pooling patterns to fit the object's structure. With this approach, large improvements in object detection accuracy are obtained.

将这些模块合并到神经网络中,使其能够根据对象的配置调整其特征表示,具体而言是通过改变其采样和池化模式来适应对象的结构。利用该方法,目标检测的精度得到了大幅提升。

Towards understanding Deformable ConvNets, the authors visualized the induced changes in receptive field, via the arrangement of offset sampling positions in PASCAL VOC images [11]. It is found that samples for an activation unit tend to cluster around the object on which it lies.

为了理解可变形卷积网络,作者通过在PASCAL VOC图像[11]中排布偏移后的采样位置,可视化了感受野所产生的变化。研究发现,一个激活单元的采样点往往聚集在其所在的对象周围。

However, the coverage over an object is inexact, exhibiting a spread of samples beyond the area of interest.

然而,对物体的覆盖并不精确,部分采样点扩散到了感兴趣区域之外。

In a deeper analysis of spatial support using images from the more challenging COCO dataset [29], we observe that such behavior becomes more pronounced. These findings suggest that greater potential exists for learning deformable convolutions.

在使用来自更具挑战性的COCO数据集[29]的图像进行更深入的空间支持分析时,我们发现这种行为变得更加明显。这些发现表明学习可变形卷积的潜力更大。

In this paper, we present a new version of Deformable ConvNets, called Deformable ConvNets v2 (DCNv2), with enhanced modeling power for learning deformable convolutions.

在本文中,我们提出了新版本的可变形卷积网络,称为Deformable ConvNets v2 (DCNv2),它增强了学习可变形卷积的建模能力。

This increase in modeling capability comes in two complementary forms.

这种建模能力的提升来自两种互补的形式。

The first is the expanded use of deformable convolution layers within the network. Equipping more convolutional layers with offset learning capacity allows DCNv2 to control sampling over a broader range of feature levels.

首先是网络中可变形卷积层的扩展使用。装备更多的具有offset学习能力的卷积层允许DCNv2在更大范围的特征级别上控制采样。

The second is a modulation mechanism in the deformable convolution modules, where each sample not only undergoes a learned offset, but is also modulated by a learned feature amplitude.

第二种是可变形卷积模块中的调制机制,其中每个样本不仅要经过学习偏移量,而且还要经过学习特征幅值的调制。

The network module is thus given the ability to vary both the spatial distribution and the relative influence of its samples.

因此,网络模块能够同时改变其样本的空间分布和相对影响。

To fully exploit the increased modeling capacity of DCNv2, effective training is needed.

为了充分开发DCNv2提高的建模能力,需要进行有效的训练。

Inspired by work on knowledge distillation in neural networks [2, 22], we make use of a teacher network for this purpose, where the teacher provides guidance during training.

受神经网络中知识蒸馏工作的启发[2,22],我们为此引入了一个教师网络,由教师在训练过程中提供指导。

We specifically utilize R-CNN [17] as the teacher. Since it is a network trained for classification on cropped image content, R-CNN learns features unaffected by irrelevant information outside the region of interest.

我们具体采用R-CNN[17]作为教师网络。由于R-CNN是在裁剪后的图像内容上训练的分类网络,它所学到的特征不受感兴趣区域之外无关信息的影响。

To emulate this property, DCNv2 incorporates a feature mimicking loss into its training, which favors learning of features consistent with those of R-CNN. In this way, DCNv2 is given a strong training signal for its enhanced deformable sampling.

为了模拟这一特性,DCNv2在训练中加入了特征模仿损失,促使其学习与R-CNN一致的特征。这样,DCNv2的增强可变形采样就获得了较强的训练信号。

With the proposed changes, the deformable modules remain lightweight and can easily be incorporated into existing network architectures. Specifically, we incorporate DCNv2 into the Faster R-CNN [33] and Mask R-CNN [20] systems, with a variety of backbone networks. Extensive experiments on the COCO benchmark demonstrate the significant improvement of DCNv2 over DCNv1 for object detection and instance segmentation. The code for DCNv2 will be released.

通过建议的更改,可变形模块仍然是轻量级的,并且可以轻松地集成到现有的网络体系结构中。具体来说,我们将DCNv2合并到Faster R-CNN[33]和 Mask R-CNN[20]系统中,使用多种骨干网络。在COCO基准上的大量实验表明,在对象检测和实例分割方面,DCNv2比DCNv1有显著的改进。DCNv2的代码将会发布。

2. Analysis of Deformable ConvNet Behavior

2.1 Spatial Support Visualization

To better understand the behavior of Deformable ConvNets, we visualize the spatial support of network nodes by their effective receptive fields [31], effective sampling locations, and error-bounded saliency regions. These three modalities provide different and complementary perspectives on the underlying image regions that contribute to a node's response.

为了更好地理解可变形卷积网络的行为,我们通过网络节点的有效感受野[31]、有效采样位置和误差有界显著性区域来可视化网络节点的空间支持。这三种模式从不同且互补的角度,揭示了对节点响应有贡献的底层图像区域。

Effective receptive fields: Not all pixels within the receptive field of a network node contribute equally to its response. The differences in these contributions are represented by an effective receptive field, whose values are calculated as the gradient of the node response with respect to intensity perturbations of each image pixel [31].

有效感受野:网络节点感受野内的像素对其响应的贡献并非都是相等的。这些贡献的差异由有效感受野表示,其值计算为节点响应相对于每个图像像素强度扰动的梯度[31]。

We utilize the effective receptive field to examine the relative influence of individual pixels on a network node,but note that this measure does not reflect the structured influence of full image regions.

我们利用有效感受野来检验单个像素对网络节点的相对影响,但需注意,该度量不能反映完整图像区域的结构化影响。
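The effective receptive field definition above can be illustrated with a toy computation. The sketch below is a hypothetical 1-D conv, ReLU, conv network (not the paper's detector) and approximates the gradient of one output node with respect to each input pixel by central finite differences; all function names are illustrative.

```python
# Toy effective receptive field: finite-difference gradient of one output node
# of a hypothetical 1-D conv -> ReLU -> conv network w.r.t. each input pixel.


def conv1d(signal, kernel):
    """'Valid' 1-D correlation: out[i] = sum_j kernel[j] * signal[i + j]."""
    n, k = len(signal), len(kernel)
    return [sum(kernel[j] * signal[i + j] for j in range(k)) for i in range(n - k + 1)]


def relu(values):
    return [max(0.0, v) for v in values]


def node_response(image, kernel1, kernel2, node):
    """Response of output node `node` of the two-layer toy network."""
    hidden = relu(conv1d(image, kernel1))
    return conv1d(hidden, kernel2)[node]


def effective_receptive_field(image, kernel1, kernel2, node, eps=1e-4):
    """Central-difference gradient of the node response w.r.t. every pixel intensity."""
    grads = []
    for i in range(len(image)):
        up, down = list(image), list(image)
        up[i] += eps
        down[i] -= eps
        diff = node_response(up, kernel1, kernel2, node) - node_response(down, kernel1, kernel2, node)
        grads.append(diff / (2 * eps))
    return grads


if __name__ == "__main__":
    erf = effective_receptive_field([1.0] * 9, [1.0] * 3, [1.0] * 3, node=2)
    # The theoretical receptive field is 5 pixels wide, but the contributions are triangular.
    print([round(g, 3) for g in erf])  # → [0.0, 0.0, 1.0, 2.0, 3.0, 2.0, 1.0, 0.0, 0.0]
```

Even with uniform weights, the central pixel contributes three times as much as the border pixels of the theoretical receptive field, which is the non-uniformity the effective receptive field is meant to expose.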

Effective sampling / bin locations: In [8], the sampling locations of (stacked) convolutional layers and the sampling bins in RoIpooling layers are visualized for understanding the behavior of Deformable ConvNets. However, the relative contributions of these sampling locations to the network node are not revealed. We instead visualize effective sampling locations that incorporate this information, computed as the gradient of the network node with respect to the sampling / bin locations, so as to understand their contribution strength.

有效采样/ bin位置:在[8]中,(堆叠)卷积层的采样位置和RoIpooling层的采样bin都做了可视化来理解可变形卷积网络的行为。但是,这些采样位置对网络节点的相对贡献没有被揭示。相反,我们将包含此信息的有效抽样位置可视化,计算为网络节点相对于抽样/ bin位置的梯度,以便了解它们的贡献强度。
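To make "gradient of the network node with respect to the sampling locations" concrete, here is a minimal sketch for a hypothetical single-layer node r = Σ_k w_k · x(p_k), where x(·) is bilinearly interpolated. By the chain rule, the contribution strength of location p_k is |w_k| times the norm of the bilinear gradient at p_k. The node form and the function names are assumptions for illustration only.

```python
import math


def sample_and_grad(feature, y, x):
    """Bilinear sample of 2-D list `feature` at fractional (y, x) and its gradient (dv/dy, dv/dx).

    Corners outside the map read as zero. At exactly integer coordinates the
    bilinear kernel is not differentiable; this sketch returns the right-hand derivative.
    """
    h, w = len(feature), len(feature[0])
    y0, x0 = math.floor(y), math.floor(x)
    dy, dx = y - y0, x - x0

    def f(yy, xx):
        return feature[yy][xx] if 0 <= yy < h and 0 <= xx < w else 0.0

    f00, f01 = f(y0, x0), f(y0, x0 + 1)
    f10, f11 = f(y0 + 1, x0), f(y0 + 1, x0 + 1)
    value = ((1 - dy) * (1 - dx) * f00 + (1 - dy) * dx * f01
             + dy * (1 - dx) * f10 + dy * dx * f11)
    grad_y = -(1 - dx) * f00 - dx * f01 + (1 - dx) * f10 + dx * f11
    grad_x = -(1 - dy) * f00 + (1 - dy) * f01 - dy * f10 + dy * f11
    return value, grad_y, grad_x


def effective_sampling_strength(feature, weights, locations):
    """For the toy node r = sum_k w_k * x(p_k), return |dr/dp_k| for each sampling location."""
    strengths = []
    for w_k, (y, x) in zip(weights, locations):
        _, gy, gx = sample_and_grad(feature, y, x)
        strengths.append(abs(w_k) * math.hypot(gy, gx))
    return strengths


if __name__ == "__main__":
    ramp = [[float(x) for x in range(5)] for _ in range(5)]  # intensity grows along x
    print(effective_sampling_strength(ramp, [2.0, 0.5], [(1.5, 1.5), (2.25, 2.75)]))  # → [2.0, 0.5]
```

Both locations sit on the same intensity ramp, yet their strengths differ by the weight magnitude; on a flat region the strength would be zero regardless of the weight. This is why plotting raw sampling locations alone can mislead.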

Error-bounded saliency regions: The response of a network node will not change if we remove image regions that do not influence it, as demonstrated in recent research on image saliency [41, 44, 13, 7]. Based on this property, we can determine a node's support region as the smallest image region giving the same response as the full image, within a small error bound. We refer to this as the error-bounded saliency region, which can be found by progressively masking parts of the image and computing the resulting node response, as described in more detail in the Appendix. The error-bounded saliency region facilitates comparison of support regions from different networks.

Error-bounded saliency regions:最近关于图像显著性的研究[41,44,13,7]表明,如果去除不影响网络节点的图像区域,该节点的响应不会发生变化。基于此性质,我们可以在一个小的误差范围内,将节点的支持区域确定为与完整图像响应相同的最小图像区域。我们将其称为误差有界显著性区域,它可以通过逐步遮挡图像的部分区域并计算得到的节点响应来找到,详见附录。误差有界显著性区域便于比较不同网络的支持区域。
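The Appendix procedure is not reproduced in this section, so the following is only a simplified sketch of the idea under stated assumptions: the candidate regions are square windows centered on the node, masked pixels are set to zero, and the error bound is relative to the full-image response. The function names and the masking scheme are hypothetical.

```python
def mask_outside(image, cy, cx, radius, fill=0.0):
    """Keep pixels inside the (2*radius+1)-wide square around (cy, cx); set the rest to `fill`."""
    return [[v if abs(y - cy) <= radius and abs(x - cx) <= radius else fill
             for x, v in enumerate(row)]
            for y, row in enumerate(image)]


def error_bounded_radius(image, response_fn, cy, cx, rel_error=0.05):
    """Smallest window radius whose masked response stays within rel_error of the full response."""
    full = response_fn(image)
    max_radius = max(len(image), len(image[0]))  # large enough to cover the whole image
    for radius in range(max_radius + 1):
        masked = response_fn(mask_outside(image, cy, cx, radius))
        if abs(masked - full) <= rel_error * abs(full):
            return radius
    return max_radius


if __name__ == "__main__":
    image = [[1.0] * 9 for _ in range(9)]

    # Hypothetical node that only depends on the 3x3 patch around (4, 4).
    def response(img):
        return sum(img[y][x] for y in range(3, 6) for x in range(3, 6))

    print(error_bounded_radius(image, response, 4, 4))  # → 1
```

Because the search stops at the first window that reproduces the full response, comparing the returned region across two networks indicates which one relies on less surrounding content.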

2.2 Spatial Support of Deformable ConvNets

We analyze the visual support regions of Deformable ConvNets in object detection. The regular ConvNet we employ as a baseline consists of a Faster R-CNN + ResNet-50 [21] object detector with aligned RoIpooling [20]. All the convolutional layers in ResNet-50 are applied on the whole input image. The effective stride in the conv5 stage is reduced from 32 to 16 pixels to increase feature map resolution. The RPN [33] head is added on top of the conv4 features of ResNet-50. On top of the conv5 features we add the Fast R-CNN head [16], which is composed of aligned RoIpooling and two fully-connected (fc) layers, followed by the classification and bounding box regression branches.

我们分析了可变形卷积网络在目标检测中的视觉支持区域。我们使用的常规ConvNet基线是一个采用对齐RoIpooling[20]的Faster R-CNN + ResNet-50[21]目标检测器。ResNet-50中的所有卷积层都作用于整个输入图像。conv5阶段的有效步长从32像素降低到16像素,以提高特征图的分辨率。RPN[33]头添加在ResNet-50的conv4特征之上。在conv5特征之上,我们添加了Fast R-CNN头[16],它由对齐的RoIpooling和两个全连接(fc)层组成,其后是分类和边界框回归分支。

We follow the procedure in [8] to turn the object detector into its deformable counterpart. The three layers of 3 × 3 convolutions in the conv5 stage are replaced by deformable convolution layers. Also, the aligned RoIpooling layer is replaced by deformable RoIpooling. Both networks are trained and visualized on the COCO benchmark. It is worth mentioning that when the offset learning rate is set to zero, the Deformable Faster R-CNN detector degenerates to regular Faster R-CNN with aligned RoIpooling.

我们按照[8]中的步骤将目标检测器转换为其可变形版本。conv5阶段中的三个3×3卷积层被替换为可变形卷积层。此外,对齐的RoIpooling层被替换为可变形RoIpooling。两种网络都在COCO基准上进行训练和可视化。值得一提的是,当偏移量的学习率设置为零时,可变形Faster R-CNN检测器退化为采用对齐RoIpooling的常规Faster R-CNN。

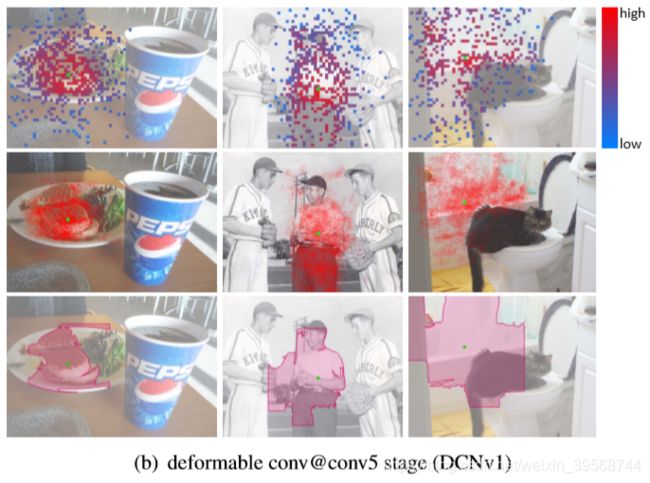

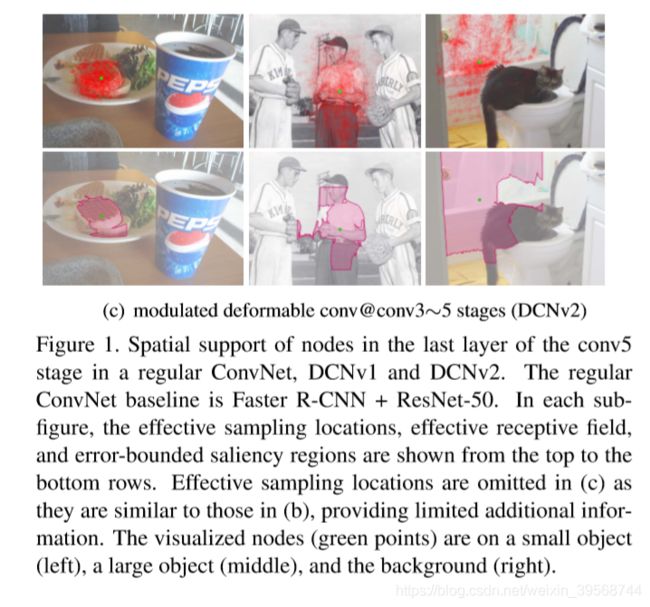

Using the three visualization modalities, we examine the spatial support of nodes in the last layer of the conv5 stage in Figure 1 (a)∼(b). The sampling locations analyzed in [8] are also shown. From these visualizations, we make the following observations:

使用这三种可视化模式,我们检查了图1 (a)∼(b)中conv5阶段的最后一层节点的空间支持。并给出了在[8]中分析的采样位置。通过这些可视化,我们得出以下观察结果:

1. Regular ConvNets can model geometric variations to some extent, as evidenced by the changes in spatial support with respect to image content. Thanks to the strong representation power of deep ConvNets, the network weights are learned to accommodate some degree of geometric transformation.

1. 常规的ConvNets可以在一定程度上对几何变化进行建模,这一点可以从空间支持随图像内容的变化中得到证明。得益于深度卷积网络强大的表示能力,网络权重经过学习能够适应一定程度的几何变换。

2. By introducing deformable convolution,the network’s ability to model geometric transformation is considerably enhanced, even on the challenging COCO benchmark. The spatial support adapts much more to image content, with nodes on the foreground having support that covers the whole object, while nodes on the background have expanded support that encompasses greater context. However, the range of spatial support may be inexact, with the effective receptive field and error-bounded saliency region of a foreground node including background areas irrelevant for detection.

2. 通过引入可变形卷积,网络对几何变换建模的能力大大增强,即使在具有挑战性的COCO基准上也是如此。空间支持更多地适应图像内容:前景上节点的支持覆盖整个对象,而背景上节点的支持则扩展到包含更多上下文。然而,空间支持的范围可能并不精确,前景节点的有效感受野和误差有界显著性区域会包含与检测无关的背景区域。

3. The three presented types of spatial support visualizations are more informative than the sampling locations used in [8]. This can be seen, for example, with regular ConvNets, which have fixed sampling locations along a grid but actually adapt their effective spatial support via network weights. The same is true for Deformable ConvNets, whose predictions are jointly affected by learned offsets and network weights. Examining sampling locations alone, as done in [8], can result in misleading conclusions about Deformable ConvNets.

3. 上述三种空间支持可视化方式比[8]中使用的采样位置提供了更多信息。例如,常规ConvNets的采样位置固定在网格上,但实际上会通过网络权重调整其有效空间支持。可变形卷积网络也是如此,它的预测受到学习到的偏移量和网络权重的共同影响。像[8]那样仅检查采样位置,可能导致关于可变形卷积网络的错误结论。

Figure 2 (a)∼(b) display the spatial support of the 2fc node in the per-RoI detection head, which is directly followed by the classification and the bounding box regression branches. The visualization of effective bin locations suggests that bins on the object foreground generally receive larger gradients from the classification branch, and thus exert greater influence on prediction. This observation holds for both aligned RoIpooling and Deformable RoIpooling. In Deformable RoIpooling, a much larger proportion of bins cover the object foreground than in aligned RoIpooling, thanks to the introduction of learnable bin offsets. Thus, more information from relevant bins is available for the downstream Fast R-CNN head. Meanwhile, the error-bounded saliency regions in both aligned RoIpooling and Deformable RoIpooling are not fully focused on the object foreground, which suggests that image content outside of the RoI affects the prediction result. According to a recent study [6], such feature interference could be harmful for detection.

图2 (a)∼(b)显示了每个RoI检测头中2fc节点的空间支持,其后紧接着分类和边界框回归分支。有效bin位置的可视化表明,目标前景上的bin通常会从分类分支获得较大的梯度,从而对预测产生较大的影响。这一观察对对齐RoIpooling和可变形RoIpooling都成立。在可变形RoIpooling中,由于引入了可学习的bin偏移量,覆盖对象前景的bin比例比对齐RoIpooling大得多。因此,下游的Fast R-CNN头可以获得更多来自相关bin的信息。同时,对齐RoIpooling和可变形RoIpooling的误差有界显著性区域都没有完全聚焦于对象前景,这说明RoI之外的图像内容会影响预测结果。根据最近的一项研究[6],这种特征干扰可能对检测有害。

While it is evident that Deformable ConvNets have markedly improved ability to adapt to geometric variation in comparison to regular ConvNets, it can also be seen that their spatial support may extend beyond the region of interest. We thus seek to upgrade Deformable ConvNets so that they can better focus on pertinent image content and deliver greater detection accuracy.

虽然与常规卷积网络相比,可变形卷积网络对几何变化的适应能力明显提高,但也可以看出,它们的空间支持可能超出感兴趣的区域。因此,我们寻求对可变形卷积网络进行升级,使其能够更好地专注于相关的图像内容,并提供更高的检测精度。

3. More Deformable ConvNets

To improve the network’s ability to adapt to geometric variations, we present changes to boost its modeling power and to help it take advantage of this increased capability.

为了提高网络适应几何变化的能力,我们提出了一些改动来增强其建模能力,并帮助它利用这种增强的能力。

3.1 Stacking More Deformable Conv Layers

Encouraged by the observation that Deformable ConvNets can effectively model geometric transformation on challenging benchmarks, we boldly replace more regular conv layers by their deformable counterparts. We expect that by stacking more deformable conv layers, the geometric transformation modeling capability of the entire network can be further strengthened.

可变形卷积网络能够在具有挑战性的基准上有效地对几何变换进行建模,受这一观察的鼓舞,我们大胆地用可变形卷积层替换更多的常规卷积层。我们期望通过堆叠更多的可变形卷积层,进一步增强整个网络的几何变换建模能力。

In this paper, deformable convolutions are applied in all the 3×3 conv layers in stages conv3, conv4, and conv5 in ResNet-50. Thus, there are 12 layers of deformable convolution in the network. In contrast, just three layers of deformable convolution are used in [8], all in the conv5 stage. It is observed in [8] that performance saturates when stacking more than three layers for the relatively simple and small-scale PASCAL VOC benchmark. Also, misleading offset visualizations on COCO may have hindered further exploration on more challenging benchmarks. In experiments, we observe that utilizing deformable layers in the conv3-conv5 stages achieves the best tradeoff between accuracy and efficiency for object detection on COCO. See Section 5.2 for details.

本文中,可变形卷积被应用于ResNet-50的conv3、conv4和conv5阶段中的所有3×3卷积层。因此,网络中共有12层可变形卷积。相比之下,[8]中只使用了三层可变形卷积,且全部位于conv5阶段。[8]观察到,在相对简单且小规模的PASCAL VOC基准上,叠加超过三层时性能趋于饱和。此外,COCO上具有误导性的偏移可视化可能阻碍了在更具挑战性的基准上的进一步探索。在实验中,我们观察到在conv3-conv5阶段使用可变形层,能够在COCO目标检测的精度和效率之间取得最佳权衡。详见5.2节。

3.2 Modulated Deformable Modules

To further strengthen the capability of Deformable ConvNets in manipulating spatial support regions, a modulation mechanism is introduced. With it, the Deformable ConvNets modules can not only adjust offsets in perceiving input features, but also modulate the input feature amplitudes from different spatial locations/bins. In the extreme case, a module can decide not to perceive signals from a particular location / bin by setting its feature amplitude to zero. Consequently, image content from the corresponding spatial location will have considerably reduced or no impact on the module output. Thus, the modulation mechanism provides the network module another dimension of freedom to adjust its spatial support regions.

为了进一步增强可变形卷积网络操控空间支持区域的能力,我们引入了一种调制机制。借助它,可变形ConvNets模块不仅可以在感知输入特征时调整偏移量,还可以调节来自不同空间位置/bin的输入特征幅值。在极端情况下,模块可以通过将特征幅值设置为零来决定不接收来自特定位置/bin的信号。因此,来自相应空间位置的图像内容对模块输出的影响会大大减小甚至消失。因此,调制机制为网络模块提供了另一个自由度来调整其空间支持区域。

Given a convolutional kernel of $K$ sampling locations, let $w_k$ and $p_k$ denote the weight and pre-specified offset for the $k$-th location, respectively. For example, $K = 9$ and $p_k \in \{(-1,-1), (-1,0), \dots, (1,1)\}$ defines a 3×3 convolutional kernel of dilation 1. Let $x(p)$ and $y(p)$ denote the features at location $p$ from the input feature maps $x$ and output feature maps $y$, respectively. The modulated deformable convolution can then be expressed as

给定一个具有K个采样位置的卷积核,令$w_k$和$p_k$分别表示第k个位置的权重和预先指定的偏移量。例如,K = 9且$p_k \in \{(-1,-1),(-1,0),\dots,(1,1)\}$定义了一个膨胀率为1的3×3卷积核。设$x(p)$和$y(p)$分别表示输入特征图x和输出特征图y中位置p处的特征。调制可变形卷积可表示为

$$y(p) = \sum_{k=1}^{K} w_k \cdot x(p + p_k + \Delta p_k) \cdot \Delta m_k$$

where $\Delta p_k$ and $\Delta m_k$ are the learnable offset and modulation scalar for the $k$-th location, respectively. The modulation scalar $\Delta m_k$ lies in the range [0, 1], while $\Delta p_k$ is a real number with unconstrained range. As $p + p_k + \Delta p_k$ is fractional, bilinear interpolation is applied as in [8] in computing $x(p + p_k + \Delta p_k)$. Both $\Delta p_k$ and $\Delta m_k$ are obtained via a separate convolution layer applied over the same input feature maps $x$.

其中$\Delta p_k$和$\Delta m_k$分别为第k个位置的可学习偏移量和调制标量。调制标量$\Delta m_k$位于[0,1]范围内,而$\Delta p_k$是取值范围不受约束的实数。由于$p + p_k + \Delta p_k$为小数,计算$x(p + p_k + \Delta p_k)$时如[8]一样采用双线性插值。$\Delta p_k$和$\Delta m_k$都是通过在同一输入特征图x上应用一个单独的卷积层得到的。

This convolutional layer is of the same spatial resolution and dilation as the current convolutional layer. The output is of $3K$ channels, where the first $2K$ channels correspond to the learned offsets $\{\Delta p_k\}_{k=1}^{K}$, and the remaining $K$ channels are further fed to a sigmoid layer to obtain the modulation scalars $\{\Delta m_k\}_{k=1}^{K}$. The kernel weights in this separate convolution layer are initialized to zero.

该卷积层与当前卷积层具有相同的空间分辨率和膨胀率。其输出为3K个通道,其中前2K个通道对应于学习到的偏移量$\{\Delta p_k\}_{k=1}^{K}$,其余K个通道进一步送入sigmoid层,得到调制标量$\{\Delta m_k\}_{k=1}^{K}$。这个单独卷积层的核权重初始化为零。

Thus, the initial values of $\Delta p_k$ and $\Delta m_k$ are 0 and 0.5 (the sigmoid of 0), respectively. The learning rates of the added conv layers for offset and modulation learning are set to 0.1 times those of the existing layers.

因此,$\Delta p_k$和$\Delta m_k$的初始值分别为0和0.5。新增的用于偏移和调制学习的卷积层的学习率设置为现有层的0.1倍。
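The modulated deformable convolution formula and the 3K-channel offset/modulation head described above can be sketched for a single channel and a single output location. This is a minimal illustration, not the authors' implementation; in particular, storing the first 2K channels as interleaved (dy_k, dx_k) pairs is an assumption, and the function names are hypothetical.

```python
import math

# Pre-specified 3x3 grid p_k with dilation 1, as (dy, dx) pairs in row-major order.
GRID_3X3 = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def bilinear(feature, y, x):
    """Bilinear interpolation of a 2-D list at fractional (y, x); out-of-range corners read as 0."""
    h, w = len(feature), len(feature[0])
    y0, x0 = math.floor(y), math.floor(x)
    total = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                total += (1 - abs(y - yy)) * (1 - abs(x - xx)) * feature[yy][xx]
    return total


def split_offset_modulation(raw):
    """Split a 3K-vector from the offset/modulation conv into K offsets and K modulation scalars.

    Assumption: the first 2K entries are interleaved (dy_k, dx_k) pairs.
    The last K entries go through a sigmoid, so they land in [0, 1].
    """
    k = len(raw) // 3
    offsets = [(raw[2 * i], raw[2 * i + 1]) for i in range(k)]
    modulation = [1.0 / (1.0 + math.exp(-v)) for v in raw[2 * k:]]
    return offsets, modulation


def modulated_deform_conv_at(feature, weights, p, offsets, modulation, grid=GRID_3X3):
    """y(p) = sum_k w_k * x(p + p_k + dp_k) * dm_k, for one channel and one location p."""
    py, px = p
    out = 0.0
    for w_k, (gy, gx), (oy, ox), m_k in zip(weights, grid, offsets, modulation):
        out += w_k * bilinear(feature, py + gy + oy, px + gx + ox) * m_k
    return out


if __name__ == "__main__":
    feature = [[float(5 * y + x) for x in range(5)] for y in range(5)]
    weights = [1.0] * 9
    offsets, modulation = split_offset_modulation([0.0] * 27)  # zero-initialized head
    print(modulation[0])  # → 0.5
    print(modulated_deform_conv_at(feature, weights, (2, 2), offsets, modulation))  # → 54.0
    print(modulated_deform_conv_at(feature, weights, (2, 2), offsets, [1.0] * 9))  # → 108.0
```

With the zero-initialized head, every sample has zero offset and modulation 0.5, so the output is exactly half of the regular 3×3 convolution (108.0). Setting all modulation scalars to 1 and offsets to 0 recovers the regular convolution, and setting one scalar to 0 removes that location's influence entirely.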

The design of modulated deformable RoIpooling is similar. Given an input RoI, RoIpooling divides it into $K$ spatial bins (e.g., 7×7). Within each bin, sampling grids of even spatial intervals are applied (e.g., 2×2). The sampled values on the grids are averaged to compute the bin output. Let $\Delta p_k$ and $\Delta m_k$ be the learnable offset and modulation scalar for the $k$-th bin. The output binning feature $y(k)$ is computed as

调制可变形RoIpooling的设计与此类似。给定一个输入RoI,RoIpooling将它划分为K个空间bin(例如7×7)。在每个bin内,采用空间间隔均匀的采样网格(例如2×2)。将网格上的采样值取平均,得到bin的输出。设$\Delta p_k$和$\Delta m_k$为第k个bin的可学习偏移量和调制标量。输出的bin特征$y(k)$计算为

$$y(k) = \sum_{j=1}^{n_k} x(p_{kj} + \Delta p_k) \cdot \Delta m_k / n_k$$

where $p_{kj}$ is the sampling location for the $j$-th grid cell in the $k$-th bin, and $n_k$ denotes the number of sampled grid cells. Bilinear interpolation is applied to obtain features $x(p_{kj} + \Delta p_k)$. The values of $\Delta p_k$ and $\Delta m_k$ are produced by a sibling branch on the input feature maps. In this branch, RoIpooling generates features on the RoI, followed by two fc layers of 1024-D (initialized with a Gaussian distribution with a standard deviation of 0.01). On top of that, an additional fc layer produces output of $3K$ channels (weights initialized to zero). The first $2K$ channels are the normalized learnable offsets, which are multiplied element-wise by the RoI's width and height to obtain $\{\Delta p_k\}_{k=1}^{K}$. The remaining $K$ channels are normalized by a sigmoid layer to produce $\{\Delta m_k\}_{k=1}^{K}$. The learning rates of the added fc layers for offset learning are the same as those of the existing layers.

其中$p_{kj}$为第k个bin中第j个网格单元的采样位置,$n_k$为采样网格单元的个数。采用双线性插值得到特征$x(p_{kj} + \Delta p_k)$。$\Delta p_k$和$\Delta m_k$的值由作用于输入特征图的并列分支产生。在这个分支中,RoIpooling在RoI上生成特征,随后是两个1024维的fc层(以标准差为0.01的高斯分布初始化)。在此之上,另一个fc层输出3K个通道(权重初始化为零)。前2K个通道是归一化的可学习偏移量,将其与RoI的宽和高逐元素相乘得到$\{\Delta p_k\}_{k=1}^{K}$。其余K个通道经sigmoid层归一化,得到$\{\Delta m_k\}_{k=1}^{K}$。新增的用于偏移学习的fc层的学习率与现有层相同。
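The bin computation above can be sketched for one bin: average the bilinear samples at the grid points shifted by the bin offset, then multiply by the sigmoid modulation scalar. Two details are assumptions not fixed by the text: the grid points are placed at the centers of evenly spaced sub-cells (as in RoIAlign-style pooling), and the RoI is given as (y1, x1, y2, x2) in feature-map coordinates. The function names are hypothetical.

```python
import math


def bilinear(feature, y, x):
    """Bilinear interpolation of a 2-D list at fractional (y, x); out-of-range corners read as 0."""
    h, w = len(feature), len(feature[0])
    y0, x0 = math.floor(y), math.floor(x)
    total = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                total += (1 - abs(y - yy)) * (1 - abs(x - xx)) * feature[yy][xx]
    return total


def modulated_deform_roi_bin(feature, roi, bin_index, bins=(7, 7), grid=(2, 2),
                             norm_offset=(0.0, 0.0), mod_logit=0.0):
    """y(k) = sum_j x(p_kj + dp_k) * dm_k / n_k for the bin at bin_index = (row, col)."""
    y1, x1, y2, x2 = roi
    roi_h, roi_w = y2 - y1, x2 - x1
    bin_h, bin_w = roi_h / bins[0], roi_w / bins[1]
    row, col = bin_index
    # dp_k: the normalized offset scaled element-wise by the RoI height and width.
    off_y, off_x = norm_offset[0] * roi_h, norm_offset[1] * roi_w
    # dm_k: sigmoid of the raw modulation output.
    m_k = 1.0 / (1.0 + math.exp(-mod_logit))
    total = 0.0
    for i in range(grid[0]):
        for j in range(grid[1]):
            # p_kj: evenly spaced sample points inside the bin (sub-cell centers, an assumption).
            sy = y1 + row * bin_h + (i + 0.5) * bin_h / grid[0]
            sx = x1 + col * bin_w + (j + 0.5) * bin_w / grid[1]
            total += bilinear(feature, sy + off_y, sx + off_x)
    n_k = grid[0] * grid[1]
    return total / n_k * m_k


if __name__ == "__main__":
    ramp = [[float(x) for x in range(10)] for _ in range(10)]  # value equals the x coordinate
    print(modulated_deform_roi_bin(ramp, (0, 0, 8, 8), (0, 0), bins=(2, 2)))  # → 1.0
    print(modulated_deform_roi_bin(ramp, (0, 0, 8, 8), (0, 0), bins=(2, 2),
                                   norm_offset=(0.0, 0.125)))  # → 1.5
```

In the first call the bin samples x = 1 and x = 3 (mean 2), halved by the initial modulation of 0.5. A normalized offset of 0.125 on an 8-pixel-wide RoI shifts every sample by one pixel, raising the mean to 3.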

3.3 R-CNN Feature Mimicking

As observed in Figure 2, the error-bounded saliency region of a per-RoI classification node can stretch beyond the RoI for both regular ConvNets and Deformable ConvNets. Image content outside of the RoI may thus affect the extracted features and consequently degrade the final results of object detection.

如图2所示,对于常规卷积和可变形卷积,每个RoI分类节点的误差有界显著性区域都可以超出RoI。RoI外的图像内容可能会影响提取的特征,从而降低目标检测的最终结果。

In [6], the authors find redundant context to be a plausible source of detection error for Faster R-CNN. Together with other motivations (e.g., to share fewer features between the classification and bounding box regression branches), the authors propose to combine the classification scores of Faster R-CNN and R-CNN to obtain the final detection score. Since R-CNN classification scores are focused on cropped image content from the input RoI, incorporating them would help to alleviate the redundant context problem and improve detection accuracy. However, the combined system is slow because both the Faster R-CNN and R-CNN branches need to be applied in both training and inference.

在[6]中,作者发现冗余上下文可能是Faster R-CNN检测错误的来源。连同其他动机(例如,为了减少分类和边界框回归分支之间共享的特征),作者提出将Faster R-CNN和R-CNN的分类分数结合起来,得到最终的检测分数。由于R-CNN分类得分主要集中在从输入RoI中裁剪的图像内容上,将其合并将有助于缓解冗余上下文问题,提高检测精度。然而,由于Faster R-CNN和R-CNN分支在训练和推理中都需要使用,因此组合系统的速度较慢。

Meanwhile, Deformable ConvNets are powerful in adjusting spatial support regions. For Deformable ConvNets v2 in particular, the modulated deformable RoIpooling module could simply set the modulation scalars of bins in a way that excludes redundant context. However, our experiments in Section 5.3 show that even with modulated deformable modules, such representations cannot be learned well through the standard Faster R-CNN training procedure. We suspect that this is because the conventional Faster R-CNN training loss cannot effectively drive the learning of such representations. Additional guidance is needed to steer the training.

同时,可变形卷积网络在调节空间支持区域方面能力很强。特别是对于可变形ConvNets v2,调制可变形RoIpooling模块可以简单地通过设置bin的调制标量来排除冗余上下文。然而,我们在5.3节的实验表明,即使使用调制可变形模块,通过标准的Faster R-CNN训练流程也不能很好地学到这种表示。我们怀疑这是因为传统的Faster R-CNN训练损失不能有效地驱动这种表示的学习。需要额外的指导来引导训练。

Motivated by recent work on feature mimicking [2, 22, 28], we incorporate a feature mimic loss on the per-RoI features of Deformable Faster R-CNN to force them to be similar to R-CNN features extracted from cropped images. This auxiliary training objective is intended to drive Deformable Faster R-CNN to learn more "focused" feature representations like R-CNN. We note that, based on the visualized spatial support regions in Figure 2, a focused feature representation may well not be optimal for negative RoIs on the image background. For background areas, more context information may need to be considered so as not to produce false positive detections. Thus, the feature mimic loss is enforced only on positive RoIs that sufficiently overlap with ground-truth objects.

受最近关于特征模仿的研究[2,22,28]的启发,我们在可变形Faster R-CNN的每个RoI的特征上加入了特征模仿损失,迫使其与从裁剪图像中提取的R-CNN特征相似。这个辅助训练目标旨在驱动可变形Faster R-CNN像R-CNN那样学习更"聚焦"的特征表示。我们注意到,基于图2中可视化的空间支持区域,对于图像背景上的负RoI,聚焦的特征表示很可能不是最优的。对于背景区域,可能需要考虑更多的上下文信息,以免产生误检。因此,特征模仿损失只施加在与真值目标充分重叠的正RoI上。

The network architecture for training Deformable Faster R-CNN is presented in Figure 3. In addition to the Faster R-CNN network, an additional R-CNN branch is added for feature mimicking. Given an RoI $b$ for feature mimicking, the image patch corresponding to it is cropped and resized to 224 × 224 pixels. In the R-CNN branch, the backbone network operates on the resized image patch and produces feature maps of 14×14 spatial resolution. A (modulated) deformable RoIpooling layer is applied on top of the feature maps, where the input RoI covers the whole resized image patch (top-left corner at (0, 0), and height and width are 224 pixels). After that, 2 fc layers of 1024-D are applied, producing an R-CNN feature representation for the input image patch, denoted by $f_{\text{RCNN}}(b)$.

训练可变形Faster R-CNN的网络结构如图3所示。除了Faster R-CNN网络之外,还添加了一个额外的R-CNN分支用于特征模仿。给定一个用于特征模仿的RoI b,将其对应的图像块裁剪出来并缩放到224×224像素。在R-CNN分支中,主干网络对缩放后的图像块进行处理,生成14×14空间分辨率的特征图。在特征图之上应用(调制)可变形RoIpooling层,其输入RoI覆盖整个缩放后的图像块(左上角为(0,0),高度和宽度均为224像素)。之后应用两个1024维的fc层,为输入图像块生成R-CNN特征表示,记为$f_{\text{RCNN}}(b)$。
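The crop-and-resize step and the resulting R-CNN branch geometry can be sketched as below. The text does not say which resampling method is used, so nearest-neighbour sampling is assumed here purely for simplicity; the stride of 16 is taken from the backbone setup in Section 2.2, which is what makes a 224 × 224 patch yield a 14 × 14 feature map. All names are hypothetical.

```python
BACKBONE_STRIDE = 16  # effective stride after conv5 in the setup of Section 2.2
PATCH_SIZE = 224


def crop_and_resize(image, roi, out_size=PATCH_SIZE):
    """Crop roi = (y1, x1, y2, x2) (integer pixels, end-exclusive) and resize it to
    out_size x out_size with nearest-neighbour sampling (an assumption for this sketch)."""
    y1, x1, y2, x2 = roi
    h, w = y2 - y1, x2 - x1
    # i * h // out_size is always < h because i < out_size, so no clamping is needed.
    return [[image[y1 + i * h // out_size][x1 + j * w // out_size]
             for j in range(out_size)]
            for i in range(out_size)]


def rcnn_branch_geometry(out_size=PATCH_SIZE, stride=BACKBONE_STRIDE):
    """Feature-map side length and the whole-patch RoI fed to RoIpooling in the R-CNN branch."""
    return out_size // stride, (0, 0, out_size, out_size)


if __name__ == "__main__":
    image = [[10 * y + x for x in range(10)] for y in range(10)]
    patch = crop_and_resize(image, (2, 3, 6, 8))
    print(len(patch), len(patch[0]), patch[0][0], patch[223][223])  # → 224 224 23 57
    print(rcnn_branch_geometry())  # → (14, (0, 0, 224, 224))
```

Because the RoI passed to RoIpooling in this branch always spans the whole patch, the R-CNN features can only draw on content inside the original box, which is the "focused" property the mimic loss transfers.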

A $(C+1)$-way Softmax classifier follows for classification, where $C$ denotes the number of foreground categories, plus one for background. The feature mimic loss is enforced between the R-CNN feature representation $f_{\text{RCNN}}(b)$ and the counterpart in Faster R-CNN, $f_{\text{FRCNN}}(b)$, which is also 1024-D and is produced by the 2 fc layers in the Fast R-CNN head. The feature mimic loss is defined on the cosine similarity between $f_{\text{RCNN}}(b)$ and $f_{\text{FRCNN}}(b)$, computed as

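随后是一个(C+1)类的Softmax分类器用于分类,其中C表示前景类别的数量,另加一个背景类。特征模仿损失施加在R-CNN特征表示$f_{\text{RCNN}}(b)$与Faster R-CNN中对应的特征$f_{\text{FRCNN}}(b)$之间,后者同样是1024维,由Fast R-CNN头中的2个fc层产生。特征模仿损失基于$f_{\text{RCNN}}(b)$与$f_{\text{FRCNN}}(b)$的余弦相似度定义,计算为

$$L_{\text{mimic}} = \sum_{b \in \Omega} \left[ 1 - \cos\left( f_{\text{RCNN}}(b), f_{\text{FRCNN}}(b) \right) \right]$$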

where $\Omega$ denotes the set of RoIs sampled for feature mimic training. In the SGD training, given an input image, 32 positive region proposals generated by RPN are randomly sampled into $\Omega$. A cross-entropy classification loss is enforced on the R-CNN classification head, also computed on the RoIs in $\Omega$.

其中$\Omega$表示为特征模仿训练采样的RoI集合。在SGD(随机梯度下降)训练中,给定一幅输入图像,从RPN生成的区域建议中随机采样32个正样本放入$\Omega$。R-CNN分类头上施加交叉熵分类损失,同样在$\Omega$中的RoI上计算。
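The mimic loss and the sampling of $\Omega$ described above can be sketched directly. Features are plain lists here (1024-D in the paper, tiny toy vectors below), and how a proposal is judged positive (for example, an IoU threshold) is not specified in this section, so the caller supplies the flags. Function names are hypothetical, and the cosine helper assumes non-zero vectors.

```python
import math
import random


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def feature_mimic_loss(rcnn_feats, frcnn_feats):
    """L_mimic = sum over b in Omega of [1 - cos(f_RCNN(b), f_FRCNN(b))]."""
    return sum(1.0 - cosine_similarity(a, b) for a, b in zip(rcnn_feats, frcnn_feats))


def sample_mimic_rois(proposals, is_positive, num=32, rng=random):
    """Randomly pick up to `num` positive proposals to form Omega.

    The positive criterion is left to the caller via the `is_positive` flags.
    """
    positives = [p for p, pos in zip(proposals, is_positive) if pos]
    return rng.sample(positives, min(num, len(positives)))


if __name__ == "__main__":
    rcnn = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]
    frcnn = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]  # aligned, orthogonal, opposite
    print(feature_mimic_loss(rcnn, frcnn))  # → 3.0
```

Each RoI contributes between 0 (features point the same way) and 2 (opposite directions). Because only the angle matters, the loss does not force the two branches to match feature magnitudes.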

Network training is driven by the feature mimic loss and the R-CNN classification loss, together with the original loss terms in Faster R-CNN. The loss weights of the two newly introduced loss terms are 0.1 times those of the original Faster R-CNN loss terms. The network parameters between the corresponding modules in the R-CNN and the Faster R-CNN branches are shared, including the backbone network, (modulated) deformable RoIpooling, and the 2 fc heads (the classification heads in the two branches are unshared). In inference, only the Faster R-CNN network is applied on the test images, without the auxiliary R-CNN branch. Thus, no additional computation is introduced by R-CNN feature mimicking in inference.

网络训练由特征模仿损失、R-CNN分类损失以及Faster R-CNN原有的损失项共同驱动。两个新引入损失项的权重是原Faster R-CNN损失项权重的0.1倍。R-CNN分支与Faster R-CNN分支中对应模块之间的网络参数是共享的,包括骨干网络、(调制)可变形RoIpooling和2个fc头(两个分支中的分类头不共享)。在推理时,只在测试图像上应用Faster R-CNN网络,而不使用辅助的R-CNN分支。因此,R-CNN特征模仿在推理时不引入额外的计算。
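The loss weighting in the paragraph above amounts to a simple weighted sum. The sketch assumes the original Faster R-CNN terms each carry weight 1, so the two new terms get weight 0.1. The dictionary keys are hypothetical names for those original terms, not identifiers from any codebase.

```python
def total_training_loss(faster_rcnn_terms, mimic_loss, rcnn_cls_loss, aux_weight=0.1):
    """Original Faster R-CNN loss terms (assumed weight 1 each) plus the feature mimic loss
    and the auxiliary R-CNN classification loss, both scaled by aux_weight."""
    return sum(faster_rcnn_terms.values()) + aux_weight * (mimic_loss + rcnn_cls_loss)


if __name__ == "__main__":
    # Hypothetical per-term values for one training iteration.
    terms = {"rpn_cls": 0.5, "rpn_bbox": 0.25, "fast_rcnn_cls": 1.0, "fast_rcnn_bbox": 0.25}
    print(total_training_loss(terms, mimic_loss=2.0, rcnn_cls_loss=1.0))  # ≈ 2.3
```

At inference time neither auxiliary term exists, since the R-CNN branch is dropped and only the Faster R-CNN network runs.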

4. Related Work

Deformation Modeling is a long-standing problem in computer vision, and there has been tremendous effort in designing translation-invariant features. Prior to the deep learning era, notable works include scale-invariant feature transform (SIFT) [30], oriented FAST and rotated BRIEF (ORB) [34], and deformable part-based models (DPM) [12]. Such works are limited by the inferior representation power of handcrafted features and the constrained family of geometric transformations they address (e.g., affine transformations). Spatial transformer networks (STN) [25] is the first work on learning translation-invariant features for deep CNNs. It learns to apply global affine transformations to warp feature maps, but such transformations inadequately model the more complex geometric variations encountered in many vision tasks. Instead of performing global parametric transformations and feature warping, Deformable ConvNets sample feature maps in a local and dense manner, via learnable offsets in the proposed deformable convolution and deformable RoIpooling modules. Deformable ConvNets is the first work to effectively model geometric transformations in complex vision tasks (e.g., object detection and semantic segmentation) on challenging benchmarks.

变形建模是计算机视觉中一个长期存在的问题,人们在设计平移不变特征方面付出了巨大的努力。在深度学习时代之前,著名的工作包括尺度不变特征变换(SIFT)[30]、ORB(oriented FAST and rotated BRIEF)[34]和可变形部件模型(DPM)[12]。这些工作受限于手工特征较弱的表示能力,以及它们所处理的几何变换族类的局限(例如仿射变换)。空间变换网络(STN)[25]是为深度CNN学习平移不变特征的第一个工作。它学习对特征图施加全局仿射变换进行扭曲,但这种变换不足以建模许多视觉任务中遇到的更复杂的几何变化。可变形卷积网络不进行全局参数化变换和特征扭曲,而是通过所提出的可变形卷积和可变形RoIpooling模块中可学习的偏移量,以局部且密集的方式对特征图进行采样。可变形卷积网络是在具有挑战性的基准上、在复杂视觉任务(如目标检测和语义分割)中有效建模几何变换的第一个工作。

Our work extends Deformable ConvNets by enhancing its modeling power and facilitating network training. This new version of Deformable ConvNets yields significant performance gains over the original model.

我们的工作通过增强可变形卷积的建模能力和促进网络训练来扩展可变形卷积。与原来的模型相比,这个新版本的可变形卷积有显著的性能提升。

Relation Networks and Attention Modules are first proposed in natural language processing [14, 15, 4, 36] and physical system modeling [3, 38, 23, 35, 10, 32]. An attention / relation module affects an individual element (e.g., a word in a sentence) by aggregating features from a set of elements (e.g., all the words in the sentence), where the aggregation weights are usually defined on feature similarities among the elements. They are powerful in capturing long-range dependencies and contextual information in these tasks. Recently, the concurrent works of [24] and [37] successfully extend relation networks and attention modules to the image domain, for modeling long-range object-object and pixel-pixel relations, respectively. In [19], a learnable region feature extractor is proposed, unifying the previous region feature extraction modules from the pixel-object relation perspective.

关系网络和注意力模块最早是在自然语言处理[14,15,4,36]和物理系统建模[3,38,23,35,10,32]中提出的。注意力/关系模块通过聚合一组元素(例如句子中的所有单词)的特征来影响单个元素(例如句子中的一个单词),其中聚合权重通常根据元素之间的特征相似性定义。它们在这些任务中捕捉长距离依赖关系和上下文信息方面非常强大。最近,[24]和[37]的同期工作成功地将关系网络和注意力模块扩展到图像领域,分别用于建模长距离的对象-对象关系和像素-像素关系。在[19]中,提出了一种可学习的区域特征提取器,从像素-对象关系的角度统一了以往的区域特征提取模块。

A common issue with such approaches is that the aggregation weights and the aggregation operation need to be computed on the elements in a pairwise fashion, incurring heavy computation that is quadratic to the number of elements (e.g., all the pixels in an image). Our developed approach can be perceived as a special attention mechanism where only a sparse set of elements have non-zero aggregation weights (e.g., 3 × 3 pixels from among all the image pixels). The attended elements are specified by the learnable offsets, and the aggregation weights are controlled by the modulation mechanism. The computational overhead is just linear to the number of elements, which is negligible compared to that of the entire network (See Table 1).

这类方法的一个共同问题是,需要以成对的方式在元素上计算聚合权重和聚合操作,计算量是元素数量的平方(例如图像中的所有像素),开销很大。我们的方法可以看作一种特殊的注意力机制,其中只有稀疏的一组元素具有非零的聚合权重(例如从所有图像像素中选取3×3个像素)。被关注的元素由可学习的偏移量指定,聚合权重由调制机制控制。计算开销与元素数量呈线性关系,与整个网络的计算量相比可以忽略不计(见表1)。
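The quadratic-versus-linear argument above can be made concrete with a quick count. The sketch counts aggregation terms only (not FLOPs), and the 100 × 100 feature-map size is an illustrative choice, not a figure from the paper.

```python
def pairwise_aggregation_terms(num_elements):
    """Dense attention/relation module: every element aggregates from every element."""
    return num_elements * num_elements


def deformable_aggregation_terms(num_elements, samples_per_element=9):
    """Deformable sampling: every element aggregates from K sampled locations (K = 9 for 3x3)."""
    return num_elements * samples_per_element


if __name__ == "__main__":
    n = 100 * 100  # illustrative 100x100 feature map
    print(pairwise_aggregation_terms(n))    # → 100000000
    print(deformable_aggregation_terms(n))  # → 90000
```

Doubling the feature-map side length quadruples the deformable count but multiplies the pairwise count by sixteen, which is why the sparse scheme stays cheap relative to the rest of the network.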

Spatial Support Manipulation. For atrous convolution, the spatial support of convolutional layers has been enlarged by padding zeros in the convolutional kernels [5]. The padding parameters are handpicked and predetermined. In active convolution [26], which is contemporary with Deformable ConvNets, convolutional kernel offsets are learned via back-propagation. But the offsets are static model parameters fixed after training and shared over different spatial locations. In a multi-path network for object detection [40], multiple RoIpooling layers are employed for each input RoI to better exploit multi-scale and context information. The multiple RoIpooling layers are centered at the input RoI, and are of different spatial scales. A common issue with these approaches is that the spatial support is controlled by static parameters and does not adapt to image content.

空间支持操控。对于空洞卷积(atrous convolution),通过在卷积核中填充零来扩大卷积层的空间支持[5]。填充参数是人工选择且预先确定的。在与可变形卷积网络同时期的主动卷积(active convolution)[26]中,卷积核的偏移量通过反向传播学习。但这些偏移量是训练后即固定的静态模型参数,并在不同空间位置之间共享。在用于目标检测的多路径网络[40]中,每个输入RoI使用多个RoIpooling层,以更好地利用多尺度和上下文信息。这些RoIpooling层都以输入RoI为中心,具有不同的空间尺度。这些方法的一个共同问题是空间支持由静态参数控制,不能适应图像内容。
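The zero-padding view of atrous convolution can be checked directly: inserting $d-1$ zeros between the taps of a 3×3 kernel gives the same response as sampling the input on the fixed grid $p + d \cdot p_k$. The pure-Python sketch below uses hypothetical helper names and does no border handling. It shows that the enlarged support is a hand-picked pattern that is identical at every location, which is the static behavior the paragraph contrasts with content-dependent offsets.

```python
def dilate_kernel(kernel, d):
    """Atrous view 1: insert (d - 1) zeros between neighbouring kernel taps."""
    n = len(kernel)
    size = (n - 1) * d + 1
    out = [[0] * size for _ in range(size)]
    for i in range(n):
        for j in range(n):
            out[i * d][j * d] = kernel[i][j]
    return out


def dense_response(x, p, kernel):
    """Correlate a (possibly zero-padded) kernel with x, centred at p (no border handling)."""
    r = len(kernel) // 2
    return sum(kernel[i][j] * x[p[0] + i - r][p[1] + j - r]
               for i in range(len(kernel)) for j in range(len(kernel)))


def atrous_response(x, p, kernel, d):
    """Atrous view 2: sample x on the fixed grid p + d * p_k; the grid never depends on x."""
    r = len(kernel) // 2
    return sum(kernel[i][j] * x[p[0] + (i - r) * d][p[1] + (j - r) * d]
               for i in range(len(kernel)) for j in range(len(kernel)))


if __name__ == "__main__":
    x = [[7 * i + j for j in range(7)] for i in range(7)]
    k = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    for p in [(3, 3), (2, 4)]:
        print(p, dense_response(x, p, dilate_kernel(k, 2)), atrous_response(x, p, k, 2))
```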

Effective Receptive Field and Salient Region. Towards better interpreting how a deep network functions, significant progress has been made in understanding which image regions contribute most to network prediction. Recent works on effective receptive fields [31] and salient regions [41, 44, 13, 7] reveal that only a small proportion of pixels in the theoretical receptive field contribute significantly to the final network prediction. The effective support region is controlled by the joint effect of network weights and sampling locations. Here we exploit the developed techniques to better understand the network behavior of Deformable ConvNets. The resulting observations guide and motivate us to improve over the original model.

有效感受野与显著区域。为了更好地解释深度网络的工作方式,在理解哪些图像区域对网络预测贡献最大方面已经取得了重大进展。最近关于有效感受野[31]和显著区域[41,44,13,7]的研究表明,理论感受野中只有一小部分像素对最终的网络预测有显著贡献。有效支持区域由网络权重和采样位置共同控制。在这里,我们利用这些已有技术来更好地理解可变形卷积网络的行为。由此得到的观察结果指导并激励我们对原始模型进行改进。
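A toy one-dimensional illustration of why the effective support can be much smaller than the theoretical receptive field. It assumes a stack of identical linear 3-tap averaging layers (real networks have learned weights and nonlinearities), for which the gradient of the centre output with respect to each input equals the kernel convolved with itself once per layer. The theoretical receptive field grows linearly with depth, while the window holding most of the weight grows roughly with the square root of depth. Function names are illustrative.

```python
def erf_1d(num_layers, kernel=(1 / 3, 1 / 3, 1 / 3)):
    """Input-gradient profile of the centre output of `num_layers` stacked linear
    1-D conv layers with a symmetric kernel: the kernel convolved with itself."""
    weights = [1.0]
    for _ in range(num_layers):
        out = [0.0] * (len(weights) + len(kernel) - 1)
        for i, a in enumerate(weights):
            for j, b in enumerate(kernel):
                out[i + j] += a * b
        weights = out
    return weights  # length equals the theoretical receptive field size


def central_fraction(weights, mass=0.9):
    """Smallest centred window holding `mass` of the total weight, as a fraction of the RF."""
    total, c = sum(weights), len(weights) // 2
    for r in range(c + 1):
        if sum(weights[c - r:c + r + 1]) >= mass * total:
            return (2 * r + 1) / len(weights)
    return 1.0


if __name__ == "__main__":
    for depth in (5, 20, 80):
        w = erf_1d(depth)
        print(depth, len(w), round(central_fraction(w), 3))
```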

Network Mimicking and Distillation are recently introduced techniques for model acceleration and compression. Given a large teacher model, a compact student model is trained by mimicking the teacher model output or feature responses on training images [2, 22, 28]. The hope is that the compact model can be better trained by distilling knowledge from the large model.

网络模仿与蒸馏是近年来提出的模型加速与压缩技术。给定一个大型教师模型,通过模仿教师模型在训练图像上的输出或特征响应来训练紧凑的学生模型[2,22,28]。其期望是通过从大模型中蒸馏知识,使紧凑模型得到更好的训练。

Here we employ a feature mimic loss to help the network learn features that reflect the object focus and classification power of R-CNN features. Improved accuracy is obtained and the visualized spatial supports corroborate this approach.

在这里,我们使用特征模仿损失来帮助网络学习能够反映R-CNN特征的物体聚焦能力和分类能力的特征。这带来了精度提升,可视化的空间支持也印证了该方法的有效性。
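A minimal sketch of a feature mimic loss, assuming a cosine-similarity form summed over sampled RoIs, $\sum_b [1 - \cos(f_{RCNN}(b), f_{FRCNN}(b))]$, where $f_{RCNN}(b)$ is the feature computed from the cropped image patch of box $b$ and $f_{FRCNN}(b)$ is the per-RoI feature from the detection head. The exact loss form and the `is_positive` filter (restricting the loss to positive boxes, the variant found most effective in Section 5.3) are assumptions for illustration; plain Python lists stand in for tensors.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def mimic_loss(rcnn_feats, head_feats, is_positive):
    """Sum of (1 - cosine) between R-CNN patch features and detection-head RoI
    features, counting only RoIs whose flag in `is_positive` is True."""
    return sum(1.0 - cosine(t, s)
               for t, s, pos in zip(rcnn_feats, head_feats, is_positive) if pos)


if __name__ == "__main__":
    rcnn = [[1.0, 0.0], [0.0, 1.0]]
    head = [[2.0, 0.0], [1.0, 0.0]]
    print(mimic_loss(rcnn, head, [True, True]))   # 1.0: aligned pair costs 0, orthogonal pair 1
    print(mimic_loss(rcnn, head, [True, False]))  # 0.0: the orthogonal RoI is not mimicked
```

Because cosine similarity ignores feature magnitude, this form only asks the head features to point in the same direction as the R-CNN features.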

5.Experiments

5.1.Experiment Settings

Our models are trained on the 118k images of the COCO 2017 train set. In ablation, evaluation is done on the 5k images of the COCO 2017 validation set. We also evaluate performance on the 20k images of the COCO 2017 test-dev set. The standard mean average-precision scores at different box and mask IoUs are used for measuring object detection and instance segmentation accuracy, respectively.

我们的模型在COCO 2017训练集的118k张图像上训练。消融实验在COCO 2017验证集的5k张图像上进行评估。我们还在COCO 2017 test-dev集的20k张图像上评估性能。分别使用不同边界框IoU和掩码IoU阈值下的标准平均精度(mAP)来衡量目标检测和实例分割的精度。

Faster R-CNN and Mask R-CNN are chosen as the baseline systems. ImageNet [9] pre-trained ResNet-50 is utilized as the backbone. The implementation of Faster R-CNN is the same as in Section 3.3. For Mask R-CNN, we follow the implementation in [20]. To turn the networks into their deformable counterparts, the last set of 3×3 regular conv layers (close to the output in the bottom-up computation) are replaced by (modulated) deformable conv layers. Aligned RoIpooling is replaced by (modulated) deformable RoIpooling. Specifically for Mask R-CNN, the two aligned RoIpooling layers with 7×7 and 14×14 bins are replaced by two (modulated) deformable RoIpooling layers with the same bin numbers. In R-CNN feature mimicking, the feature mimic loss is enforced on the RoI head for classification only (excluding that for mask estimation). For both systems, the choice of hyper-parameters follows the latest Detectron [18] code base except for the image resolution, which is briefly presented here. In both training and inference, images are resized so that the shorter side is 1,000 pixels. Anchors of 5 scales and 3 aspect ratios are utilized. 2k and 1k region proposals are generated at a non-maximum suppression threshold of 0.7 at training and inference, respectively. In SGD training, 256 anchor boxes (of positive-negative ratio 1:1) and 512 region proposals (of positive-negative ratio 1:3) are sampled for backpropagating their gradients. In our experiments, the networks are trained on 8 GPUs with 2 images per GPU for 16 epochs. The learning rate is initialized to 0.02 and is divided by 10 at the 10th and the 14th epochs. The weight decay and the momentum parameters are set to 10⁻⁴ and 0.9, respectively.

选择Faster R-CNN和Mask R-CNN作为基线系统。使用在ImageNet [9]上预训练的ResNet-50作为骨干网络。Faster R-CNN的实现与第3.3节相同。对于Mask R-CNN,我们遵循[20]中的实现。为了将网络转换为对应的可变形版本,最后一组3×3常规卷积层(在自底向上的计算中靠近输出的部分)被替换为(调制)可变形卷积层,对齐RoI池化(aligned RoIpooling)被替换为(调制)可变形RoI池化。特别地,对于Mask R-CNN,两个分别具有7×7和14×14个bin的对齐RoI池化层被替换为两个具有相同bin数的(调制)可变形RoI池化层。在R-CNN特征模仿中,特征模仿损失仅施加在用于分类的RoI头上(不包括用于掩码估计的RoI头)。对于这两个系统,除图像分辨率外,超参数的选择均遵循最新的Detectron [18]代码库,图像分辨率的设置在此简要说明。在训练和推理中,图像都被缩放至短边为1,000像素。使用5种尺度和3种长宽比的锚框。训练和推理时分别以0.7的非极大值抑制阈值生成2k和1k个区域提议。在SGD训练中,采样256个锚框(正负比例1:1)和512个区域提议(正负比例1:3)用于反向传播梯度。在我们的实验中,网络在8个GPU上训练,每个GPU 2张图像,共训练16个epoch。学习率初始化为0.02,并在第10和第14个epoch时除以10。权重衰减和动量参数分别设为10⁻⁴和0.9。
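The training schedule and input-scaling rules above can be summarized in a short sketch. It assumes 0-indexed epochs (each drop takes effect from the listed epoch index) and rounds resized sides to the nearest pixel; the dictionary keys and function names are hypothetical and are not Detectron configuration keys.

```python
# Hypothetical summary of the settings stated above (names are illustrative only).
SETTINGS = {
    "gpus": 8,
    "images_per_gpu": 2,
    "epochs": 16,
    "base_lr": 0.02,
    "lr_drop_epochs": (10, 14),
    "weight_decay": 1e-4,
    "momentum": 0.9,
    "shorter_side": 1000,
    "anchor_scales": 5,
    "anchor_aspect_ratios": 3,
    "nms_threshold": 0.7,
    "proposals": {"train": 2000, "test": 1000},
    "sampled_anchors": 256,    # positive:negative = 1:1
    "sampled_proposals": 512,  # positive:negative = 1:3
}


def learning_rate(epoch, cfg=SETTINGS):
    """Step schedule: base_lr divided by 10 at each drop epoch (0-indexed epochs assumed)."""
    drops = sum(epoch >= e for e in cfg["lr_drop_epochs"])
    return cfg["base_lr"] * 0.1 ** drops


def resized_shape(height, width, cfg=SETTINGS):
    """Scale so the shorter side equals cfg['shorter_side'], keeping the aspect ratio."""
    scale = cfg["shorter_side"] / min(height, width)
    return round(height * scale), round(width * scale)


if __name__ == "__main__":
    print("images per iteration:", SETTINGS["gpus"] * SETTINGS["images_per_gpu"])  # 16
    print("anchors per location:", SETTINGS["anchor_scales"] * SETTINGS["anchor_aspect_ratios"])  # 15
    print([learning_rate(e) for e in (0, 9, 10, 13, 14, 15)])
    print(resized_shape(480, 640))  # (1000, 1333)
```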

5.2.Enriched Deformation Modeling

The effects of enriched deformation modeling are examined from ablations shown in Table 1. The baseline with regular CNN modules obtains an APbbox score of 34.7% for Faster R-CNN, and APbbox and APmask scores of 36.6% and 32.2%, respectively, for Mask R-CNN. To obtain a DCNv1 baseline, we follow the original Deformable ConvNets paper by replacing the last three layers of 3×3 convolution in the conv5 stage and the aligned RoIpooling layer by their deformable counterparts. This DCNv1 baseline achieves an APbbox score of 38.0% for Faster R-CNN, and APbbox and APmask scores of 40.4% and 35.3%, respectively, for Mask R-CNN. The deformable modules considerably improve accuracy, as observed in [8].

表1中的消融实验考察了增强变形建模的效果。采用常规CNN模块的基线在Faster R-CNN上获得34.7%的APbbox分数,在Mask R-CNN上分别获得36.6%的APbbox分数和32.2%的APmask分数。为了得到DCNv1基线,我们遵循原始的可变形卷积网络论文,将conv5阶段的最后三个3×3卷积层以及对齐RoI池化层替换为对应的可变形版本。该DCNv1基线在Faster R-CNN上达到38.0%的APbbox分数,在Mask R-CNN上的APbbox和APmask分数分别为40.4%和35.3%。正如[8]中所观察到的,可变形模块显著提高了精度。

By replacing more 3 × 3 regular conv layers by their deformable counterparts, the accuracy of both Faster R-CNN and Mask R-CNN improves steadily, with gains between 2.0% and 3.0% in APbbox and APmask scores when the conv layers in conv3-conv5 are replaced. No additional improvement is observed on the COCO benchmark by further replacing the regular conv layers in the conv2 stage. By upgrading the deformable modules to modulated deformable modules, we obtain further gains between 0.3% and 0.7% in APbbox and APmask scores. In total, enriching the deformation modeling capability yields a 41.7% APbbox score on Faster R-CNN, which is 3.7% higher than that of the DCNv1 baseline. On Mask R-CNN, 43.1% APbbox and 37.3% APmask scores are obtained with the enriched deformation modeling, which are respectively 2.7% and 2.0% higher than those of the DCNv1 baseline. Note that the added parameters and FLOPs for enriching the deformation modeling are minor compared to those of the overall networks.

将更多3×3常规卷积层替换为可变形版本后,Faster R-CNN和Mask R-CNN的精度稳步提高:当conv3-conv5中的卷积层都被替换时,APbbox和APmask分数提升了2.0%到3.0%。进一步替换conv2阶段的常规卷积层,在COCO基准上未观察到额外提升。将可变形模块升级为调制可变形模块后,APbbox和APmask分数进一步提升0.3%到0.7%。总的来说,增强变形建模能力使Faster R-CNN获得41.7%的APbbox分数,比DCNv1基线高3.7%。在Mask R-CNN上,增强变形建模获得了43.1%的APbbox和37.3%的APmask分数,分别比DCNv1基线高2.7%和2.0%。请注意,与整个网络相比,增强变形建模所增加的参数量和FLOPs很小。
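The improvements quoted above are differences between absolute COCO scores reported in this section. A quick arithmetic check using only those numbers (the dictionary layout and the `gain` helper are just for illustration):

```python
# AP scores (%) as reported in the text above.
REPORTED = {
    ("Faster R-CNN", "APbbox"): {"regular": 34.7, "DCNv1": 38.0, "enriched": 41.7},
    ("Mask R-CNN", "APbbox"): {"regular": 36.6, "DCNv1": 40.4, "enriched": 43.1},
    ("Mask R-CNN", "APmask"): {"regular": 32.2, "DCNv1": 35.3, "enriched": 37.3},
}


def gain(system, metric, new, old):
    """Absolute improvement in AP points, rounded to the reported precision."""
    scores = REPORTED[(system, metric)]
    return round(scores[new] - scores[old], 1)


if __name__ == "__main__":
    for system, metric in REPORTED:
        print(system, metric,
              "DCNv1 vs regular:", gain(system, metric, "DCNv1", "regular"),
              "enriched vs DCNv1:", gain(system, metric, "enriched", "DCNv1"))
```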

As shown in Figure 1 (b)∼(c), the spatial support of the enriched deformable modeling exhibits better adaptation to image content than that of DCNv1.

Table 2 presents the results at input image resolution of 800 pixels, which follows the default setting in the Detectron code base. The same conclusion holds.

如图1(b)〜(c)所示,与DCNv1相比,增强变形建模的空间支持对图像内容表现出更好的适应性。

表2给出了输入图像分辨率为800像素时的结果,这遵循Detectron代码库中的默认设置。同样的结论依然成立。

5.3.R-CNN Feature Mimicking

Ablations of the design choices in R-CNN feature mimicking are shown in Table 3. With the enriched deformation modeling, R-CNN feature mimicking further improves the APbbox and APmask scores by about 1% to 1.4% in both the Faster R-CNN and Mask R-CNN systems. Mimicking features of positive boxes on the object foreground is found to be particularly effective, while the results when mimicking all the boxes or just negative boxes are much lower. As shown in Figure 2 (c)∼(d), feature mimicking can help the network features better focus on the object foreground, which is beneficial for positive boxes. For the negative boxes, the network tends to exploit more context information (see Figure 2), where feature mimicking would not be helpful.

表3给出了R-CNN特征模仿中各设计选择的消融实验。在增强变形建模的基础上,R-CNN特征模仿在Faster R-CNN和Mask R-CNN系统中将APbbox和APmask分数进一步提高了约1%至1.4%。实验发现,模仿物体前景上正样本框的特征特别有效,而模仿所有框或仅模仿负样本框时的结果要低得多。如图2(c)〜(d)所示,特征模仿可以帮助网络特征更好地聚焦于物体前景,这对正样本框是有益的。对于负样本框,网络倾向于利用更多的上下文信息(见图2),此时特征模仿并无帮助。
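Which boxes count as "positive" is decided by overlap with ground truth. A minimal sketch, assuming continuous (x1, y1, x2, y2) coordinates and a foreground IoU threshold of 0.5 (a common Fast/Faster R-CNN default, not a value stated in this section); the resulting flags could drive a positive-only mimic loss. Function names are hypothetical.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def positive_flags(rois, gt_boxes, fg_thresh=0.5):
    """Flag each RoI whose best IoU with any ground-truth box reaches fg_thresh."""
    return [max((iou(r, g) for g in gt_boxes), default=0.0) >= fg_thresh for r in rois]


if __name__ == "__main__":
    gt = [(0, 0, 10, 10)]
    rois = [(0, 0, 10, 10), (5, 0, 15, 10), (20, 20, 30, 30)]
    print(positive_flags(rois, gt))  # [True, False, False]
```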

We also apply R-CNN feature mimicking to regular ConvNets without any deformable layers. Almost no accuracy gains are observed. The visualized spatial support regions are shown in Figure 2 (e), which are not focused on the object foreground even with the auxiliary mimic loss. This is likely because it is beyond the representation capability of regular ConvNets to focus features on the object foreground, and thus this cannot be learned.

我们还将R-CNN特征模仿应用于不含任何可变形层的常规ConvNets,几乎没有观察到精度提升。可视化的空间支持区域如图2(e)所示,即使加入辅助模仿损失,它们也没有聚焦在物体前景上。这很可能是因为将特征聚焦在物体前景上超出了常规ConvNets的表示能力,因此无法学到。

6.Conclusion

Despite the superior performance of Deformable ConvNets in modeling geometric variations, its spatial support extends well beyond the region of interest, causing features to be influenced by irrelevant image content. In this paper, we present a reformulation of Deformable ConvNets which improves its ability to focus on pertinent image regions, through increased modeling power and stronger training. Significant performance gains are obtained on the COCO benchmark for object detection and instance segmentation.

尽管可变形卷积网络在建模几何变化方面性能优越,但其空间支持远远超出了感兴趣区域,导致特征受到不相关图像内容的影响。在本文中,我们提出了可变形卷积网络的一种新表述,通过增强建模能力和更强的训练,提高了其聚焦于相关图像区域的能力。在COCO目标检测和实例分割基准上获得了显著的性能提升。

A1.Error-bounded Image Saliency

In existing research on image saliency [41, 44, 13, 7], a widely utilized formulation is as follows. Given an input image $I$ and a trained network $N$, let $N(I)$ denote the network response on the original image. A binary mask $M$, which is of the same spatial dimension as $I$, can be applied on the image as $I \odot M$. For an image pixel $p$ where $M(p) = 1$, its content is kept in the masked image. Meanwhile, if $M(p) = 0$, the content is set to 0 in the masked image. The saliency map is obtained by optimizing the loss function

$$L(M) = \|N(I) - N(I \odot M)\|_2 + \lambda \|M\|_1$$

as a function of $M$, where $\lambda$ is the hyper-parameter balancing the output reconstruction error $\|N(I) - N(I \odot M)\|_2$ and the salient area loss $\|M\|_1$. The optimized mask $M$ is called the saliency map. The problem is that it is hard to obtain the salient region at a specified reconstruction error. Thus, it is hard to compare the salient regions from two networks at the same reconstruction error.

在现有的图像显著性研究[41,44,13,7]中,一种被广泛使用的表述如下。给定输入图像$I$和训练好的网络$N$,令$N(I)$表示网络在原始图像上的响应。与$I$具有相同空间尺寸的二值掩码$M$可以通过$I \odot M$作用于图像。对于$M(p)=1$的图像像素$p$,其内容在掩蔽图像中保留;而若$M(p)=0$,则该内容在掩蔽图像中置为0。通过将损失函数$L(M) = \|N(I) - N(I \odot M)\|_2 + \lambda \|M\|_1$作为$M$的函数进行优化来得到显著图,其中$\lambda$是平衡输出重建误差$\|N(I) - N(I \odot M)\|_2$与显著面积损失$\|M\|_1$的超参数。优化得到的掩码$M$称为显著图。问题在于很难在指定的重建误差下得到显著区域,因此很难在相同的重建误差下比较两个网络的显著区域。
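To see why a λ-weighted objective makes comparisons at a fixed error difficult, the toy below brute-forces all binary masks for a three-pixel "image" and a hypothetical network that sums its inputs, using the loss $\|N(I) - N(I \odot M)\|_2 + \lambda \|M\|_1$. Changing λ changes the reconstruction error that the optimal mask ends up with, so saliency maps optimized with the same λ need not sit at the same error. The image, network and λ values are all illustrative.

```python
import itertools
import math


def toy_network(x):
    """Hypothetical one-output 'network': the sum of its inputs."""
    return [sum(x)]


def masked(image, mask):
    """Element-wise product I ⊙ M for flattened lists."""
    return [p * m for p, m in zip(image, mask)]


def reconstruction_error(network, image, mask):
    """L2 norm of the change in network output caused by masking."""
    full, part = network(image), network(masked(image, mask))
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(full, part)))


def best_mask(network, image, lam):
    """Exhaustively minimise ||N(I) - N(I ⊙ M)||_2 + lam * ||M||_1 over binary masks."""
    candidates = itertools.product((0, 1), repeat=len(image))
    return min(candidates,
               key=lambda m: reconstruction_error(network, image, m) + lam * sum(m))


if __name__ == "__main__":
    image = [1.0, 2.0, 3.0]
    for lam in (0.1, 2.5):
        m = best_mask(toy_network, image, lam)
        print(lam, m, reconstruction_error(toy_network, image, m))
```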

We seek to strictly constrain the reconstruction loss in the image saliency formulation, so as to facilitate comparison among the salient regions derived from different networks. Thus, the optimization problem is slightly modified to be

$$\min_M \|M\|_1 \quad \text{s.t.} \quad L_{rec}(N(I), N(I \odot M)) < \epsilon, \qquad (4)$$

我们希望在图像显著性表述中严格约束重建损失,以便比较由不同网络得到的显著区域。因此,优化问题被稍作修改为:

$$\min_M \|M\|_1 \quad \text{s.t.} \quad L_{rec}(N(I), N(I \odot M)) < \epsilon, \qquad (4)$$

where $L_{rec}(N(I), N(I \odot M))$ denotes an arbitrary form of reconstruction loss, which is strictly bounded by a preset threshold $\epsilon$. We term the collection of image pixels $\{p \mid M(p) = 1\}$ in the optimized mask the visual support region.

其中$L_{rec}(N(I), N(I \odot M))$表示任意形式的重建损失,它被预设阈值$\epsilon$严格约束。我们将优化后掩码中满足$\{p \mid M(p) = 1\}$的图像像素集合称为视觉支持区域。
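Under the error-bounded formulation, the salient area is minimised subject to a hard bound ε on the reconstruction loss. For a tiny input this can still be solved exactly by enumeration, which also shows why a heuristic is needed in practice: the search space has $2^n$ masks for $n$ pixels. The sketch assumes the reconstruction loss is supplied as a Python function of the mask and applies the bound strictly (`< eps`); the summing "network", the relative-error loss and all names are hypothetical.

```python
import itertools

IMAGE = [1.0, 2.0, 3.0]
FULL_RESPONSE = sum(IMAGE)  # output of a hypothetical summing "network"


def relative_error(mask):
    """Relative change of the summed output after masking (a stand-in for L_rec)."""
    kept = sum(p * m for p, m in zip(IMAGE, mask))
    return abs(FULL_RESPONSE - kept) / FULL_RESPONSE


def min_area_mask(num_pixels, rec_loss, eps):
    """Exact solution of min ||M||_1 s.t. rec_loss(M) < eps by enumerating all 2**n masks.

    Returns the first feasible mask of minimal area, or None if no mask is feasible."""
    best = None
    for mask in itertools.product((0, 1), repeat=num_pixels):
        if rec_loss(mask) < eps and (best is None or sum(mask) < sum(best)):
            best = mask
    return best


if __name__ == "__main__":
    for eps in (0.2, 0.6):
        print(eps, min_area_mask(len(IMAGE), relative_error, eps))
```

Unlike the λ-weighted loss, every mask returned here is guaranteed to meet the same error bound, which is what makes regions from different networks comparable.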

The formulation in Eq. (4) is hard to optimize, due to the hard reconstruction error constraint it introduces. Here we develop a heuristic two-step procedure to reduce the search space in deriving the visual support region. At the first step, the visual support region is constrained to be a rectangle of arbitrary shape. The rectangle is centered on the node to be interpreted. It is initialized with an area of 0 and is enlarged gradually (at even area increments). The enlargement stops once the reconstruction error constraint is satisfied. At the second step, a pixel-level visual support region is derived within the rectangular area. The image is segmented into super-pixels by the algorithm in [1], so as to restrict the solution space. Initially, all the super-pixels within the rectangle are counted in the visual support region (taking mask value 1). Then the super-pixels are gradually removed in a greedy manner. At each iteration, the super-pixel causing the smallest rise in reconstruction error is removed. The iteration stops when removing any further super-pixel would violate the constraint.

由于引入了硬性的重建误差约束,式(4)很难优化。在这里,我们设计了一个启发式的两步过程,以缩小推导视觉支持区域时的搜索空间。第一步,将视觉支持区域约束为任意形状的矩形。矩形以待解释的节点为中心,初始面积为0,并逐渐扩大(按相等的面积增量)。当满足重建误差约束时停止扩大。第二步,在矩形区域内推导像素级的视觉支持区域。利用[1]中的算法将图像分割为超像素,以限制解空间。初始时,矩形内的所有超像素都计入视觉支持区域(掩码值取1)。然后以贪心方式逐步移除超像素:每次迭代移除使重建误差上升最小的超像素。当再移除任何超像素都会违反约束时,迭代停止。
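A compact sketch of the two-step procedure under simplifying assumptions: the node's reconstruction error is available as a function of the set of kept pixels, the rectangle is a centred square grown by one pixel of half-width per step (rather than by even area increments), and super-pixels are given as precomputed pixel sets standing in for the segmentation of [1]. All names are hypothetical.

```python
def square(h, w, center, r):
    """Pixels of the (clipped) square of half-width r centred on `center`."""
    cy, cx = center
    return {(y, x)
            for y in range(max(0, cy - r), min(h, cy + r + 1))
            for x in range(max(0, cx - r), min(w, cx + r + 1))}


def visual_support_region(h, w, center, superpixels, error_fn, eps):
    """Two-step heuristic: grow a square until error_fn(kept) < eps, then greedily
    drop the super-pixel whose removal raises the error least while the bound holds."""
    # Step 1: enlarge the square until the reconstruction constraint is satisfied.
    rect = square(h, w, center, max(h, w))  # fallback: the whole image
    for r in range(max(h, w)):
        candidate = square(h, w, center, r)
        if error_fn(candidate) < eps:
            rect = candidate
            break
    # Step 2: start from all super-pixels inside the square, then remove greedily.
    regions = [sp & rect for sp in superpixels if sp & rect]
    kept = list(range(len(regions)))
    while kept:
        best = None  # (error after removal, index of the removed region)
        for i in kept:
            trial = set().union(*(regions[j] for j in kept if j != i))
            err = error_fn(trial)
            if best is None or err < best[0]:
                best = (err, i)
        if best[0] >= eps:  # even the cheapest removal would violate the bound
            break
        kept.remove(best[1])
    return set().union(*(regions[j] for j in kept))


if __name__ == "__main__":
    important = {(2, 2), (2, 3)}  # pixels this toy "node" depends on

    def toy_error(kept):
        return len(important - kept) / len(important)

    columns = [{(y, c) for y in range(5)} for c in range(5)]  # toy super-pixels
    print(sorted(visual_support_region(5, 5, (2, 2), columns, toy_error, 0.1)))
```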

We apply the two-step procedure to visualize network nodes in the Faster R-CNN object detector [33]. We visualize both feature map nodes shared on the whole image, and the 2fc node in the per-RoI detection head, which is directly followed by the classification and the bounding box regression branches. For image-wise feature map nodes (at a certain location), the rectangle in the two-step procedure is a square. For RoI-wise feature nodes, the rectangle has the same aspect ratio as the input RoI. For both image-wise and RoI-wise nodes, the reconstruction loss is one minus the cosine similarity between the feature vectors derived from the masked and original images. The error upper bound is set to 0.1.

我们应用该两步过程来可视化Faster R-CNN目标检测器[33]中的网络节点。我们既可视化在整幅图像上共享的特征图节点,也可视化逐RoI检测头中的2fc节点,该节点之后直接连接分类分支和边界框回归分支。对于图像级特征图节点(位于某一位置),两步过程中使用正方形区域。对于RoI级特征节点,矩形与输入RoI具有相同的长宽比。对于图像级和RoI级节点,重建损失均为1减去由掩蔽图像和原始图像得到的特征向量之间的余弦相似度。误差上界设为0.1。
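The node-level reconstruction loss described above (one minus cosine similarity, with an upper bound of 0.1) can be written as a small function that would plug into an error-bounded search. Vectors are plain lists, the bound is applied strictly, and the names (including the zero-vector convention) are illustrative assumptions.

```python
import math

ERROR_BOUND = 0.1  # upper bound on the reconstruction loss, as stated above


def cosine_reconstruction_loss(original, masked):
    """1 - cos(f(I), f(I ⊙ M)) between feature vectors of the original and masked images."""
    dot = sum(a * b for a, b in zip(original, masked))
    norms = math.sqrt(sum(a * a for a in original)) * math.sqrt(sum(b * b for b in masked))
    if norms == 0.0:
        return 1.0  # assumption: treat an all-zero feature as maximally dissimilar
    return 1.0 - dot / norms


def within_bound(original, masked, bound=ERROR_BOUND):
    """True if masking keeps the node response within the error bound."""
    return cosine_reconstruction_loss(original, masked) < bound


if __name__ == "__main__":
    print(within_bound([1.0, 0.0], [3.0, 0.2]))  # True: direction almost unchanged
    print(within_bound([1.0, 0.0], [1.0, 1.0]))  # False: about 0.29 loss
```

Because the loss depends only on direction, uniformly rescaling a node's feature vector by masking does not count as a reconstruction error.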