ROS系统中从零开始部署YoloV4目标检测算法(3种方式)

目录

1 封装darknet框架为dll方式

2 封装TensorFlow或pytorch框架为dll方式

3 调用OpenCV中DNN模块方式

3.0 需求说明

3.1 环境搭建

3.2 创建catkin空间

3.3 创建package

3.4 package中源码

3.4.1 ①main.cpp

3.4.2 ②Detection.h

3.4.3 ③Detection.cpp

3.4.4 ④CMakeLists.txt

3.4.5 ⑤package.xml

3.5 修改ROS系统中cv_bridge模块的配置文件

3.6 重新编译catkin空间

3.7 测试

1 封装darknet框架为dll方式

暂略

2 封装TensorFlow或pytorch框架为dll方式

暂略

3 调用OpenCV中DNN模块方式

3.0 需求说明

在ROS系统下创建一个package目标检测程序,订阅realsense传感器的RGB流,进行行人检测,然后将检测结果信息发布出去。

包和节点名称定义:

- 该目标检测package名称为:detection_pkg

- 开展目标检测的节点名称为:yolo4

- 发布检测结果的节点名称为:yolo4_result

接口定义:

目标检测结果发布的格式为:(string类型)类别序号,置信度,目标框的xmin,目标框的ymin,目标框的width,目标框的height@类别序号,置信度,目标框的xmin,目标框的ymin,目标框的width,目标框的height@............

接口解释:

- 单帧图像中,可能有多个人,每个检测人框的结果以@分隔。

- “人”的类别序号目前设定为0,后续开展其他目标检测时,新增类别序号后续再定。

- 置信度的取值范围:[0, 1]

- 重要备注:目前xy的坐标,人在图像边缘情况出现xy为负值情况,该BUG后续将会去解决。

3.1 环境搭建

- 关于OpenCV4.5.1编译安装、ROS melodic版安装等见我之前的《ubuntu + oepncv + PCL + realsenseSDK + ROS + OpenVino开发环境搭建》https://blog.csdn.net/weixin_42118657/article/details/114527831

- yolov4算法的模型文件、cfg文件、coco.names文件可百度搜索下载,具体文件摆放位置见下文源码。

3.2 创建catkin空间

$ mkdir -p ~/catkin_ws/src #直接创建了catkin_ws文件夹和src子文件夹。

$ cd ~/catkin_ws/

$ catkin_make #初始化工作空间,此命令必须在工作空间路径下执行。备注:catkin_ws是工作空间名字,可自定义。

重要备注:创建工作空间后,还需要给工作空间进行注册,注册的目的是让各种命令能够找到这个工作空间(相当于win系统中设定path)。

- 注册方法1(注册一次爽一次):每次新建一个终端时,都先执行如下命令告诉此终端我在哪:source ~/catkin_ws/devel/setup.bash #catkin_ws是改你空间名字。

- 注册方法2(一劳永逸):

(base) ym@ym-2021:~$ echo "source ~/catkin_test/catkin_2/devel/setup.bash" >> ~/.bashrc

(base) ym@ym-2021:~$ source ~/.bashrc备注:上述catkin_test是我创建的普通文件夹,catkin_2是我创建的Catkin工作空间。

3.3 创建package

- 先cd到工作空间的src路径内,然后创建package,语法规则是:catkin_create_pkg packageYY dependsXX

- 其中packageYY是包名,dependsXX是依赖的包名,可以依赖多个软件包。

- 例如,新建一个package叫做detection

_pkg,依赖roscpp、rospy、std_msgs(常用依赖)。-

$ catkin_create_pkg detection_pkg roscpp rospy std_msgs

-

3.4 package中源码

程序结构如下:(在/home/ym/catkin_test/catkin_2/src/detection_pkg 路径下)

- ①main.cpp(自己的程序)

- ②Detection.h(自己的程序)

- ③Detection.cpp(自己的程序)

- ④CMakeLists.txt(创建package时自动生成的,等下需要改造内容)

- ⑤package.xml(创建package时自动生成的,等下需要改造内容)

- include 文件夹(创建package时自动生成的空文件夹)

- src 文件夹(创建package时自动生成的空文件夹)

3.4.1 ①main.cpp

#include "Detection.h"

#include

#include //cv_bridge是在ROS图像消息和OpenCV图像之间进行转换的一个功能包。如提供sensor_msgs

#include

#include

ros::Publisher pub;

#include "std_msgs/Int8.h"

#include "std_msgs/String.h"

using namespace std;

using namespace cv;

using namespace dnn;

Detection detection = Detection();

void imageCallback(const sensor_msgs::ImageConstPtr &msg)

{

cv::Mat img;

cv_bridge::CvImageConstPtr cv_ptr;

cv_ptr = cv_bridge::toCvShare(msg, "bgr8");

img = cv_ptr->image;

detection.Initialize(img.cols, img.rows);

detection.Detecting(img);

imshow("Show RgbImage", detection.GetFrame());

char ch = waitKey(10); // 一定要加这个,不然不显示窗口

string output = detection.GetResult();

std_msgs::String out_msg;

std::stringstream ss;

ss << output;

out_msg.data = ss.str();

pub.publish(out_msg);

}

int main(int argc, char **argv)

{

//创建node第一步需要用到的函数

ros::init(argc, argv, "yolo4"); //第3个参数为本节点名,

//ros::NodeHandle : 和topic、service、param等交互的公共接口

//创建ros::NodeHandle对象,也就是节点的句柄,它可以用来创建Publisher、Subscriber以及做其他事情。

//句柄(Handle)这个概念可以理解为一个“把手”,你握住了门把手,就可以很容易把整扇门拉开,而不必关心门是

//什么样子。NodeHandle就是对节点资源的描述,有了它你就可以操作这个节点了,比如为程序提供服务、监听某个topic上的消息、访问和修改param等等。

ros::NodeHandle nd; //实例化句柄,初始化node

// Create a ROS subscriber for the input point cloud

ros::Subscriber sub = nd.subscribe("/realsense_camera0/color_image", 1, imageCallback); //第二个参数:缓存队列大小,以防我们处理消息的速度不够快

std::cout << "sub:" << sub << std::endl;

// Create a ROS publisher for the output

pub = nd.advertise("yolo4_result", 1);

ros::spin();

return 0;

} 3.4.2 ②Detection.h

#pragma once

#ifndef __DETECTION_H__

#define __DETECTION_H__

#include

#include

#include

#include

#include

#include

#include

using namespace std;

using namespace cv;

using namespace dnn;

class Detection

{

public:

//构造、析构函数

Detection();

~Detection();

//初始化函数

void Initialize(int width, int height);

//读取网络模型

void ReadModel();

//行人与车辆检测

bool Detecting(Mat frame);

//获取网络输出层名称

vector GetOutputsNames();

//对输出进行处理,使用NMS选出最合适的框

void PostProcess();

//画检测结果

void Drawer();

//画出检测框和相关信息

void DrawBoxes(int classId, float conf, int left, int top, int right, int bottom);

//获取Mat对象

Mat GetFrame();

//获取检测结果字符串

string GetResult();

//获取图像宽度

int GetResWidth();

//获取图像高度

int GetResHeight();

private:

//图像属性

int m_width; //图像宽度

int m_height; //图像高度

//网络处理相关

Net m_model; //网络模型

Mat m_frame; //每一帧

Mat m_blob; //从每一帧创建一个4D的blob用于网络输入

vector m_outs; //网络输出

vector m_confs; //置信度

vector m_boxes; //检测框左上角坐标、宽、高

vector m_classIds; //类别id

vector m_perfIndx; //非极大阈值处理后边界框的下标

//检测超参数

int m_inpWidth; //网络输入图像宽度

int m_inpHeight; //网络输入图像高度

float m_confThro; //置信度阈值

float m_NMSThro; //NMS非极大抑制阈值

vector m_classes; //类别名称

private:

//内存释放

void Dump();

};

#endif 3.4.3 ③Detection.cpp

#include "Detection.h"

using namespace cv;

using namespace dnn;

//构造函数,成员变量初始化

Detection::Detection()

{

//图像属性

m_width = 0;

m_height = 0;

m_inpWidth = 416;

m_inpHeight = 416;

//其他成员变量

m_confThro = 0.25;

m_NMSThro = 0.4;

//网络模型加载

ReadModel();

}

//析构函数

Detection::~Detection()

{

//Dump();

}

//内存释放

void Detection::Dump()

{

//网络输出相关清零

m_outs.clear();

m_boxes.clear();

m_confs.clear();

m_classIds.clear();

m_perfIndx.clear();

}

//初始化函数

void Detection::Initialize(int width, int height)

{

//图像属性

m_width = width;

m_height = height;

}

//读取网络模型和类别

void Detection::ReadModel()

{

string classesFile = "/data/coco.names";

String modelConfig = "/data/yolov4.cfg";

String modelWeights = "/data/yolov4.weights";

//加载类别名

ifstream ifs(classesFile.c_str());

string line;

while (getline(ifs, line))

m_classes.push_back(line);

//加载网络模型

m_model = readNetFromDarknet(modelConfig, modelWeights);

m_model.setPreferableBackend(DNN_BACKEND_OPENCV);

m_model.setPreferableTarget(DNN_TARGET_OPENCL);

}

//检测

bool Detection::Detecting(Mat frame)

{

Dump(); //各缓存变量的内存释放

m_frame = frame.clone();

//创建4D的blob用于网络输入

blobFromImage(m_frame, m_blob, 1 / 255.0, Size(m_inpWidth, m_inpHeight), Scalar(0, 0, 0), true, false);

//设置网络输入

m_model.setInput(m_blob);

//前向预测得到网络输出,forward需要知道输出层,这里用了一个函数找到输出层

m_model.forward(m_outs, GetOutputsNames());

//使用非极大抑制NMS删除置信度较低的边界框

PostProcess();

//画检测框

Drawer();

return true;

}

//获取网络输出层名称

vector Detection::GetOutputsNames()

{

static vector names;

if (names.empty())

{

//得到输出层索引号

vector outLayers = m_model.getUnconnectedOutLayers();

//得到网络中所有层名称

vector layersNames = m_model.getLayerNames();

//获取输出层名称

names.resize(outLayers.size());

for (int i = 0; i < outLayers.size(); ++i)

names[i] = layersNames[outLayers[i] - 1];

}

return names;

}

//使用非极大抑制NMS去除置信度较低的边界框

void Detection::PostProcess()

{

for (int num = 0; num < m_outs.size(); num++)

{

Point Position;

double confidence;

//得到每个输出的数据

float *data = (float *)m_outs[num].data;

for (int j = 0; j < m_outs[num].rows; j++, data += m_outs[num].cols)

{

//得到该输出的所有类别的

Mat scores = m_outs[num].row(j).colRange(5, m_outs[num].cols);

//获取最大置信度对应的值和位置

minMaxLoc(scores, 0, &confidence, 0, &Position);

//对置信度大于阈值的边界框进行相关计算和保存

if (confidence > m_confThro)

{

//data[0],data[1],data[2],data[3]都是相对于原图像的比例

int centerX = (int)(data[0] * m_width);

int centerY = (int)(data[1] * m_height);

int width = (int)(data[2] * m_width);

int height = (int)(data[3] * m_height);

int left = centerX - width / 2;

int top = centerY - height / 2;

//保存信息

m_classIds.push_back(Position.x);

m_confs.push_back((float)confidence);

m_boxes.push_back(Rect(left, top, width, height));

}

}

}

//非极大值抑制,以消除具有较低置信度的冗余重叠框

NMSBoxes(m_boxes, m_confs, m_confThro, m_NMSThro, m_perfIndx);

}

//画出检测结果

void Detection::Drawer()

{

//获取所有最佳检测框信息

for (int i = 0; i < m_perfIndx.size(); i++)

{

int idx = m_perfIndx[i];

Rect box = m_boxes[idx];

// std::cout << "m_classIds[idx]"<< m_classIds[idx] << std::endl;

// std::cout << "m_confs[idx]"<< m_confs[idx] << std::endl;

// std::cout << "box.x"<< box.x << std::endl;

// std::cout << "box.y"<< box.y << std::endl;

// std::cout << "box.width"<< box.width << std::endl;

// std::cout << "box.height"<< box.height << std::endl;

if (m_classIds[idx] == 0) //仅person类

{

DrawBoxes(m_classIds[idx], m_confs[idx], box.x, box.y,

box.x + box.width, box.y + box.height);

}

// DrawBoxes(m_classIds[idx], m_confs[idx], box.x, box.y,

// box.x + box.width, box.y + box.height);

}

}

//画出检测框和相关信息

void Detection::DrawBoxes(int classId, float conf, int left, int top, int right, int bottom)

{

//画检测框

rectangle(m_frame, Point(left, top), Point(right, bottom), Scalar(255, 178, 50), 3);

//该检测框对应的类别和置信度

string label = format("%.2f", conf);

if (!m_classes.empty())

{

CV_Assert(classId < (int)m_classes.size());

label = m_classes[classId] + ":" + label;

}

//将标签显示在检测框顶部

int baseLine;

Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

top = max(top, labelSize.height);

rectangle(m_frame, Point(left, top - round(1.5 * labelSize.height)), Point(left + round(1.5 * labelSize.width), top + baseLine), Scalar(255, 255, 255), FILLED);

putText(m_frame, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 0.75, Scalar(0, 0, 0), 1);

}

//获取Mat对象

Mat Detection::GetFrame()

{

return m_frame;

}

//返回检测结果

string Detection::GetResult()

{

string result = "";

//获取所有最佳检测框信息

for (int i = 0; i < m_perfIndx.size(); i++)

{

int idx = m_perfIndx[i];

Rect box = m_boxes[idx];

// std::cout << "m_classIds[idx]"<< m_classIds[idx] << std::endl;

// std::cout << "m_confs[idx]"<< m_confs[idx] << std::endl;

// std::cout << "box.x"<< box.x << std::endl;

// std::cout << "box.y"<< box.y << std::endl;

// std::cout << "box.width"<< box.width << std::endl;

// std::cout << "box.height"<< box.height << std::endl;

if (m_classIds[idx] == 0) //仅person类

{

stringstream strStream;

//int、float类型都可以塞到stringstream中

strStream << m_classIds[idx] << "," << m_confs[idx] << "," << box.x << "," << box.y << "," << box.width << "," << box.height;

result = strStream.str();

}

}

return result;

}

//获取结果图像宽度

int Detection::GetResWidth()

{

return m_width;

}

//获取结果图像高度

int Detection::GetResHeight()

{

return m_height;

}

3.4.4 ④CMakeLists.txt

这部分主要新增如下几点:

-

开头几行find_package中新增“cv_bridge”

-

新增OpenCV部分

cmake_minimum_required(VERSION 3.0.2)

project(detection_pkg)

## Compile as C++11, supported in ROS Kinetic and newer

# add_compile_options(-std=c++11)

## Find catkin macros and libraries

## if COMPONENTS list like find_package(catkin REQUIRED COMPONENTS xyz)

## is used, also find other catkin packages

find_package(catkin REQUIRED COMPONENTS

roscpp

rospy

std_msgs

cv_bridge

)

## System dependencies are found with CMake's conventions

# find_package(Boost REQUIRED COMPONENTS system)

## Uncomment this if the package has a setup.py. This macro ensures

## modules and global scripts declared therein get installed

## See http://ros.org/doc/api/catkin/html/user_guide/setup_dot_py.html

# catkin_python_setup()

################################################

## Declare ROS messages, services and actions ##

################################################

## To declare and build messages, services or actions from within this

## package, follow these steps:

## * Let MSG_DEP_SET be the set of packages whose message types you use in

## your messages/services/actions (e.g. std_msgs, actionlib_msgs, ...).

## * In the file package.xml:

## * add a build_depend tag for "message_generation"

## * add a build_depend and a exec_depend tag for each package in MSG_DEP_SET

## * If MSG_DEP_SET isn't empty the following dependency has been pulled in

## but can be declared for certainty nonetheless:

## * add a exec_depend tag for "message_runtime"

## * In this file (CMakeLists.txt):

## * add "message_generation" and every package in MSG_DEP_SET to

## find_package(catkin REQUIRED COMPONENTS ...)

## * add "message_runtime" and every package in MSG_DEP_SET to

## catkin_package(CATKIN_DEPENDS ...)

## * uncomment the add_*_files sections below as needed

## and list every .msg/.srv/.action file to be processed

## * uncomment the generate_messages entry below

## * add every package in MSG_DEP_SET to generate_messages(DEPENDENCIES ...)

## Generate messages in the 'msg' folder

# add_message_files(

# FILES

# Message1.msg

# Message2.msg

# )

## Generate services in the 'srv' folder

# add_service_files(

# FILES

# Service1.srv

# Service2.srv

# )

## Generate actions in the 'action' folder

# add_action_files(

# FILES

# Action1.action

# Action2.action

# )

## Generate added messages and services with any dependencies listed here

# generate_messages(

# DEPENDENCIES

# std_msgs

# )

################################################

## Declare ROS dynamic reconfigure parameters ##

################################################

## To declare and build dynamic reconfigure parameters within this

## package, follow these steps:

## * In the file package.xml:

## * add a build_depend and a exec_depend tag for "dynamic_reconfigure"

## * In this file (CMakeLists.txt):

## * add "dynamic_reconfigure" to

## find_package(catkin REQUIRED COMPONENTS ...)

## * uncomment the "generate_dynamic_reconfigure_options" section below

## and list every .cfg file to be processed

## Generate dynamic reconfigure parameters in the 'cfg' folder

# generate_dynamic_reconfigure_options(

# cfg/DynReconf1.cfg

# cfg/DynReconf2.cfg

# )

###################################

## catkin specific configuration ##

###################################

## The catkin_package macro generates cmake config files for your package

## Declare things to be passed to dependent projects

## INCLUDE_DIRS: uncomment this if your package contains header files

## LIBRARIES: libraries you create in this project that dependent projects also need

## CATKIN_DEPENDS: catkin_packages dependent projects also need

## DEPENDS: system dependencies of this project that dependent projects also need

catkin_package(

# INCLUDE_DIRS include

# LIBRARIES detection_pkg

# CATKIN_DEPENDS roscpp rospy std_msgs

# DEPENDS system_lib

)

###########

## Build ##

###########

## Specify additional locations of header files

## Your package locations should be listed before other locations

include_directories(

# include

${catkin_INCLUDE_DIRS}

)

## Declare a C++ library

# add_library(${PROJECT_NAME}

# src/${PROJECT_NAME}/detection_pkg.cpp

# )

## Add cmake target dependencies of the library

## as an example, code may need to be generated before libraries

## either from message generation or dynamic reconfigure

# add_dependencies(${PROJECT_NAME} ${${PROJECT_NAME}_EXPORTED_TARGETS} ${catkin_EXPORTED_TARGETS})

## Declare a C++ executable

## With catkin_make all packages are built within a single CMake context

## The recommended prefix ensures that target names across packages don't collide

# add_executable(${PROJECT_NAME}_node src/detection_pkg_node.cpp)

## Rename C++ executable without prefix

## The above recommended prefix causes long target names, the following renames the

## target back to the shorter version for ease of user use

## e.g. "rosrun someones_pkg node" instead of "rosrun someones_pkg someones_pkg_node"

# set_target_properties(${PROJECT_NAME}_node PROPERTIES OUTPUT_NAME node PREFIX "")

## Add cmake target dependencies of the executable

## same as for the library above

# add_dependencies(${PROJECT_NAME}_node ${${PROJECT_NAME}_EXPORTED_TARGETS} ${catkin_EXPORTED_TARGETS})

## Specify libraries to link a library or executable target against

# target_link_libraries(${PROJECT_NAME}_node

# ${catkin_LIBRARIES}

# )

#############

## Install ##

#############

# all install targets should use catkin DESTINATION variables

# See http://ros.org/doc/api/catkin/html/adv_user_guide/variables.html

## Mark executable scripts (Python etc.) for installation

## in contrast to setup.py, you can choose the destination

# catkin_install_python(PROGRAMS

# scripts/my_python_script

# DESTINATION ${CATKIN_PACKAGE_BIN_DESTINATION}

# )

## Mark executables for installation

## See http://docs.ros.org/melodic/api/catkin/html/howto/format1/building_executables.html

# install(TARGETS ${PROJECT_NAME}_node

# RUNTIME DESTINATION ${CATKIN_PACKAGE_BIN_DESTINATION}

# )

## Mark libraries for installation

## See http://docs.ros.org/melodic/api/catkin/html/howto/format1/building_libraries.html

# install(TARGETS ${PROJECT_NAME}

# ARCHIVE DESTINATION ${CATKIN_PACKAGE_LIB_DESTINATION}

# LIBRARY DESTINATION ${CATKIN_PACKAGE_LIB_DESTINATION}

# RUNTIME DESTINATION ${CATKIN_GLOBAL_BIN_DESTINATION}

# )

## Mark cpp header files for installation

# install(DIRECTORY include/${PROJECT_NAME}/

# DESTINATION ${CATKIN_PACKAGE_INCLUDE_DESTINATION}

# FILES_MATCHING PATTERN "*.h"

# PATTERN ".svn" EXCLUDE

# )

## Mark other files for installation (e.g. launch and bag files, etc.)

# install(FILES

# # myfile1

# # myfile2

# DESTINATION ${CATKIN_PACKAGE_SHARE_DESTINATION}

# )

#############

## Testing ##

#############

## Add gtest based cpp test target and link libraries

# catkin_add_gtest(${PROJECT_NAME}-test test/test_detection_pkg.cpp)

# if(TARGET ${PROJECT_NAME}-test)

# target_link_libraries(${PROJECT_NAME}-test ${PROJECT_NAME})

# endif()

## Add folders to be run by python nosetests

# catkin_add_nosetests(test)

#此句要在下面target_link_libraries语句之前!!!!!

set(DETECTION Detection.cpp)

add_executable(yolo4 main.cpp ${DETECTION})

# opencv opencv opencv opencv opencv opencv

#寻找opencv库

find_package(OpenCV REQUIRED)

#添加头文件

include_directories(${OpenCV_INCLUDE_DIRS})

#链接Opencv库

target_link_libraries(yolo4 ${OpenCV_LIBS} )

target_link_libraries(yolo4 ${catkin_LIBRARIES})

3.4.5 ⑤package.xml

这里主要是新增一个“cv_bridge”字段

detection_pkg

0.0.0

The detection_pkg package

ym

TODO

catkin

roscpp

rospy

std_msgs

cv_bridge

roscpp

rospy

std_msgs

cv_bridge

roscpp

rospy

std_msgs

cv_bridge

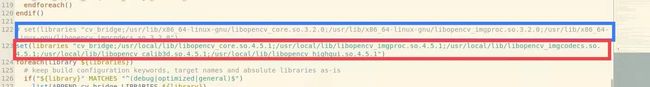

3.5 修改ROS系统中cv_bridge模块的配置文件

因为ROS系统中默认安装一个低版本OpenCV,导致有些函数无法正常使用而报错,需要修改ROS系统中cv_bridge模块对OpenCV的引用,让它引用自己安装的4.5.1版本OpenCV。

参考:《Ubuntu18.04+ROS melodic+opencv4 修改cv_bridge配置文件,解决ROS使用不同版本opencv的冲突问题》https://blog.csdn.net/PrideQ/article/details/115304494

操作步骤:

- 首先找到cv_brige文件,使用gedit打开:

- cd /opt/ros/melodic/share/cv_bridge/cmake/

- sudo gedit cv_bridgeConfig.cmake

- 将此处指定的头文件目录改到自己安装的头文件目录下:

- 之后再将指定库文件也修改为自己安装的opencv库文件:

3.6 重新编译catkin空间

- 先cd到工作空间路径(注意不是package路径),比如我的是:/home/ym/catkin_test/catkin_2

- 然后终端中执行:catkin_make

按照流程走编译一般没问题,有其他小问题应该也好自己解决。

BUG备注:

- make[2]: *** No rule to make target '/usr/local/lib/libopencv_hiqhgui.so.4.5.1', needed by '/home/moi/yinming/catkin_ym/devel/lib/detection_pkg/yolo4'

- 原因打错字:libopencv_hiqhgui.so.4.5.1应该写成libopencv_highgui.so.4.5.1

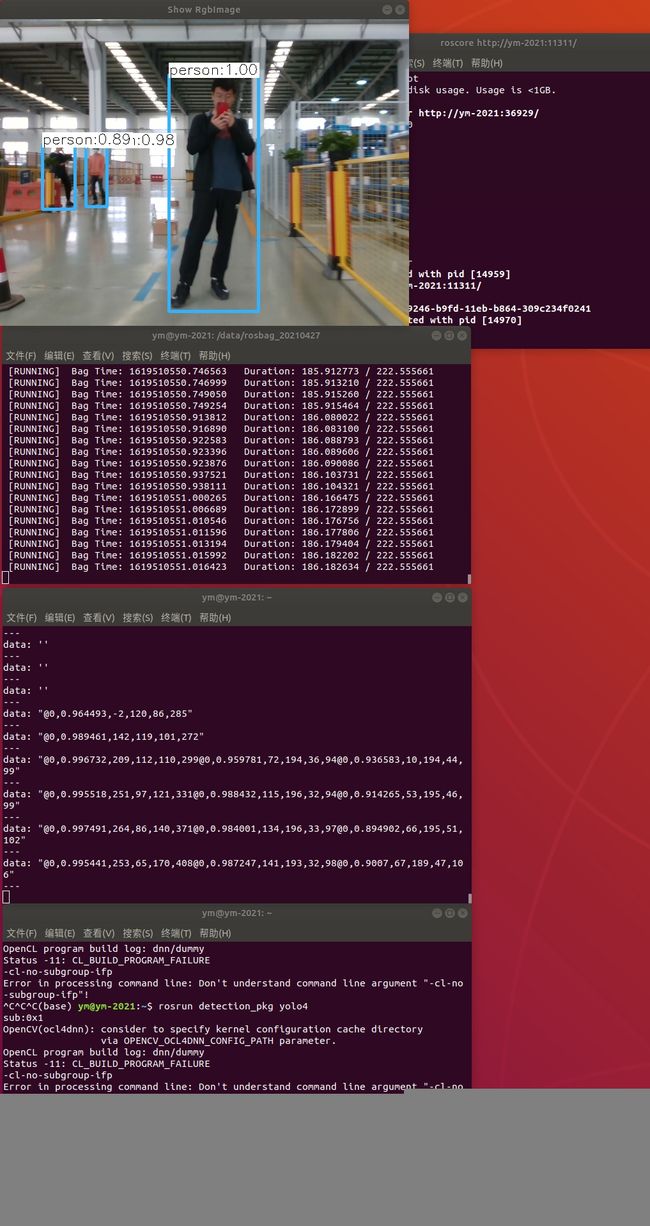

3.7 测试

测试流程简述:使用在ros系统中预先录制的realsense传感器的bag数据,通过rosbag play这个bag历史数据,然后我们这个目标检测package程序去订阅里面的RGB流,再将检测结果发布出来。

整个流程操作命令如下(顺序不能变):

- 新建第1个终端,直接执行:roscore

- 新建第2个终端,cd到bag包的存放路径,执行:rosbag play 2021-04-27-15-59-19.bag

- 新建第3个终端,直接执行:rosrun detection_pkg yolo4

- 新建第4个终端,直接执行:rostopic echo yolo4_result

结果如下:

问题备注:

在真机上编译通过,运行节点时,如果报如下错:

- what(): OpenCV(4.5.1) /home/moi/yinming/OpenCV/opencv-4.5.1/modules/highgui/src/window_gtk.cpp:624: error: (-2:Unspecified error) Can't initialize GTK backend in function 'cvInitSystem'

- 原因是:笔记本VScode远程接入机器的Ubuntu系统,没有可视化界面,需要注释main.py中可视化的两行代码。

- imshow("Show RgbImage", detection.GetFrame());

- char ch = waitKey(10);