Installing a cloud-native environment on Ubuntu: Docker + Kubernetes + KubeEdge (tested and working)

Docker installation steps (Linux)

I. Remove previously installed Docker packages

sudo apt-get autoremove docker docker-ce docker-engine docker.io containerd runc

II. Set up the repository

1. Update the package index and install the packages that let apt use a repository over HTTPS

sudo apt-get update

sudo apt-get install \
    ca-certificates \
    curl \
    gnupg \
    lsb-release

2. Add Docker's official GPG key

sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

3. Set up the stable repository with the following command

echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu \
  $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

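III. Install Docker

sudo apt-get update

# To install a specific version of Docker Engine, list the versions available in the repository, then pick one and install it

sudo apt-cache madison docker-ce

Install the chosen version (replace <VERSION_STRING> with a value from the second column of the output above)

sudo apt-get install docker-ce=<VERSION_STRING> docker-ce-cli=<VERSION_STRING> containerd.io docker-compose-plugin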
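Copying the version string by hand is error-prone, so the lookup can be scripted. The following is a minimal sketch, assuming the usual three-column `apt-cache madison` layout separated by `|`; the helper name `pick_version` and the version 20.10.17 in the usage comment are illustrative and not part of the original steps.

```shell
#!/bin/sh
# pick_version PREFIX: read `apt-cache madison <pkg>` output on stdin and print
# the first version string (second column) that contains PREFIX.
pick_version() {
    awk -F'|' -v want="$1" '
        NF >= 2 {
            v = $2
            gsub(/^[ \t]+|[ \t]+$/, "", v)   # trim the padding around the column
            if (index(v, want) > 0) { print v; exit }
        }'
}

# Typical use (hypothetical version, not run here):
#   VERSION_STRING=$(apt-cache madison docker-ce | pick_version 20.10.17)
#   sudo apt-get install docker-ce="$VERSION_STRING" docker-ce-cli="$VERSION_STRING" containerd.io docker-compose-plugin
```

If nothing matches, the function prints nothing, so it is worth checking that the variable is non-empty before installing.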

IV. Configure a registry mirror

Besides the mirror, this file also sets the core Docker options used in production, including the systemd cgroup driver.

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://82m9ar63.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF

sudo systemctl daemon-reload

sudo systemctl restart docker
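Because kubelet and Docker must agree on the cgroup driver, it can help to confirm that the restart picked up the configured value. This is a small sketch, assuming the option is written in daemon.json as `native.cgroupdriver=<name>`; the helper name `configured_cgroup_driver` is illustrative.

```shell
#!/bin/sh
# configured_cgroup_driver FILE: print the native.cgroupdriver value set in a
# Docker daemon.json, or nothing when the option is absent.
configured_cgroup_driver() {
    sed -n 's/.*native\.cgroupdriver=\([A-Za-z]*\).*/\1/p' "$1" | head -n 1
}

# Typical use after restarting Docker (not run here):
#   want=$(configured_cgroup_driver /etc/docker/daemon.json)
#   have=$(sudo docker info --format '{{.CgroupDriver}}')
#   [ "$want" = "$have" ] && echo "cgroup driver is $have" || echo "mismatch: $want vs $have"
```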

V. Verify the installation

sudo docker run hello-world

Official Docker documentation: Install Docker Engine on Ubuntu | Docker Docs

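Kubernetes installation steps (Linux)

I. Prerequisites (make sure all nodes can reach each other over the network)

# Set a hostname on each machine

sudo hostnamectl set-hostname xxxx

# Use sestatus to show whether SELinux is enabled or disabled

sestatus

# If sestatus is not installed, install it with the following command

sudo apt install policycoreutils

# Temporarily put SELinux into permissive mode (only needed when sestatus reports it as enabled; a stock Ubuntu install uses AppArmor instead)

sudo setenforce 0

# Set SELINUX=disabled in /etc/selinux/config (permanent, takes effect after a reboot)

sudo vim /etc/selinux/config

# Turn off swap (temporary)

sudo swapoff -a

# Turn off swap (permanent, takes effect after a reboot)

sudo sed -ri 's/.*swap.*/#&/' /etc/fstab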
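The sed expression above comments out every line that mentions "swap", including lines that are already comments or that merely contain the word in a path. A more conservative variant is sketched below, assuming fstab fields are separated by whitespace and that the filesystem type of a swap entry is the literal word `swap`; the function name `comment_swap_entries` is illustrative.

```shell
#!/bin/sh
# comment_swap_entries: filter fstab text from stdin to stdout, commenting out
# only active (uncommented) entries that have "swap" as a standalone field.
comment_swap_entries() {
    sed -E '/^[[:space:]]*#/! { /[[:space:]]swap[[:space:]]/ s/^/#/; }'
}

# Typical use, keeping a backup first (not run here):
#   sudo cp /etc/fstab /etc/fstab.bak
#   comment_swap_entries < /etc/fstab.bak | sudo tee /etc/fstab > /dev/null
```

Working from a backup copy keeps the original file available if the result needs to be reverted.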

# Let iptables see bridged traffic

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF

# Load the module now; the file above only takes effect at boot

sudo modprobe br_netfilter

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

sudo sysctl --system

II. Install kubelet, kubeadm and kubectl

sudo apt-get update

sudo apt-get install -y apt-transport-https ca-certificates curl

# Download the Google Cloud public signing key (Alibaba Cloud mirror):

sudo curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg

# Add the Kubernetes apt repository (Alibaba Cloud mirror):

echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] http://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update

# List the installable versions

sudo apt-cache madison kubelet

# Install

sudo apt install -y kubelet=1.21.10-00 kubeadm=1.21.10-00 kubectl=1.21.10-00

# Enable kubelet and start it immediately

sudo systemctl enable --now kubelet

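III. Initialize the control-plane node

First give the control-plane node a hostname alias

# Add the control-plane hostname mapping on every machine. 192.168.1.125 is the control-plane node's IP; every other node in the cluster runs the same command

echo "192.168.1.125 cluster-endpoint" | sudo tee -a /etc/hosts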
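Running the mapping command twice leaves duplicate lines in /etc/hosts. The sketch below appends the entry only when the name is not mapped yet; the function name `add_host_entry` and its optional file argument are illustrative assumptions, added so the logic can be tried on a scratch file first.

```shell
#!/bin/sh
# add_host_entry IP NAME [FILE]: append "IP NAME" to FILE (default /etc/hosts)
# unless a non-comment line already maps NAME.
add_host_entry() {
    ip="$1"; name="$2"; file="${3:-/etc/hosts}"
    if awk -v n="$name" '
        !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        return 0   # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Typical use, from a script run as root because /etc/hosts is root-owned (not run here):
#   add_host_entry 192.168.1.125 cluster-endpoint
```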

# Then ping the name to check the mapping

ping cluster-endpoint

Initialize the control-plane node

sudo kubeadm init \
  --apiserver-advertise-address=192.168.1.125 \
  --control-plane-endpoint=cluster-endpoint \
  --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \
  --kubernetes-version v1.21.10 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16

Note: on an Alibaba Cloud server, if kubeadm init times out, set --apiserver-advertise-address to the instance's private (intranet) IP rather than its public IP.

# Check the kubelet status

sudo systemctl status kubelet

# If it is not running, read the log and fix whatever it reports

journalctl -xeu kubelet

When initialization finishes you will see output like the following. Save it, because later steps need it.

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

# Join a control-plane node

kubeadm join cluster-endpoint:6443 --token 24fldo.6z7mn6the365gckm \
    --discovery-token-ca-cert-hash sha256:38e42b0ca861a9fa27b11b1ab9c9c0e2f2428947c790dfd20b77ea3dd2ffa7f6 \
    --control-plane

Then you can join any number of worker nodes by running the following on each as root:

# Join a worker node

kubeadm join cluster-endpoint:6443 --token 24fldo.6z7mn6the365gckm \
    --discovery-token-ca-cert-hash sha256:38e42b0ca861a9fa27b11b1ab9c9c0e2f2428947c790dfd20b77ea3dd2ffa7f6

Run the commands listed in the output above

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Install a network add-on (I used Calico)

curl https://docs.projectcalico.org/manifests/calico.yaml -O

# Create the resources in the cluster from the manifest

sudo kubectl apply -f calico.yaml

Useful commands

# List all nodes in the cluster

sudo kubectl get nodes

# List the pods running in all namespaces

sudo kubectl get pods -A

IV. Join nodes to the cluster

# Join a control-plane node

sudo kubeadm join cluster-endpoint:6443 --token 24fldo.6z7mn6the365gckm \
    --discovery-token-ca-cert-hash sha256:38e42b0ca861a9fa27b11b1ab9c9c0e2f2428947c790dfd20b77ea3dd2ffa7f6 \
    --control-plane

# Join a worker node

sudo kubeadm join cluster-endpoint:6443 --token 24fldo.6z7mn6the365gckm \
    --discovery-token-ca-cert-hash sha256:38e42b0ca861a9fa27b11b1ab9c9c0e2f2428947c790dfd20b77ea3dd2ffa7f6

If the token has expired, generate a new one

# Run on the control-plane node

sudo kubeadm token create --print-join-command

Note: on Alibaba Cloud, if port 6443 on the control-plane node is unreachable, first check that the security group allows that port.

V. Verify the cluster

kubectl get nodes

VI. Install the Dashboard

1. Install

sudo kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

If that URL is unreachable, create a YAML file by hand with the following content

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard

---

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque

---

kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard

---

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
    # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
    # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.3.1
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.6
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

Apply the configuration

# dashboard.yaml is the YAML file you created by hand

sudo kubectl apply -f dashboard.yaml

2. Expose a port

Run this command

sudo kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

Change type: ClusterIP to type: NodePort

# Find the assigned port and allow it in the security group

sudo kubectl get svc -A |grep kubernetes-dashboard
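The interactive edit and the grep can also be done non-interactively. The sketch below assumes the PORT(S) column of `kubectl get svc` has the form `443:32759/TCP` once the Service is of type NodePort; the helper name `node_port_of` is illustrative, and the `kubectl patch` call in the usage comment is an alternative to `kubectl edit`, not the step the original guide used.

```shell
#!/bin/sh
# node_port_of PORTS: given the PORT(S) column of `kubectl get svc`
# (for example "443:32759/TCP"), print the NodePort part. Prints nothing
# for a ClusterIP service, whose column looks like "443/TCP".
node_port_of() {
    printf '%s\n' "$1" | sed -n 's#^[0-9]*:\([0-9]*\)/.*#\1#p'
}

# Typical use (not run here):
#   # switch the Service type without opening an editor
#   kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}'
#   ports=$(kubectl -n kubernetes-dashboard get svc kubernetes-dashboard --no-headers | awk '{print $5}')
#   node_port_of "$ports"
```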

3. Verify

Open https://<any-node-IP>:<NodePort>, for example https://139.198.165.238:32759

4. Create an access account

Create a YAML file with the following content

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard

Apply the configuration

# dash.yaml is the YAML file you created by hand

kubectl apply -f dash.yaml

5. Log in with a token

# Get the access token

kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

6. Dashboard UI

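KubeEdge installation steps (Linux)

Installation uses the keadm tool

I. Prerequisites (required on both the cloud side and the edge side)

1) Make sure Docker, Kubernetes and Go are already installed on the host. Check the Kubernetes/KubeEdge version compatibility matrix

and download matching versions.

2) Download the keadm package into any directory. Download page: Release KubeEdge v1.9.2 release · kubeedge/kubeedge · GitHub. Pick the package that matches your CPU architecture.

3) Extract it

# Replace keadm-v1.9.2-linux-arm64.tar.gz with the name of the package you downloaded

tar -zxvf keadm-v1.9.2-linux-arm64.tar.gz

4) Put keadm on the PATH

Change into <keadm install directory>/keadm/

# Copy the binary into a directory on the PATH for convenience

sudo cp keadm /usr/sbin/

5) Download the KubeEdge source code into any directory. Download page: Release KubeEdge v1.9.2 release · kubeedge/kubeedge · GitHub

# Change into the directory that holds the KubeEdge source archive and extract it

tar -xvf kubeedge-1.9.2.tar

6) Download the KubeEdge release package and put it in /etc/kubeedge. Download page: Release KubeEdge v1.9.2 release · kubeedge/kubeedge · GitHub. Pick the package that matches your CPU architecture.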

二、安装keadm(云端)

1、keadm init

sudo keadm init --advertise-address=192.168.1.125 --kubeedge-version=1.9.2

--advertise-address 云端地址

2、问题

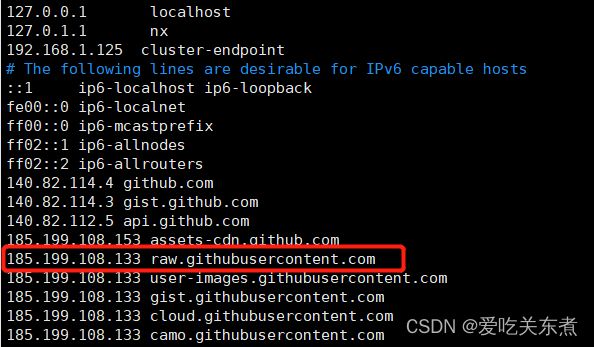

keadm init的时候如果出现raw.githubusercontent.com 访问不了的话,编辑 /etc/hosts 文件,添加 185.199.108.133 raw.githubusercontent.com

sudo vim /etc/hosts

之后,在重新执行上面keadm init命令

如果还不行,可以用我下载好的文件,放到相应目录(myData: 资料相关 - Gitee.com)

1)devices_v1alpha2_device.yaml(用于设备接入的CRD)下载失败

mkdir -p /etc/kubeedge/crds/devices && mkdir -p /etc/kubeedge/crds/reliablesyncs

cp devices_v1alpha2_device.yaml /etc/kubeedge/crds/devices/

2)devices_v1alpha2_devicemodel.yaml下载失败

cp devices_v1alpha2_devicemodel.yaml /etc/kubeedge/crds/devices/

3)其他xxx…yaml下载失败,将下载文件放到/etc/kubeedge/crds/

4)cloudcore.service下载失败,将文件放到/etc/kubeedge/

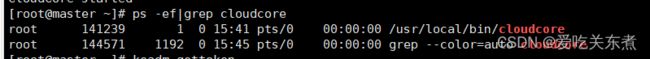

3、检查cloudcore是否启动

ps -ef|grep cloudcore

III. Install with keadm (edge side)

1. keadm join

sudo keadm join --cloudcore-ipport=192.168.1.125:10000 --edgenode-name=node --kubeedge-version=1.9.2 --token=3dc13e89ee6b907f7346786d018d0fa4c1efa7ddb0017607c7512bc1b4926449.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2MjM5OTg0ODd9.hTQMyupZd5d_e5uOVtz3RVsfe9H_BSFnwuLzPRy2ZUg

Parameters

cloudcore-ipport: IP address and port of the cloud node

edgenode-name: hostname of the edge node

kubeedge-version: KubeEdge version to install

token: the token returned by running keadm gettoken on the cloud node
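Since the token is long and changes between clusters, it can be easier to assemble the join command from its parts than to paste it whole. This is only a sketch: the helper name `keadm_join_cmd` is made up for illustration, and port 10000 is simply the value used in the command above.

```shell
#!/bin/sh
# keadm_join_cmd CLOUD_IP NODE_NAME VERSION TOKEN: print the keadm join command
# line built from its parts (port 10000 is the value used in this guide).
keadm_join_cmd() {
    printf 'keadm join --cloudcore-ipport=%s:10000 --edgenode-name=%s --kubeedge-version=%s --token=%s\n' \
        "$1" "$2" "$3" "$4"
}

# Typical use on the edge node, with TOKEN copied from `keadm gettoken`
# on the cloud node (not run here):
#   sudo sh -c "$(keadm_join_cmd 192.168.1.125 node 1.9.2 "$TOKEN")"
```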

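2. Troubleshooting

If edgecore.service fails to download, edit /etc/hosts and add the line 185.199.108.133 raw.githubusercontent.com

sudo vim /etc/hosts

If that still fails, use the files I have already downloaded and put them in the corresponding directories (myData: 资料相关 - Gitee.com)

Download edgecore.service and put the file in /etc/kubeedge/

Then run the keadm join command above again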

3、启用kubectl logs功能

1)确保可以找到 Kubernetes 的 ca.crt 和 ca.key 文件

ls /etc/kubernetes/pki/

2)设置 CLOUDCOREIPS 环境。环境变量设置为指定的 cloudcore 的IP地址

export CLOUDCOREIPS="192.168.1.125"

3)查看环境变量是否加入成功

echo $CLOUDCOREIPS

4)在云端节点上为 CloudStream 生成证书

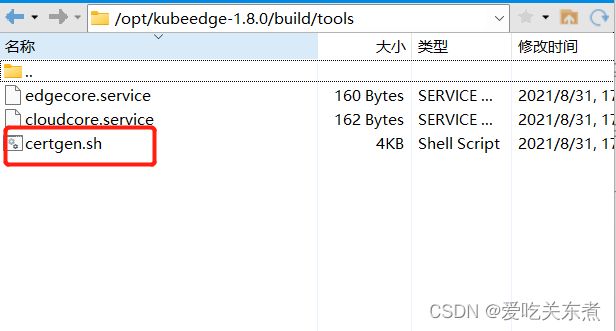

进入kubeedge源码目录/build/tools下有个 certgen.sh文件

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传

复制到/etc/kubeedge/目录下

cp /opt/kubeedge-1.9.2/build/tools/certgen.sh /etc/kubeedge/

从 certgen.sh 生成证书

bash /etc/kubeedge/certgen.sh stream

在主机上设置 iptables。(此命令应该在每个apiserver部署的节点上执行。)(

注意: 您需要先设置CLOUDCOREIPS变量

iptables -t nat -A OUTPUT -p tcp --dport 10350 -j DNAT --to $CLOUDCOREIPS:10003

端口10003和10350是 CloudStream 和 Edgecore 的默认端口,如果已发生变更,请使用自己设置的端口。

如果您不确定是否设置了iptables,并且希望清除所有这些表。(如果您错误地设置了iptables,它将阻止您使用 kubectl logs 功能) 可以使用以下命令清理iptables规则:

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
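Flushing every table also removes rules that other components on the node, such as kube-proxy and the CNI plugin, have installed, so it is gentler to avoid adding the DNAT rule twice in the first place. The sketch below uses `iptables -C` to check for the rule before appending it. The function name `ensure_stream_dnat` and its optional second argument (a stand-in command for dry runs) are assumptions made for illustration.

```shell
#!/bin/sh
# ensure_stream_dnat CLOUD_IP [IPTABLES_CMD]: add the CloudStream DNAT rule only
# if an identical rule is not already present. IPTABLES_CMD defaults to iptables
# and exists so the logic can be exercised with a stand-in command.
ensure_stream_dnat() {
    cloud_ip="${1:?cloudcore IP required}"
    ipt="${2:-iptables}"
    # -C checks for an existing rule and exits non-zero when there is none
    if ! "$ipt" -t nat -C OUTPUT -p tcp --dport 10350 -j DNAT --to-destination "$cloud_ip:10003" 2>/dev/null; then
        "$ipt" -t nat -A OUTPUT -p tcp --dport 10350 -j DNAT --to-destination "$cloud_ip:10003"
    fi
}

# Typical use, from a script run as root (not run here):
#   ensure_stream_dnat "$CLOUDCOREIPS"
```

Running it repeatedly leaves a single rule in the nat OUTPUT chain.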

5) Edit the YAML files of cloudcore and edgecore

# Cloud side

sudo vim /etc/kubeedge/config/cloudcore.yaml

# Edge side

sudo vim /etc/kubeedge/config/edgecore.yaml

Set enable: true for both cloudStream and edgeStream, and change the server IP to the cloudcore IP

6) Restart cloudcore and edgecore

# cloudcore

pkill cloudcore

nohup cloudcore > cloudcore.log 2>&1 &

# edgecore

systemctl restart edgecore.service

4. Check that edgecore is running

systemctl status edgecore

If edgecore did not start, check whether kube-proxy is the cause and, if so, kill that process. KubeEdge does not include kube-proxy by default; edgemesh is used in its place.

Note: consider keeping kube-proxy off the edge nodes altogether. There are two ways to handle this:

#1. Run `kubectl edit daemonsets.apps -n kube-system kube-proxy` and add the following settings:

```yaml
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
        - matchExpressions:
            - key: node-role.kubernetes.io/edge
              operator: DoesNotExist
```

#2. If you still want to run `kube-proxy`, tell **edgecore** to skip the check by adding an env variable to edgecore.service:

```shell
sudo vi /etc/kubeedge/edgecore.service
```

# Add the following line to the **edgecore.service** file:

```shell
Environment="CHECK_EDGECORE_ENVIRONMENT=false"
```

# The final file should look like this:

```
Description=edgecore.service

[Service]
Type=simple
ExecStart=/root/cmd/ke/edgecore --logtostderr=false --log-file=/root/cmd/ke/edgecore.log
Environment="CHECK_EDGECORE_ENVIRONMENT=false"

[Install]
WantedBy=multi-user.target
```

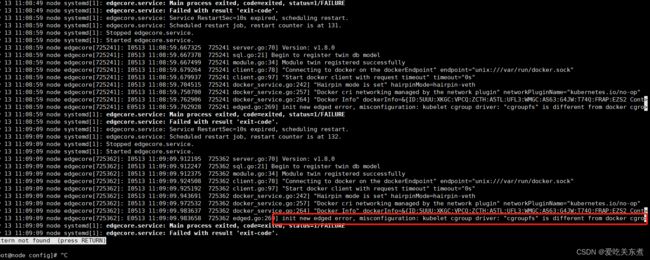

如果还不行,看一下edgecore日志

journalctl -u edgecore -n 50

例如这个报错是因为 kubelet 的cgroup driver 从docker的配置中读取到不到

编辑docker的配置文件,修改成edge kubelet需要的cgroup driver即可

vim /etc/docker/daemon.json

"exec-opts": ["native.cgroupdriver=cgroupfs"]

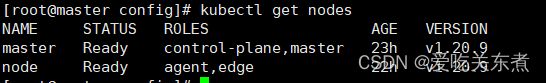

5、检查是否加入cloud端

在cloud输入

kubectl get nodes
