RNN自学笔记

视频来源:链接

优点:有代码

一、序列模型

1.1、序列数据举例:

a、音乐,语言,文本和视频是连续的

b、大地震发生后,很可能会有几次较小的余震

c、人的互动是连续的

d、预测明天的股票要比填补昨天以实的股价更困难(炒股是炒预期)

# :图片是空间的,语言是时序的。

1.2、统计工具

(x1,x2,...xt)~p(x)

情况1:数据是图片,那么x1,x2,...xt就是一个个像素点,它们在空间上满足某种关系的概率是p(x),比如分类算法的输出就是一个概率。

情况2:数据是语言,那么x1,x2,...xt就是一个个股价,重点来了,股价在时间上是有关联的。

数学基础:条件概率,p(a,b) = p(a)p(b|a) = p(b)p(a|b)

p(x) = p(x1)p(x2|x1)p(x3|x1,x2)...p(xt|x1,...xt-1)

1.3、序列模型

方案A:马尔科夫假设——假设当前数据只跟k个过去数据点相关

举例:当天股价可能跟过去一年甚至3年有关,但是5年前的股价对今天的股价基本上没有什么影响。

这样就将问题大大简化了,这样我们用一个MLP模型就可以来对这个k个数据进行建模,所有的f都用这一个模型进行预测。

方案B:潜变量模型——引入潜变量ht来表示过去的信息ht=f(x1,...xt-1),这样xt=p(xt|ht)。

RNN就是一个潜变量模型

二、文本预处理

自己要用在百度。参考链接

三、语言模型

给定文本序列x1,x2,...,xt,语言模型的目标是估计联合概率p(x1,...,xt)。

应用:

a、做预训练模型(eg BERT, GPT-3)

b、生成文本,给定前面几个词,不断的使用xt~p(x1,x2,...xt-1)来生成后续文本

c、判断多个序列中哪个更常见

四、循环神经网络RNN

4.1、语言模型

(这里如果去掉激活函数中的第一项那么就是多层感知机,其实这么看来RNN好像CNN的残差机制,将每层的输出都concat到后面去)

(这里如果去掉激活函数中的第一项那么就是多层感知机,其实这么看来RNN好像CNN的残差机制,将每层的输出都concat到后面去)

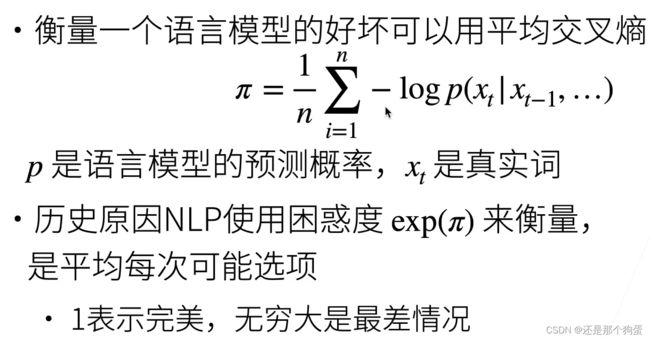

4.2、困惑度(perplexity)

4.3、梯度剪裁

作用:预防梯度爆炸

4.4、RNN应用

4.5、循环神经网络从零开始实现

import math

import torch

from torch import nn

from torch.nn import functional as F

from d2l import torch as d2l

'''

batch_size就是批量大小'

num_steps是输出字符的个数,给你一个句子,后面要输出num_steps个字符(char)

len(vocab)就是含有字符的种类:a,b...,z,句号,空格这些一共有28个

'''

batch_size, num_steps = 32, 35

train_iter, vocab = d2l.load_data_time_machine(batch_size, num_steps)

'''参数初始化'''

def get_params(vocab_size, num_hiddens, device):

'''

num_inputs = vocab_size = 28是因为one-hot编码将28种char进行了映射

num_outputs = vocab_size = 28是因为一个输出的char一共有28中可能

'''

num_inputs = num_outputs = vocab_size

def normal(shape):

return torch.randn(size=shape, device=device) * 0.01

W_xh = normal((num_inputs, num_hiddens))

W_hh = normal((num_hiddens, num_hiddens))

b_h = torch.zeros(num_hiddens, device=device)

W_hq = normal((num_hiddens, num_outputs))

b_q = torch.zeros(num_outputs, device=device)

params = [W_xh, W_hh, b_h, W_hq, b_q]

for param in params:

param.requires_grad_(True)

return params

'''潜变量初始化'''

def init_rnn_state(batch_size, num_hiddens, device):

return (torch.zeros((batch_size, num_hiddens), device=device), )

'''重点:这里关键要理解W_xh ,W_hh ,W_hq 分别是什么,一张图来描述其作用'''

def rnn(inputs, state, params):

W_xh, W_hh, b_h, W_hq, b_q = params

H, = state

outputs = []

for X in inputs:

H = torch.tanh(torch.mm(X, W_xh) + torch.mm(H, W_hh) + b_h)

Y = torch.mm(H, W_hq) + b_q

outputs.append(Y)

return torch.cat(outputs, dim=0), (H,)

# 创建一个类来包装上面的三个函数

class RNNModelScratch:

"""从零开始实现的循环神经网络模型"""

def __init__(self, vocab_size, num_hiddens, device,

get_params, init_state, forward_fn):

self.vocab_size, self.num_hiddens = vocab_size, num_hiddens

self.params = get_params(vocab_size, num_hiddens, device)

self.init_state, self.forward_fn = init_state, forward_fn

def __call__(self, X, state):

X = F.one_hot(X.T, self.vocab_size).type(torch.float32)

return self.forward_fn(X, state, self.params)

def begin_state(self, batch_size, device):

return self.init_state(batch_size, self.num_hiddens, device)# 关于上面这个类的小实验

# 自己造数据

X = torch.arange(10).reshape((2, 5))

num_hiddens = 512

# 其中len(vocab)是整形数字28

net = RNNModelScratch(len(vocab), num_hiddens, d2l.try_gpu(), get_params,

init_rnn_state, rnn)

state = net.begin_state(X.shape[0], d2l.try_gpu())

Y, new_state = net(X.to(d2l.try_gpu()), state)

# 输出的shape

Y.shape, len(new_state), new_state[0].shape输出:

(torch.Size([10, 28]), 1, torch.Size([2, 512]))

直接上图:

'''定义训练以及测试'''

def grad_clipping(net, theta):

"""裁剪梯度——作用防止梯度爆炸"""

if isinstance(net, nn.Module):

params = [p for p in net.parameters() if p.requires_grad]

else:

params = net.params

norm = torch.sqrt(sum(torch.sum((p.grad ** 2)) for p in params))

if norm > theta:

for param in params:

param.grad[:] *= theta / norm

def predict_ch8(prefix, num_preds, net, vocab, device):

"""在prefix后面生成新字符"""

state = net.begin_state(batch_size=1, device=device)

outputs = [vocab[prefix[0]]]

get_input = lambda: torch.tensor([outputs[-1]], device=device).reshape((1, 1))

for y in prefix[1:]:

_, state = net(get_input(), state)

outputs.append(vocab[y])

for _ in range(num_preds):

y, state = net(get_input(), state)

outputs.append(int(y.argmax(dim=1).reshape(1)))

return ''.join([vocab.idx_to_token[i] for i in outputs])

# 关于上述函数的小测试

predict_ch8('time traveller ', 10, net, vocab, d2l.try_gpu())

def train_epoch_ch8(net, train_iter, loss, updater, device, use_random_iter):

"""训练网络一个迭代周期(定义见第8章)"""

state, timer = None, d2l.Timer()

metric = d2l.Accumulator(2)

for X, Y in train_iter:

if state is None or use_random_iter:

state = net.begin_state(batch_size=X.shape[0], device=device)

else:

if isinstance(net, nn.Module) and not isinstance(state, tuple):

state.detach_()

else:

for s in state:

s.detach_()

y = Y.T.reshape(-1)

X, y = X.to(device), y.to(device)

y_hat, state = net(X, state)

l = loss(y_hat, y.long()).mean()

if isinstance(updater, torch.optim.Optimizer):

updater.zero_grad()

l.backward()

grad_clipping(net, 1)

updater.step()

else:

l.backward()

grad_clipping(net, 1)

updater(batch_size=1)

metric.add(l * y.numel(), y.numel())

return math.exp(metric[0] / metric[1]), metric[1] / timer.stop()

def train_ch8(net, train_iter, vocab, lr, num_epochs, device,

use_random_iter=False):

"""训练模型(定义见第8章)"""

loss = nn.CrossEntropyLoss()

animator = d2l.Animator(xlabel='epoch', ylabel='perplexity',

legend=['train'], xlim=[10, num_epochs])

if isinstance(net, nn.Module):

updater = torch.optim.SGD(net.parameters(), lr)

else:

updater = lambda batch_size: d2l.sgd(net.params, lr, batch_size)

predict = lambda prefix: predict_ch8(prefix, 50, net, vocab, device)

for epoch in range(num_epochs):

ppl, speed = train_epoch_ch8(

net, train_iter, loss, updater, device, use_random_iter)

if (epoch + 1) % 10 == 0:

print(predict('time traveller'))

animator.add(epoch + 1, [ppl])

print(f'困惑度 {ppl:.1f}, {speed:.1f} 词元/秒 {str(device)}')

print(predict('time traveller'))

print(predict('traveller'))4.6、循环神经网络的简单实现——借助pytorch

import torch

from torch import nn

from torch.nn import functional as F

from d2l import torch as d2l

batch_size, num_steps = 32, 35

train_iter, vocab = d2l.load_data_time_machine(batch_size, num_steps)

num_hiddens = 256

'''输入一共有len(vocab)类'''

rnn_layer = nn.RNN(len(vocab), num_hiddens)

'''注意这里比上面多了一个维度'''

state = torch.zeros((1, batch_size, num_hiddens))

state.shape

X = torch.rand(size=(num_steps, batch_size, len(vocab)))

Y, state_new = rnn_layer(X, state)

Y.shape, state_new.shape

class RNNModel(nn.Module):

"""循环神经网络模型"""

def __init__(self, rnn_layer, vocab_size, **kwargs):

super(RNNModel, self).__init__(**kwargs)

self.rnn = rnn_layer

self.vocab_size = vocab_size

self.num_hiddens = self.rnn.hidden_size

# 如果RNN是双向的(之后将介绍),num_directions应该是2,否则应该是1

if not self.rnn.bidirectional:

self.num_directions = 1

self.linear = nn.Linear(self.num_hiddens, self.vocab_size)

else:

self.num_directions = 2

self.linear = nn.Linear(self.num_hiddens * 2, self.vocab_size)

def forward(self, inputs, state):

X = F.one_hot(inputs.T.long(), self.vocab_size)

X = X.to(torch.float32)

Y, state = self.rnn(X, state)

# 全连接层首先将Y的形状改为(时间步数*批量大小,隐藏单元数)

# 它的输出形状是(时间步数*批量大小,词表大小)。

output = self.linear(Y.reshape((-1, Y.shape[-1])))

return output, state

def begin_state(self, device, batch_size=1):

if not isinstance(self.rnn, nn.LSTM):

# nn.GRU以张量作为隐状态

return torch.zeros((self.num_directions * self.rnn.num_layers,

batch_size, self.num_hiddens),

device=device)

else:

# nn.LSTM以元组作为隐状态

return (torch.zeros((

self.num_directions * self.rnn.num_layers,

batch_size, self.num_hiddens), device=device),

torch.zeros((

self.num_directions * self.rnn.num_layers,

device = d2l.try_gpu()

net = RNNModel(rnn_layer, vocab_size=len(vocab))

net = net.to(device)

d2l.predict_ch8('time traveller', 10, net, vocab, device)

num_epochs, lr = 500, 1

d2l.train_ch8(net, train_iter, vocab, lr, num_epochs, device)五、门控循环单元(GRU)

5.1、基本概念

关注一个序列——不是每个观察值都是同等重要。

想只记住相关的观察需要:

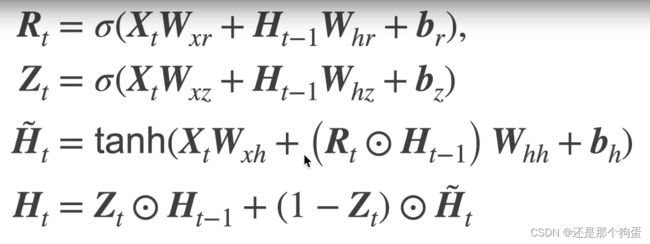

a、能关注的机制(更新门)——Z_t(与前面介绍的RNN基础网络差不多,只不过换了激活函数)

b、能遗忘的机制(重置门)——R_ t

候选隐藏状态(控制作用):

最终隐藏状态:

总结:

5.2、GRU从零实现

import torch

from torch import nn

from d2l import torch as d2l

batch_size, num_steps = 32, 35

train_iter, vocab = d2l.load_data_time_machine(batch_size, num_steps)

def get_params(vocab_size, num_hiddens, device):

num_inputs = num_outputs = vocab_size

def normal(shape):

return torch.randn(size=shape, device=device)*0.01

def three():

return (normal((num_inputs, num_hiddens)),

normal((num_hiddens, num_hiddens)),

torch.zeros(num_hiddens, device=device))

W_xz, W_hz, b_z = three() # 更新门参数

W_xr, W_hr, b_r = three() # 重置门参数

W_xh, W_hh, b_h = three() # 候选隐状态参数

# 输出层参数

W_hq = normal((num_hiddens, num_outputs))

b_q = torch.zeros(num_outputs, device=device)

# 附加梯度

params = [W_xz, W_hz, b_z, W_xr, W_hr, b_r, W_xh, W_hh, b_h, W_hq, b_q]

for param in params:

param.requires_grad_(True)

return params

def init_gru_state(batch_size, num_hiddens, device):

return (torch.zeros((batch_size, num_hiddens), device=device), )

def gru(inputs, state, params):

W_xz, W_hz, b_z, W_xr, W_hr, b_r, W_xh, W_hh, b_h, W_hq, b_q = params

H, = state

outputs = []

for X in inputs:

# @代表的点乘

Z = torch.sigmoid((X @ W_xz) + (H @ W_hz) + b_z)

R = torch.sigmoid((X @ W_xr) + (H @ W_hr) + b_r)

H_tilda = torch.tanh((X @ W_xh) + ((R * H) @ W_hh) + b_h)

H = Z * H + (1 - Z) * H_tilda

Y = H @ W_hq + b_q

outputs.append(Y)

return torch.cat(outputs, dim=0), (H,)

vocab_size, num_hiddens, device = len(vocab), 256, d2l.try_gpu()

num_epochs, lr = 500, 1

model = d2l.RNNModelScratch(len(vocab), num_hiddens, device, get_params,

init_gru_state, gru)

d2l.train_ch8(model, train_iter, vocab, lr, num_epochs, device)5.3、GRU简单实现

num_inputs = vocab_size

gru_layer = nn.GRU(num_inputs, num_hiddens)

model = d2l.RNNModel(gru_layer, len(vocab))

model = model.to(device)

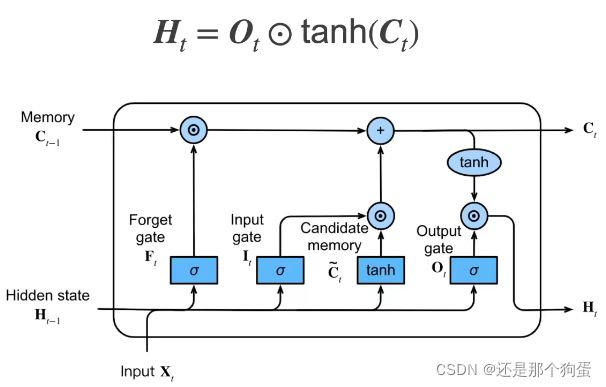

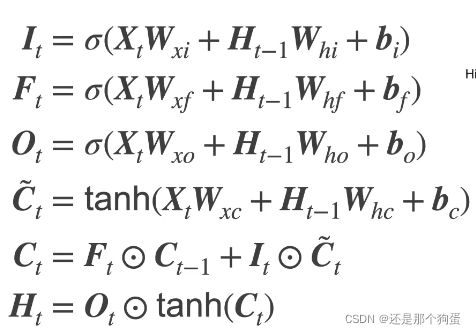

d2l.train_ch8(model, train_iter, vocab, lr, num_epochs, device)六、长短期记忆网络(LSTM)

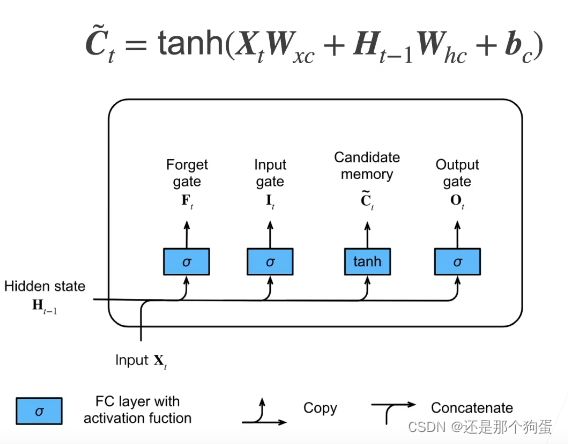

6.1、概念

忘记门:将值朝0减少

输入门:决定不是忽略掉输入数据

输出门:决定是不是使用隐状态

候选记忆单元:

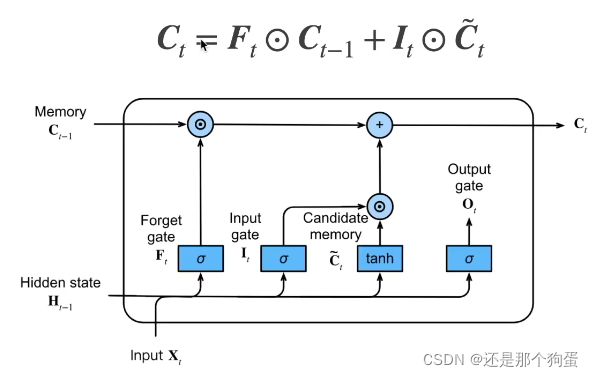

记忆单元:

隐藏状态:

总结:

没太明白为什么要这么做,又引入了更多的参数——记忆单元

有点像之前在CNN种接触过的残差与注意力,这里像他们两者的结合。

6.2、代码从零开始实现

import torch

from torch import nn

from d2l import torch as d2l

batch_size, num_steps = 32, 35

train_iter, vocab = d2l.load_data_time_machine(batch_size, num_steps)

def get_lstm_params(vocab_size, num_hiddens, device):

num_inputs = num_outputs = vocab_size

def normal(shape):

return torch.randn(size=shape, device=device)*0.01

def three():

return (normal((num_inputs, num_hiddens)),

normal((num_hiddens, num_hiddens)),

torch.zeros(num_hiddens, device=device))

W_xi, W_hi, b_i = three() # 输入门参数

W_xf, W_hf, b_f = three() # 遗忘门参数

W_xo, W_ho, b_o = three() # 输出门参数

W_xc, W_hc, b_c = three() # 候选记忆元参数

# 输出层参数

W_hq = normal((num_hiddens, num_outputs))

b_q = torch.zeros(num_outputs, device=device)

# 附加梯度

params = [W_xi, W_hi, b_i, W_xf, W_hf, b_f, W_xo, W_ho, b_o, W_xc, W_hc,

b_c, W_hq, b_q]

for param in params:

param.requires_grad_(True)

return params

def init_lstm_state(batch_size, num_hiddens, device):

return (torch.zeros((batch_size, num_hiddens), device=device),

torch.zeros((batch_size, num_hiddens), device=device))

def lstm(inputs, state, params):

[W_xi, W_hi, b_i, W_xf, W_hf, b_f, W_xo, W_ho, b_o, W_xc, W_hc, b_c,

W_hq, b_q] = params

(H, C) = state

outputs = []

for X in inputs:

I = torch.sigmoid((X @ W_xi) + (H @ W_hi) + b_i)

F = torch.sigmoid((X @ W_xf) + (H @ W_hf) + b_f)

O = torch.sigmoid((X @ W_xo) + (H @ W_ho) + b_o)

C_tilda = torch.tanh((X @ W_xc) + (H @ W_hc) + b_c)

C = F * C + I * C_tilda

H = O * torch.tanh(C)

Y = (H @ W_hq) + b_q

outputs.append(Y)

return torch.cat(outputs, dim=0), (H, C)

vocab_size, num_hiddens, device = len(vocab), 256, d2l.try_gpu()

num_epochs, lr = 500, 1

model = d2l.RNNModelScratch(len(vocab), num_hiddens, device, get_lstm_params,

init_lstm_state, lstm)

d2l.train_ch8(model, train_iter, vocab, lr, num_epochs, device)6.3、简单实现

import torch

from torch import nn

from d2l import torch as d2l

num_inputs = vocab_size

lstm_layer = nn.LSTM(num_inputs, num_hiddens)

model = d2l.RNNModel(lstm_layer, len(vocab))

model = model.to(device)

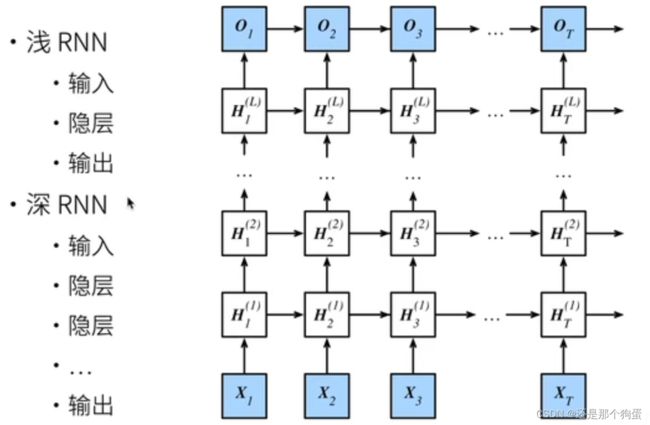

d2l.train_ch8(model, train_iter, vocab, lr, num_epochs, device)七、深度循环神经网络

7.1、概念

之前4-5实现的代码中只有一层隐藏层,现在加更多的隐藏层就出现了深度这个概念

7.2、简单实现

import torch

from torch import nn

from d2l import torch as d2l

batch_size, num_steps = 32, 35

train_iter, vocab = d2l.load_data_time_machine(batch_size, num_steps)

vocab_size, num_hiddens, num_layers = len(vocab), 256, 2

num_inputs = vocab_size

device = d2l.try_gpu()

'''num_layers你增加隐藏层的数量,每层的大小是num_hiddens'''

lstm_layer = nn.LSTM(num_inputs, num_hiddens, num_layers)

model = d2l.RNNModel(lstm_layer, len(vocab))

model = model.to(device)

num_epochs, lr = 500, 2

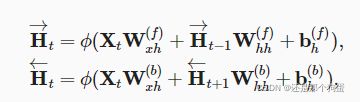

d2l.train_ch8(model, train_iter, vocab, lr, num_epochs, device)八、双向循环神经网络

8.1、概念

语文中的根据上下文填空,这样就体现了未来也很重要

8.2、代码实现

import torch

from torch import nn

from d2l import torch as d2l

# 加载数据

batch_size, num_steps, device = 32, 35, d2l.try_gpu()

train_iter, vocab = d2l.load_data_time_machine(batch_size, num_steps)

# 通过设置“bidirective=True”来定义双向LSTM模型

vocab_size, num_hiddens, num_layers = len(vocab), 256, 2

num_inputs = vocab_size

'''bidirectional=True,这样pytorch框架会自动帮你实现'''

lstm_layer = nn.LSTM(num_inputs, num_hiddens, num_layers, bidirectional=True)

model = d2l.RNNModel(lstm_layer, len(vocab))

model = model.to(device)

# 训练模型

num_epochs, lr = 500, 1

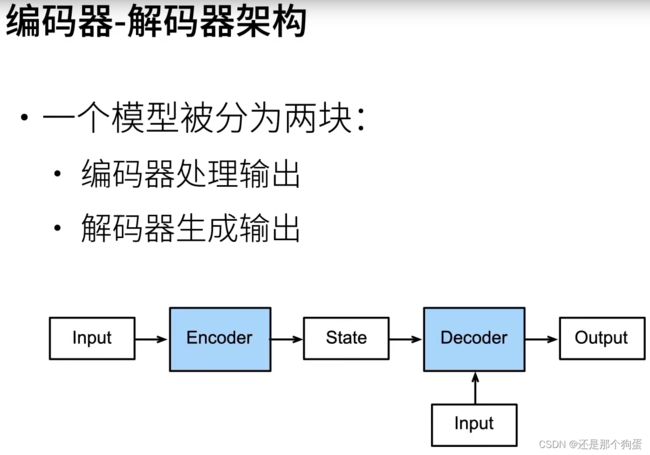

d2l.train_ch8(model, train_iter, vocab, lr, num_epochs, device)九、编码器与解码器

9.2、代码实现

编码器:

from torch import nn

#@save

class Encoder(nn.Module):

"""编码器-解码器架构的基本编码器接口"""

def __init__(self, **kwargs):

super(Encoder, self).__init__(**kwargs)

def forward(self, X, *args):

raise NotImplementedError解码器:

#@save

class Decoder(nn.Module):

"""编码器-解码器架构的基本解码器接口"""

def __init__(self, **kwargs):

super(Decoder, self).__init__(**kwargs)

def init_state(self, enc_outputs, *args):

raise NotImplementedError

def forward(self, X, state):

raise NotImplementedError合并解码器与解码器:

class EncoderDecoder(nn.Module):

"""编码器-解码器架构的基类"""

def __init__(self, encoder, decoder, **kwargs):

super(EncoderDecoder, self).__init__(**kwargs)

self.encoder = encoder

self.decoder = decoder

def forward(self, enc_X, dec_X, *args):

enc_outputs = self.encoder(enc_X, *args)

dec_state = self.decoder.init_state(enc_outputs, *args)

return self.decoder(dec_X, dec_state)十、Seqtoseq

10.1、基本概念

seqtoseq从一个句子生成另外一个句子

编码器是一个RNN,读取输入句子(可以是双向的)

解码器使用另外一个RNN来输出

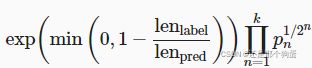

衡量生成序列的好坏的BLEU:

10.2、代码实现

import collections

import math

import torch

from torch import nn

from d2l import torch as d2l

class Seq2SeqEncoder(d2l.Encoder):

"""用于序列到序列学习的循环神经网络编码器"""

def __init__(self, vocab_size, embed_size, num_hiddens, num_layers,

dropout=0, **kwargs):

super(Seq2SeqEncoder, self).__init__(**kwargs)

# 嵌入层

self.embedding = nn.Embedding(vocab_size, embed_size)

self.rnn = nn.GRU(embed_size, num_hiddens, num_layers,

dropout=dropout)

def forward(self, X, *args):

# 输出'X'的形状:(batch_size,num_steps,embed_size)

X = self.embedding(X)

# 在循环神经网络模型中,第一个轴对应于时间步

X = X.permute(1, 0, 2)

# 如果未提及状态,则默认为0

output, state = self.rnn(X)

# output的形状:(num_steps,batch_size,num_hiddens)

# state[0]的形状:(num_layers,batch_size,num_hiddens)

return output, state编码器小实验:

encoder = Seq2SeqEncoder(vocab_size=10, embed_size=8, num_hiddens=16,

num_layers=2)

encoder.eval()

X = torch.zeros((4, 7), dtype=torch.long)

output, state = encoder(X)

output.shape = torch.Size([7, 4, 16])