NLP Learning (16): Hands-On Text Classification, a Comparative Analysis of Multiple Algorithms (TensorFlow 2 + Python 3)

This article introduces the text classification problem in detail and implements the whole process in Python.

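Text classification is an example of supervised learning: a classifier is trained on a dataset of text documents and their labels. End-to-end training of a text classifier consists of three main parts:

- Preparing the dataset: load the dataset, perform basic preprocessing, and split it into training and validation sets.
- Feature engineering: transform the raw dataset into flat features that a machine learning model can be trained on, and create new features from the existing data.
- Model training: train a machine learning model on the labeled dataset.

In addition, the article discusses several ways to further improve the performance of a text classifier.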

1. Preparing the Dataset

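This article uses the Amazon product reviews dataset (Consumer Reviews of Amazon Products, file `1429_1.csv`). The review text (`reviews.text`) is the input and the star rating (`reviews.rating`) is the label. The dataset is available at:

https://www.kaggle.com/datafiniti/consumer-reviews-of-amazon-products?select=1429_1.csv

https://www.kesci.com/home/dataset/5ce629ae0ee9cd002cd07e0c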

2. Feature Engineering

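Next comes feature engineering. In this step the raw data is transformed into feature vectors, and new features are created from the existing data. The following approaches can be used to extract important features from the dataset:

- Count vectors as features
- TF-IDF vectors as features
  - Word level
  - N-gram level (multiple words)
  - Character level
- Word embeddings as features
- Text/NLP-based features
- Topic models as features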

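2.1 Count Vectors as Features

A count vector is a matrix representation of the dataset. Each row represents a document from the corpus, each column represents a term from the corpus, and each cell holds the frequency count of a particular term in a particular document.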
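The count matrix can be sketched in a few lines of plain Python. This is an illustration, not scikit-learn's implementation. It assumes lowercasing plus the `\w+` token pattern, which is equivalent to the `token_pattern=r'\w{1,}'` used by `CountVectorizer` in Section 4. Like `CountVectorizer`, it sorts the vocabulary alphabetically to order the columns. The function names and the three toy reviews are made up for this example.

```python
import re


def tokenize(doc):
    # Lowercase, then extract runs of word characters (like token_pattern=r'\w{1,}')
    return re.findall(r"\w+", doc.lower())


def count_matrix(docs):
    # Vocabulary: every distinct token in the corpus, sorted alphabetically
    vocab = sorted({tok for doc in docs for tok in tokenize(doc)})
    index = {tok: j for j, tok in enumerate(vocab)}
    # One row per document, one column per term; each cell holds a raw count
    matrix = [[0] * len(vocab) for _ in docs]
    for i, doc in enumerate(docs):
        for tok in tokenize(doc):
            matrix[i][index[tok]] += 1
    return vocab, matrix


docs = ["Great tablet, great price", "The battery died", "Great battery"]
vocab, matrix = count_matrix(docs)
print(vocab)
for row in matrix:
    print(row)
```

The first document contains "great" twice, so its cell in the `great` column is 2.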

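2.2 TF-IDF Vectors as Features

The TF-IDF score represents the relative importance of a term within a document and across the whole corpus. It has two parts. The first is the standard term frequency (TF). The second is the inverse document frequency (IDF): the logarithm of the total number of documents in the corpus divided by the number of documents that contain the term.

TF(t) = (number of times term t appears in the document) / (total number of terms in the document)

IDF(t) = log_e(total number of documents / number of documents containing term t)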
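The following sketch applies the two formulas above literally to a toy corpus; the function name and documents are illustrative. scikit-learn's `TfidfVectorizer`, used in Section 4, computes a variant by default, so its numbers will differ from this textbook version:

- It uses raw counts for TF.
- It uses a smoothed IDF, ln((1 + n) / (1 + df(t))) + 1.
- It L2-normalizes each row.

```python
import math
import re


def tfidf(docs):
    # Tokenize: lowercase runs of word characters (assumes every document is non-empty)
    tokenized = [re.findall(r"\w+", d.lower()) for d in docs]
    n_docs = len(docs)
    vocab = sorted({t for toks in tokenized for t in toks})
    # Document frequency: how many documents contain each term
    df = {t: sum(t in toks for toks in tokenized) for t in vocab}
    # IDF(t) = log_e(total documents / documents containing t)
    idf = {t: math.log(n_docs / df[t]) for t in vocab}
    rows = []
    for toks in tokenized:
        # TF(t) = occurrences of t in the document / total terms in the document
        rows.append({t: toks.count(t) / len(toks) * idf[t] for t in set(toks)})
    return idf, rows


docs = ["good phone", "good battery", "good battery life"]
idf, rows = tfidf(docs)
print(idf)
print(rows[2])
```

Because "good" appears in every document, its IDF (and so its TF-IDF score) is 0. A term that occurs everywhere does not help tell documents apart.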

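TF-IDF vectors can be generated at different token levels: single words, characters, and n-grams of words.

Word-level TF-IDF: the matrix holds the TF-IDF score of every term in each document.

N-gram-level TF-IDF: an n-gram is a combination of N consecutive terms, and this matrix holds the TF-IDF scores of those n-grams.

Character-level TF-IDF: the matrix holds the TF-IDF scores of character n-grams in the corpus.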
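To make the difference between the word n-gram and character n-gram levels concrete, here is a plain-Python sketch of the units each level extracts. It assumes `ngram_range=(2, 3)`, as in the Section 4 code. It only approximates scikit-learn's analyzers; for example, `analyzer='char'` also collapses runs of whitespace. The helper names are hypothetical.

```python
import re


def word_ngrams(doc, n_min=2, n_max=3):
    # Word n-grams: N consecutive tokens joined by a space
    toks = re.findall(r"\w+", doc.lower())
    return [" ".join(toks[i:i + n])
            for n in range(n_min, n_max + 1)
            for i in range(len(toks) - n + 1)]


def char_ngrams(doc, n_min=2, n_max=3):
    # Character n-grams: N consecutive characters of the lowercased string
    s = doc.lower()
    return [s[i:i + n]
            for n in range(n_min, n_max + 1)
            for i in range(len(s) - n + 1)]


print(word_ngrams("Battery life is great"))
print(char_ngrams("Good"))
```

Character n-grams are robust to spelling variants and inflections (for example "batteries" and "battery" share "bat", "att", "tte"). This may help explain why the character-level features score well in the results of Section 4.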

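2.3 Word Embeddings

Word embeddings represent words and documents with dense vectors. A word's position in the vector space is learned from the contexts in which it appears in text. Embeddings can be trained on the input corpus itself, or taken from a pretrained embedding model such as GloVe, fastText, or Word2Vec. All of these can be downloaded and used through transfer learning.

Using pretrained word embeddings in a model takes four steps:

- Load the pretrained word embedding model.
- Create a tokenizer object.
- Convert the text documents into token sequences and pad them.
- Create a mapping from each token to its embedding.

The pretrained vectors used in this article are the fastText file wiki-news-300d-1M.vec.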
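The four steps can be sketched without Keras. The helpers below are hypothetical stand-ins for `Tokenizer`, `pad_sequences`, and the embedding-matrix loop in Section 4. The 3-dimensional vectors are made-up placeholders for the 300-dimensional vectors in wiki-news-300d-1M.vec. Like the Keras `pad_sequences` defaults, the sketch pads and truncates at the front of the sequence. Like `Tokenizer`, it reserves index 0 for padding.

```python
def build_word_index(texts):
    # Step 2 (tokenizer): rank words by frequency, most frequent first; index 0 is
    # reserved for padding. Ties are broken alphabetically (an assumption of this sketch).
    counts = {}
    for t in texts:
        for w in t.lower().split():
            counts[w] = counts.get(w, 0) + 1
    ranked = sorted(counts, key=lambda w: (-counts[w], w))
    return {w: i + 1 for i, w in enumerate(ranked)}


def to_padded_sequence(text, word_index, maxlen):
    # Step 3: map words to indices, keep the last maxlen tokens, pre-pad with zeros
    seq = [word_index[w] for w in text.lower().split() if w in word_index]
    seq = seq[-maxlen:]
    return [0] * (maxlen - len(seq)) + seq


def build_embedding_matrix(word_index, vectors, dim):
    # Step 4: row i holds the vector of the word with index i; unknown words stay all-zero
    matrix = [[0.0] * dim for _ in range(len(word_index) + 1)]
    for w, i in word_index.items():
        if w in vectors:
            matrix[i] = list(vectors[w])
    return matrix


# Step 1 stand-in: hypothetical 3-dimensional "pretrained" vectors
pretrained = {"great": [0.1, 0.2, 0.3], "battery": [0.4, 0.5, 0.6]}
texts = ["great battery", "great screen great price"]
word_index = build_word_index(texts)
print(word_index)
print(to_padded_sequence("great battery", word_index, 4))
matrix = build_embedding_matrix(word_index, pretrained, 3)
```

Words without a pretrained vector (here `price` and `screen`) keep an all-zero row.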

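2.4 Text/NLP-Based Features

Creating extra text-based features can sometimes improve a model. For example:

- Word count of the document: total number of words in the document
- Character count of the document: total number of characters in the document
- Average word density of the document: average length of the words used in the document
- Punctuation count in the full text: total number of punctuation marks in the document
- Upper-case word count in the full text: number of all-upper-case words in the document
- Title-case word count in the full text: number of words in title case (proper case) in the document
- Frequency distribution of part-of-speech tags:
  - Noun count
  - Verb count
  - Adjective count
  - Adverb count
  - Pronoun count

These features are highly experimental and should be evaluated case by case for the problem at hand.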
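Most of the features listed above need only string operations. The sketch below computes the non-POS features for a single document in plain Python. It uses the same definitions as the `nlp` function in Section 4, including the `+ 1` in the density denominator. The part-of-speech counts are omitted because they need a tagger such as TextBlob.

```python
import string


def text_features(doc):
    words = doc.split()
    char_count = len(doc)
    word_count = len(words)
    return {
        "char_count": char_count,
        "word_count": word_count,
        # +1 avoids division by zero for empty documents
        "word_density": char_count / (word_count + 1),
        "punctuation_count": sum(ch in string.punctuation for ch in doc),
        "title_word_count": sum(w.istitle() for w in words),
        "upper_case_word_count": sum(w.isupper() for w in words),
    }


print(text_features("Great Tablet. I LOVE it!!"))
```

A single-letter word such as "I" counts as both title case and upper case. This is a quirk of `str.istitle()` and `str.isupper()`, not a bug in the sketch.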

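2.5 Topic Models as Features

A topic model is a technique for identifying groups of words (topics) in a collection of documents that carry the most information. Here, LDA is used to generate topic-model features. LDA is an iterative model that starts with a fixed number of topics. Each topic is represented as a distribution over words, and each document as a distribution over topics. Individual tokens carry little meaning on their own, but the word probability distributions expressed by the topics can convey the ideas of a document.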
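After LDA is fitted, each row of the topic-word matrix (scikit-learn's `components_`) holds one topic's weights over the vocabulary. A topic is usually summarized by its highest-weighted words. The sketch below reproduces the `argsort` slicing used in the `lad` function in Section 4. It runs on a hand-made, hypothetical 2-topic matrix so the result can be checked by eye.

```python
import numpy as np


def top_words(topic_word, vocab, n_top_words):
    summaries = []
    for topic_dist in topic_word:
        # argsort is ascending; slicing [:-(n+1):-1] takes the last n indices in
        # reverse order, i.e. the n highest weights first
        top = np.argsort(topic_dist)[:-(n_top_words + 1):-1]
        summaries.append([vocab[j] for j in top])
    return summaries


# Hypothetical topic-word weights: 2 topics over a 5-word vocabulary
vocab = ["battery", "charge", "screen", "bright", "price"]
topic_word = np.array([
    [5.0, 4.0, 0.1, 0.2, 1.0],
    [0.1, 0.3, 6.0, 3.0, 0.5],
])
print(top_words(topic_word, vocab, 2))
```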

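3. Modeling

The final step of the text classification framework is to train a classifier on the features created earlier. Machine learning offers many choices for this final model. We will use the following classifiers for text classification: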

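- Naive Bayes classifier
- Linear classifier
- Support vector machine (SVM)
- Bagging models
- Boosting models
- Shallow neural network
- Deep neural networks
  - Convolutional neural network (CNN)
  - LSTM
  - GRU
  - Bidirectional RNN
  - Recurrent convolutional neural network (RCNN)
  - Other deep neural network variants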

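We now describe and apply each of these models in turn. The `train_model` function in Section 4 is a general-purpose training routine. Its inputs are the classifier, the training feature vectors, the training labels, the validation feature vectors, and the validation labels. It trains the model on the training data and computes accuracy on the validation set.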

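3.1 Naive Bayes

We use the sklearn framework to implement a naive Bayes model on each of the feature sets.

Naive Bayes is a classification technique based on Bayes' theorem that assumes the predictors are independent. In other words, a naive Bayes classifier assumes that, given the class, the presence of a particular feature is unrelated to the presence of any other feature.

For details of the naive Bayes algorithm, see:

https://www.analyticsvidhya.com/blog/2017/09/naive-bayes-explained/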
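To show what the independence assumption means in practice, here is a from-scratch sketch of a multinomial naive Bayes classifier. It uses add-one (Laplace) smoothing, which is also the default smoothing in `MultinomialNB` (`alpha=1.0`). It works on whitespace-tokenized toy documents and labels invented for this example, and it is not the sklearn implementation.

```python
import math
from collections import Counter, defaultdict


def train_nb(docs, labels, alpha=1.0):
    vocab = {w for d in docs for w in d.split()}
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    for d, c in zip(docs, labels):
        word_counts[c].update(d.split())
    # log P(class): fraction of training documents in each class
    log_prior = {c: math.log(n / len(docs)) for c, n in class_counts.items()}
    # log P(word | class) with add-alpha smoothing, so unseen words never get probability 0
    log_lik = {}
    for c in class_counts:
        total = sum(word_counts[c].values())
        log_lik[c] = {w: math.log((word_counts[c][w] + alpha) / (total + alpha * len(vocab)))
                      for w in vocab}
    return log_prior, log_lik


def predict_nb(model, doc):
    log_prior, log_lik = model
    # "Naive" independence assumption: the document log-likelihood is the sum of
    # the per-word log-likelihoods (words outside the vocabulary are skipped)
    scores = {c: log_prior[c] + sum(log_lik[c][w] for w in doc.split() if w in log_lik[c])
              for c in log_prior}
    return max(scores, key=scores.get)


docs = ["great phone great battery", "love the screen", "terrible battery", "broken screen terrible"]
labels = ["pos", "pos", "neg", "neg"]
model = train_nb(docs, labels)
print(predict_nb(model, "great screen"), predict_nb(model, "terrible battery"))
```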

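3.2 Linear Classifier

We implement a linear classifier (logistic regression). Logistic regression measures the relationship between a categorical dependent variable and one or more independent variables by estimating probabilities with the logistic (sigmoid) function. To learn more about logistic regression, see:

https://www.analyticsvidhya.com/blog/2015/10/basics-logistic-regression/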
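Here is a minimal NumPy sketch of binary logistic regression trained by batch gradient descent on the mean log-loss. It is an illustration only. scikit-learn's `LogisticRegression`, used in Section 4, also applies L2 regularization by default and uses a different solver. The learning rate, epoch count, and 1-D toy data here are arbitrary choices.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def add_bias(X):
    # Prepend a column of ones so the first weight acts as the intercept
    return np.hstack([np.ones((X.shape[0], 1)), X])


def fit_logreg(X, y, lr=0.5, epochs=2000):
    Xb = add_bias(X)
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        p = sigmoid(Xb @ w)               # predicted P(y = 1 | x)
        grad = Xb.T @ (p - y) / len(y)    # gradient of the mean log-loss
        w -= lr * grad
    return w


def predict_proba(w, X):
    return sigmoid(add_bias(X) @ w)


X = np.array([[0.0], [1.0], [2.0], [3.0], [4.0], [5.0]])
y = np.array([0, 0, 0, 1, 1, 1])
w = fit_logreg(X, y)
print(predict_proba(w, np.array([[0.5], [4.5]])))
```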

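3.3 Support Vector Machine

A support vector machine (SVM) is a supervised learning algorithm that can be used for classification or regression. The model finds the hyperplane (a line, in two dimensions) that best separates two classes, that is, the one with the largest margin. To learn more about SVMs, see:

https://www.analyticsvidhya.com/blog/2017/09/understaing-support-vector-machine-example-code/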
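The hyperplane idea can be sketched as a linear SVM trained with the Pegasos stochastic sub-gradient method on the regularized hinge loss. Two assumptions should be flagged:

- This is a linear SVM, whereas `svm.SVC()` in Section 4 defaults to an RBF kernel, so its separating hyperplane lives in a kernel-induced feature space.
- The bias is handled by appending a constant feature, which means the bias is regularized too.

Labels must be -1/+1, and the data is a made-up, linearly separable toy set.

```python
import numpy as np


def with_bias(X):
    # Append a constant feature; its weight acts as a (regularized) bias
    return np.hstack([X, np.ones((len(X), 1))])


def fit_linear_svm(X, y, lam=0.01, epochs=50, seed=0):
    # Pegasos: minimize lam/2 * ||w||^2 + mean(max(0, 1 - y * w.x))
    Xa = with_bias(X)
    rng = np.random.default_rng(seed)
    w = np.zeros(Xa.shape[1])
    t = 0
    for _ in range(epochs):
        for i in rng.permutation(len(y)):
            t += 1
            eta = 1.0 / (lam * t)              # decreasing step size
            margin = y[i] * (Xa[i] @ w)        # checked with the current w
            w *= (1 - eta * lam)               # sub-gradient step for the L2 term
            if margin < 1:                     # hinge loss active: move toward the point
                w += eta * y[i] * Xa[i]
    return w


def predict_svm(w, X):
    return np.sign(with_bias(X) @ w)


X = np.array([[2.0, 2.0], [3.0, 3.0], [2.0, 3.0], [-2.0, -2.0], [-3.0, -3.0], [-2.0, -3.0]])
y = np.array([1, 1, 1, -1, -1, -1])
w = fit_linear_svm(X, y)
print(w, predict_svm(w, X))
```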

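3.4 Bagging Model

We implement a random forest model. A random forest is an ensemble model, more precisely a bagging model, and belongs to the family of tree-based models. To learn more about random forests, see:

https://www.analyticsvidhya.com/blog/2014/06/introduction-random-forest-simplified/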
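The core mechanics of bagging, bootstrap sampling plus a majority vote, can be sketched with NumPy. As a simplification, each base learner is a one-level decision stump rather than a full decision tree. Each stump also sees a random subset of the features, which mimics the feature subsampling that makes a random forest "random". All names and the toy data are illustrative.

```python
import numpy as np


def fit_stump(X, y):
    # Exhaustively choose the (feature, threshold, polarity) with the lowest error
    best = None
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j]):
            for pol in (1, -1):
                pred = np.where(pol * (X[:, j] - thr) >= 0, 1, 0)
                err = np.mean(pred != y)
                if best is None or err < best[0]:
                    best = (err, j, thr, pol)
    return best[1:]


def predict_stump(stump, X):
    j, thr, pol = stump
    return np.where(pol * (X[:, j] - thr) >= 0, 1, 0)


def fit_bagging(X, y, n_estimators=25, max_features=1, seed=0):
    rng = np.random.default_rng(seed)
    models = []
    for _ in range(n_estimators):
        rows = rng.integers(0, len(y), len(y))                     # bootstrap sample, with replacement
        cols = rng.choice(X.shape[1], max_features, replace=False)  # random feature subset
        j, thr, pol = fit_stump(X[rows][:, cols], y[rows])
        models.append((cols[j], thr, pol))                         # map back to the original column
    return models


def predict_bagging(models, X):
    votes = np.mean([predict_stump(m, X) for m in models], axis=0)
    return (votes >= 0.5).astype(int)                             # majority vote


X = np.array([[1.0, 1.0], [2.0, 2.0], [1.0, 2.0], [8.0, 8.0], [9.0, 9.0], [8.0, 9.0]])
y = np.array([0, 0, 0, 1, 1, 1])
models = fit_bagging(X, y)
print(predict_bagging(models, np.array([[0.5, 0.5], [9.5, 9.5]])))
```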

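3.5 Boosting Model

We implement an XGBoost model. A boosting model is another kind of tree-based ensemble. Boosting is an ensemble meta-algorithm used mainly to reduce a model's bias. It is a family of machine learning algorithms that turn weak learners into strong ones, where a weak learner is a classifier only slightly correlated with the true class (slightly better than random guessing). To learn more, see:

https://www.analyticsvidhya.com/blog/2016/01/xgboost-algorithm-easy-steps/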
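XGBoost implements gradient boosting, which is too long to sketch here. To illustrate the weak-to-strong idea instead, the block below implements AdaBoost with decision stumps in NumPy. AdaBoost is a different, classic boosting algorithm. The toy labels form an interval that no single stump can fit, but three boosting rounds can.

```python
import numpy as np


def stump_predict(j, thr, pol, X):
    return np.where(pol * (X[:, j] - thr) >= 0, 1, -1)


def fit_weighted_stump(X, y, w):
    # Weak learner: the stump with the lowest *weighted* error
    best = None
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j]):
            for pol in (1, -1):
                err = w[stump_predict(j, thr, pol, X) != y].sum()
                if best is None or err < best[0]:
                    best = (err, j, thr, pol)
    return best


def adaboost(X, y, n_rounds=10):
    w = np.full(len(y), 1.0 / len(y))          # start with uniform sample weights
    ensemble = []
    for _ in range(n_rounds):
        err, j, thr, pol = fit_weighted_stump(X, y, w)
        err = max(err, 1e-10)                  # guard against a perfect stump
        alpha = 0.5 * np.log((1 - err) / err)  # more accurate learners get bigger votes
        w *= np.exp(-alpha * y * stump_predict(j, thr, pol, X))  # up-weight mistakes
        w /= w.sum()
        ensemble.append((alpha, j, thr, pol))
    return ensemble


def predict_adaboost(ensemble, X):
    return np.sign(sum(a * stump_predict(j, t, p, X) for a, j, t, p in ensemble))


X = np.arange(1, 7, dtype=float).reshape(-1, 1)
y = np.array([1, 1, -1, -1, 1, 1])
print(predict_adaboost(adaboost(X, y, n_rounds=1), X))   # one stump: 2 errors
print(predict_adaboost(adaboost(X, y, n_rounds=3), X))   # three stumps: all correct
```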

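3.6 Shallow Neural Network

Neural networks are mathematical models loosely modeled on biological neurons and nervous systems. They are used to discover complex patterns and relationships in labeled data. A shallow neural network consists mainly of three layers of neurons: an input layer, a hidden layer, and an output layer. To learn more about shallow neural networks, see:

https://www.analyticsvidhya.com/blog/2017/05/neural-network-from-scratch-in-python-and-r/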
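A shallow network with one ReLU hidden layer and a softmax output layer can be sketched in NumPy with a hand-written forward pass and backpropagation. The layer sizes, learning rate, and random toy data below are arbitrary assumptions. In Keras, these steps, plus the optimizer, are handled automatically.

```python
import numpy as np

rng = np.random.default_rng(0)


def init(n_in, n_hidden, n_out):
    return {"W1": rng.normal(0, 0.1, (n_in, n_hidden)), "b1": np.zeros(n_hidden),
            "W2": rng.normal(0, 0.1, (n_hidden, n_out)), "b2": np.zeros(n_out)}


def forward(p, X):
    h = np.maximum(0, X @ p["W1"] + p["b1"])              # hidden layer (ReLU)
    logits = h @ p["W2"] + p["b2"]
    e = np.exp(logits - logits.max(axis=1, keepdims=True))
    return h, e / e.sum(axis=1, keepdims=True)            # output layer (softmax)


def loss(p, X, y):
    _, probs = forward(p, X)
    return -np.mean(np.log(probs[np.arange(len(y)), y]))  # mean cross-entropy


def gd_step(p, X, y, lr=0.1):
    h, probs = forward(p, X)
    d_logits = probs.copy()
    d_logits[np.arange(len(y)), y] -= 1
    d_logits /= len(y)                                    # dLoss/dlogits
    d_h = (d_logits @ p["W2"].T) * (h > 0)                # backprop through ReLU (uses old W2)
    p["W2"] -= lr * h.T @ d_logits
    p["b2"] -= lr * d_logits.sum(axis=0)
    p["W1"] -= lr * X.T @ d_h
    p["b1"] -= lr * d_h.sum(axis=0)


X = rng.random((8, 5))
y = np.array([0, 1, 2, 0, 1, 2, 0, 1])
params = init(5, 16, 3)
before = loss(params, X, y)
for _ in range(300):
    gd_step(params, X, y)
after = loss(params, X, y)
print(before, after)
```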

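3.7 Deep Neural Networks

Deep neural networks are more complex neural networks whose hidden layers perform operations more complex than simple sigmoid or ReLU activations. Different kinds of deep learning models can be applied to text classification.

Convolutional Neural Network

In a convolutional neural network, convolutions over the input layer are used to compute the output. This gives local connections, where each region of the input is connected to a neuron in the output. Each layer applies different filters and combines their results.

To learn more about convolutional neural networks, see:

https://www.analyticsvidhya.com/blog/2017/06/architecture-of-convolutional-neural-networks-simplified-demystified/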
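The local connectivity described above can be sketched in NumPy. A 1-D convolution slides each filter over windows of consecutive word vectors (width 3, as in the convolutional layer of Section 4) and applies ReLU. Global max pooling then keeps each filter's strongest response. The hand-made embeddings and filters are hypothetical and chosen so the output is easy to verify.

```python
import numpy as np


def conv1d_valid(x, kernels, bias):
    # x: (seq_len, emb_dim); kernels: (width, emb_dim, n_filters); bias: (n_filters,)
    width = kernels.shape[0]
    steps = x.shape[0] - width + 1
    out = np.empty((steps, kernels.shape[2]))
    for t in range(steps):
        window = x[t:t + width]            # `width` consecutive word vectors
        out[t] = np.tensordot(window, kernels, axes=([0, 1], [0, 1])) + bias
    return np.maximum(out, 0)              # ReLU


def global_max_pool(feature_map):
    return feature_map.max(axis=0)         # strongest response of each filter over time


x = np.zeros((6, 2))
x[2:5, 0] = 1.0                 # words 2-4 share a strong embedding feature 0
kernels = np.zeros((3, 2, 2))
kernels[:, 0, 0] = 1.0          # filter 0 sums feature 0 over the window
kernels[:, 1, 1] = 1.0          # filter 1 sums feature 1 over the window
fm = conv1d_valid(x, kernels, np.zeros(2))
print(fm)
print(global_max_pool(fm))
```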

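Recurrent Neural Network: LSTM

In a feed-forward neural network, activations flow in one direction only, from input to output. A recurrent neural network instead feeds its hidden state at each time step back into the network at the next step. This creates loops in the architecture that act as the neurons' "memory state", letting them remember what they have learned so far. This memory gives RNNs an advantage over traditional neural networks, but the architecture also causes the vanishing gradient problem. Gradients shrink as they are propagated back through many time steps, which makes it very hard to learn parameters that depend on early inputs. To address this, a new type of RNN called LSTM (Long Short-Term Memory) was developed.

To learn more about LSTMs, see:

https://www.analyticsvidhya.com/blog/2017/12/fundamentals-of-deep-learning-introduction-to-lstm/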
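A single-unit NumPy sketch of the LSTM recurrence shows how the gates let the cell keep information. The weights are hand-picked and hypothetical: the input gate opens only when the input is 1, and the forget gate is either almost fully open or almost fully closed. The gate ordering inside the stacked weight matrices is a convention of this sketch.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_forward(xs, W, U, b, hidden):
    # W: (input_dim, 4*hidden), U: (hidden, 4*hidden), b: (4*hidden,)
    # Gate order in this sketch: input, forget, candidate, output
    h = np.zeros(hidden)
    c = np.zeros(hidden)
    for x in xs:
        z = x @ W + h @ U + b
        i = sigmoid(z[:hidden])                  # input gate: how much new content to write
        f = sigmoid(z[hidden:2 * hidden])        # forget gate: how much old cell state to keep
        g = np.tanh(z[2 * hidden:3 * hidden])    # candidate content
        o = sigmoid(z[3 * hidden:])              # output gate
        c = f * c + i * g                        # additive cell update
        h = o * np.tanh(c)
    return h, c


W = np.array([[20.0, 0.0, 10.0, 0.0]])           # input gate and candidate react to x
U = np.zeros((1, 4))
xs = np.array([[1.0], [0.0], [0.0], [0.0]])      # a signal at step 1, then silence

h, c = lstm_forward(xs, W, U, np.array([-10.0, 20.0, 0.0, 20.0]), hidden=1)     # forget gate open
h2, c2 = lstm_forward(xs, W, U, np.array([-10.0, -20.0, 0.0, 20.0]), hidden=1)  # forget gate closed
print(c, c2)
```

With the forget gate open, the value written at step 1 survives three more steps. With it closed, the cell state is wiped.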

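Recurrent Neural Network: GRU

The gated recurrent unit (GRU) is another form of recurrent neural network. Here we add a GRU layer to the network in place of the LSTM layer.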
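Similarly, here is a NumPy sketch of a GRU cell in its original formulation, where the reset gate is applied before the recurrent matrix product. Keras's `GRU` applies it after by default (`reset_after=True`). With hand-picked, hypothetical weights, the update gate either preserves the state written at the first step or lets it be overwritten.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def gru_forward(xs, W, U, b, hidden):
    # W: (input_dim, 3*hidden), U: (hidden, 3*hidden), b: (3*hidden,)
    # Gate order in this sketch: update z, reset r, candidate n
    h = np.zeros(hidden)
    for x in xs:
        xw = x @ W + b
        z = sigmoid(xw[:hidden] + h @ U[:, :hidden])                  # update gate
        r = sigmoid(xw[hidden:2 * hidden] + h @ U[:, hidden:2 * hidden])  # reset gate
        n = np.tanh(xw[2 * hidden:] + (r * h) @ U[:, 2 * hidden:])    # candidate state
        h = (1 - z) * n + z * h       # z near 1 keeps the old state, z near 0 overwrites it
    return h


U = np.zeros((1, 3))
xs = np.array([[1.0], [0.0], [0.0], [0.0]])

# Update gate closes (z ~ 0) only when x = 1, so the state is written once, then kept
h_keep = gru_forward(xs, np.array([[-20.0, 0.0, 10.0]]), U, np.array([10.0, 0.0, 0.0]), hidden=1)
# Update gate always ~0: every step overwrites the state with the new candidate
h_forget = gru_forward(xs, np.array([[0.0, 0.0, 10.0]]), U, np.array([-10.0, 0.0, 0.0]), hidden=1)
print(h_keep, h_forget)
```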

Bidirectional RNN

An RNN layer can also be wrapped in a bidirectional layer. Here we wrap the GRU layer in a bidirectional RNN.
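A bidirectional wrapper runs one RNN over the sequence from left to right and another from right to left, then merges their outputs. Keras's `Bidirectional` concatenates them by default (`merge_mode='concat'`). For brevity, the NumPy sketch below uses a plain tanh RNN instead of a GRU, with random illustrative weights.

```python
import numpy as np


def rnn_final_state(xs, W, U, b):
    # Plain (Elman) RNN: h_t = tanh(x_t W + h_{t-1} U + b)
    h = np.zeros(U.shape[0])
    for x in xs:
        h = np.tanh(x @ W + h @ U + b)
    return h


def bidirectional(xs, fwd_params, bwd_params):
    h_f = rnn_final_state(xs, *fwd_params)        # reads left to right
    h_b = rnn_final_state(xs[::-1], *bwd_params)  # reads right to left
    return np.concatenate([h_f, h_b])             # 'concat' merge


rng = np.random.default_rng(0)
params = (rng.normal(size=(4, 3)), 0.5 * rng.normal(size=(3, 3)), np.zeros(3))
xs = rng.normal(size=(6, 4))                      # 6 time steps, 4-dimensional inputs
out = bidirectional(xs, params, params)
rev = bidirectional(xs[::-1], params, params)
print(out.shape)
```

With shared weights, reversing the input simply swaps the two halves of the output. This shows that each half summarizes the sequence from a different direction.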

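Recurrent Convolutional Neural Network

Once the basic architectures have been tried, you can try different variants of these layers, such as the recurrent convolutional neural network (RCNN). Other variants include:

- Hierarchical attention networks
- Sequence-to-sequence models with attention
- Bidirectional recurrent convolutional neural networks
- CNNs and RNNs with more layers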

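Further Improving the Performance of Text Classification Models

The framework above can be applied to many text classification problems, but a few refinements to it can yield higher accuracy. For example, the following tips can improve text classification models and this framework:

- Text cleaning: cleaning helps reduce noise in text data, such as stopwords, punctuation, and suffix variations. This article explains how to clean text data:

  https://www.analyticsvidhya.com/blog/2014/11/text-data-cleaning-steps-python/

- Combining text/NLP features with text feature vectors: in the feature engineering stage, combining the generated text/NLP features with the text feature vectors may improve the classifier's accuracy.

- Hyperparameter tuning: tuning is an important step. Well-tuned parameters, such as tree depth, number of leaf nodes, and network parameters, give the best-fitting model.

- Ensemble models: stacking different models and blending their outputs can further improve results. To learn more about model ensembling, see:

  https://www.analyticsvidhya.com/blog/2015/08/introduction-ensemble-learning/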
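Here is a minimal plain-Python sketch of the cleaning steps mentioned in the first tip: lowercasing, removing punctuation and stopwords, and stripping a few suffixes. The stopword list and suffix rules are deliberately tiny, hypothetical stand-ins. A real pipeline would use a full stopword list and a proper stemmer or lemmatizer (for example from NLTK).

```python
import string

# Tiny illustrative stopword list (an assumption; real lists are much longer)
STOPWORDS = {"a", "an", "the", "is", "it", "and", "this", "to", "of", "for"}


def strip_suffix(word):
    # Very crude suffix stripping, only to illustrate what a stemmer does
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def clean_text(doc):
    doc = doc.lower()
    doc = doc.translate(str.maketrans("", "", string.punctuation))  # drop punctuation
    tokens = [w for w in doc.split() if w not in STOPWORDS]         # drop stopwords
    return " ".join(strip_suffix(w) for w in tokens)


print(clean_text("This tablet is AMAZING, and the batteries lasted for days!"))
```

The output "tablet amaz batterie last day" also shows why naive suffix rules are risky ("batteries" becomes "batterie"). This is one reason to prefer an established stemmer or lemmatizer.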

4. Code Implementation

```python
# -*- coding: utf-8 -*-
# Libraries for dataset preprocessing, feature engineering and model training
import string

import numpy as np
import pandas as pd
import textblob
import tensorflow as tf
import xgboost
from sklearn import decomposition, ensemble, linear_model, metrics, model_selection, naive_bayes, preprocessing, svm
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer
# Assumes TensorFlow 2.x with the legacy tf.keras (Keras 2) API, which still provides
# tensorflow.keras.preprocessing.text and tensorflow.keras.preprocessing.sequence
from tensorflow.keras import layers, models, optimizers
from tensorflow.keras.preprocessing import sequence, text


# Load the dataset
def loadfile():
    # Alternative loader for a three-class (positive / neutral / negative) corpus:
    # neg = pd.read_csv('./testdata/neg.csv', header=None, index_col=None)
    # pos = pd.read_csv('../testdata/pos.csv', header=None, index_col=None, on_bad_lines='skip')
    # neu = pd.read_csv('../testdata/neutral.csv', header=None, index_col=None)
    # # np.concatenate joins several arrays in one call
    # combined = np.concatenate((pos[0], neu[0], neg[0]))
    # y = np.concatenate((np.ones(len(pos), dtype=int), np.zeros(len(neu), dtype=int),
    #                     -1 * np.ones(len(neg), dtype=int)))
    # trainDF = pd.DataFrame({'text': combined, 'label': y})

    df = pd.read_csv('./corpus/1429_1.csv')
    # Drop rows whose review text or rating is missing. dropna returns a new
    # DataFrame, so the result must be assigned back.
    df = df.dropna(subset=['reviews.text', 'reviews.rating'])

    # Build a DataFrame with a `text` column and a `label` column
    trainDF = pd.DataFrame()
    trainDF['text'] = df['reviews.text'].astype(str)
    trainDF['label'] = df['reviews.rating']
    return trainDF


# Split the dataset
def splitdata(trainDF):
    # Split into training and validation sets (75% / 25% by default)
    train_x, valid_x, train_y, valid_y = model_selection.train_test_split(trainDF['text'], trainDF['label'])

    # Encode the labels as integer targets. Fit the encoder once on all labels and
    # reuse it for both splits, so each rating maps to the same integer everywhere.
    encoder = preprocessing.LabelEncoder()
    encoder.fit(trainDF['label'])
    train_y = encoder.transform(train_y)
    valid_y = encoder.transform(valid_y)
    return train_x, valid_x, train_y, valid_y
```

```python
# Feature extraction
'''
Count vectors as features
TF-IDF vectors as features
    word level
    n-gram level (multiple words)
    character level
Word embeddings as features
Text/NLP-based features
Topic models as features
'''


# 1. Count vectors as features: each cell is a raw term frequency
def countvectorizer(trainDF, train_x, valid_x):
    # Create a count vectorizer and learn the vocabulary from the whole corpus
    count_vect = CountVectorizer(analyzer='word', token_pattern=r'\w{1,}')
    count_vect.fit(trainDF['text'])

    # Transform the training and validation sets with the fitted vectorizer
    xtrain_count = count_vect.transform(train_x)
    xvalid_count = count_vect.transform(valid_x)
    return xtrain_count, xvalid_count


# 2. TF-IDF vectors as features: term frequency weighted by inverse document frequency.
# scikit-learn's default IDF is smoothed, ln((1 + n) / (1 + df(t))) + 1, and each row
# is L2-normalized.
def tfidfvectorizer(trainDF, train_x, valid_x):
    # Word-level TF-IDF
    tfidf_vect = TfidfVectorizer(analyzer='word', token_pattern=r'\w{1,}', max_features=5000)
    tfidf_vect.fit(trainDF['text'])
    xtrain_tfidf = tfidf_vect.transform(train_x)
    xvalid_tfidf = tfidf_vect.transform(valid_x)

    # N-gram-level TF-IDF (word bigrams and trigrams)
    tfidf_vect_ngram = TfidfVectorizer(analyzer='word', token_pattern=r'\w{1,}', ngram_range=(2, 3),
                                       max_features=5000)
    tfidf_vect_ngram.fit(trainDF['text'])
    xtrain_tfidf_ngram = tfidf_vect_ngram.transform(train_x)
    xvalid_tfidf_ngram = tfidf_vect_ngram.transform(valid_x)

    # Character-level TF-IDF (character bigrams and trigrams);
    # token_pattern only applies to analyzer='word', so it is not passed here
    tfidf_vect_ngram_chars = TfidfVectorizer(analyzer='char', ngram_range=(2, 3), max_features=5000)
    tfidf_vect_ngram_chars.fit(trainDF['text'])
    xtrain_tfidf_ngram_chars = tfidf_vect_ngram_chars.transform(train_x)
    xvalid_tfidf_ngram_chars = tfidf_vect_ngram_chars.transform(valid_x)

    return (xtrain_tfidf, xvalid_tfidf, xtrain_tfidf_ngram, xvalid_tfidf_ngram,
            xtrain_tfidf_ngram_chars, xvalid_tfidf_ngram_chars)
```

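```python
# 3. Word embeddings
def embeddings(trainDF, train_x, valid_x):
    # Load the pretrained fastText vectors. The .vec file is plain text, so open it in
    # text mode: in binary mode ('rb') the keys would be bytes and would never match
    # the str keys of the tokenizer's word_index.
    embeddings_index = {}
    with open('../testdata/wiki-news-300d-1M.vec', encoding='utf-8', errors='ignore') as f:
        next(f)  # the first line of a .vec file is the header "<vocab size> <dimension>"
        for line in f:
            values = line.rstrip().split(' ')
            embeddings_index[values[0]] = np.asarray(values[1:], dtype='float32')

    # Create a tokenizer
    token = text.Tokenizer()
    token.fit_on_texts(trainDF['text'])
    word_index = token.word_index

    # Convert the texts to token sequences and pad them to the same length (70)
    train_seq_x = sequence.pad_sequences(token.texts_to_sequences(train_x), maxlen=70)
    valid_seq_x = sequence.pad_sequences(token.texts_to_sequences(valid_x), maxlen=70)

    # Build the token-to-embedding matrix: row 0 is reserved for padding, and words
    # missing from the pretrained vectors keep an all-zero row
    embedding_matrix = np.zeros((len(word_index) + 1, 300))
    for word, i in word_index.items():
        embedding_vector = embeddings_index.get(word)
        if embedding_vector is not None:
            embedding_matrix[i] = embedding_vector
    return word_index, embedding_matrix, train_seq_x, valid_seq_x
```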

```python
# 4. Text/NLP-based features
def nlp(trainDF, train_x, valid_x):
    trainDF['char_count'] = trainDF['text'].apply(len)
    trainDF['word_count'] = trainDF['text'].apply(lambda x: len(x.split()))
    trainDF['word_density'] = trainDF['char_count'] / (trainDF['word_count'] + 1)
    trainDF['punctuation_count'] = trainDF['text'].apply(
        lambda x: len("".join(_ for _ in x if _ in string.punctuation)))
    trainDF['title_word_count'] = trainDF['text'].apply(lambda x: len([wrd for wrd in x.split() if wrd.istitle()]))
    trainDF['upper_case_word_count'] = trainDF['text'].apply(
        lambda x: len([wrd for wrd in x.split() if wrd.isupper()]))

    pos_family = {
        'noun': ['NN', 'NNS', 'NNP', 'NNPS'],
        'pron': ['PRP', 'PRP$', 'WP', 'WP$'],
        'verb': ['VB', 'VBD', 'VBG', 'VBN', 'VBP', 'VBZ'],
        'adj': ['JJ', 'JJR', 'JJS'],
        'adv': ['RB', 'RBR', 'RBS', 'WRB']
    }

    # Count the words in a text whose POS tag belongs to the given family
    def check_pos_tag(x, flag):
        cnt = 0
        try:
            wiki = textblob.TextBlob(x)
            for _, tag in wiki.tags:  # tags is a list of (word, tag) pairs
                if tag in pos_family[flag]:
                    cnt += 1
        except Exception:
            # Tagging can fail, e.g. if TextBlob's NLTK corpora are not downloaded
            pass
        return cnt

    trainDF['noun_count'] = trainDF['text'].apply(lambda x: check_pos_tag(x, 'noun'))
    trainDF['verb_count'] = trainDF['text'].apply(lambda x: check_pos_tag(x, 'verb'))
    trainDF['adj_count'] = trainDF['text'].apply(lambda x: check_pos_tag(x, 'adj'))
    trainDF['adv_count'] = trainDF['text'].apply(lambda x: check_pos_tag(x, 'adv'))
    trainDF['pron_count'] = trainDF['text'].apply(lambda x: check_pos_tag(x, 'pron'))
    return trainDF


# 5. Topic model (LDA) as features
def lad(trainDF, train_x, valid_x):
    count_vect = CountVectorizer(analyzer='word', token_pattern=r'\w{1,}')
    count_vect.fit(trainDF['text'])

    # Transform the training and validation sets with the count vectorizer
    xtrain_count = count_vect.transform(train_x)
    xvalid_count = count_vect.transform(valid_x)

    # Train an LDA topic model with 20 topics
    lda_model = decomposition.LatentDirichletAllocation(n_components=20, learning_method='online', max_iter=20)
    X_topics = lda_model.fit_transform(xtrain_count)   # document-topic distributions (training set)
    X_topics_valid = lda_model.transform(xvalid_count)  # document-topic distributions (validation set)
    topic_word = lda_model.components_                  # topic-word weights
    # get_feature_names() was removed in scikit-learn 1.2; use get_feature_names_out()
    vocab = count_vect.get_feature_names_out()

    # Summarize each topic by its 10 highest-weighted words
    n_top_words = 10
    topic_summaries = []
    for i, topic_dist in enumerate(topic_word):
        topic_words = np.array(vocab)[np.argsort(topic_dist)][:-(n_top_words + 1):-1]
        topic_summaries.append(' '.join(topic_words))
    return X_topics, X_topics_valid, topic_summaries
```

```python
# Modeling
'''
Naive Bayes classifier
Linear classifier
Support vector machine (SVM)
Bagging models
Boosting models
Shallow neural network
Deep neural networks
    Convolutional neural network (CNN)
    LSTM
    GRU
    Bidirectional RNN
    Recurrent convolutional neural network (RCNN)
    Other deep neural network variants
'''


def train_model(classifier, feature_vector_train, label_train, feature_vector_valid, label_valid, is_neural_net=False):
    # Fit the classifier on the training set
    classifier.fit(feature_vector_train, label_train)

    # Predict labels for the validation set
    predictions = classifier.predict(feature_vector_valid)

    if is_neural_net:
        # A Keras model returns one probability per class;
        # argmax returns the index of the largest value along the last axis
        predictions = predictions.argmax(axis=-1)

    return metrics.accuracy_score(label_valid, predictions)
```

```python
# 1. Naive Bayes
def multinomialNB(xtrain_count, train_y, xvalid_count, valid_y, xtrain_tfidf, xvalid_tfidf, xtrain_tfidf_ngram,
                  xvalid_tfidf_ngram, xtrain_tfidf_ngram_chars, xvalid_tfidf_ngram_chars):
    # Naive Bayes on count vectors
    accuracy = train_model(naive_bayes.MultinomialNB(), xtrain_count, train_y, xvalid_count, valid_y)
    print("NB, Count Vectors: ", accuracy)

    # Naive Bayes on word-level TF-IDF vectors
    accuracy = train_model(naive_bayes.MultinomialNB(), xtrain_tfidf, train_y, xvalid_tfidf, valid_y)
    print("NB, WordLevel TF-IDF: ", accuracy)

    # Naive Bayes on n-gram-level TF-IDF vectors
    accuracy = train_model(naive_bayes.MultinomialNB(), xtrain_tfidf_ngram, train_y, xvalid_tfidf_ngram, valid_y)
    print("NB, N-Gram Vectors: ", accuracy)

    # Naive Bayes on character-level TF-IDF vectors
    accuracy = train_model(naive_bayes.MultinomialNB(), xtrain_tfidf_ngram_chars, train_y,
                           xvalid_tfidf_ngram_chars, valid_y)
    print("NB, CharLevel Vectors: ", accuracy)

# Output of the original run:
# NB, Count Vectors:  0.7361532625189682
# NB, WordLevel TF-IDF:  0.6350531107738998
# NB, N-Gram Vectors:  0.4178679817905918
# NB, CharLevel Vectors:  0.7716236722306525


# 2. Linear classifier
def logisticRegression(xtrain_count, train_y, xvalid_count, valid_y, xtrain_tfidf, xvalid_tfidf, xtrain_tfidf_ngram,
                       xvalid_tfidf_ngram, xtrain_tfidf_ngram_chars, xvalid_tfidf_ngram_chars):
    # Linear classifier on count vectors
    accuracy = train_model(linear_model.LogisticRegression(), xtrain_count, train_y, xvalid_count, valid_y)
    print("LR, Count Vectors: ", accuracy)

    # Linear classifier on word-level TF-IDF vectors
    accuracy = train_model(linear_model.LogisticRegression(), xtrain_tfidf, train_y, xvalid_tfidf, valid_y)
    print("LR, WordLevel TF-IDF: ", accuracy)

    # Linear classifier on n-gram-level TF-IDF vectors
    accuracy = train_model(linear_model.LogisticRegression(), xtrain_tfidf_ngram, train_y, xvalid_tfidf_ngram, valid_y)
    print("LR, N-Gram Vectors: ", accuracy)

    # Linear classifier on character-level TF-IDF vectors
    accuracy = train_model(linear_model.LogisticRegression(), xtrain_tfidf_ngram_chars, train_y,
                           xvalid_tfidf_ngram_chars, valid_y)
    print("LR, CharLevel Vectors: ", accuracy)

# Output of the original run:
# LR, Count Vectors:  0.7264795144157815
# LR, WordLevel TF-IDF:  0.6432094081942337
# LR, N-Gram Vectors:  0.4954476479514416
# LR, CharLevel Vectors:  0.8875189681335357
```

```python
# 3. Support vector machine (svm.SVC uses an RBF kernel by default)
def svc(xtrain_count, train_y, xvalid_count, valid_y, xtrain_tfidf, xvalid_tfidf, xtrain_tfidf_ngram,
        xvalid_tfidf_ngram, xtrain_tfidf_ngram_chars, xvalid_tfidf_ngram_chars):
    # SVM on count vectors
    accuracy = train_model(svm.SVC(), xtrain_count, train_y, xvalid_count, valid_y)
    print("SVM, Count Vectors: ", accuracy)

    # SVM on word-level TF-IDF vectors
    accuracy = train_model(svm.SVC(), xtrain_tfidf, train_y, xvalid_tfidf, valid_y)
    print("SVM, WordLevel TF-IDF: ", accuracy)

    # SVM on n-gram-level TF-IDF vectors
    accuracy = train_model(svm.SVC(), xtrain_tfidf_ngram, train_y, xvalid_tfidf_ngram, valid_y)
    print("SVM, N-Gram Vectors: ", accuracy)

    # SVM on character-level TF-IDF vectors
    accuracy = train_model(svm.SVC(), xtrain_tfidf_ngram_chars, train_y, xvalid_tfidf_ngram_chars, valid_y)
    print("SVM, CharLevel Vectors: ", accuracy)

# Output of the original run:
# SVM, Count Vectors:  0.6875948406676783
# SVM, WordLevel TF-IDF:  0.6488998482549317
# SVM, N-Gram Vectors:  0.49753414264036416
# SVM, CharLevel Vectors:  0.9062974203338392


# 4. Bagging model: random forest
def randomForestClassifier(xtrain_count, train_y, xvalid_count, valid_y, xtrain_tfidf, xvalid_tfidf,
                           xtrain_tfidf_ngram, xvalid_tfidf_ngram, xtrain_tfidf_ngram_chars,
                           xvalid_tfidf_ngram_chars):
    # Random forest on count vectors
    accuracy = train_model(ensemble.RandomForestClassifier(), xtrain_count, train_y, xvalid_count, valid_y)
    print("RF, Count Vectors: ", accuracy)

    # Random forest on word-level TF-IDF vectors
    accuracy = train_model(ensemble.RandomForestClassifier(), xtrain_tfidf, train_y, xvalid_tfidf, valid_y)
    print("RF, WordLevel TF-IDF: ", accuracy)

    # Random forest on n-gram-level TF-IDF vectors
    accuracy = train_model(ensemble.RandomForestClassifier(), xtrain_tfidf_ngram, train_y, xvalid_tfidf_ngram, valid_y)
    print("RF, N-Gram Vectors: ", accuracy)

    # Random forest on character-level TF-IDF vectors
    accuracy = train_model(ensemble.RandomForestClassifier(), xtrain_tfidf_ngram_chars, train_y,
                           xvalid_tfidf_ngram_chars, valid_y)
    print("RF, CharLevel Vectors: ", accuracy)

# Output of the original run:
# RF, Count Vectors:  0.6684370257966616
# RF, WordLevel TF-IDF:  0.636380880121396
# RF, N-Gram Vectors:  0.49298179059180575
# RF, CharLevel Vectors:  0.9148330804248862
```

```python
# 5. Boosting model: XGBoost
def xgbClassifier(xtrain_count, train_y, xvalid_count, valid_y, xtrain_tfidf, xvalid_tfidf, xtrain_tfidf_ngram,
                  xvalid_tfidf_ngram, xtrain_tfidf_ngram_chars, xvalid_tfidf_ngram_chars):
    # tocsc() converts each matrix to compressed sparse column (CSC) format
    # XGBoost on count vectors
    accuracy = train_model(xgboost.XGBClassifier(), xtrain_count.tocsc(), train_y, xvalid_count.tocsc(), valid_y)
    print("Xgb, Count Vectors: ", accuracy)

    # XGBoost on word-level TF-IDF vectors
    accuracy = train_model(xgboost.XGBClassifier(), xtrain_tfidf.tocsc(), train_y, xvalid_tfidf.tocsc(), valid_y)
    print("Xgb, WordLevel TF-IDF: ", accuracy)

    # XGBoost on n-gram-level TF-IDF vectors
    accuracy = train_model(xgboost.XGBClassifier(), xtrain_tfidf_ngram.tocsc(), train_y,
                           xvalid_tfidf_ngram.tocsc(), valid_y)
    print("Xgb, N-Gram Vectors: ", accuracy)

    # XGBoost on character-level TF-IDF vectors
    accuracy = train_model(xgboost.XGBClassifier(), xtrain_tfidf_ngram_chars.tocsc(), train_y,
                           xvalid_tfidf_ngram_chars.tocsc(), valid_y)
    print("Xgb, CharLevel Vectors: ", accuracy)

# Output of the original run:
# Xgb, Count Vectors:  0.5379362670713201
# Xgb, WordLevel TF-IDF:  0.5390743550834598
# Xgb, N-Gram Vectors:  0.412556904400607
# Xgb, CharLevel Vectors:  0.9099013657056145


# 6. Shallow neural network
def nn(xtrain_count, train_y, xvalid_count, valid_y, xtrain_tfidf, xvalid_tfidf, xtrain_tfidf_ngram,
       xvalid_tfidf_ngram, xtrain_tfidf_ngram_chars, xvalid_tfidf_ngram_chars):
    # The ratings are multi-class, so the output layer needs one softmax unit per
    # class (labels are encoded as 0..num_classes-1) rather than a single sigmoid unit
    num_classes = int(max(train_y.max(), valid_y.max())) + 1

    def create_model_architecture(input_size):
        # Input layer (accepts sparse TF-IDF vectors)
        input_layer = layers.Input((input_size,), sparse=True)
        # Hidden layer
        hidden_layer = layers.Dense(100, activation="relu")(input_layer)
        # Output layer
        output_layer = layers.Dense(num_classes, activation="softmax")(hidden_layer)

        classifier = models.Model(inputs=input_layer, outputs=output_layer)
        classifier.compile(optimizer=optimizers.Adam(), loss='sparse_categorical_crossentropy')
        return classifier

    # TensorFlow's sparse ops expect indices in canonical (row-major) order. Sorting the
    # CSR indices before handing the matrices to Keras is expected to avoid the
    # "InvalidArgumentError: ... is out of order" error.
    x_train = xtrain_tfidf_ngram.astype(np.float32).tocsr()
    x_train.sort_indices()
    x_valid = xvalid_tfidf_ngram.astype(np.float32).tocsr()
    x_valid.sort_indices()

    classifier = create_model_architecture(x_train.shape[1])
    accuracy = train_model(classifier, x_train, train_y, x_valid, valid_y, is_neural_net=True)
    print("NN, Ngram Level TF IDF Vectors", accuracy)
```

```python
# 7. Convolutional neural network
def cnn(word_index, embedding_matrix, train_seq_x, train_y, valid_seq_x, valid_y):
    # One softmax unit per rating class (labels are encoded as 0..num_classes-1)
    num_classes = int(max(train_y.max(), valid_y.max())) + 1

    def create_cnn(word_index, embedding_matrix):
        # Input layer: padded token sequences of length 70
        input_layer = layers.Input((70,))

        # Word embedding layer, initialized with the pretrained vectors and frozen
        embedding_layer = layers.Embedding(len(word_index) + 1, 300,
                                           embeddings_initializer=tf.keras.initializers.Constant(embedding_matrix),
                                           trainable=False)(input_layer)
        embedding_layer = layers.SpatialDropout1D(0.3)(embedding_layer)

        # Convolutional layer: 100 filters over windows of 3 tokens
        conv_layer = layers.Conv1D(100, 3, activation="relu")(embedding_layer)

        # Global max pooling over the sequence
        pooling_layer = layers.GlobalMaxPool1D()(conv_layer)

        # Output layers
        output_layer1 = layers.Dense(50, activation="relu")(pooling_layer)
        output_layer1 = layers.Dropout(0.25)(output_layer1)
        output_layer2 = layers.Dense(num_classes, activation="softmax")(output_layer1)

        # Compile the model
        model = models.Model(inputs=input_layer, outputs=output_layer2)
        model.compile(optimizer=optimizers.Adam(), loss='sparse_categorical_crossentropy')
        return model

    classifier = create_cnn(word_index, embedding_matrix)
    accuracy = train_model(classifier, train_seq_x, train_y, valid_seq_x, valid_y, is_neural_net=True)
    print("CNN, Word Embeddings", accuracy)


# 8. Recurrent neural network: LSTM
def lstm(word_index, embedding_matrix, train_seq_x, train_y, valid_seq_x, valid_y):
    num_classes = int(max(train_y.max(), valid_y.max())) + 1

    def create_rnn_lstm():
        # Input layer
        input_layer = layers.Input((70,))

        # Word embedding layer (pretrained, frozen)
        embedding_layer = layers.Embedding(len(word_index) + 1, 300,
                                           embeddings_initializer=tf.keras.initializers.Constant(embedding_matrix),
                                           trainable=False)(input_layer)
        embedding_layer = layers.SpatialDropout1D(0.3)(embedding_layer)

        # LSTM layer
        lstm_layer = layers.LSTM(100)(embedding_layer)

        # Output layers
        output_layer1 = layers.Dense(50, activation="relu")(lstm_layer)
        output_layer1 = layers.Dropout(0.25)(output_layer1)
        output_layer2 = layers.Dense(num_classes, activation="softmax")(output_layer1)

        # Compile the model
        model = models.Model(inputs=input_layer, outputs=output_layer2)
        model.compile(optimizer=optimizers.Adam(), loss='sparse_categorical_crossentropy')
        return model

    classifier = create_rnn_lstm()
    accuracy = train_model(classifier, train_seq_x, train_y, valid_seq_x, valid_y, is_neural_net=True)
    print("RNN-LSTM, Word Embeddings", accuracy)
```

```python
# 9. Recurrent neural network: GRU
def gru(word_index, embedding_matrix, train_seq_x, train_y, valid_seq_x, valid_y):
    num_classes = int(max(train_y.max(), valid_y.max())) + 1

    def create_rnn_gru():
        # Input layer
        input_layer = layers.Input((70,))

        # Word embedding layer (pretrained, frozen)
        embedding_layer = layers.Embedding(len(word_index) + 1, 300,
                                           embeddings_initializer=tf.keras.initializers.Constant(embedding_matrix),
                                           trainable=False)(input_layer)
        embedding_layer = layers.SpatialDropout1D(0.3)(embedding_layer)

        # GRU layer
        gru_layer = layers.GRU(100)(embedding_layer)

        # Output layers
        output_layer1 = layers.Dense(50, activation="relu")(gru_layer)
        output_layer1 = layers.Dropout(0.25)(output_layer1)
        output_layer2 = layers.Dense(num_classes, activation="softmax")(output_layer1)

        # Compile the model
        model = models.Model(inputs=input_layer, outputs=output_layer2)
        model.compile(optimizer=optimizers.Adam(), loss='sparse_categorical_crossentropy')
        return model

    classifier = create_rnn_gru()
    accuracy = train_model(classifier, train_seq_x, train_y, valid_seq_x, valid_y, is_neural_net=True)
    print("RNN-GRU, Word Embeddings", accuracy)


# 10. Bidirectional RNN
def rnn(word_index, embedding_matrix, train_seq_x, train_y, valid_seq_x, valid_y):
    num_classes = int(max(train_y.max(), valid_y.max())) + 1

    def create_bidirectional_rnn():
        # Input layer
        input_layer = layers.Input((70,))

        # Word embedding layer (pretrained, frozen)
        embedding_layer = layers.Embedding(len(word_index) + 1, 300,
                                           embeddings_initializer=tf.keras.initializers.Constant(embedding_matrix),
                                           trainable=False)(input_layer)
        embedding_layer = layers.SpatialDropout1D(0.3)(embedding_layer)

        # Bidirectional GRU layer
        bi_layer = layers.Bidirectional(layers.GRU(100))(embedding_layer)

        # Output layers
        output_layer1 = layers.Dense(50, activation="relu")(bi_layer)
        output_layer1 = layers.Dropout(0.25)(output_layer1)
        output_layer2 = layers.Dense(num_classes, activation="softmax")(output_layer1)

        # Compile the model
        model = models.Model(inputs=input_layer, outputs=output_layer2)
        model.compile(optimizer=optimizers.Adam(), loss='sparse_categorical_crossentropy')
        return model

    classifier = create_bidirectional_rnn()
    accuracy = train_model(classifier, train_seq_x, train_y, valid_seq_x, valid_y, is_neural_net=True)
    print("RNN-Bidirectional, Word Embeddings", accuracy)


# 11. Recurrent convolutional neural network
def rcnn(word_index, embedding_matrix, train_seq_x, train_y, valid_seq_x, valid_y):
    num_classes = int(max(train_y.max(), valid_y.max())) + 1

    def create_rcnn():
        # Input layer
        input_layer = layers.Input((70,))

        # Word embedding layer (pretrained, frozen)
        embedding_layer = layers.Embedding(len(word_index) + 1, 300,
                                           embeddings_initializer=tf.keras.initializers.Constant(embedding_matrix),
                                           trainable=False)(input_layer)
        embedding_layer = layers.SpatialDropout1D(0.3)(embedding_layer)

        # Recurrent layer: a bidirectional GRU that returns the output at every time step
        rnn_layer = layers.Bidirectional(layers.GRU(50, return_sequences=True))(embedding_layer)

        # Convolutional layer applied to the recurrent layer's outputs
        conv_layer = layers.Conv1D(100, 3, activation="relu")(rnn_layer)

        # Global max pooling over the sequence
        pooling_layer = layers.GlobalMaxPool1D()(conv_layer)

        # Output layers
        output_layer1 = layers.Dense(50, activation="relu")(pooling_layer)
        output_layer1 = layers.Dropout(0.25)(output_layer1)
        output_layer2 = layers.Dense(num_classes, activation="softmax")(output_layer1)

        # Compile the model
        model = models.Model(inputs=input_layer, outputs=output_layer2)
        model.compile(optimizer=optimizers.Adam(), loss='sparse_categorical_crossentropy')
        return model

    classifier = create_rcnn()
    accuracy = train_model(classifier, train_seq_x, train_y, valid_seq_x, valid_y, is_neural_net=True)
    print("RCNN, Word Embeddings", accuracy)
```

```python
if __name__ == '__main__':
    # Load the data
    trainDF = loadfile()
    print("Data sample.......")
    print(trainDF.head(5))

    # Split the data
    train_x, valid_x, train_y, valid_y = splitdata(trainDF)

    # Count-vector features
    xtrain_count, xvalid_count = countvectorizer(trainDF, train_x, valid_x)
    print("Count vectors......")
    print(xtrain_count)

    # TF-IDF vectors as features
    (xtrain_tfidf, xvalid_tfidf, xtrain_tfidf_ngram, xvalid_tfidf_ngram,
     xtrain_tfidf_ngram_chars, xvalid_tfidf_ngram_chars) = tfidfvectorizer(trainDF, train_x, valid_x)
    print("TF-IDF vectors......")
    print(xtrain_tfidf_ngram)
    print(xtrain_tfidf_ngram.shape[1])

    # Word embeddings
    word_index, embedding_matrix, train_seq_x, valid_seq_x = embeddings(trainDF, train_x, valid_x)

    # # Text/NLP-based features
    # nlp(trainDF, train_x, valid_x)
    # # Topic model as features
    # lad(trainDF, train_x, valid_x)

    # Modeling: the sparse-feature models share one argument list,
    # the sequence models share the embedding inputs
    sparse_args = (xtrain_count, train_y, xvalid_count, valid_y, xtrain_tfidf, xvalid_tfidf,
                   xtrain_tfidf_ngram, xvalid_tfidf_ngram, xtrain_tfidf_ngram_chars, xvalid_tfidf_ngram_chars)
    seq_args = (word_index, embedding_matrix, train_seq_x, train_y, valid_seq_x, valid_y)

    multinomialNB(*sparse_args)           # 1. Naive Bayes
    logisticRegression(*sparse_args)      # 2. Linear classifier
    svc(*sparse_args)                     # 3. Support vector machine
    randomForestClassifier(*sparse_args)  # 4. Bagging model: random forest
    xgbClassifier(*sparse_args)           # 5. Boosting model: XGBoost
    nn(*sparse_args)                      # 6. Shallow neural network
    cnn(*seq_args)                        # 7. Convolutional neural network
    lstm(*seq_args)                       # 8. Recurrent neural network: LSTM
    gru(*seq_args)                        # 9. Recurrent neural network: GRU
    rnn(*seq_args)                        # 10. Bidirectional RNN
    rcnn(*seq_args)                       # 11. Recurrent convolutional neural network
```

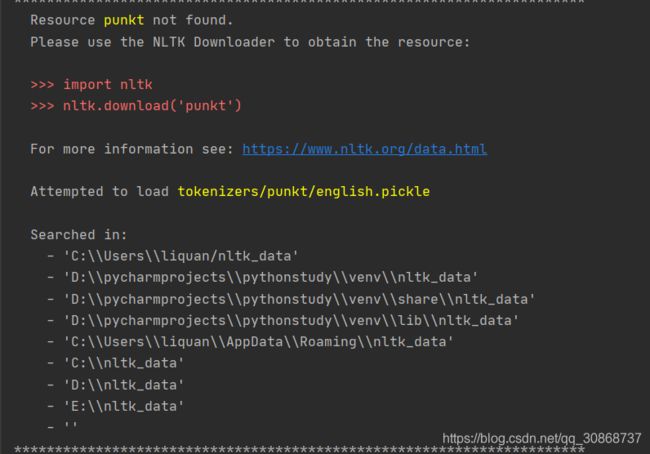

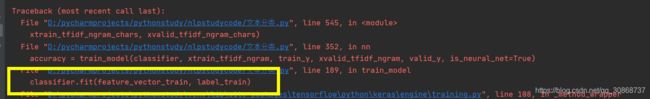

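While debugging the code above, I hit some errors that I have not managed to resolve. If you have run into and solved the same problem, I would appreciate a suggestion.

1. Training fails when the script reaches the sixth model, the shallow neural network: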

tensorflow.python.framework.errors_impl.InvalidArgumentError: indices[1] = [0,4717] is out of order. Many sparse ops require sorted indices.

Use `tf.sparse.reorder` to create a correctly ordered copy.
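The message says that the (row, column) index pairs of a sparse tensor are not in canonical row-major order. `tf.sparse.reorder` fixes this on the TensorFlow side. On the SciPy side, the CSR matrices produced by the vectorizers can be sorted in place with `csr_matrix.sort_indices()` before calling `fit` and `predict`. That is one plausible fix, not verified against this exact setup. The NumPy sketch below shows what canonical ordering means, using index pairs modeled on the error message. The helper names are hypothetical.

```python
import numpy as np


def reorder(indices, values):
    # Sort (row, col) pairs lexicographically: by row first, then by column.
    # np.lexsort treats the LAST key as the primary sort key.
    order = np.lexsort((indices[:, 1], indices[:, 0]))
    return indices[order], values[order]


def is_canonical(indices):
    # Encode each (row, col) pair as one integer; canonical order means strictly increasing
    keys = indices[:, 0] * (indices[:, 1].max() + 1) + indices[:, 1]
    return bool(np.all(np.diff(keys) > 0))


# Row 0 lists column 4717 before column 500: out of order, as in the error message
idx = np.array([[0, 12], [0, 4717], [0, 500], [1, 3]])
vals = np.array([0.1, 0.2, 0.3, 0.4])
sorted_idx, sorted_vals = reorder(idx, vals)
print(is_canonical(idx), is_canonical(sorted_idx))
print(sorted_idx.tolist(), sorted_vals.tolist())
```

The values move together with their indices, so the matrix the tensor represents is unchanged; only the storage order is fixed.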