PyTorch Week 3: Optimizer

Series contents

PyTorch Week 3: Weight initialization

PyTorch Week 3: nn.MaxPool2d, nn.AvgPool2d, nn.Linear, and activation layers

PyTorch Week 3: Convolution

PyTorch Week 3: nn.Module containers: Sequential, ModuleList, ModuleDict

PyTorch Week 3: Building a model

PyTorch Week 2: Dataloader and Dataset

PyTorch Week 1

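PyTorch Week 3: Optimizer

- Series contents
- Preface
- I. Optimizers
- II. class Optimizer
  - 1. The __init__ definition
  - 2. Methods
  - 3. Code walkthrough
    - 1. Inspecting basic attributes
      - defaults
      - param_groups
    - 2. Methods
      - optimizer.step(): update the parameters
      - optimizer.zero_grad(): reset parameter gradients to zero
      - optimizer.add_param_group(): add a new parameter group
      - optimizer.state_dict(), torch.load(), and optimizer.load_state_dict(): save and restore optimizer state
    - Using the optimizer in a model
- Summary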

Preface

I. Optimizers

Theory section; details to be added.

II. class Optimizer

1. The __init__ definition

def __init__(self, params, defaults):
    torch._C._log_api_usage_once("python.optimizer")
    self.defaults = defaults  # the optimizer's hyperparameters

    self._hook_for_profile()

    if isinstance(params, torch.Tensor):
        raise TypeError("params argument given to the optimizer should be "
                        "an iterable of Tensors or dicts, but got " +
                        torch.typename(params))

    self.state = defaultdict(dict)  # per-parameter cache, e.g. the momentum buffer
    # Parameter groups managed by the optimizer. In transfer learning, for example,
    # the early layers can be placed in their own group so that they update more slowly.
    self.param_groups = []

    param_groups = list(params)
    if len(param_groups) == 0:
        raise ValueError("optimizer got an empty parameter list")
    if not isinstance(param_groups[0], dict):
        # Groups are stored as dicts holding the parameters plus settings such as
        # the learning rate; a plain list of tensors is wrapped into a single group.
        param_groups = [{'params': param_groups}]

    for param_group in param_groups:
        self.add_param_group(param_group)  # registers the group in self.param_groups
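To see this normalization at work, here is a minimal sketch, assuming a reasonably recent PyTorch install. The tensor names `w1` and `w2` and the variable `bare_tensor_rejected` are illustrative only and do not come from the original post. It passes parameters in both accepted forms and then triggers the TypeError raised for a bare tensor.

```python
import torch
import torch.optim as optim

# Illustrative tensors (hypothetical names, not from the original post).
w1 = torch.randn(2, 2, requires_grad=True)
w2 = torch.randn(3, 3, requires_grad=True)

# Form 1: a plain iterable of tensors is wrapped into a single group.
opt_flat = optim.SGD([w1, w2], lr=0.1)
print(len(opt_flat.param_groups))

# Form 2: a list of dicts yields one group per dict; keys that a dict
# leaves out (here lr in the first group) are filled in from the defaults.
opt_grouped = optim.SGD([{"params": [w1]}, {"params": [w2], "lr": 0.01}], lr=0.1)
print([g["lr"] for g in opt_grouped.param_groups])

# A bare tensor is rejected by the isinstance check in __init__.
try:
    optim.SGD(w1, lr=0.1)
    bare_tensor_rejected = False
except TypeError as err:
    bare_tensor_rejected = True
    print(err)
```

The per-group `lr` in the second form is what makes it possible to train different layers at different speeds.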

2. Methods

3. Code walkthrough

1. Inspecting basic attributes

defaults

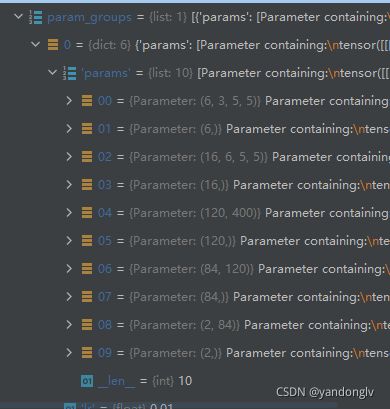

param_groups

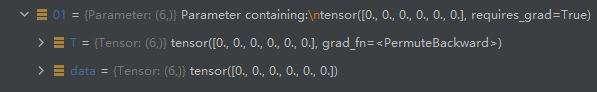

param_groups = [{'params': [00, 01, 02, …, 09]}]

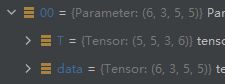

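Entry 00 is the weight of the first layer in the first group, i.e. the convolution kernels. If that layer is nn.Conv2d(3, 6, 5), as the description suggests, the weight has shape [6, 3, 5, 5]: 6 output feature maps, each computed from the 3 input channels with a 5×5 kernel.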
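The two attributes can also be inspected without screenshots. The sketch below uses a hypothetical stand-in network (one convolution and one linear layer, not the model from the original post) and prints the hyperparameters stored in `defaults` and the shape of every tensor in the single parameter group.

```python
import torch.nn as nn
import torch.optim as optim

# Hypothetical stand-in model, chosen only for illustration.
net = nn.Sequential(
    nn.Conv2d(3, 6, kernel_size=5),  # weight shape: [6, 3, 5, 5]
    nn.Flatten(),
    nn.Linear(6 * 28 * 28, 10),
)
optimizer = optim.SGD(net.parameters(), lr=0.01, momentum=0.9)

# defaults: the hyperparameters given at construction time.
print(optimizer.defaults["lr"], optimizer.defaults["momentum"])

# param_groups: a single group whose 'params' list holds every weight and bias.
group = optimizer.param_groups[0]
for index, param in enumerate(group["params"]):
    print(index, tuple(param.shape))
```

Entries alternate between weights and biases in the order in which the layers were registered.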

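2. Methods

optimizer.step(): update the parameters

For plain SGD, step() applies the update rule

w = w - lr * grad

With lr = 0.1 and a gradient of 1, the first element in the run below becomes

0.5614 = 0.6614 - 0.1 * 1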

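import torch
import torch.optim as optim

weight = torch.randn((2, 2), requires_grad=True)
weight.grad = torch.ones((2, 2))  # set the gradient by hand so the update is easy to check

optimizer = optim.SGD([weight], lr=0.1)

print("weight before step:{}".format(weight.data))
optimizer.step()  # try lr=1 and lr=0.1 and compare the results
print("weight after step:{}".format(weight.data))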

weight before step:tensor([[0.6614, 0.2669],
        [0.0617, 0.6213]])
weight after step:tensor([[ 0.5614,  0.1669],
        [-0.0383,  0.5213]])
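The same check can be done programmatically instead of by reading the printout. This sketch computes `w - lr * grad` by hand and compares it with what `optimizer.step()` produces. It assumes plain SGD with no momentum or weight decay, and the names `lr` and `expected` are illustrative.

```python
import torch
import torch.optim as optim

lr = 0.1
weight = torch.randn((2, 2), requires_grad=True)
weight.grad = torch.ones((2, 2))

# Expected result computed by hand from w = w - lr * grad.
expected = weight.detach().clone() - lr * weight.grad

optimizer = optim.SGD([weight], lr=lr)
optimizer.step()

# The optimizer's in-place update should agree with the manual formula.
print(torch.allclose(weight.detach(), expected))
```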

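optimizer.zero_grad(): reset parameter gradients to zero

print("weight in optimizer:{}\nweight in weight:{}\n".format(id(optimizer.param_groups[0]['params'][0]), id(weight)))

print("weight.grad is:\n{}\n".format(weight.grad))
# Since PyTorch 2.0, zero_grad() defaults to set_to_none=True and leaves weight.grad as None;
# pass set_to_none=False to get the zero tensor shown in the output below.
optimizer.zero_grad()
print("after optimizer.zero_grad(), weight.grad is:\n{}".format(weight.grad))

# Compare the addresses: the parameter held in optimizer.param_groups and weight are the same object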

weight in optimizer:1887850565704
weight in weight:1887850565704

weight.grad is:
tensor([[1., 1.],
        [1., 1.]])

after optimizer.zero_grad(), weight.grad is:
tensor([[0., 0.],
        [0., 0.]])

# The gradients have been reset to zero.
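How "zeroed" looks depends on the `set_to_none` argument of `zero_grad()`, which exists in current PyTorch releases. The following sketch contrasts the two modes. The helper variable `grad_after_zeroing` is illustrative and not part of the original post.

```python
import torch
import torch.optim as optim

weight = torch.randn((2, 2), requires_grad=True)
optimizer = optim.SGD([weight], lr=0.1)

# Mode 1: keep the gradient tensor and fill it with zeros.
weight.grad = torch.ones((2, 2))
optimizer.zero_grad(set_to_none=False)
grad_after_zeroing = weight.grad.clone()
print(grad_after_zeroing)

# Mode 2: drop the gradient tensor entirely; weight.grad becomes None.
weight.grad = torch.ones((2, 2))
optimizer.zero_grad(set_to_none=True)
print(weight.grad)
```

Either way, the next `backward()` call starts from a clean slate rather than adding to stale gradients.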

optimizer.add_param_group(): add a new parameter group

print("optimizer.param_groups is\n{}".format(optimizer.param_groups))

w2 = torch.randn((3, 3), requires_grad=True)  # a new 3×3 random tensor with autograd enabled

# Add w2 as the parameters of a new group with its own learning rate
optimizer.add_param_group({"params": w2, 'lr': 0.0001})

print("optimizer.param_groups is\n{}".format(optimizer.param_groups))

# Output before the new group is added:

optimizer.param_groups is
[{'params': [tensor([[0.6614, 0.2669], [0.0617, 0.6213]], requires_grad=True)],
  'lr': 0.1,
  'momentum': 0,
  'dampening': 0,
  'weight_decay': 0,
  'nesterov': False}]

# Output after the new group is added:

optimizer.param_groups is
[{'params': [tensor([[0.6614, 0.2669], [0.0617, 0.6213]], requires_grad=True)],
  'lr': 0.1,
  'momentum': 0,
  'dampening': 0,
  'weight_decay': 0,
  'nesterov': False},
 {'params': [tensor([[-0.4519, -0.1661, -1.5228], [ 0.3817, -1.0276, -0.5631], [-0.8923, -0.0583, -0.1955]], requires_grad=True)],
  'lr': 0.0001,  # w2 and lr=0.0001 are now part of the optimizer
  'momentum': 0,
  'dampening': 0,
  'weight_decay': 0,
  'nesterov': False}]
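To make the effect of the per-group learning rate visible, the sketch below gives two tensors the same gradient and takes one step. It is only an illustration: the zero-initialized tensors `w1` and `w2` and the flag `duplicate_rejected` are hypothetical names. It also shows that a tensor already managed by the optimizer cannot be added a second time.

```python
import torch
import torch.optim as optim

w1 = torch.zeros((2, 2), requires_grad=True)
w2 = torch.zeros((3, 3), requires_grad=True)

optimizer = optim.SGD([w1], lr=0.1)
optimizer.add_param_group({"params": w2, "lr": 0.0001})

# Give both tensors the same gradient so that only the learning rate differs.
w1.grad = torch.ones_like(w1)
w2.grad = torch.ones_like(w2)
optimizer.step()

print(w1[0, 0].item())  # moved with the first group's lr=0.1
print(w2[0, 0].item())  # moved with the second group's lr=0.0001

# A tensor that already belongs to a group is rejected with a ValueError.
try:
    optimizer.add_param_group({"params": w1})
    duplicate_rejected = False
except ValueError:
    duplicate_rejected = True
```

This is the mechanism behind the transfer-learning use case mentioned in `__init__`: pretrained layers go into a group with a small learning rate, newly added layers into a group with a larger one.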

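optimizer.state_dict(), torch.load(), and optimizer.load_state_dict(): save and restore optimizer state

optimizer = optim.SGD([weight], lr=0.1, momentum=0.9)
opt_state_dict = optimizer.state_dict()  # snapshot before any step; its 'state' entry is still empty

for i in range(10):
    optimizer.step()  # with momentum, each step updates the buffer kept in optimizer.state

# BASE_DIR is the output directory, defined elsewhere in the script (requires import os)
torch.save(optimizer.state_dict(), os.path.join(BASE_DIR, "optimizer_state_dict.pkl"))

# Create a fresh optimizer, then load the saved state back into it
optimizer = optim.SGD([weight], lr=0.1, momentum=0.9)
state_dict = torch.load(os.path.join(BASE_DIR, "optimizer_state_dict.pkl"))
optimizer.load_state_dict(state_dict)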
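A self-contained variant of this round trip is sketched below. It writes to a temporary directory as a stand-in for `BASE_DIR` and checks that the momentum buffer survives the save and load. The variable names `saved_buffer`, `restored`, and `state_before_load` are illustrative. Reading the buffer via `optimizer.state[weight]["momentum_buffer"]` relies on how SGD stores its state in current PyTorch releases.

```python
import os
import tempfile

import torch
import torch.optim as optim

weight = torch.randn((2, 2), requires_grad=True)
weight.grad = torch.ones((2, 2))

optimizer = optim.SGD([weight], lr=0.1, momentum=0.9)
for _ in range(10):
    optimizer.step()  # each step updates the momentum buffer
saved_buffer = optimizer.state[weight]["momentum_buffer"].clone()

with tempfile.TemporaryDirectory() as tmp_dir:  # stand-in for BASE_DIR
    path = os.path.join(tmp_dir, "optimizer_state_dict.pkl")
    torch.save(optimizer.state_dict(), path)

    # A freshly built optimizer has no per-parameter state yet...
    restored = optim.SGD([weight], lr=0.1, momentum=0.9)
    state_before_load = len(restored.state_dict()["state"])
    # ...until the saved state is loaded back in.
    restored.load_state_dict(torch.load(path))

restored_buffer = restored.state[weight]["momentum_buffer"]
print(state_before_load, torch.equal(saved_buffer, restored_buffer))
```

Restoring the buffer matters when resuming training: without it, a momentum optimizer restarts with zero velocity even though the weights are mid-training.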

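Using the optimizer in a model

How the methods fit together: after the forward pass, first clear the gradients left over from the previous iteration, then compute the loss, backpropagate, and update the parameters.

# ============================ step 4/5: optimizer ============================
optimizer = optim.SGD(net.parameters(), lr=LR, momentum=0.9)  # choose the optimizer
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1)

# ============================ step 5/5: training ============================
outputs = net(inputs)

# backward
optimizer.zero_grad()  # after the forward pass, clear the parameter gradients
loss = criterion(outputs, labels)  # compute the loss
loss.backward()  # backpropagate to compute the gradients

# update weights
optimizer.step()  # update the parameters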
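The excerpt above depends on a network, data, and loss defined in earlier steps. As a runnable illustration of the same call order, here is a minimal sketch on toy regression data. The data, the single `nn.Linear` model, the learning rate, and the epoch count are all assumptions made for this example and are not the original training script.

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)

# Toy data, purely illustrative: y = 2x + 1 plus a little noise.
inputs = torch.linspace(-1, 1, 64).unsqueeze(1)
labels = 2 * inputs + 1 + 0.01 * torch.randn_like(inputs)

net = nn.Linear(1, 1)
criterion = nn.MSELoss()
optimizer = optim.SGD(net.parameters(), lr=0.1, momentum=0.9)
scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1)

losses = []
for epoch in range(30):
    outputs = net(inputs)              # forward pass
    optimizer.zero_grad()              # clear gradients from the previous iteration
    loss = criterion(outputs, labels)  # compute the loss
    loss.backward()                    # compute fresh gradients
    optimizer.step()                   # update the weights
    scheduler.step()                   # multiply the lr by gamma every 10 epochs
    losses.append(loss.item())

print(losses[0], losses[-1])
print(optimizer.param_groups[0]["lr"])
```

Calling `zero_grad()` anywhere before `loss.backward()` works. What matters is that the gradients are cleared once per iteration, because `backward()` accumulates into `.grad` rather than overwriting it.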