Hive -- DDL Basics for Databases and Tables

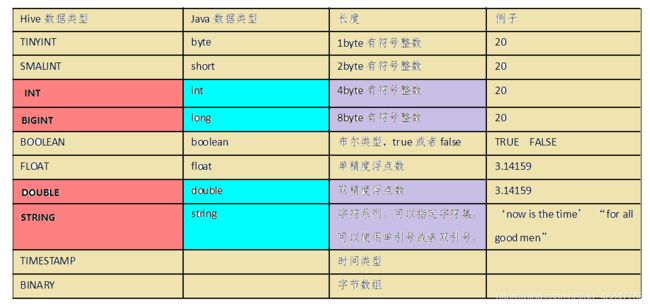

Hive Data Types

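Hive's STRING type corresponds to VARCHAR in a relational database. It is a variable-length string, but you cannot declare a maximum number of characters for it; in theory it can hold up to 2 GB of characters.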
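To make the contrast concrete, here is a minimal sketch of a table that declares all three character types. It assumes Hive 0.13 or later, where VARCHAR and CHAR are available. The table name tb_str_demo is hypothetical.

```sql
-- Hypothetical table comparing Hive's character types (assumes Hive 0.13+)
CREATE TABLE IF NOT EXISTS tb_str_demo (
  s STRING,       -- variable length, no declared maximum
  v VARCHAR(20),  -- variable length, at most 20 characters (1 to 65535 allowed)
  c CHAR(10)      -- fixed length, shorter values are padded with spaces (max 255)
);
```

If a value longer than 20 characters is assigned to v, Hive silently truncates it to 20 characters instead of raising an error.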

--- DDL Basics ---

--- Show all databases in the system

show databases ;

+----------------+
| database_name |
+----------------+
| default |
| demo |
+----------------+

--- Create a database

create database demo ;

create database if not exists demo ; -- avoids an error if demo already exists

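When creating a database you can specify its location with LOCATION hdfs_path. If you don't, the database is created under /user/hive/warehouse.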
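The sketch below sets these options in a single statement. The path /hive/demo.db and the comment text are hypothetical. It then runs DESCRIBE DATABASE EXTENDED, because that form also lists the dbproperties and the plain DESCRIBE DATABASE does not.

```sql
-- Create a database with a comment, an explicit HDFS location and a property
-- (the location /hive/demo.db is a hypothetical path)
CREATE DATABASE IF NOT EXISTS demo
COMMENT 'demo database'
LOCATION '/hive/demo.db'
WITH DBPROPERTIES ('author'='DEMO');

-- EXTENDED also prints the dbproperties, e.g. author=DEMO
DESCRIBE DATABASE EXTENDED demo;
```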

--- Alter a database

alter database demo set dbproperties("author"="DEMO") ;

--- Show information about a database

desc database demo ;

+----------+----------+----------------------------------------------------+-------------+-------------+-------------+
| db_name | comment | location | owner_name | owner_type | parameters |
+----------+----------+----------------------------------------------------+-------------+-------------+-------------+
| demo | | hdfs://linux01:8020/user/hive/warehouse/demo.db | root | USER | |
+----------+----------+----------------------------------------------------+-------------+-------------+-------------+

--- Use a database

use db_name ;

--- Drop a database

1> Drop an empty database

drop database demo ;

2> If the database might not exist, add IF EXISTS. Without it, dropping a missing database fails:

hive> drop database demo;

FAILED: SemanticException [Error 10072]: Database does not exist: demo

hive> drop database if exists demo2;

3> If the database is not empty, a plain drop fails. Add CASCADE to force-drop the database together with its tables:

hive> drop database demo;

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. InvalidOperationException(message:Database demo is not empty. One or more tables exist.)

hive> drop database demo cascade;

--- Show the database currently in use

select current_database();

--- Filter the database list by pattern ('*' is a wildcard)

- show databases like 'db_hive*';

- show databases like '*lt' ;
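Besides '*', the SHOW DATABASES pattern accepts '|' to separate alternatives. Here is a short sketch using the two databases listed earlier (default and demo):

```sql
-- '*' matches any run of characters, '|' separates alternative patterns
SHOW DATABASES LIKE 'demo*|default';

-- '*lt' matches names ending in "lt", such as "default"
SHOW DATABASES LIKE '*lt';
```

If only default and demo exist, the first statement should list both of them.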

--- Create a table ---

1) Basic syntax

CREATE [EXTERNAL] TABLE [IF NOT EXISTS] table_name
[(col_name data_type [COMMENT col_comment], ...)]
[COMMENT table_comment]
[PARTITIONED BY (col_name data_type [COMMENT col_comment], ...)]   -- partitioning
[CLUSTERED BY (col_name, col_name, ...)                            -- bucketing
[SORTED BY (col_name [ASC|DESC], ...)] INTO num_buckets BUCKETS]
[ROW FORMAT row_format]   -- e.g. row format delimited fields terminated by ','
[STORED AS file_format]
[LOCATION hdfs_path]

--- Clause descriptions

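- CREATE TABLE creates a table with the given name. If a table with that name already exists, an exception is thrown; use the IF NOT EXISTS option to ignore it.

- The EXTERNAL keyword creates an external table, optionally with a path (LOCATION) that points at the actual data. When Hive creates a managed (internal) table, it moves the data into the warehouse directory. For an external table, Hive only records where the data lives and leaves it in place. When a managed table is dropped, both its metadata and its data are deleted. When an external table is dropped, only the metadata is deleted and the data is kept.

- COMMENT adds a comment to the table or to a column.

- PARTITIONED BY creates a partitioned table.

- CLUSTERED BY creates a bucketed table.

- SORTED BY sorts the rows within each bucket; it is rarely used.

- ROW FORMAT defines how each line of a text data file is split into columns, e.g. ROW FORMAT DELIMITED FIELDS TERMINATED BY ','.

- STORED AS sets the file format. The default is TEXTFILE; ORC, PARQUET and SEQUENCEFILE are other options.

- LOCATION sets the HDFS directory that holds the table's data.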
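To see how the clauses fit together, here is a sketch of one statement that uses every optional clause in the order the syntax requires. The table tb_access_log, its columns, the bucket count and the path are all hypothetical.

```sql
-- Hypothetical table exercising every clause of the syntax above, in order
CREATE EXTERNAL TABLE IF NOT EXISTS tb_access_log (
  uid STRING COMMENT 'user id',
  url STRING COMMENT 'visited url',
  ts  BIGINT COMMENT 'event time in epoch seconds'
)
COMMENT 'hypothetical access log'
PARTITIONED BY (dt STRING COMMENT 'partition day, e.g. 2020-09-01')
CLUSTERED BY (uid) SORTED BY (ts ASC) INTO 4 BUCKETS
ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
STORED AS TEXTFILE
LOCATION '/demo/tb_access_log/';
```

The partition column dt appears only in PARTITIONED BY, not in the column list. Hive rejects a partition column that repeats the name of a regular column.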

2) Create-table syntax

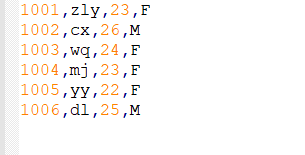

Example -------- the data is shown below

1> Create the table

- Create a table whose structure matches the data on HDFS

- Specify the delimiter between fields

- Optionally specify where the data lives with location hdfs_path

0: jdbc:hive2://linux01:10000> create table if not exists tb_user(
. . . . . . . . . . . . . . .> uid string ,
. . . . . . . . . . . . . . .> name string ,
. . . . . . . . . . . . . . .> age int ,
. . . . . . . . . . . . . . .> gender string
. . . . . . . . . . . . . . .> )
. . . . . . . . . . . . . . .> row format delimited fields terminated by ","   -- field delimiter ","
. . . . . . . . . . . . . . .>
. . . . . . . . . . . . . . .> location "/demo/"   -- data path
. . . . . . . . . . . . . . .> ;

2> Show all tables in the database

0: jdbc:hive2://linux01:10000> show tables ;
OK
+-----------+
| tab_name |
+-----------+
| tb_user |
+-----------+

3> Describe the table structure

0: jdbc:hive2://linux01:10000> desc tb_user ;
OK
+-----------+------------+----------+
| col_name | data_type | comment |
+-----------+------------+----------+
| uid | string | |
| name | string | |
| age | int | |
| gender | string | |
+-----------+------------+----------+

4> Show detailed table information

0: jdbc:hive2://linux01:10000> desc formatted tb_user ;
OK
+-------------------------------+----------------------------------------------------+-----------------------+
| col_name | data_type | comment |
+-------------------------------+----------------------------------------------------+-----------------------+
| # col_name | data_type | comment |
| uid | string | |
| name | string | |
| age | int | |
| gender | string | |
| | NULL | NULL |
| # Detailed Table Information | NULL | NULL |
| Database: | doit17 | NULL |
| OwnerType: | USER | NULL |
| Owner: | root | NULL |
| CreateTime: | Tue Sep 01 14:52:47 CST 2020 | NULL |
| LastAccessTime: | UNKNOWN | NULL |
| Retention: | 0 | NULL |
| Location: | hdfs://linux01:8020/doit17 | NULL |
| Table Type: | MANAGED_TABLE | NULL |
| Table Parameters: | NULL | NULL |
| | bucketing_version | 2 |
| | numFiles | 1 |
| | totalSize | 93 |
| | transient_lastDdlTime | 1598943167 |
| | NULL | NULL |
| # Storage Information | NULL | NULL |
| SerDe Library: | org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe | NULL |
| InputFormat: | org.apache.hadoop.mapred.TextInputFormat | NULL |
| OutputFormat: | org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat | NULL |
| Compressed: | No | NULL |
| Num Buckets: | -1 | NULL |
| Bucket Columns: | [] | NULL |
| Sort Columns: | [] | NULL |
| Storage Desc Params: | NULL | NULL |
| | field.delim | , |
| | serialization.format | , |
+-------------------------------+----------------------------------------------------+-----------------------+

5> Query all rows in the table

0: jdbc:hive2://linux01:10000> select * from tb_user ;
OK
+--------------+---------------+--------------+-----------------+
| tb_user.uid | tb_user.name | tb_user.age | tb_user.gender |
+--------------+---------------+--------------+-----------------+
| 1001 | zly | 23 | F |
| 1002 | cx | 26 | M |
| 1003 | wq | 24 | F |
| 1004 | mj | 23 | F |
| 1005 | yy | 22 | F |
| 1006 | dl | 25 | M |
+--------------+---------------+--------------+-----------------+

6> Compute the average age

0: jdbc:hive2://linux01:10000> select avg(age) age_avg from tb_user ;   -- age_avg is a column alias
+---------------------+
| age_avg |
+---------------------+
| 23.833333333333332 |
+---------------------+

--- Managed (internal) and external tables ---

A table created without EXTERNAL is a managed (internal) table; a table created with EXTERNAL is an external table.

Differences:

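The data of a managed table is managed by Hive itself. The data of an external table is managed by HDFS, outside Hive's control.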

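A managed table's data is stored under hive.metastore.warehouse.dir (default: /user/hive/warehouse). An external table's data is stored wherever you specify. If there is no LOCATION, Hive creates a folder named after the table under /user/hive/warehouse on HDFS and stores the table's data there.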

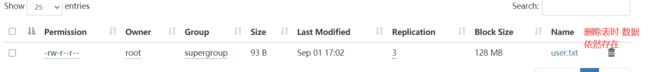

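Dropping a managed table deletes both its metadata and its stored data. Dropping an external table deletes only the metadata; the files on HDFS are not deleted.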

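Changes made to a managed table through Hive are written directly to the metastore. External tables often have their files written to HDFS by other tools. Partition directories created directly on HDFS this way are not registered in the metastore, so run MSCK REPAIR TABLE table_name; to add them.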
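Here is a minimal sketch of that repair workflow. It assumes a hypothetical partitioned external table tb_event whose partition directories are written to HDFS by some other job:

```sql
-- Hypothetical partitioned external table
CREATE EXTERNAL TABLE IF NOT EXISTS tb_event (
  uid  STRING,
  page STRING
)
PARTITIONED BY (dt STRING)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
LOCATION '/demo/tb_event/';

-- Suppose another job has written files to /demo/tb_event/dt=2020-09-01/.
-- The metastore does not know that partition yet, so it is not listed here:
SHOW PARTITIONS tb_event;

-- Scan the table location and register partition directories missing from the metastore
MSCK REPAIR TABLE tb_event;
SHOW PARTITIONS tb_event;

-- Alternative when you know the single partition to add
ALTER TABLE tb_event ADD IF NOT EXISTS PARTITION (dt='2020-09-01');
```

MSCK REPAIR TABLE only recognizes directories that follow the column=value naming convention (here dt=...).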

Use cases

Use external tables for shared, public data sources. Use managed (internal) tables for business dimension tables and finished statistical reports.

1> Managed table

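(Tables are managed by default, and dropping the table also deletes its data. This example points LOCATION at /demo/, the same directory tb_user uses. Dropping tb_user2 would therefore delete that data as well.)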

create table tb_user2(
uid string ,
name string ,
age int ,
gender string
)
row format delimited fields terminated by ","
location "/demo/"
;

2> External table

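(Add EXTERNAL when creating the table; dropping the table does not delete the data. This is the same definition as above with EXTERNAL added, so drop the managed tb_user2 first or use a different name.)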

create external table tb_user2(
uid string ,
name string ,
age int ,
gender string
)
row format delimited fields terminated by ","
location "/demo/"
;
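The difference shows up when the table is dropped. Here is a minimal sketch, assuming tb_user2 was created with the EXTERNAL statement above:

```sql
-- tb_user2 is external: DROP removes only its metastore entry, the files in /demo/ stay
DROP TABLE tb_user2;

-- Re-creating the same definition over the same LOCATION makes the existing files queryable again
CREATE EXTERNAL TABLE tb_user2 (
  uid    STRING,
  name   STRING,
  age    INT,
  gender STRING
)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
LOCATION '/demo/';

SELECT count(*) FROM tb_user2;
```

If tb_user2 had been the managed version, the DROP would also have deleted the files under /demo/, and there would be nothing left to query.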

3> Check the table type

desc formatted tb_user2;
Table Type: MANAGED_TABLE -- managed table

desc formatted tb_user2;
Table Type: EXTERNAL_TABLE -- external table

4> Convert between managed and external tables

alter table tb_user2 set tblproperties('EXTERNAL'='FALSE');   -- external -> managed

Note: 'EXTERNAL'='FALSE' is case-sensitive, so keep it in uppercase.
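Here are both directions of the conversion, as a short sketch on tb_user2. It assumes a Hive version that allows both changes. On some Hive 3 setups with strict managed-table settings, turning an external table back into a managed one may be rejected.

```sql
-- managed -> external: a later DROP TABLE keeps the data
ALTER TABLE tb_user2 SET TBLPROPERTIES ('EXTERNAL'='TRUE');

-- external -> managed: Hive owns the data again
ALTER TABLE tb_user2 SET TBLPROPERTIES ('EXTERNAL'='FALSE');

-- Check the "Table Type:" row (MANAGED_TABLE or EXTERNAL_TABLE)
DESC FORMATTED tb_user2;
```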