Deep Learning: Traffic Sign Recognition with Inception_ResNet_V2 and a CNN

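The results this time were unexpected: the official Inception_ResNet_V2 model recognized the signs very poorly. The fault is probably mine, but I have not figured out where it went wrong.

This experiment implements traffic sign recognition with a self-built Inception_ResNet_V2 and a self-built CNN, and both reach high accuracy.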

1. Import libraries

import os, PIL, pathlib
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, models, Input
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Conv2D, Dense, Flatten, Dropout, BatchNormalization, Activation
from tensorflow.keras.layers import MaxPooling2D, AveragePooling2D, Concatenate, Lambda, GlobalAveragePooling2D
from tensorflow.keras import backend as K

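2. Load the data

The data is organized as follows:

The images folder contains 5,998 traffic sign images covering 58 categories.

annotations lists each image's file name and the category it belongs to, 58 categories in total.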

# Image preprocessing
def preprocess_image(image):
    # Decode the JPEG, resize it to 299x299 and scale pixel values to [0, 1]
    image = tf.image.decode_jpeg(image, channels=3)
    image = tf.image.resize(image, [299, 299])
    return image / 255.0

def load_and_preprocess_image(path):
    # Read the image file at the given path and preprocess it
    image = tf.io.read_file(path)
    return preprocess_image(image)

# Load the data
data_dir = "E:/tmp/.keras/datasets/trasig_photos/images"
data_dir = pathlib.Path(data_dir)

# Read the image file names and labels of the training data
train = pd.read_csv("E:/tmp/.keras/datasets/trasig_photos/annotations.csv")

# Root directory of the images
img_dir = "E:/tmp/.keras/datasets/trasig_photos/images/"

# Labels of the training data
train_image_label = [i for i in train["category"]]
train_label_ds = tf.data.Dataset.from_tensor_slices(train_image_label)

# Full path of every training image
train_image_paths = [img_dir + i for i in train["file_name"]]

# Dataset of image paths
train_path_ds = tf.data.Dataset.from_tensor_slices(train_image_paths)

# Load and preprocess the images
train_image_ds = train_path_ds.map(load_and_preprocess_image, num_parallel_calls=tf.data.experimental.AUTOTUNE)

# Pair each image with its label
image_label_ds = tf.data.Dataset.zip((train_image_ds, train_label_ds))
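Since the models in this post end in a 58-unit softmax and are trained with sparse_categorical_crossentropy, the integer labels need to lie in 0..57. The following is a minimal sketch of that sanity check in plain Python. The helper `check_label_range` and the two sample lists are hypothetical and not part of the original code; whether the real annotations are zero-based is not stated in this post.

```python
def check_label_range(labels, num_classes):
    """Return True if every label is an integer class index in [0, num_classes - 1]."""
    return all(0 <= int(y) < num_classes and int(y) == y for y in labels)

# Hypothetical label lists standing in for train_image_label
zero_based = [0, 3, 57, 12]
one_based = [1, 4, 58, 13]

print(check_label_range(zero_based, 58))  # labels fit a 58-unit output layer
print(check_label_range(one_based, 58))   # label 58 falls outside 0..57
```

With the real data, the same call could be made as `check_label_range(train_image_label, 58)` before training starts.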

Splitting into training and test sets:

batch_size = 8  # the value reported with the CNN results in this post

# The first 5000 samples are used for training, the remaining 998 for testing
train_ds = image_label_ds.take(5000).shuffle(1000)
test_ds = image_label_ds.skip(5000).shuffle(1000)

train_ds = train_ds.batch(batch_size)  # set batch_size
train_ds = train_ds.prefetch(buffer_size=tf.data.experimental.AUTOTUNE)

test_ds = test_ds.batch(batch_size)
test_ds = test_ds.prefetch(buffer_size=tf.data.experimental.AUTOTUNE)
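Note that take(5000) and skip(5000) split by position, and the shuffle only happens afterwards inside each part. If the rows of annotations.csv happened to be sorted by category (an assumption, since the post does not say), the test part would contain only the last few classes. The plain-Python sketch below illustrates the difference on made-up labels; `shuffled_split` and the toy data are hypothetical.

```python
import random

def shuffled_split(items, n_train, seed=0):
    """Shuffle a copy of items once, then split it into train and test parts."""
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]

# Hypothetical labels sorted by class: 100 samples each of classes 0..5
labels = [c for c in range(6) for _ in range(100)]

# Positional split, analogous to take(500) / skip(500)
seq_test = labels[500:]
# Split after a single shuffle
_, shuf_test = shuffled_split(labels, 500)

print(sorted(set(seq_test)))   # classes present in the positional test part
print(sorted(set(shuf_test)))  # classes present in the shuffled test part
```

In the pipeline of this post, one possible way to get the same effect would be to shuffle `train_image_paths` and `train_image_label` together before calling `from_tensor_slices`.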

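3. CNN network

The CNN is one I built myself: three convolution + pooling blocks, a Flatten layer, and two fully connected layers. The first two pooling layers use MaxPooling and the last one uses AveragePooling.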

model = models.Sequential([
    tf.keras.layers.Conv2D(32, (3, 3), activation='relu', input_shape=(299, 299, 3)),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Conv2D(128, (3, 3), activation='relu'),
    tf.keras.layers.AveragePooling2D(),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(1000, activation='relu'),
    tf.keras.layers.Dense(58, activation='softmax')
])
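To get a feel for the size of this CNN, the feature-map sizes can be worked out by hand. The sketch below assumes the Keras defaults used above (3x3 convolutions with 'valid' padding and stride 1, 2x2 pooling with stride 2); the helper `conv_out` is a hypothetical name introduced only for this illustration.

```python
def conv_out(size, kernel, stride=1):
    """Output size along one dimension for a 'valid' convolution or pooling."""
    return (size - kernel) // stride + 1

size = 299
for _ in range(3):
    size = conv_out(size, kernel=3)            # Conv2D 3x3, stride 1, 'valid'
    size = conv_out(size, kernel=2, stride=2)  # 2x2 pooling, stride 2

flat = size * size * 128            # Flatten output after the 128-filter block
dense_params = flat * 1000 + 1000   # weights + biases of Dense(1000)

print(size, flat, dense_params)  # 35 156800 156801000
```

Almost all of the parameters sit in the first fully connected layer, which is worth keeping in mind when memory is tight.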

The optimizer settings are the same as in the previous post.

# Optimizer settings: exponentially decaying learning rate
initial_learning_rate = 1e-4
lr_sch = tf.keras.optimizers.schedules.ExponentialDecay(
    initial_learning_rate=initial_learning_rate,
    decay_steps=100,
    decay_rate=0.96,
    staircase=True
)
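With staircase=True, the schedule multiplies the learning rate by decay_rate once every decay_steps optimizer steps, i.e. lr = initial_lr * decay_rate ** floor(step / decay_steps). The sketch below reproduces that formula in plain Python; `staircase_lr` is a hypothetical helper, and the step counts assume the 5000 training images, batch_size=8 and 5 epochs mentioned in this post.

```python
def staircase_lr(step, initial_lr=1e-4, decay_steps=100, decay_rate=0.96):
    """Learning rate after `step` optimizer steps with staircase exponential decay."""
    return initial_lr * decay_rate ** (step // decay_steps)

steps_per_epoch = 5000 // 8        # 625 batches per epoch
total_steps = steps_per_epoch * 5  # 3125 steps over 5 epochs

print(staircase_lr(0))            # initial rate, 1e-4
print(staircase_lr(99))           # unchanged within the first 100 steps
print(staircase_lr(total_steps))  # roughly 2.8e-5 at the end of training
```

So over the whole run the learning rate drops to a bit under a third of its starting value.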

Model compilation and training

epochs = 5  # the value reported with the CNN results in this post

model.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=lr_sch),
    loss='sparse_categorical_crossentropy',
    metrics=['accuracy']
)

history = model.fit(
    train_ds,
    validation_data=test_ds,
    epochs=epochs
)
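The sparse_categorical_crossentropy loss takes integer class labels directly: for one sample it is the negative log of the probability the softmax assigns to the true class. The plain-Python sketch below shows this for a single sample; the function here is a hand-written stand-in for illustration, not the Keras implementation.

```python
import math

def sparse_categorical_crossentropy(probs, label):
    """Loss for one sample: negative log-probability of the true class."""
    return -math.log(probs[label])

num_classes = 58
uniform = [1.0 / num_classes] * num_classes  # an untrained model guessing evenly

print(round(sparse_categorical_crossentropy(uniform, 7), 4))  # 4.0604
```

A loss near ln(58), about 4.06, in the first batches therefore means the network is still guessing at random, which gives a useful baseline when reading the training log.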

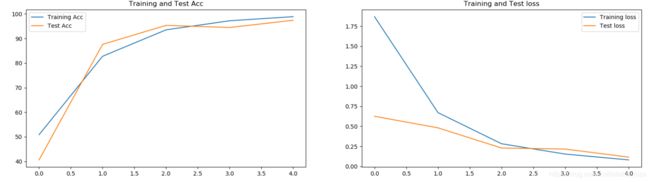

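The experimental results are shown below:

Training accuracy is already close to 100% and test accuracy is around 95%, with batch_size=8 and epoch=5. Training for more epochs would probably raise the accuracy further.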

4. Inception_ResNet_V2 network

The model is built following the blog of the expert **K同学啊**.

The network architecture is shown below:

Building the network:

def conv2d_bn(x, filters, kernel_size, strides=1, padding='same', activation='relu', use_bias=False, name=None):
    # Convolution, followed by batch normalization (when no bias is used) and an optional activation
    x = Conv2D(filters, kernel_size, strides=strides, padding=padding, use_bias=use_bias, name=name)(x)
    if not use_bias:
        bn_axis = 1 if K.image_data_format() == 'channels_first' else 3
        bn_name = None if name is None else name + '_bn'
        x = BatchNormalization(axis=bn_axis, scale=False, name=bn_name)(x)
    if activation is not None:
        ac_name = None if name is None else name + '_ac'
        x = Activation(activation, name=ac_name)(x)
    return x

def inception_resnet_block(x, scale, block_type, block_idx, activation='relu'):
    # Build the parallel branches for the requested block type
    if block_type == 'block35':
        branch_0 = conv2d_bn(x, 32, 1)
        branch_1 = conv2d_bn(x, 32, 1)
        branch_1 = conv2d_bn(branch_1, 32, 3)
        branch_2 = conv2d_bn(x, 32, 1)
        branch_2 = conv2d_bn(branch_2, 48, 3)
        branch_2 = conv2d_bn(branch_2, 64, 3)
        branches = [branch_0, branch_1, branch_2]
    elif block_type == 'block17':
        branch_0 = conv2d_bn(x, 192, 1)
        branch_1 = conv2d_bn(x, 128, 1)
        branch_1 = conv2d_bn(branch_1, 160, [1, 7])
        branch_1 = conv2d_bn(branch_1, 192, [7, 1])
        branches = [branch_0, branch_1]
    elif block_type == 'block8':
        branch_0 = conv2d_bn(x, 192, 1)
        branch_1 = conv2d_bn(x, 192, 1)
        branch_1 = conv2d_bn(branch_1, 224, [1, 3])
        branch_1 = conv2d_bn(branch_1, 256, [3, 1])
        branches = [branch_0, branch_1]
    else:
        raise ValueError('Unknown Inception-ResNet block type. '
                         'Expects "block35", "block17" or "block8", '
                         'but got: ' + str(block_type))

    block_name = block_type + '_' + str(block_idx)
    mixed = Concatenate(name=block_name + '_mixed')(branches)
    # 1x1 convolution that projects the concatenated branches back to the input channel count
    up = conv2d_bn(mixed, K.int_shape(x)[3], 1, activation=None, use_bias=True, name=block_name + '_conv')
    # Residual connection: add the scaled branch output to the block input
    x = Lambda(lambda inputs, scale: inputs[0] + inputs[1] * scale,
               output_shape=K.int_shape(x)[1:],
               arguments={'scale': scale},
               name=block_name)([x, up])
    if activation is not None:
        x = Activation(activation, name=block_name + '_ac')(x)
    return x
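The Lambda layer in the block above computes x + scale * up, where up is the output of the branches after the 1x1 projection, and a ReLU follows unless activation is None. Here is a small plain-Python sketch of that arithmetic on toy vectors; `scaled_residual` and the numbers are hypothetical and only illustrate the formula.

```python
def scaled_residual(x, up, scale, relu=True):
    """Add the scaled branch output to the input, optionally followed by ReLU."""
    out = [xi + scale * ui for xi, ui in zip(x, up)]
    if relu:
        out = [max(0.0, v) for v in out]
    return out

x = [1.0, -0.5, 2.0]    # block input (toy values)
up = [10.0, -10.0, 0.0]  # branch output after the 1x1 projection (toy values)

# scale=0.17 is the value used for the block35 blocks in this post
print(scaled_residual(x, up, scale=0.17))
```

A small scale keeps each block's contribution modest, so the signal passed along the shortcut dominates and the deep stack of blocks stays stable during training.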

def InceptionResNetV2(input_shape=[299, 299, 3], classes=1000):
    inputs = Input(shape=input_shape)

    # Stem block
    x = conv2d_bn(inputs, 32, 3, strides=2, padding='valid')
    x = conv2d_bn(x, 32, 3, padding='valid')
    x = conv2d_bn(x, 64, 3)
    x = MaxPooling2D(3, strides=2)(x)
    x = conv2d_bn(x, 80, 1, padding='valid')
    x = conv2d_bn(x, 192, 3, padding='valid')
    x = MaxPooling2D(3, strides=2)(x)

    # Mixed 5b (Inception-A block)
    branch_0 = conv2d_bn(x, 96, 1)
    branch_1 = conv2d_bn(x, 48, 1)
    branch_1 = conv2d_bn(branch_1, 64, 5)
    branch_2 = conv2d_bn(x, 64, 1)
    branch_2 = conv2d_bn(branch_2, 96, 3)
    branch_2 = conv2d_bn(branch_2, 96, 3)
    branch_pool = AveragePooling2D(3, strides=1, padding='same')(x)
    branch_pool = conv2d_bn(branch_pool, 64, 1)
    branches = [branch_0, branch_1, branch_2, branch_pool]
    x = Concatenate(name='mixed_5b')(branches)

    # 10 x Inception-ResNet-A block
    for block_idx in range(1, 11):
        x = inception_resnet_block(x, scale=0.17, block_type='block35', block_idx=block_idx)

    # Reduction-A block
    branch_0 = conv2d_bn(x, 384, 3, strides=2, padding='valid')
    branch_1 = conv2d_bn(x, 256, 1)
    branch_1 = conv2d_bn(branch_1, 256, 3)
    branch_1 = conv2d_bn(branch_1, 384, 3, strides=2, padding='valid')
    branch_pool = MaxPooling2D(3, strides=2, padding='valid')(x)
    branches = [branch_0, branch_1, branch_pool]
    x = Concatenate(name='mixed_6a')(branches)

    # 20 x Inception-ResNet-B block
    for block_idx in range(1, 21):
        x = inception_resnet_block(x, scale=0.1, block_type='block17', block_idx=block_idx)

    # Reduction-B block
    branch_0 = conv2d_bn(x, 256, 1)
    branch_0 = conv2d_bn(branch_0, 384, 3, strides=2, padding='valid')
    branch_1 = conv2d_bn(x, 256, 1)
    branch_1 = conv2d_bn(branch_1, 288, 3, strides=2, padding='valid')
    branch_2 = conv2d_bn(x, 256, 1)
    branch_2 = conv2d_bn(branch_2, 288, 3)
    branch_2 = conv2d_bn(branch_2, 320, 3, strides=2, padding='valid')
    branch_pool = MaxPooling2D(3, strides=2, padding='valid')(x)
    branches = [branch_0, branch_1, branch_2, branch_pool]
    x = Concatenate(name='mixed_7a')(branches)

    # 10 x Inception-ResNet-C block (9 in the loop, plus a final block without activation)
    for block_idx in range(1, 10):
        x = inception_resnet_block(x, scale=0.2, block_type='block8', block_idx=block_idx)
    x = inception_resnet_block(x, scale=1., activation=None, block_type='block8', block_idx=10)

    x = conv2d_bn(x, 1536, 1, name='conv_7b')
    x = GlobalAveragePooling2D(name='avg_pool')(x)
    x = Dense(classes, activation='softmax', name='predictions')(x)

    # Build the model
    model = Model(inputs, x, name='inception_resnet_v2')
    return model

# 299x299 RGB input, 58 traffic sign classes
model = InceptionResNetV2([299, 299, 3], 58)
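To see how the feature maps shrink through this network, the spatial size and channel count after each concatenation can be traced by hand from the code above. The sketch below is such a trace in plain Python for a 299x299 input; `valid_out` is a hypothetical helper, and the residual blocks are skipped because they keep both size and channel count unchanged.

```python
def valid_out(size, kernel, stride=1):
    """Output size along one dimension for a 'valid' convolution or pooling."""
    return (size - kernel) // stride + 1

# Stem ('same' and 1x1 convolutions leave the size unchanged and are omitted)
s = valid_out(299, 3, 2)  # conv 3x3, stride 2 -> 149
s = valid_out(s, 3)       # conv 3x3           -> 147
s = valid_out(s, 3, 2)    # max pool 3x3, s=2  -> 73
s = valid_out(s, 3)       # conv 3x3           -> 71
s = valid_out(s, 3, 2)    # max pool 3x3, s=2  -> 35

mixed_5b = (s, 96 + 64 + 96 + 64)        # four concatenated branches
s = valid_out(s, 3, 2)                   # Reduction-A halves the size
mixed_6a = (s, 384 + 384 + mixed_5b[1])  # the pooling branch keeps 320 channels
s = valid_out(s, 3, 2)                   # Reduction-B halves the size again
mixed_7a = (s, 384 + 288 + 320 + mixed_6a[1])
conv_7b = (s, 1536)                      # final 1x1 convolution

print(mixed_5b, mixed_6a, mixed_7a, conv_7b)
# (35, 320) (17, 1088) (8, 2080) (8, 1536)
```

The 20 block17 blocks therefore each operate on 17x17 maps with 1088 channels, which goes some way toward explaining the long training time on modest hardware.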

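Compared with the VGG family, this network is far more complex, and its running speed leaves a lot to be desired. Its accuracy, however, is really high.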

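Summary:

This experiment really showed me how much hardware limits deep learning. Last night I started training the official InceptionResNetV2 model; after 5-6 hours it had finished only 6 epochs, with accuracy around 30% in each epoch, which was demoralizing. I then followed the expert's blog and trained the self-built model with epochs set to 5, and it took about 5 hours to finish.

The InceptionResNetV2 model performed well in this experiment, but the self-built CNN was also very accurate, so the advantage of InceptionResNetV2 did not fully show here. Even so, it is a far more powerful network than an ordinary CNN.

If anyone passing by can run the official InceptionResNetV2 model and gets good results, please message me and share your experience. Thanks!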

努力加油a啊