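[Paper Reading Notes 36] CASREL Code Run Log

Related post: "[Paper Reading Notes 33] CASREL: Entity and Relation Extraction Based on Tagging and BERT" https://blog.csdn.net/ld326/article/details/116465089

Overall the documentation is well written, and following the README (readme.md) is enough. The only small difference is in the file naming, so this post records those details as a supplement.

0. Code structure: very clear and worth learning from

1. Environment

I ran the key commands from the instructions, but some dependency versions were still wrong. Below is the requirements.txt I ended up with. It still produces some warnings, which I have left alone for now: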

```text
absl-py==0.12.0
astor==0.8.1
blessings==1.7
cached-property==1.5.2
certifi==2020.12.5
gast==0.4.0
gpustat==0.4.1
grpcio==1.37.1
h5py==2.10.0
importlib-metadata==4.0.1
Keras==2.2.4
Keras-Applications==1.0.8
keras-bert==0.80.0
keras-embed-sim==0.8.0
keras-layer-normalization==0.14.0
keras-multi-head==0.27.0
keras-pos-embd==0.11.0
keras-position-wise-feed-forward==0.6.0
Keras-Preprocessing==1.1.2
keras-self-attention==0.46.0
keras-transformer==0.30.0
Markdown==3.3.4
mock==4.0.3
numpy==1.20.2
nvidia-ml-py3==7.352.0
protobuf==3.16.0
psutil==5.8.0
PyYAML==5.4.1
scipy==1.6.3
six==1.16.0
tensorboard==1.13.1
tensorflow-estimator==1.13.0
tensorflow-gpu==1.13.1
termcolor==1.1.0
tqdm==4.60.0
typing-extensions==3.10.0.0
Werkzeug==1.0.1
zipp==3.4.1
```
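You can check an existing environment against these pins with a small script that compares each `name==version` line with the installed version. This is a minimal sketch of my own, not part of CasRel. It assumes you paste the pins in as text, and it uses `importlib.metadata` (Python 3.8+) or the `importlib_metadata` backport (pinned above) for the Python 3.7 environment used here. The helper names are hypothetical.

```python
# Hypothetical helper, not part of the CasRel repo: compare pinned versions
# with the versions installed in the current environment.
try:
    from importlib.metadata import PackageNotFoundError, version  # Python 3.8+
except ImportError:
    from importlib_metadata import PackageNotFoundError, version  # 3.7 backport


def parse_pins(text):
    """Turn 'name==version' lines into a dict, skipping blanks and comments."""
    pins = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "==" not in line:
            continue
        name, pinned = line.split("==", 1)
        pins[name.strip()] = pinned.strip()
    return pins


def find_mismatches(pins, lookup=version):
    """Return {name: (pinned, installed)} for every package that differs.

    `installed` is None when the package is not installed at all.
    """
    mismatches = {}
    for name, pinned in pins.items():
        try:
            installed = lookup(name)
        except PackageNotFoundError:
            installed = None
        if installed != pinned:
            mismatches[name] = (pinned, installed)
    return mismatches


if __name__ == "__main__":
    pins = parse_pins("""
keras-bert==0.80.0
keras-transformer==0.30.0
tensorflow-gpu==1.13.1
""")
    for name, (pinned, installed) in find_mismatches(pins).items():
        print(f"{name}: pinned {pinned}, installed {installed}")
```

The Keras-related packages (keras-bert, keras-transformer and their keras-* dependencies) are the ones most worth checking, because they are the source of the error described in section 7.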

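2. Data

Downloading from the Google link is not very convenient, so I uploaded a copy (NYT) to CSDN: https://download.csdn.net/download/ld326/18544111

3. Download BERT

Omitted.

4. Data preprocessing

Step 1: convert the downloaded data from numeric IDs back into strings.

I modified the code so that train, dev (valid) and test are all processed in one run: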

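```python
import json

# Vocabulary files from the downloaded data; invert them to id -> string.
# load_data() is defined earlier in the same preprocessing script.
with open('relations2id.json', 'r') as f1, open('words2id.json', 'r') as f2:
    rel2id = json.load(f1)
    words2id = json.load(f2)
rel_dict = {j: i for i, j in rel2id.items()}
word_dict = {j: i for i, j in words2id.items()}

# Process train, valid (dev) and test in one run instead of one file at a time.
for file_type in ['train', 'valid', 'test']:
    file_name = f'{file_type}.json'
    # Full set plus the Normal / EPO / SEO overlap-pattern subsets.
    output = f'new_{file_type}.json'
    output_normal = f'new_{file_type}_normal.json'
    output_epo = f'new_{file_type}_epo.json'
    output_seo = f'new_{file_type}_seo.json'
    load_data(file_name, word_dict, rel_dict, output, output_normal, output_epo, output_seo)
```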
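The two dict comprehensions invert the `word -> id` and `relation -> id` vocabularies so that IDs can be mapped back to strings. That is what "converting numbers to strings" amounts to. Here is a toy illustration with a made-up vocabulary and ID sequence. The real conversion happens inside `load_data`, and `decode` is a hypothetical helper.

```python
# Toy illustration of inverting a vocabulary and decoding an ID sequence.
# The vocabulary and IDs below are made up for the example.
words2id = {"Bobby": 0, "Fischer": 1, "Reykjavik": 2, "Iceland": 3, ",": 4}

# Same inversion as in the preprocessing loop: id -> word.
word_dict = {j: i for i, j in words2id.items()}


def decode(ids, id2word, unk="<UNK>"):
    """Map integer IDs back to tokens; unknown IDs become `unk`."""
    return [id2word.get(i, unk) for i in ids]


print(" ".join(decode([0, 1, 4, 2, 4, 3], word_dict)))
# Bobby Fischer , Reykjavik , Iceland
```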

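In addition, change the file paths in the build script so that they point to the newly generated files.

Likewise, change the file paths in the two test folders so that they point to the newly generated files.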
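Before switching the build scripts over, it can help to confirm that the preprocessing loop produced all twelve files. This is a hypothetical check. It assumes the files were written to one directory (the current one by default), and it takes the file names from the loop above.

```python
from pathlib import Path


def expected_outputs(file_types=("train", "valid", "test")):
    """File names produced by the preprocessing loop for each split."""
    names = []
    for t in file_types:
        names += [
            f"new_{t}.json",
            f"new_{t}_normal.json",
            f"new_{t}_epo.json",
            f"new_{t}_seo.json",
        ]
    return names


def missing_outputs(directory="."):
    """Return the expected file names that do not exist in `directory`."""
    base = Path(directory)
    return [n for n in expected_outputs() if not (base / n).is_file()]


if __name__ == "__main__":
    missing = missing_outputs()
    print("all files present" if not missing else f"missing: {missing}")
```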

5. Training

```shell
python run.py --train=True --dataset=NYT
```

The default parameters are:

```json
{
  "bert_model": "cased_L-12_H-768_A-12",
  "max_len": 100,
  "learning_rate": 1e-5,
  "batch_size": 6,
  "epoch_num": 100
}
```

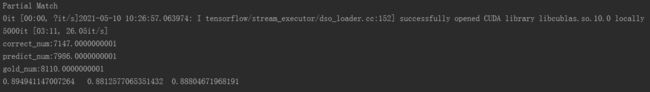

6. Prediction and evaluation

```shell
python run.py --dataset=NYT
```
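To run prediction, leave `--train` out altogether rather than passing `--train=False`. My assumption, which you should check against your copy of run.py, is that the flag is declared with argparse and `type=bool`. If so, argparse calls `bool()` on the raw string, and any non-empty string, including "False", becomes True. Here is a minimal demonstration of that argparse behavior with a stand-alone parser that only mirrors the flag name:

```python
import argparse

# Stand-alone parser that only mirrors the flag name; it is not run.py itself.
parser = argparse.ArgumentParser()
parser.add_argument("--train", default=False, type=bool)

for argv in (["--train=True"], ["--train=False"], []):
    args = parser.parse_args(argv)
    # type=bool calls bool() on the raw string, so "False" is truthy.
    print(argv, "->", args.train)
```

Only the last case, with no flag at all, falls back to the default of False.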

The results largely match those reported in the paper.

The extracted triples can also be inspected in the output, for example:

```json
{
  "text": "But that spasm of irritation by a master intimidator was minor compared with what Bobby Fischer , the erratic former world chess champion , dished out in March at a news conference in Reykjavik , Iceland .",
  "triple_list_gold": [
    {
      "subject": "Fischer",
      "relation": "/people/person/nationality",
      "object": "Iceland"
    },
    {
      "subject": "Fischer",
      "relation": "/people/deceased_person/place_of_death",
      "object": "Reykjavik"
    },
    {
      "subject": "Iceland",
      "relation": "/location/location/contains",
      "object": "Reykjavik"
    },
    {
      "subject": "Iceland",
      "relation": "/location/country/capital",
      "object": "Reykjavik"
    }
  ],
  "triple_list_pred": [
    {
      "subject": "Fischer",
      "relation": "/people/person/nationality",
      "object": "Iceland"
    },
    {
      "subject": "Iceland",
      "relation": "/location/location/contains",
      "object": "Reykjavik"
    },
    {
      "subject": "Iceland",
      "relation": "/location/country/capital",
      "object": "Reykjavik"
    }
  ],
  "new": [],
  "lack": [
    {
      "subject": "Fischer",
      "relation": "/people/deceased_person/place_of_death",
      "object": "Reykjavik"
    }
  ]
}
```
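Judging from this example, `new` lists predicted triples that are not in the gold set, and `lack` lists gold triples that the model missed. Both are set differences. The small sketch below reproduces them for the example above. The triples are copied from it, and the helper names are my own.

```python
def triple(s, r, o):
    """Build a triple dict in the same shape as the output file."""
    return {"subject": s, "relation": r, "object": o}


def to_set(triples):
    """Represent each triple as a hashable (subject, relation, object) tuple."""
    return {(t["subject"], t["relation"], t["object"]) for t in triples}


gold = [
    triple("Fischer", "/people/person/nationality", "Iceland"),
    triple("Fischer", "/people/deceased_person/place_of_death", "Reykjavik"),
    triple("Iceland", "/location/location/contains", "Reykjavik"),
    triple("Iceland", "/location/country/capital", "Reykjavik"),
]
pred = [
    triple("Fischer", "/people/person/nationality", "Iceland"),
    triple("Iceland", "/location/location/contains", "Reykjavik"),
    triple("Iceland", "/location/country/capital", "Reykjavik"),
]

gold_set, pred_set = to_set(gold), to_set(pred)
new = pred_set - gold_set      # predicted, but not in the gold set
lack = gold_set - pred_set     # in the gold set, but missed
correct = pred_set & gold_set  # counted as correct for this sentence

print(len(correct), len(pred_set), len(gold_set))
print(sorted(new), sorted(lack))
```

Summed over the whole test set, these per-sentence counts give the `correct_num`, `predict_num` and `gold_num` values that appear in the logs in section 8.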

7. Possible problems with the code

An error I ran into while running the code:

```text
Traceback (most recent call last):
  File "/opt/data/private/code/CasRel/run.py", line 40, in <module>
    subject_model, object_model, hbt_model = E2EModel(bert_config_path, bert_checkpoint_path, LR, num_rels)
  File "/opt/data/private/code/CasRel/model.py", line 15, in E2EModel
    bert_model = load_trained_model_from_checkpoint(bert_config_path, bert_checkpoint_path, seq_len=None)
  File "/opt/data/private/pyenvs/cas_rel_env/lib/python3.7/site-packages/keras_bert/loader.py", line 169, in load_trained_model_from_checkpoint
    **kwargs)
  File "/opt/data/private/pyenvs/cas_rel_env/lib/python3.7/site-packages/keras_bert/loader.py", line 58, in build_model_from_config
    **kwargs)
  File "/opt/data/private/pyenvs/cas_rel_env/lib/python3.7/site-packages/keras_bert/bert.py", line 126, in get_model
    adapter_activation=gelu,
TypeError: get_encoders() got an unexpected keyword argument 'use_adapter'
```

The installed keras-bert passes a `use_adapter` argument that `get_encoders()` in the installed keras-transformer does not accept. In other words, the versions of the two packages do not match. See https://github.com/weizhepei/CasRel/issues/54
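To confirm this kind of mismatch locally, use `inspect.signature`, which shows which keyword arguments a function accepts. The helper below is a hypothetical sketch. The commented usage assumes that `get_encoders` can be imported from `keras_transformer`, the package keras-bert takes it from.

```python
import inspect


def accepts_kwarg(func, name):
    """True if `func` can be called with the keyword argument `name`."""
    for p in inspect.signature(func).parameters.values():
        if p.kind is inspect.Parameter.VAR_KEYWORD:
            return True  # **kwargs swallows any keyword
        if p.name == name and p.kind in (
            inspect.Parameter.POSITIONAL_OR_KEYWORD,
            inspect.Parameter.KEYWORD_ONLY,
        ):
            return True
    return False


# Usage against the real package (assumption: this import path works):
# from keras_transformer import get_encoders
# print(accepts_kwarg(get_encoders, "use_adapter"))
# If this prints False, the installed keras-transformer cannot take the
# argument that the installed keras-bert passes to it.
```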

8. Chinese support

Two places need to change: first, the pre-trained BERT; second, the triple extraction part. From the reply in the issue:

Hi, @fresh382227905. To make the model support Chinese, you may need to change the pre-trained BERT and the triple extraction part (due to the different tokenization between English and Chinese) with minor revisions. You can also refer to @longlongman’s great work : )

Reference: https://github.com/weizhepei/CasRel/issues/23

Another user reports:

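I agree with @Phoeby2618. I tried three setups: (1) splitting the Chinese text into a space-separated, English-like format and using the HBTokenizer from the code; (2) using the raw Chinese text with the native Tokenizer plus [unused1], and also changing `' '.join(sub.split('[unused1]'))` in the metric function accordingly; (3) using the raw Chinese text with the native Tokenizer without [unused1], with the metric changed as in (2).

The first two gave similar results. In the last case, the number of predicted relation triples was always 0. It seems [unused1] cannot simply be dropped, but I have not figured out why yet.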

Reference: https://github.com/weizhepei/CasRel/issues/50
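The following simplified sketch shows why the metric's `' '.join(sub.split('[unused1]'))` has to change for raw Chinese text. It is not the repo's code. Going by the quote above, I assume that the HBTokenizer marks the end of every whitespace-separated word with `[unused1]`, and I skip WordPiece splitting entirely. Rebuilding an entity string from its tokens then means splitting on that marker and re-joining the pieces: English needs a space between words, while unsegmented Chinese needs none.

```python
MARK = "[unused1]"


def tokenize_with_marks(text):
    """Simplified stand-in for a word-end-marking tokenizer (no WordPiece):
    every whitespace-separated word is followed by MARK."""
    tokens = []
    for word in text.split():
        tokens.extend([word, MARK])
    return tokens


def rebuild(tokens, sep):
    """Glue tokens together, drop '##' prefixes, then split on MARK
    and re-join the words with `sep`."""
    text = "".join(t[2:] if t.startswith("##") else t for t in tokens)
    return sep.join(w for w in text.split(MARK) if w)


english = tokenize_with_marks("Bobby Fischer")
chinese = tokenize_with_marks("冰 岛")  # Chinese pre-split into characters

print(rebuild(english, " "))  # Bobby Fischer
print(rebuild(chinese, " "))  # 冰 岛, which does not match the raw text 冰岛
print(rebuild(chinese, ""))   # 冰岛
```

This is also consistent with the report that removing the marker broke extraction entirely: without it, the split step has no word boundaries to work with.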

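There is also a reimplementation that works on Chinese directly:

https://github.com/longlongman/CasRel-pytorch-reimplement

For the original project, the Chinese text has to be segmented first. Running both on the demo example (the CMED dataset) gave the following results: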

CasRel-pytorch:

```text
correct_num: 4927, predict_num: 8899, gold_num: 10610
epoch 39, eval time: 49.97s, f1: 0.51, precision: 0.55, recall: 0.46
saving the model, epoch: 39, best f1: 0.51, precision: 0.55, recall: 0.46
```

CasRel:

```text
correct_num:4863.0000000001
predict_num:8697.0000000001
gold_num:10475.0000000001
f1: 0.5073, precision: 0.5592, recall: 0.4642, best f1: 0.5093
```

Both produce similar results.
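The reported scores follow from the three counts: precision = correct / predict, recall = correct / gold, and F1 is their harmonic mean. Recomputing them is a quick sanity check on the logs. My reading of the trailing `.0000000001` in the CasRel counts is that the counters start from a tiny value such as 1e-10 to avoid division by zero. That offset is far too small to affect the rounded scores, so the sketch uses the integer counts.

```python
def prf(correct, predict, gold):
    """Precision, recall and F1 from triple counts."""
    precision = correct / predict
    recall = correct / gold
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


runs = [("CasRel-pytorch", (4927, 8899, 10610)), ("CasRel", (4863, 8697, 10475))]
for name, counts in runs:
    p, r, f1 = prf(*counts)
    print(f"{name}: precision {p:.4f}, recall {r:.4f}, f1 {f1:.4f}")
```

Rounded, these reproduce the 0.55 / 0.46 / 0.51 and 0.5592 / 0.4642 / 0.5073 values in the two logs.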

![CASREL code run log, figure 1](http://img.e-com-net.com/image/info8/a15ea566075a4519829eae658474055f.jpg)