GG-CNN Reading Report

1. Motivation

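The paper describes a method that uses a convolutional neural network to analyse depth images of the objects to be grasped and to generate grasp poses, allowing a robot to grasp unknown objects efficiently and in real time.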

2. Contributions

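Compared with earlier work that uses computer vision for robotic grasping, the proposed method generates grasps in real time for unknown objects that are static, moving, or piled together in clutter. The GG-CNN it uses contains far fewer parameters than previous networks and performs better.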

3. Method

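A grasp is first defined in Cartesian coordinates by the gripper's centre position, its rotation about the vertical (z) axis, the gripper width, and a grasp-quality score. For an image captured by the depth camera, the same grasp is described in image space by its centre pixel s, its rotation relative to the camera reference frame, and its width in pixels; this image-space grasp is then converted to the world frame using the camera intrinsics and the camera's actual physical pose. Doing this for every pixel s of the image yields the grasp map G, in which each pixel s has a corresponding scalar q expressing the probability that a grasp there will succeed.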
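The image-to-world conversion described above can be sketched with a standard pinhole back-projection followed by a homogeneous transform. This is a minimal illustration, not the paper's code: the intrinsics (fx, fy, cx, cy), the 4x4 camera-to-world matrix T_wc, and the helper names are all hypothetical, and the in-image angle is reused as the gripper yaw only under the stated assumption that the camera frame is translated, not rotated, relative to the world frame.

```python
# Minimal sketch (hypothetical helpers): convert an image-space grasp
# (centre pixel s, angle phi, width in pixels) into a world-frame grasp.
# Assumes a pinhole camera with intrinsics (fx, fy, cx, cy) and a 4x4
# homogeneous camera-to-world transform T_wc given as nested lists.


def pixel_to_camera(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) at metric depth into the camera frame."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def camera_to_world(p_cam, T_wc):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    x, y, z = p_cam
    return tuple(
        T_wc[i][0] * x + T_wc[i][1] * y + T_wc[i][2] * z + T_wc[i][3]
        for i in range(3)
    )


def image_grasp_to_world(s, depth, phi_img, width_px, intrinsics, T_wc):
    """Return (world position, yaw, width in metres) for an image-space grasp.

    Assumption: T_wc contains only a translation, so the in-image angle can
    be used directly as the gripper yaw. The pixel width is converted to
    metres with the pinhole model at the grasp depth.
    """
    fx, fy, cx, cy = intrinsics
    u, v = s
    p_world = camera_to_world(pixel_to_camera(u, v, depth, fx, fy, cx, cy), T_wc)
    width_m = width_px * depth / fx
    return p_world, phi_img, width_m
```

For example, with hypothetical intrinsics (600, 600, 150, 150) and an identity T_wc, the principal-point pixel (150, 150) at 0.35 m depth maps to (0, 0, 0.35), and a 60-pixel width becomes about 0.035 m. With a real eye-in-hand camera, T_wc would come from hand-eye calibration and the current arm pose, and the yaw would also need rotating into the world frame.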

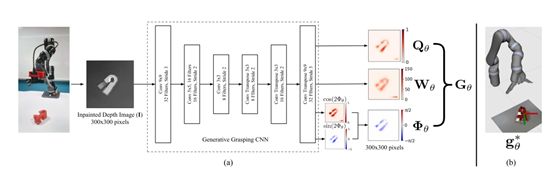

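The problem is then posed as finding a complex function M that maps an input image I to the grasp map G, i.e. M(I) = G. This function is approximated by a neural network M_θ with weights θ trained under an L2 loss, i.e. θ = argmin_θ L2(G_T, M_θ(I_T)) over the training images I_T and their target maps G_T. When building the training targets, only the central third of each labelled grasp rectangle in the dataset is marked as a valid grasp position. GG-CNN uses a fully convolutional topology. It takes 300×300 depth images as input, OpenCV is used to repair (inpaint) invalid pixels in the depth images, and the data are split 80/20 into training and validation sets.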
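The target construction and the L2 objective above can be illustrated on tiny maps. This is a hypothetical sketch: the real dataset labels are rotated rectangles, whereas here rectangles are assumed axis-aligned as (x0, y0, x1, y1) in pixels, and the "central third" is assumed to be taken along the gripper-opening axis (x here). The L2 loss is written as the per-pixel mean squared error.

```python
# Hypothetical sketch: build a quality target Q_T from labelled grasp
# rectangles and compare it with a predicted map using an L2 (mean squared
# error) loss. Rectangles are assumed axis-aligned (x0, y0, x1, y1), and the
# central third is assumed to lie along the gripper-opening (x) axis.


def centre_third_mask(h, w, rects):
    """Return an h x w map with 1.0 inside the central third of each rectangle."""
    q = [[0.0] * w for _ in range(h)]
    for x0, y0, x1, y1 in rects:
        third = (x1 - x0) / 3.0
        cx0, cx1 = x0 + third, x1 - third  # keep only the middle third in x
        for v in range(max(0, y0), min(h, y1)):
            for u in range(w):
                if cx0 <= u < cx1:
                    q[v][u] = 1.0
    return q


def l2_loss(pred, target):
    """Mean squared error over all pixels of two equally sized maps."""
    total, n = 0.0, 0
    for row_p, row_t in zip(pred, target):
        for p, t in zip(row_p, row_t):
            total += (p - t) ** 2
            n += 1
    return total / n
```

For a 2×9 image with one rectangle spanning the full width, only pixels u = 3, 4, 5 in each row are marked as valid, so an all-zero prediction has a loss of 6/18. Marking only the centre of each rectangle keeps the network from being rewarded for grasps near the rectangle edges, where the gripper would be badly placed.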

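Grasps are then executed in either open-loop or closed-loop mode. Depth frames are captured in real time (a single frame for open-loop, a continuous stream for closed-loop) and fed to GG-CNN, which proposes the three top-ranked grasp poses; the robot chooses the one it can reach most quickly from its current pose. After moving to that position, the robot descends vertically, closes the gripper on the object once a collision is detected, lifts it back to the start position, and records whether the grasp succeeded.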
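The target-selection step described above (keep the three best candidates, then take the one nearest the current pose) can be sketched as follows. The candidate format (a dict with world position "p" and quality "q") and the function name are hypothetical, and "fastest to reach" is approximated here by Euclidean distance, which is an assumption rather than the paper's exact criterion.

```python
import math

# Hypothetical sketch of grasp-target selection: keep the k highest-quality
# candidates, then choose the one closest to the gripper's current position.
# Euclidean distance is used as a stand-in for "quickest to reach".


def select_grasp(candidates, current_pos, k=3):
    """candidates: list of dicts with 'p' (x, y, z) and 'q' (quality)."""
    top_k = sorted(candidates, key=lambda g: g["q"], reverse=True)[:k]
    return min(top_k, key=lambda g: math.dist(g["p"], current_pos))
```

Choosing by proximity rather than by raw quality alone keeps the target from jumping between distant grasps when their scores fluctuate from frame to frame, which matters most in the closed-loop mode.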

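The overall pipeline is shown in the figure below.

In the figure, Q denotes the grasp-quality map, W the grasp-width map, and Φ the grasp-angle map.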
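The three output maps can be reduced to a single grasp by taking the pixel with the highest quality and reading the width and angle at that same pixel. This is a minimal sketch that assumes Q, W and PHI are equally sized 2D lists holding per-pixel quality, width (pixels) and angle (radians); the function name is hypothetical.

```python
# Hypothetical sketch: pick the best grasp from per-pixel output maps.
# Q, W and PHI are assumed to be equally sized H x W nested lists.


def best_grasp(Q, W, PHI):
    best_q, best_s = float("-inf"), None
    for v, row in enumerate(Q):
        for u, q in enumerate(row):
            if q > best_q:
                best_q, best_s = q, (u, v)
    u, v = best_s
    return {"s": best_s, "q": best_q, "w": W[v][u], "phi": PHI[v][u]}
```

The returned pixel s, width and angle form an image-space grasp, which is then converted to the world frame as described in the method section.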

4. Experimental Results

The hardware setup consists of an Intel RealSense SR300 depth camera and a Kinova Mico 6-DOF robotic arm. GG-CNN runs on an NVIDIA GTX 1070 GPU under Ubuntu 16.04.

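Grasping experiments were run on static objects, moving (dynamic) objects, and dynamic cluttered piles of objects on the workbench, with the objects divided into common household items and objects with complex geometry. The results are as follows: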

5. Analysis and Summary

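The paper proposes the Generative Grasping Convolutional Neural Network (GG-CNN), an object-independent model that generates grasp poses directly from the image, without the candidate sampling and classification required by other deep-learning approaches. Compared with other networks, GG-CNN can update its grasp poses at up to 50 Hz, which enables closed-loop control. Experiments show that the system achieves state-of-the-art performance for grasping unknown, moving objects, including objects in dynamic clutter. In addition, when control (kinematic) errors are simulated on the robot, the closed-loop method clearly outperforms the open-loop one.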
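Why closed-loop control tolerates control errors better than open-loop control can be seen in a toy 1D model. This is an illustration under stated assumptions, not the paper's experiment: the robot's actual motion is assumed to be scaled by an unknown calibration error, standing in for kinematic error. Open loop measures the offset to the target once and sends one command; closed loop re-measures the offset every cycle and commands a fraction (gain) of it.

```python
# Toy 1D model (assumption, not the paper's setup): a commanded displacement
# is executed with an unknown scale error, standing in for kinematic error.


def execute(command, scale_error):
    """Actual displacement produced by a commanded displacement."""
    return command * (1.0 + scale_error)


def open_loop(start, target, scale_error):
    offset = target - start  # single measurement, single move
    return start + execute(offset, scale_error)


def closed_loop(start, target, scale_error, gain=0.5, steps=50):
    pos = start
    for _ in range(steps):
        offset = target - pos  # fresh measurement every control cycle
        pos += execute(gain * offset, scale_error)
    return pos
```

With a 20% scale error, open loop ends 0.2 units short of or beyond a target 1 unit away, while in closed loop the residual error shrinks by a factor of |1 - gain·(1 + scale_error)| each cycle (0.4 here), so it converges as long as that factor stays below 1. A high update rate such as 50 Hz gives the loop many such cycles during a single grasp attempt.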

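To encourage reproducible robotic-grasping experiments, the paper uses two standard object sets: 8 3D-printed objects with adversarial geometry, and 12 household objects drawn from standard robotic benchmark object sets; it also defines parameters for the dynamic experiments. When objects are moved during the grasp attempt, GG-CNN reaches grasp success rates of 83% on the adversarial set and 88% on the household set, and 81% when grasping objects in dynamic clutter.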
