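Artificial Neural Networks

Neurons and Perceptrons

M-P Neuron

The weight vector W of an M-P (McCulloch-Pitts) neuron cannot be learned or updated automatically, so the model has no ability to learn.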
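As a minimal sketch of what "no learning" means here, the snippet below hard-codes the weights and threshold of an M-P style neuron so that it behaves like a logical AND gate. The names `mp_neuron`, `AND_WEIGHTS`, and `AND_THRESHOLD` are illustrative choices, not part of the original notes; the point is only that the parameters are picked by hand and never updated.

```python
def mp_neuron(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs reaches the threshold, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


# Hand-picked (not learned) parameters that make the neuron act as logical AND.
AND_WEIGHTS = [1, 1]
AND_THRESHOLD = 2

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, mp_neuron(x, AND_WEIGHTS, AND_THRESHOLD))
```

Changing the behavior (for example to logical OR) requires editing the threshold by hand, which is exactly the limitation the perceptron removes.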

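Perceptron

- Adjusts its weights automatically, so it is able to learn

- The first neural network model to be precisely defined by an algorithm

- A linear binary classifier

- The perceptron algorithm has multiple solutions; which one it reaches depends on the initial weight vector and on the order in which misclassified samples are presented

- On a data set that is not linearly separable, the perceptron training rule does not converge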
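The points above can be illustrated with a small pure-Python sketch of the perceptron training rule. The function name `train_perceptron`, the learning rate, and the epoch limit are assumptions made for this example. It is trained once on the linearly separable AND problem and once on XOR, which is not linearly separable.

```python
def train_perceptron(samples, labels, lr=1.0, max_epochs=100):
    """Train a single perceptron; return (weights, bias, converged)."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, y in zip(samples, labels):
            pred = 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0
            if pred != y:
                # Perceptron rule: shift the boundary toward the misclassified sample.
                delta = lr * (y - pred)
                w = [wi + delta * xi for wi, xi in zip(w, x)]
                b += delta
                errors += 1
        if errors == 0:
            # A full pass without mistakes: the data is separated.
            return w, b, True
    return w, b, False


X = [(0, 0), (0, 1), (1, 0), (1, 1)]
w_and, b_and, ok_and = train_perceptron(X, [0, 0, 0, 1])  # AND
w_xor, b_xor, ok_xor = train_perceptron(X, [0, 1, 1, 0])  # XOR
print("AND converged:", ok_and, w_and, b_and)
print("XOR converged:", ok_xor)
```

On AND the loop stops after a handful of passes, while on XOR it runs until `max_epochs` because no straight line separates the two classes.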

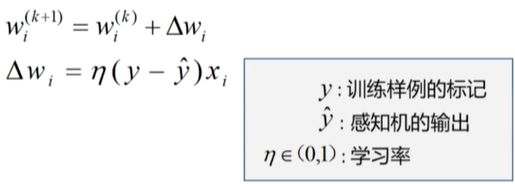

Delta rule: uses gradient descent to find the weight vector that best fits the training set.
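A minimal sketch of the Delta rule, assuming a single linear unit o = w·x + b and a mean squared error loss (the notes do not spell out the loss, so this is an assumption). The function name `delta_rule` and the toy data t = 2x + 1 are made up for illustration.

```python
def delta_rule(samples, targets, lr=0.05, epochs=2000):
    """Fit a linear unit o = w.x + b by batch gradient descent on squared error."""
    w = [0.0] * len(samples[0])
    b = 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, t in zip(samples, targets):
            o = sum(wi * xi for wi, xi in zip(w, x)) + b
            err = t - o
            # Gradient of 0.5 * err**2 with respect to w is -err * x, and -err for b.
            grad_w = [g - err * xi for g, xi in zip(grad_w, x)]
            grad_b -= err
        # Step against the mean gradient.
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


samples = [(0.0,), (1.0,), (2.0,), (3.0,)]
targets = [2.0 * x[0] + 1.0 for x in samples]
w_fit, b_fit = delta_rule(samples, targets)
print(w_fit, b_fit)
```

Unlike the perceptron rule, the update uses the unthresholded output, so the error shrinks smoothly even when the data cannot be fitted exactly.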

Logistic regression can be viewed as a single-layer neural network.
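To make that correspondence concrete, here is a small sketch in which one neuron with a sigmoid activation is trained by gradient descent on the cross-entropy loss, which is exactly logistic regression. The function names and the toy OR data set are assumptions for illustration only.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(samples, labels, lr=0.5, epochs=2000):
    """One sigmoid neuron trained with batch gradient descent on cross-entropy."""
    w = [0.0] * len(samples[0])
    b = 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in zip(samples, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # derivative of the cross-entropy loss w.r.t. the pre-activation
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


X_or = [(0, 0), (0, 1), (1, 0), (1, 1)]
y_or = [0, 1, 1, 1]
w_lr, b_lr = train_logistic(X_or, y_or)
pred_or = [1 if sigmoid(sum(wi * xi for wi, xi in zip(w_lr, x)) + b_lr) > 0.5 else 0
           for x in X_or]
print(pred_or)
```

The weights and bias play the role of the layer's parameters, and the sigmoid is its activation function.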

Designing a neural network:

- The structure of the network

- The activation function

- The loss function
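For a multi-class problem such as the Iris example, a common pairing is a softmax activation on the output layer with a categorical cross-entropy loss. The pure-Python sketch below shows what those two functions compute; the helper names `softmax` and `cross_entropy` are illustrative and are not the TensorFlow implementations.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(one_hot, probs):
    """Categorical cross-entropy between a one-hot label and predicted probabilities."""
    return -sum(t * math.log(p) for t, p in zip(one_hot, probs) if t > 0)


probs = softmax([2.0, 1.0, 0.1])
print(probs, sum(probs))
print(cross_entropy([1, 0, 0], probs))
```

The loss is small when the probability assigned to the true class is close to 1 and grows without bound as that probability approaches 0.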

Iris example

(single-layer neural network)

import tensorflow as tf

import pandas as pd

import numpy as np

import matplotlib as mpl

import matplotlib.pyplot as plt

# Enable GPU memory growth to avoid running out of GPU memory

# gpus=tf.config.experimental.list_physical_devices('GPU')

# tf.config.experimental.set_memory_growth(gpus[0],True)

# Load the data

TRAIN_URL = "http://download.tensorflow.org/data/iris_training.csv"

train_path = tf.keras.utils.get_file(TRAIN_URL.split("/")[-1],TRAIN_URL)

TEST_URL = "http://download.tensorflow.org/data/iris_test.csv"

test_path = tf.keras.utils.get_file(TEST_URL.split('/')[-1],TEST_URL)

df_iris_train = pd.read_csv(train_path,header=0)

df_iris_test = pd.read_csv(test_path,header=0)

iris_train = np.array(df_iris_train)

iris_test = np.array(df_iris_test)

print(iris_train.shape, iris_test.shape)

# Prepare the data

x_train = iris_train[:,0:4]  # feature values

y_train = iris_train[:,4]  # labels

x_test = iris_test[:,0:4]

y_test = iris_test[:,4]

print(x_train.shape,y_train.shape)

print(x_test.shape,y_test.shape)

# Center each feature by subtracting its column mean (zero mean; the variance is not rescaled)

x_train = x_train-np.mean(x_train,axis=0)

x_test = x_test-np.mean(x_test,axis=0)

print(x_train.dtype,y_train.dtype)

X_train = tf.cast(x_train,tf.float32)

Y_train = tf.one_hot(tf.constant(y_train,dtype=tf.int32),3)

X_test = tf.cast(x_test,tf.float32)

Y_test = tf.one_hot(tf.constant(y_test,dtype=tf.int32),3)

print(X_train.shape,Y_train.shape)

print(X_test.shape,Y_test.shape)

# Set the hyperparameters and the display interval

learn_rate = 0.5

iter = 50

display_step = 10

# Initialize the model parameters

np.random.seed(612)

W=tf.Variable(np.random.randn(4,3),dtype=tf.float32)  # weights: a 2-D tensor with 4 rows and 3 columns

B = tf.Variable(np.zeros([3]),dtype=tf.float32)  # biases: a 1-D tensor

acc_train = []

acc_test = []

cce_trian = []

cce_test = []

# Train the model

for i in range(0,iter+1):

    with tf.GradientTape() as tape:

        PRED_train = tf.nn.softmax(tf.matmul(X_train,W)+B)

        Loss_train = tf.reduce_mean(tf.keras.losses.categorical_crossentropy(y_true=Y_train,y_pred=PRED_train))

    # The test-set forward pass is only used for monitoring, so it is not recorded on the tape

    PRED_test = tf.nn.softmax(tf.matmul(X_test,W)+B)

    Loss_test = tf.reduce_mean(tf.keras.losses.categorical_crossentropy(y_true=Y_test,y_pred=PRED_test))

    accuracy_train = tf.reduce_mean(tf.cast(tf.equal(tf.argmax(PRED_train.numpy(),axis=1),y_train),tf.float32))

    accuracy_test = tf.reduce_mean(tf.cast(tf.equal(tf.argmax(PRED_test.numpy(),axis=1),y_test),tf.float32))

    acc_train.append(accuracy_train)

    acc_test.append(accuracy_test)

    cce_trian.append(Loss_train)

    cce_test.append(Loss_test)

    # Gradient descent step on the training loss

    grads = tape.gradient(Loss_train,[W,B])

    W.assign_sub(learn_rate*grads[0])

    B.assign_sub(learn_rate*grads[1])

    if i % display_step == 0:

        print("i: %i, TrainAcc:%f, TrainLoss:%f, TestAcc:%f, TestLoss: %f"%(i,accuracy_train,Loss_train,accuracy_test,Loss_test))

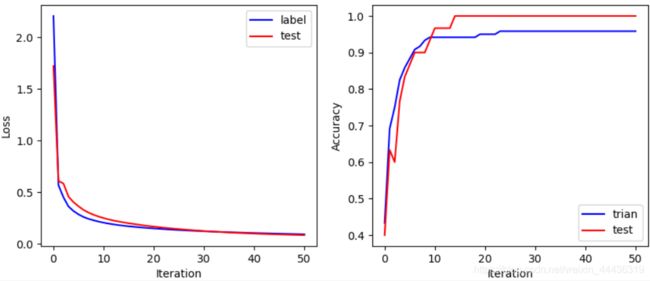

# Visualize the results

plt.figure(figsize=(10,4))

plt.subplot(121)

plt.plot(cce_trian,color="blue",label="train")

plt.plot(cce_test,color = "red",label="test")

plt.xlabel("Iteration")

plt.ylabel("Loss")

plt.legend()

plt.subplot(122)

plt.plot(acc_train,color = "blue",label = "train")

plt.plot(acc_test,color = "red",label = "test")

plt.xlabel("Iteration")

plt.ylabel("Accuracy")

plt.legend()

plt.show()

(multi-layer neural network with one hidden layer of 16 units)

import tensorflow as tf

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

# Enable GPU memory growth to avoid running out of GPU memory

# gpus=tf.config.experimental.list_physical_devices('GPU')

# tf.config.experimental.set_memory_growth(gpus[0],True)

# Load the data

TRAIN_URL = "http://download.tensorflow.org/data/iris_training.csv"

train_path = tf.keras.utils.get_file(TRAIN_URL.split("/")[-1],TRAIN_URL)

TEST_URL = "http://download.tensorflow.org/data/iris_test.csv"

test_path = tf.keras.utils.get_file(TEST_URL.split('/')[-1],TEST_URL)

df_iris_train = pd.read_csv(train_path,header=0)

df_iris_test = pd.read_csv(test_path,header=0)

iris_train = np.array(df_iris_train)

iris_test = np.array(df_iris_test)

print(iris_train.shape, iris_test.shape)

# Prepare the data

x_train = iris_train[:,0:4]  # feature values

y_train = iris_train[:,4]  # labels

x_test = iris_test[:,0:4]

y_test = iris_test[:,4]

print(x_train.shape,y_train.shape)

print(x_test.shape,y_test.shape)

# Center each feature by subtracting its column mean (zero mean; the variance is not rescaled)

x_train = x_train-np.mean(x_train,axis=0)

x_test = x_test-np.mean(x_test,axis=0)

print(x_train.dtype,y_train.dtype)

X_train = tf.cast(x_train,tf.float32)

Y_train = tf.one_hot(tf.constant(y_train,dtype=tf.int32),3)

X_test = tf.cast(x_test,tf.float32)

Y_test = tf.one_hot(tf.constant(y_test,dtype=tf.int32),3)

print(X_train.shape,Y_train.shape)

print(X_test.shape,Y_test.shape)

# Set the hyperparameters and the display interval

learn_rate = 0.5

iter = 50

display_step = 10

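# Initialize the model parameters

np.random.seed(612)

W1=tf.Variable(np.random.randn(4,16),dtype=tf.float32)  # hidden-layer weights: a 2-D tensor with 4 rows and 16 columns

B1 = tf.Variable(tf.zeros([16]),dtype=tf.float32)  # hidden-layer biases: a 1-D tensor

W2 = tf.Variable(np.random.randn(16,3),dtype=tf.float32)  # output-layer weights: a 2-D tensor with 16 rows and 3 columns

B2 = tf.Variable(tf.zeros([3]),dtype=tf.float32)  # output-layer biases: a 1-D tensor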

acc_train = []

acc_test = []

cce_trian = []

cce_test = []

# Train the model

for i in range(0,iter+1):

    with tf.GradientTape() as tape:

        Hidden_train = tf.nn.relu(tf.matmul(X_train,W1)+B1)

        PRED_train = tf.nn.softmax(tf.matmul(Hidden_train,W2)+B2)

        Loss_train = tf.reduce_mean(tf.keras.losses.categorical_crossentropy(y_true=Y_train,y_pred=PRED_train))

    # The test-set forward pass is only used for monitoring, so it is not recorded on the tape

    Hidden_test = tf.nn.relu(tf.matmul(X_test,W1)+B1)

    PRED_test = tf.nn.softmax(tf.matmul(Hidden_test,W2)+B2)

    Loss_test = tf.reduce_mean(tf.keras.losses.categorical_crossentropy(y_true=Y_test,y_pred=PRED_test))

    accuracy_train = tf.reduce_mean(tf.cast(tf.equal(tf.argmax(PRED_train.numpy(),axis=1),y_train),tf.float32))

    accuracy_test = tf.reduce_mean(tf.cast(tf.equal(tf.argmax(PRED_test.numpy(),axis=1),y_test),tf.float32))

    acc_train.append(accuracy_train)

    acc_test.append(accuracy_test)

    cce_trian.append(Loss_train)

    cce_test.append(Loss_test)

    # Gradient descent step on the training loss for both layers

    grads = tape.gradient(Loss_train,[W1,B1,W2,B2])

    W1.assign_sub(learn_rate*grads[0])

    B1.assign_sub(learn_rate*grads[1])

    W2.assign_sub(learn_rate*grads[2])

    B2.assign_sub(learn_rate*grads[3])

    if i % display_step == 0:

        print("i: %i, TrainAcc:%f, TrainLoss:%f, TestAcc:%f, TestLoss: %f"%(i,accuracy_train,Loss_train,accuracy_test,Loss_test))

# Visualize the results

plt.figure(figsize=(10,4))

plt.subplot(121)

plt.plot(cce_trian,color="blue",label="train")

plt.plot(cce_test,color = "red",label="test")

plt.xlabel("Iteration")

plt.ylabel("Loss")

plt.legend()

plt.subplot(122)

plt.plot(acc_train,color = "blue",label = "train")

plt.plot(acc_test,color = "red",label = "test")

plt.xlabel("Iteration")

plt.ylabel("Accuracy")

plt.legend()

plt.show()

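In theory, given enough hidden layers with enough neurons in each of them, a neural network can represent an arbitrarily complex function or spatial distribution.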
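A tiny illustration of why hidden layers add expressive power: XOR cannot be separated by a single linear unit, but one hidden layer with two ReLU units represents it exactly. The weights below are set by hand for illustration (they are an assumption of this sketch, not learned and not taken from the notes).

```python
def relu(z):
    return max(0.0, z)


def xor_net(x1, x2):
    """Two-layer network with hand-set weights that computes XOR."""
    h1 = relu(x1 + x2)        # hidden unit 1: counts how many inputs are on
    h2 = relu(x1 + x2 - 1.0)  # hidden unit 2: fires only when both inputs are on
    return h1 - 2.0 * h2      # linear output layer


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_net(a, b))
```

The hidden units re-map the four input points into a space where a single linear output can separate them.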

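Error backpropagation algorithm (Backpropagation, BP)

Using the chain rule, it propagates the gradient information of the loss function backward through the network and computes the gradient of the loss with respect to every model parameter.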
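The chain rule can be traced by hand on a very small network. The sketch below assumes a scalar network y = w2 * tanh(w1 * x + b1) + b2 with loss 0.5 * (y - t)^2; the network, the parameter values, and the function names are all chosen for illustration. The backpropagated gradients are compared against central finite differences as a sanity check.

```python
import math


def forward(params, x):
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)  # hidden activation
    y = w2 * h + b2             # network output
    return h, y


def loss(params, x, t):
    _, y = forward(params, x)
    return 0.5 * (y - t) ** 2


def backprop(params, x, t):
    """Apply the chain rule from the loss back to each parameter."""
    w1, b1, w2, b2 = params
    h, y = forward(params, x)
    dy = y - t                # dL/dy
    dw2 = dy * h              # dL/dw2 = dL/dy * dy/dw2
    db2 = dy                  # dL/db2
    dh = dy * w2              # dL/dh
    dz = dh * (1.0 - h * h)   # through tanh: d tanh(z)/dz = 1 - tanh(z)^2
    dw1 = dz * x              # dL/dw1
    db1 = dz                  # dL/db1
    return [dw1, db1, dw2, db2]


def numerical_grad(params, x, t, eps=1e-6):
    """Central finite differences, used only to check the analytic gradients."""
    grads = []
    for k in range(len(params)):
        plus = list(params)
        minus = list(params)
        plus[k] += eps
        minus[k] -= eps
        grads.append((loss(plus, x, t) - loss(minus, x, t)) / (2 * eps))
    return grads


params = [0.5, -0.3, 0.8, 0.1]
analytic = backprop(params, x=1.5, t=1.0)
numeric = numerical_grad(params, x=1.5, t=1.0)
print(analytic)
print(numeric)
```

In the TensorFlow listings in these notes, `tf.GradientTape` carries out this bookkeeping automatically.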

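A Sequential model has the following characteristics:

- It has exactly one set of inputs and one set of outputs

- Its layers are stacked one after another in order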

Handwritten digit recognition with the Sequential model

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

# Enable GPU memory growth to avoid running out of GPU memory

# gpus=tf.config.experimental.list_physical_devices('GPU')

# tf.config.experimental.set_memory_growth(gpus[0],True)

# Download the MNIST dataset

# mnist = tf.keras.datasets.mnist

# (train_x, train_y),(test_x,test_y) = mnist.load_data()

# Alternatively, load a copy of the dataset that was downloaded beforehand

mnist = np.load('mnist.npz')

train_x = mnist['x_train']

train_y = mnist['y_train']

test_x = mnist['x_test']

test_y = mnist['y_test']

print(train_x.shape)

print(train_y.shape)

print(test_x.shape)

print(test_y.shape)

# Preprocess the data

# X_train = train_x.reshape((60000,28*28))

# X_test = test_x.reshape((10000,28*28))

# print(X_train.shape)

# print(X_test.shape)

# Normalize the pixel values to the range 0 to 1

X_train, X_test = tf.cast(train_x/255.0,tf.float32),tf.cast(test_x/255.0,tf.float32)

y_train,y_test = tf.cast(train_y,tf.int16),tf.cast(test_y,tf.int16)

print(type(X_train),type(y_train))

# Build the model

model = tf.keras.Sequential()

model.add(tf.keras.layers.Flatten(input_shape=(28,28)))  # reshape each 28x28 input image into a 1-D vector

model.add(tf.keras.layers.Dense(128,activation='relu'))  # hidden layer: fully connected, 128 units, ReLU activation

model.add(tf.keras.layers.Dense(10,activation='softmax'))  # output layer: fully connected, 10 units, softmax activation

model.summary()  # show the network structure (summary() prints directly and returns None)

# Configure training: Adam optimizer, sparse categorical cross-entropy loss, sparse categorical accuracy metric

model.compile(optimizer='adam',

              loss='sparse_categorical_crossentropy',

              metrics=['sparse_categorical_accuracy'])

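# Train the model

# Hold out 20% of the training data as the validation set, use mini-batches of 64 samples, train for 5 epochs

model.fit(X_train,y_train,batch_size=64,epochs=5,validation_split=0.2)

# Evaluate the model

model.evaluate(X_test,y_test,verbose=2)

# Use the model

# Recognize the first four samples of the test set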

# model.predict([[X_test[0]]])

# np.argmax(model.predict([X_test[0]]))

# model.predict(X_test[0:4])

y_pred = np.argmax(model.predict(X_test[0:4]),axis=1)

for i in range(4):

    plt.subplot(1,4,i+1)

    plt.axis('off')

    plt.imshow(test_x[i],cmap='gray')

    plt.title('y='+str(test_y[i])+'\ny_pred='+str(y_pred[i]))

plt.figure()  # start a new figure for the randomly chosen samples

# Check four consecutive test samples starting at a random index

num = np.random.randint(0,len(test_x)-3)  # the upper bound is exclusive, so num+4 never runs past the end of the test set

y_pred = np.argmax(model.predict(X_test[num:num+4]),axis=1)

print(y_pred)

for i in range(4):

    plt.subplot(1,4,i+1)

    plt.axis('off')

    plt.imshow(test_x[num],cmap='gray')

    plt.title('y='+str(test_y[num])+'\ny_pred='+str(y_pred[i]))

    num = num + 1

plt.show()

Saving the model

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

# Load a copy of the dataset that was downloaded beforehand

mnist = np.load('mnist.npz')

train_x = mnist['x_train']

train_y = mnist['y_train']

test_x = mnist['x_test']

test_y = mnist['y_test']

print(train_x.shape)

print(train_y.shape)

print(test_x.shape)

print(test_y.shape)

# Preprocess the data

# Normalize the pixel values to the range 0 to 1

X_train, X_test = tf.cast(train_x/255.0,tf.float32),tf.cast(test_x/255.0,tf.float32)

y_train,y_test = tf.cast(train_y,tf.int16),tf.cast(test_y,tf.int16)

print(type(X_train),type(y_train))

# Build the model

model = tf.keras.Sequential()

model.add(tf.keras.layers.Flatten(input_shape=(28,28)))  # reshape each 28x28 input image into a 1-D vector

model.add(tf.keras.layers.Dense(128,activation='relu'))  # hidden layer: fully connected, 128 units, ReLU activation

model.add(tf.keras.layers.Dense(10,activation='softmax'))  # output layer: fully connected, 10 units, softmax activation

model.summary()  # show the network structure (summary() prints directly and returns None)

# Configure training: Adam optimizer, sparse categorical cross-entropy loss, sparse categorical accuracy metric

model.compile(optimizer='adam',

              loss='sparse_categorical_crossentropy',

              metrics=['sparse_categorical_accuracy'])

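# Train the model

# Hold out 20% of the training data as the validation set, use mini-batches of 64 samples, train for 5 epochs

model.fit(X_train,y_train,batch_size=64,epochs=5,validation_split=0.2)

# Evaluate the model

model.evaluate(X_test,y_test,verbose=2)

# Save the model

model.save('mnist_model.h5')  # save the complete model to an HDF5 (.h5) file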

Loading the saved model

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

# Load a copy of the dataset that was downloaded beforehand

mnist = np.load('mnist.npz')

train_x = mnist['x_train']

train_y = mnist['y_train']

test_x = mnist['x_test']

test_y = mnist['y_test']

print(train_x.shape)

print(train_y.shape)

print(test_x.shape)

print(test_y.shape)

# Preprocess the data

# Normalize the pixel values to the range 0 to 1

X_train, X_test = tf.cast(train_x/255.0,tf.float32),tf.cast(test_x/255.0,tf.float32)

y_train,y_test = tf.cast(train_y,tf.int16),tf.cast(test_y,tf.int16)

print(type(X_train),type(y_train))

# Load the saved model

model = tf.keras.models.load_model('mnist_model.h5')

model.summary()

# Evaluate the model

model.evaluate(X_test,y_test,verbose=2)

# Check four consecutive test samples starting at a random index

num = np.random.randint(0,len(test_x)-3)  # the upper bound is exclusive, so num+4 never runs past the end of the test set

y_pred = np.argmax(model.predict(X_test[num:num+4]),axis=1)

print(y_pred)

for i in range(4):

    plt.subplot(1,4,i+1)

    plt.axis('off')

    plt.imshow(test_x[num],cmap='gray')

    plt.title('y='+str(test_y[num])+'\ny_pred='+str(y_pred[i]))

    num = num + 1

plt.show()