DataAnnotation

KITTI格式的3D目标框标注

在得到图像数据,激光雷达数据,标定数据之后,需要进行3D目标框标注。本文采用的标注工具采用的是:SUSTechPOINTS。这个工具是2020年IEEE收录的,是一个比较好的开源项目,可以使得激光雷达和图像数据联合标注,感谢这个工作的贡献者。标注分为下列两个步骤:

- 安装SUSTechPOINTS

- 导入数据

- 标注结果

一、安装SUSTechPOINTS

二、导入数据

这个部分强调的是calibration这个文件。我们从autoware标定得到的结果是相机到激光雷达的变换矩阵,而SUST标注需要的是雷达到图像的变换矩阵。这个具体的变换原理,可以参考这篇博文。

而要从autoware标定的结果到SUST需要的标定参数,我们需要把autoware标定得到的外参矩阵,进行求逆。然后放入SUST中即可。例子:

import numpy as np

a = np.array([ [6.0286026043575580e-03, -9.0804300016155137e-03,

9.9994059910657929e-01, 6.1113303192026514e-02],

[-9.9974137931300844e-01, -2.1982070752877503e-02,

5.8277830037655742e-03, 3.9766420007541822e-03],

[2.1927846222605130e-02, -9.9971712716968164e-01,

-9.2106027835603399e-03, 5.3362131154951524e-02], [0., 0., 0., 1. ] ])

print(np.linalg.inv(a))

三、标注结果

从SUST标注后得到的结果是json文件,并以以下格式输出:

[{"psr":{"position":{"x":8.578882506346547,"y":4.570226735185341,"z":-0.620151247095764},"scale":{"x":3.870270893514173,"y":1.5810586719944622,"z":1.4560020655161139},"rotation":{"x":0,"y":0,"z":6.152285613280012}},"obj_type":"Car","obj_id":""}]

因此,我们需要转换为KITTI格式,下面为转换为KITTI的代码:

import json

import os

import math

import numpy as np

import random

import shutil

random.seed(0)

np.random.seed(0)

label_path = './data/20210409/label/'

calibFile = './data/20210409/calib/front.json'

sourceImagePath = './data/20210409/image/front/'

sourceVelodynePath = './data/20210409/bin/'

kittiLabelPath = '/home/.../catkin_ws/src/pcdetection/src/data/kitti/training/label_2/'

kittiCalibPath = '/home/.../catkin_ws/src/pcdetection/src/data/kitti/training/calib/'

imageSetsPath = '/home/.../catkin_ws/src/pcdetection/src/data/kitti/ImageSets/'

trainingPath = '/home/.../catkin_ws/src/pcdetection/src/data/kitti/training/'

testingPath = '/home/.../catkin_ws/src/pcdetection/src/data/kitti/testing/'

errorFlag = 0

def calibLabelFileGen(Path, fname, istrain=True):

if istrain:

if os.path.exists(Path + "label_2/" + fname.replace('json', 'txt')):

os.remove(Path + "label_2/" + fname.replace('json', 'txt'))

with open(label_path + fname)as fp:

# json content

jsonContent = json.load(fp)

if (len(jsonContent) == 0):

print("the json annotation file is empty, please check file: ", fname)

return 1

else:

for i in range(len(jsonContent)):

content = jsonContent[i]

psr = content["psr"]

position = psr["position"]

scale = psr["scale"]

rotation = psr["rotation"]

#lidar -> camera

pointXYZ = np.array([position["x"], position["y"], position["z"], 1]).T

camPosition = np.matmul(Tr_velo_to_cam, pointXYZ) #Tr_velo_to_cam @ pointXYZ camera coordinate position

#print(invExtrinsic @ pointXYZ)

# kitti content

kittiDict = {}

kittiDict["objectType"] = content["obj_type"]

kittiDict["truncated"] = "1.0"

kittiDict["occluded"] = "0"

kittiDict["alpha"] = "0.0"

kittiDict["bbox"] = [0.00, 0.00, 50.00, 50.00] # should be higher than 50

kittiDict["diamensions"] = [scale['z'], scale['y'], scale['x']] #height, width, length

kittiDict["location"] = [camPosition[0], camPosition[1] + float(scale["z"])/2 , camPosition[2] ] # camera coordinate

kittiDict["rotation_y"] = -math.pi/2 - rotation["z"]

# write txt files

with open(Path + "label_2/" + fname.replace('json', 'txt'), 'a+') as f:

for item in kittiDict.values():

if isinstance(item, list):

for temp in item:

f.writelines(str(temp) + " ")

else:

f.writelines(str(item)+ " ")

f.writelines("\n")

# write calibration files

with open(Path + "calib/" + fname.replace('json', 'txt'), 'w') as f:

P2 = np.array(intrinsic).reshape(3,3)

P2 = np.insert(P2, 3, values=np.array([0,0,0]), axis=1)

f.writelines("P0: ")

for num in P2.flatten():

f.writelines(str(num)+ " ")

f.writelines("\n")

f.writelines("P1: ")

for num in P2.flatten():

f.writelines(str(num)+ " ")

f.writelines("\n")

f.writelines("P2: ")

for num in P2.flatten():

f.writelines(str(num)+ " ")

f.writelines("\n")

f.writelines("P3: ")

for num in P2.flatten():

f.writelines(str(num)+ " ")

f.writelines("\n")

f.writelines("R0_rect: ")

for num in np.eye(3,3).flatten():

f.writelines(str(num)+ " ")

f.writelines("\n")

f.writelines("Tr_velo_to_cam: ")

for temp in Tr_velo_to_cam[:3].flatten():

f.writelines(str(temp) + " ")

f.writelines("\n")

f.writelines("Tr_imu_to_velo: ")

for temp in Tr_velo_to_cam[:3].flatten():

f.writelines(str(temp) + " ")

return 0

# SUST image coordinate, kitti camera coordinate

def getCalibMatrix():

with open(calibFile) as fp:

calib = json.load(fp)

return calib["extrinsic"], calib["intrinsic"]

extrinsic, intrinsic = getCalibMatrix()

Tr_velo_to_cam = np.array(extrinsic).reshape(4,4)

print("Tr_velo_to_cam Extrinsic: ", Tr_velo_to_cam)

# read all annotation json files

files = os.listdir(label_path)

total_num = len(files)

testing_num = 4

training_num = total_num - testing_num

print("total files num:", total_num)

print("training files num:", training_num)

print("testing files num:", testing_num)

fileLists = ["test.txt", "train.txt", "trainval.txt", "val.txt"]

for fileName in fileLists:

if fileName == "test.txt":

with open(imageSetsPath + fileName, 'w') as f:

for i in range(training_num,total_num):

errorFlag = calibLabelFileGen(testingPath ,files[i], istrain=False)

if errorFlag:

pass

else:

shutil.copy(sourceImagePath + files[i].replace("json", "png"), testingPath + 'image_2/' + files[i].replace("json", "png")) # copy Image

shutil.copy(sourceVelodynePath + files[i].replace("json", "bin"), testingPath + 'velodyne/' + files[i].replace("json", "bin")) # copy bin

f.writelines(files[i].strip(".json") + "\n")

elif fileName == "train.txt":

with open(imageSetsPath + fileName, 'w') as f:

for i in range(training_num):

errorFlag = calibLabelFileGen(trainingPath , files[i], istrain=True)

if errorFlag:

pass

else:

shutil.copy(sourceImagePath + files[i].replace("json", "png"), trainingPath + 'image_2/' + files[i].replace("json", "png")) # copy Image

shutil.copy(sourceVelodynePath + files[i].replace("json", "bin"), trainingPath + 'velodyne/' + files[i].replace("json", "bin")) # copy bin

f.writelines(files[i].strip(".json") + "\n")

else:

shutil.copy(imageSetsPath + 'train.txt', imageSetsPath + fileName)

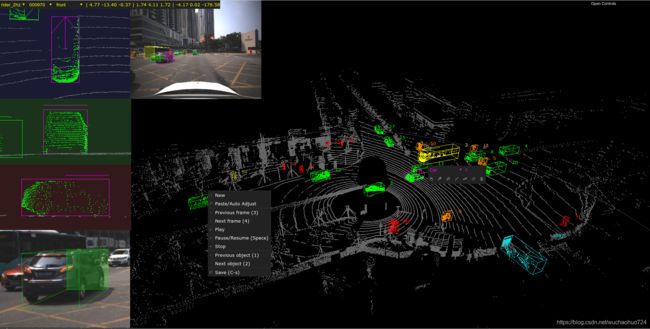

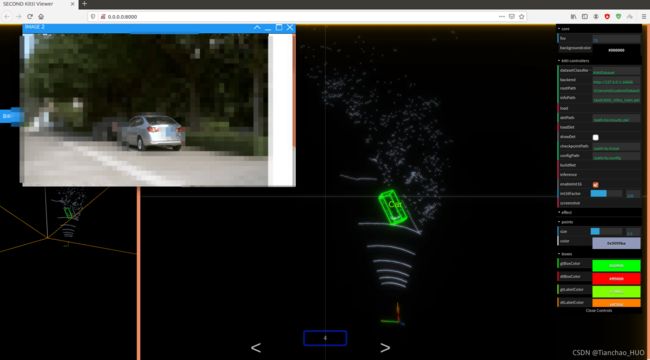

因为本文只用了一个相机,而KITTI有多个,因此其他的参数,如P0,P1等都是直接写入P2的参数,仅仅是为了满足KITTI的数据格式。而R0_rect本文则写入单位矩阵,Tr_velo_to_cam是上面一部分求出来的autoware标定结果的逆矩阵。而对于这种SUST到KITTI的转换,我们需要进行进一步的验证,保证标注的结果和训练的标注是一致的。而因为我们没有进行2D图像标注框,所以对于kittiDict[“bbox”] = [0.00, 0.00, 50.00, 50.00]设置为这个参数。为什么是50呢,因为KITTI上说明了Easy, Moderate, 和 hard的bounding box像素范围。如果全部设为0,会在数据预处理的时候,所有的数据都会被认为无效。我们通过kitti_viewer 查看转换后的结果。通过安装second.pytorch,利用其生成的kitti_infos_train.pkl。我们可以验证标注结果,如下图所示。

kitti训练的只是相机视野范围内的视锥体范围的点云,因此会出现以上右图的情况。

kitti训练的只是相机视野范围内的视锥体范围的点云,因此会出现以上右图的情况。

与其对应的标准文件显示如下:

上图的左图,代表的是SUST标注的结果,而右图代表的是转换后,kitti训练数据的结果。可以看出是一致的。

至此,标注完成。

Reference:

- https://github.com/naurril/SUSTechPOINTS