Fashion-MNIST classification model: annotated notes

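Working in IPython (for example a Jupyter notebook) makes it easy to inspect the output of each code block. The code is as follows: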

```python
import tensorflow as tf
import matplotlib as mpl
import matplotlib.pyplot as plt
%matplotlib inline
import numpy as np
import sklearn
import pandas as pd
import os
import sys
import time
from tensorflow import keras

print(tf.__version__)
print(sys.version_info)
for module in mpl, np, pd, sklearn, tf, keras:
    print(module.__name__, module.__version__)  # print each module's name and version
```

Output:

```
2.0.0
sys.version_info(major=3, minor=7, micro=3, releaselevel='final', serial=0)
matplotlib 3.1.1
numpy 1.17.3
pandas 0.25.3
sklearn 0.21.3
tensorflow 2.0.0
tensorflow_core.keras 2.2.4-tf
```

Dataset preparation:

```python
fashion_mnist = keras.datasets.fashion_mnist
(x_train_all, y_train_all), (x_test, y_test) = fashion_mnist.load_data()  # downloads the data on first use

# The training split has 60,000 images: the first 5,000 go to the validation set,
# the remaining 55,000 to the training set
x_valid, x_train = x_train_all[:5000], x_train_all[5000:]
y_valid, y_train = y_train_all[:5000], y_train_all[5000:]

print(x_valid.shape, y_valid.shape)
print(x_train.shape, y_train.shape)
print(x_test.shape, y_test.shape)  # print the shape of each split
```

Output:

```
(5000, 28, 28) (5000,)
(55000, 28, 28) (55000,)
(10000, 28, 28) (10000,)
```

```python
print(np.max(x_train), np.min(x_train))  # max and min pixel values of the training set before normalization
```

Output:

```
255 0
```

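```python
# Standardizing the inputs usually helps training and accuracy:
# x = (x - mean) / std
from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

# StandardScaler expects 2-D input, so each array is cast to float32 and flattened
# into a single column [N*784, 1]. Every pixel is treated as one sample of a single
# feature, so one global mean and std are used. The result is reshaped back to [N, 28, 28].
# fit_transform learns the mean and variance from the training set only;
# the validation and test sets reuse those statistics via transform.
x_train_scaled = scaler.fit_transform(
    x_train.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28)
x_valid_scaled = scaler.transform(
    x_valid.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28)
x_test_scaled = scaler.transform(
    x_test.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28)

print(np.max(x_train_scaled), np.min(x_train_scaled))  # max and min of the training set after normalization
```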
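As a minimal sketch of what the `reshape(-1, 1)` plus `StandardScaler` combination computes, the NumPy-only function below standardizes with one global mean and standard deviation taken from the training data, and applies the same statistics to the other arrays. It assumes the population standard deviation (`ddof=0`), which is what `StandardScaler` uses by default. The function name `standardize_global` and the random toy arrays are illustrative only.

```python
import numpy as np

def standardize_global(train, *others):
    """Standardize arrays with a single mean/std computed from `train` only.

    Mirrors StandardScaler on data reshaped to (-1, 1): every pixel is
    treated as one sample of a single feature.
    """
    train = train.astype(np.float32)
    mean = train.mean()
    std = train.std()  # population std (ddof=0)
    scaled = [(train - mean) / std]
    for arr in others:
        # validation/test data reuse the training statistics
        scaled.append((arr.astype(np.float32) - mean) / std)
    return scaled

rng = np.random.default_rng(0)
toy_train = rng.integers(0, 256, size=(100, 28, 28))
toy_valid = rng.integers(0, 256, size=(20, 28, 28))
train_s, valid_s = standardize_global(toy_train, toy_valid)
print(train_s.shape, valid_s.shape)  # (100, 28, 28) (20, 28, 28)
```

The shapes are preserved, and the scaled training data has mean close to 0 and standard deviation close to 1. The validation data only approximately does, because it reuses the training statistics.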

Output:

```
2.0231433 -0.8105136
```
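Standardization is a single increasing affine map. So the raw extremes 255 and 0 map exactly to the printed extremes, and solving the two equations recovers the mean $\mu$ and standard deviation $\sigma$ the scaler learned. The values below are approximate, worked by hand from the printed numbers:

$$
\frac{255-\mu}{\sigma} = 2.0231433, \qquad \frac{0-\mu}{\sigma} = -0.8105136
$$

$$
\frac{255}{\sigma} = 2.0231433 + 0.8105136 = 2.8336569 \;\Rightarrow\; \sigma \approx 89.99, \qquad \mu = 0.8105136\,\sigma \approx 72.94
$$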

Define a function that displays a single image:

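```python
def show_single_image(img_arr):
    plt.imshow(img_arr, cmap="binary")  # "binary" colormap: low values white, high values black
    plt.show()

show_single_image(x_train[0])  # show the first image in the training set
```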

```python
def show_imgs(n_rows, n_cols, x_data, y_data, class_names):
    assert len(x_data) == len(y_data)
    assert n_rows * n_cols <= len(x_data)  # rows * cols must not exceed the number of samples
    plt.figure(figsize=(n_cols * 1, n_rows * 2))  # one large figure for the whole grid
    for row in range(n_rows):
        for col in range(n_cols):
            index = n_cols * row + col
            plt.subplot(n_rows, n_cols, index + 1)  # subplot indices start at 1
            plt.imshow(x_data[index], cmap="binary",
                       interpolation='nearest')  # nearest-neighbour interpolation
            plt.axis('off')  # hide the axes
            plt.title(class_names[y_data[index]])
    plt.show()

# Placeholder class names: label k is shown as str(k + 1)
class_names = ['1', '2', '3', '4', '5', '6', '7', '8', '9', '10']

show_imgs(3, 5, x_valid, y_valid, class_names)  # show a 3 x 5 grid of validation images
```

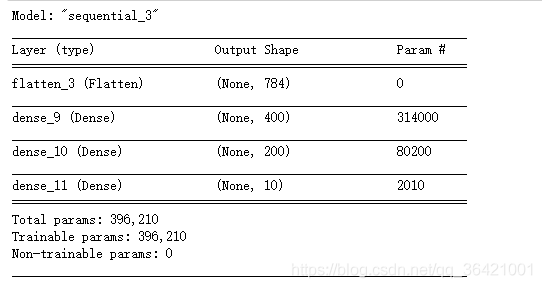
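The titles '1' to '10' above are placeholders. For readable titles, labels 0 to 9 of Fashion-MNIST correspond, in order, to the ten class names from the dataset's documentation. This sketch only builds that lookup; the helper `label_to_name` is hypothetical.

```python
# Fashion-MNIST class names, indexed by label 0-9
FASHION_MNIST_CLASSES = [
    'T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
    'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot',
]

def label_to_name(label):
    """Hypothetical helper: map an integer label (0-9) to its class name."""
    return FASHION_MNIST_CLASSES[int(label)]

print(label_to_name(0))  # T-shirt/top
print(label_to_name(9))  # Ankle boot
```

You can pass the list straight to the plotting function above, e.g. `show_imgs(3, 5, x_valid, y_valid, FASHION_MNIST_CLASSES)`.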

```python
model = keras.models.Sequential()
model.add(keras.layers.Flatten(input_shape=[28, 28]))  # flatten each 28x28 image into a 784-element vector
model.add(keras.layers.Dense(400, activation='relu'))  # fully connected layer
model.add(keras.layers.Dense(200, activation='relu'))
model.add(keras.layers.Dense(10, activation='softmax'))  # softmax output layer for the 10 classes
# Layer sizes and other hyperparameters can be adjusted to try to improve accuracy
```

The same model can also be written by passing the list of layers to `Sequential`:

```python
model = keras.models.Sequential([
    keras.layers.Flatten(input_shape=[28, 28]),
    keras.layers.Dense(400, activation='relu'),
    keras.layers.Dense(200, activation='relu'),
    keras.layers.Dense(10, activation='softmax'),
])
```
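To make the shapes in this architecture concrete, here is a NumPy-only sketch of the forward pass the model computes: flatten, two ReLU Dense layers, then a softmax output. The weights are random placeholders that are redrawn on every call, whereas Keras keeps and trains them. The names `dense` and `forward` are illustrative, not Keras APIs.

```python
import numpy as np

rng = np.random.default_rng(42)

def relu(z):
    return np.maximum(0.0, z)

def softmax(z):
    # subtracting the row max avoids overflow and does not change the result
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

def dense(x, n_in, n_out):
    # placeholder weights W: [n_in, n_out] and bias b: [n_out], drawn fresh each call
    W = rng.normal(0.0, 0.05, size=(n_in, n_out))
    b = np.zeros(n_out)
    return x @ W + b

def forward(images):
    x = images.reshape(len(images), -1)  # Flatten: [N, 28, 28] -> [N, 784]
    x = relu(dense(x, 784, 400))         # Dense(400, relu)
    x = relu(dense(x, 400, 200))         # Dense(200, relu)
    return softmax(dense(x, 200, 10))    # Dense(10, softmax)

batch = rng.normal(size=(4, 28, 28))
probs = forward(batch)
print(probs.shape)  # (4, 10)
```

Each row of `probs` is a probability distribution over the 10 classes, which is what the softmax output layer produces.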

Activation functions used above:

relu: y = max(0, x)

softmax turns a vector into a probability distribution. For x = [x1, x2, x3]: y = [e^x1/sum, e^x2/sum, e^x3/sum], where sum = e^x1 + e^x2 + e^x3.
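The two formulas above translate directly into NumPy. This minimal sketch follows the written definition of softmax, dividing each e^xi by the sum, and checks it on a small vector. For large inputs, a practical implementation usually subtracts max(x) first, which leaves the result unchanged.

```python
import numpy as np

def relu(x):
    # y = max(0, x), element-wise
    return np.maximum(0, x)

def softmax(x):
    # y_i = e^{x_i} / sum_j e^{x_j}
    e = np.exp(x)
    return e / e.sum()

x = np.array([1.0, 2.0, 3.0])
y = softmax(x)
print(relu(np.array([-2.0, 0.0, 3.0])))  # [0. 0. 3.]
print(y.round(4))
```

The outputs are all positive and sum to 1, and the largest input receives the largest probability.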

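```python
# sparse_categorical_crossentropy takes the labels y as integer class indices (0-9),
# so they don't need to be one-hot encoded first; the loss equals
# categorical_crossentropy applied to the one-hot encoded labels
model.compile(loss='sparse_categorical_crossentropy',
              optimizer="adam",
              metrics=["accuracy"])
```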
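The equivalence noted in the comment can be checked numerically. In this NumPy sketch, with toy probabilities and labels and illustrative function names, the sparse version reads off the predicted probability of the true class directly. The categorical version instead uses one-hot labels. Both give the same mean loss.

```python
import numpy as np

def sparse_ce(probs, labels):
    # labels are integer class indices: take -log p[true class] directly
    return -np.mean(np.log(probs[np.arange(len(labels)), labels]))

def categorical_ce(probs, one_hot):
    # labels are one-hot rows: sum over classes of -y * log(p)
    return -np.mean(np.sum(one_hot * np.log(probs), axis=1))

probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1],
                  [0.3, 0.3, 0.4]])
labels = np.array([0, 1, 2])
one_hot = np.eye(3)[labels]  # integer labels -> one-hot rows

print(sparse_ce(probs, labels))
print(np.isclose(sparse_ce(probs, labels), categorical_ce(probs, one_hot)))  # True
```

Skipping the one-hot step saves memory when there are many classes, which is why the sparse variant is convenient for integer labels such as `y_train`.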

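```python
model.summary()  # show the model's layers and parameter counts
# Parameters of the first Dense layer: y = Wx + b, with W of shape [400, 784] and b of shape [400]
# 784 * 400 + 400 = 314000
```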

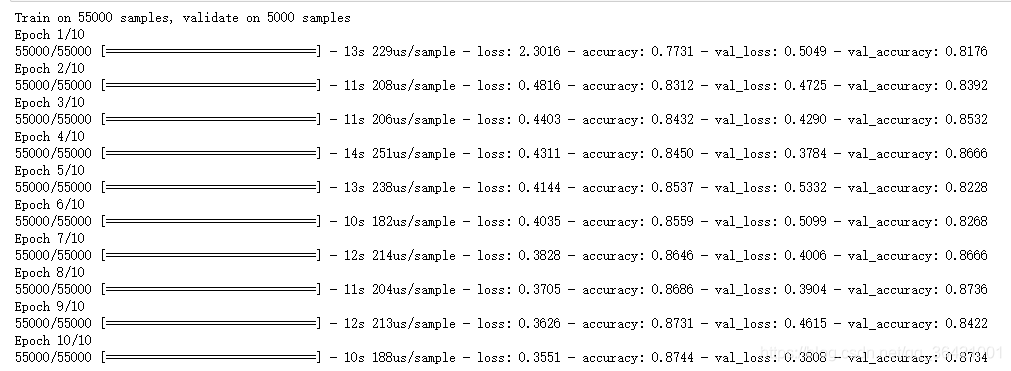
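The same arithmetic applies to every Dense layer: inputs × units weights plus units biases. The Flatten layer has no parameters. This short sketch takes the layer sizes from the model above and reproduces the per-layer counts and the total, which should match the `model.summary()` table.

```python
# (inputs, units) for each Dense layer of the model above
dense_layers = [(784, 400), (400, 200), (200, 10)]

total = 0
for n_in, n_out in dense_layers:
    params = n_in * n_out + n_out  # weights + biases
    total += params
    print(f"Dense({n_out}): {n_in} * {n_out} + {n_out} = {params}")
print("Total params:", total)  # Total params: 396210
```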

```python
history = model.fit(x_train_scaled, y_train, epochs=10,
                    validation_data=(x_valid_scaled, y_valid))
```

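Check the type of `history`. It is the `History` callback object returned by `fit`, not a function:

```python
type(history)
```

Output:

```
tensorflow.python.keras.callbacks.History
```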

```python
history.history  # dict of per-epoch metrics: training loss and accuracy, validation loss and accuracy
```

Output:

```
{'loss': [2.3015895209962673,
  0.4815958445809104,
  0.44026698377782647,
  0.43106005086465315,
  0.4143984176288952,
  0.40346119379130274,
  0.3827689492702484,
  0.37051838736967607,
  0.3626356901017102,
  0.3551486981045116],
 'accuracy': [0.77312726,
  0.83116364,
  0.8431818,
  0.8449818,
  0.8536909,
  0.8559091,
  0.86463636,
  0.86856365,
  0.87307274,
  0.87438184],
 'val_loss': [0.5049341471433639,
  0.47250081301927566,
  0.4290150542378426,
  0.3783755409121513,
  0.533234388539195,
  0.5099339093625546,
  0.40061412162780763,
  0.3903807807683945,
  0.4614589884161949,
  0.3808077231764793],
 'val_accuracy': [0.8176,
  0.8392,
  0.8532,
  0.8666,
  0.8228,
  0.8268,
  0.8666,
  0.8736,
  0.8422,
  0.8734]}
```

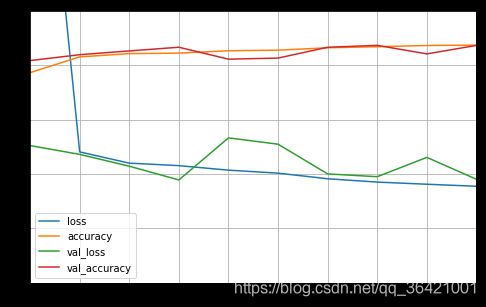
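The recorded history is enough to pick an epoch without retraining. The sketch below uses the `val_loss` and `val_accuracy` lists from the output above, rounded to four decimals, and finds the best epoch for each. In this run the two criteria disagree: the lowest validation loss comes at epoch 4 and the highest validation accuracy at epoch 8. The validation curves also fluctuate from epoch to epoch.

```python
import numpy as np

# From history.history in the output above (rounded to 4 decimals)
val_loss = [0.5049, 0.4725, 0.4290, 0.3784, 0.5332,
            0.5099, 0.4006, 0.3904, 0.4615, 0.3808]
val_accuracy = [0.8176, 0.8392, 0.8532, 0.8666, 0.8228,
                0.8268, 0.8666, 0.8736, 0.8422, 0.8734]

# np.argmin / np.argmax return 0-based indices; epochs are counted from 1
best_loss_epoch = int(np.argmin(val_loss)) + 1
best_acc_epoch = int(np.argmax(val_accuracy)) + 1
print("lowest val_loss at epoch", best_loss_epoch)      # epoch 4
print("highest val_accuracy at epoch", best_acc_epoch)  # epoch 8
```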

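Plot the learning curves:

```python
def plot_learning_curves(history):
    # Build a DataFrame from the history dict and plot one curve per metric; figure size 8 x 5 inches
    pd.DataFrame(history.history).plot(figsize=(8, 5))
    plt.grid(True)  # show grid lines
    plt.gca().set_ylim(0, 1)  # limit the y-axis to [0, 1]; values above 1 (e.g. the first-epoch loss of 2.30) are cut off
    plt.show()

plot_learning_curves(history)
```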

Evaluate on the test set:

```python
model.evaluate(x_test_scaled, y_test)  # returns [test loss, test accuracy]
```
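This model has a softmax output and integer labels. For it, the accuracy reported by `evaluate` is the fraction of samples whose highest-probability class equals the true label. Here is a minimal NumPy sketch of that computation. The toy probabilities stand in for the output of `model.predict(x_test_scaled)`, and `sparse_accuracy` is an illustrative name.

```python
import numpy as np

def sparse_accuracy(probs, labels):
    # predicted class = index of the largest probability in each row
    predicted = np.argmax(probs, axis=1)
    return np.mean(predicted == labels)

# toy stand-in for model.predict(...) output: 4 samples, 3 classes
probs = np.array([[0.6, 0.3, 0.1],
                  [0.2, 0.5, 0.3],
                  [0.1, 0.2, 0.7],
                  [0.5, 0.3, 0.2]])
labels = np.array([0, 1, 1, 1])
print(sparse_accuracy(probs, labels))  # 0.5
```

Applied to `model.predict(x_test_scaled)` and `y_test`, this should agree with the accuracy value returned by `model.evaluate`.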