Reproducing the ConvNeXt Model (CVPR 2022)

- 1. Abstract
- 2. Modernizing a ConvNet: a roadmap
- 3. Model design
  - 3.1 Macro Design
  - 3.2 ResNeXt-ify
  - 3.3 Inverted Bottleneck
  - 3.4 Large kernel
  - 3.5 Micro Design
- 4. ConvNeXt network structure
- 5. ConvNeXt-T structure diagram
- 6. Reproducing the ConvNeXt model in TensorFlow
  - 6.1 Model configuration
  - 6.2 Layer scale module
  - 6.3 Stochastic depth module
  - 6.4 ConvNext Block
  - 6.5 ConvNeXt architecture
  - 6.6 ConvNextTiny model
  - 6.7 ConvNextTiny model summary
- References

Reference paper: A ConvNet for the 2020s

Authors: Zhuang Liu, Hanzi Mao, Chao-Yuan Wu, Christoph Feichtenhofer, Trevor Darrell, Saining Xie

1. Abstract

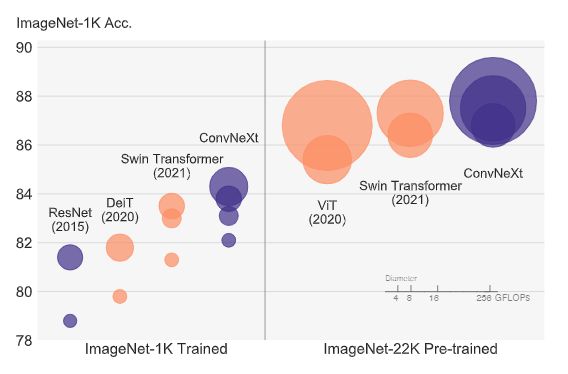

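ImageNet classification results for ConvNets and vision Transformers. We demonstrate that a standard ConvNet model can achieve the same level of scalability as hierarchical vision Transformers while being much simpler in design.

The "Roaring 20s" of visual recognition began with the introduction of Vision Transformers (ViTs), which quickly superseded ConvNets as the state-of-the-art image classification model. A vanilla ViT, on the other hand, faces difficulties when applied to general computer vision tasks such as object detection and semantic segmentation. It is the hierarchical Transformers (e.g., Swin Transformers) that reintroduced several ConvNet priors, making Transformers practically viable as a generic vision backbone and demonstrating remarkable performance on a wide variety of vision tasks. However, the effectiveness of such hybrid approaches is still largely credited to the intrinsic superiority of Transformers rather than to the inherent inductive biases of convolutions.

In this work, we reexamine the design space and test the limits of what a pure ConvNet can achieve. We gradually "modernize" a standard ResNet toward the design of a vision Transformer and, along the way, discover several key components that contribute to the performance difference. The outcome of this exploration is a family of pure ConvNet models called ConvNeXt. Constructed entirely from standard ConvNet modules, ConvNeXts compete with Transformers in accuracy and scalability, achieving 87.8% ImageNet top-1 accuracy and outperforming Swin Transformers on COCO detection and ADE20K segmentation, while maintaining the simplicity and efficiency of standard ConvNets.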

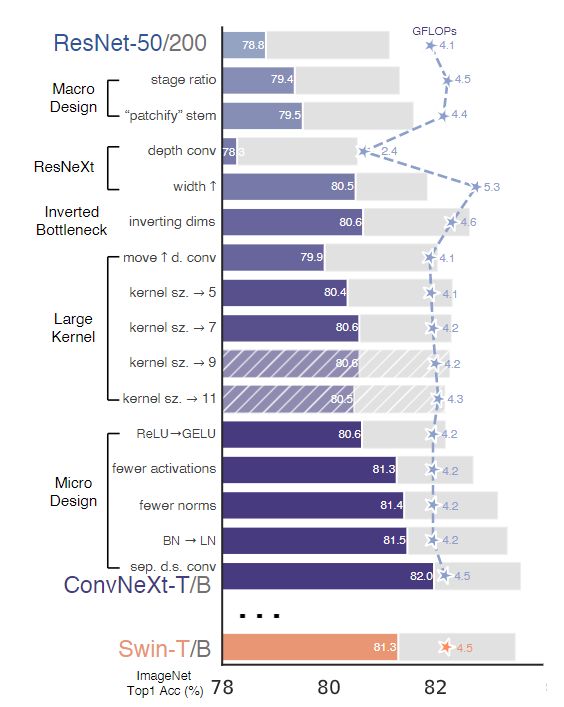

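2. Modernizing a ConvNet: a roadmap

Figure 2. We modernize a standard ConvNet (ResNet) toward the design of a hierarchical vision Transformer (Swin), without introducing any attention-based modules. The foreground bars are model accuracies in the ResNet-50/Swin-T FLOPs regime; results for the ResNet-200/Swin-B regime are shown with the gray bars. A hatched bar means the modification was not adopted. Detailed results for both regimes are in the appendix. Many Transformer architectural choices can be incorporated into a ConvNet, and they lead to increasingly better performance. In the end, our pure ConvNet model, named ConvNeXt, can outperform the Swin Transformer.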

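3. Model design

3.1 Macro Design

- Changing stage compute ratio: Swin-T uses a stage compute ratio of 1:1:3:1; for larger Swin Transformers, the ratio is 1:1:9:1. Following this design, we adjust the number of blocks in each stage from (3, 4, 6, 3) in ResNet-50 to (3, 3, 9, 3), which also aligns the FLOPs with Swin-T. This improves the model accuracy from 78.8% to 79.4%.

- Changing stem to "Patchify": we replace the ResNet-style stem with a patchify layer implemented as a 4×4 convolutional layer with stride 4. The accuracy changes from 79.4% to 79.5%. This suggests that the stem in a ResNet can be replaced with a simpler "patchify" layer à la ViT, with similar performance.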
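The two macro-design changes can be checked with a little arithmetic. The sketch below is only an illustration: `stage_ratio` and `conv_output_size` are hypothetical helper names, and the ResNet stem settings (7×7 conv with stride 2 and padding 3, followed by a 3×3 max pool with stride 2 and padding 1) are the usual ResNet-50 defaults, which this article does not spell out.

```python
from functools import reduce
from math import gcd


def stage_ratio(depths):
    """Reduce per-stage block counts to their simplest integer ratio."""
    divisor = reduce(gcd, depths)
    return tuple(d // divisor for d in depths)


def conv_output_size(size, kernel, stride, padding=0):
    """Spatial output size of a conv or pooling layer (floor convention)."""
    return (size + 2 * padding - kernel) // stride + 1


# (3, 3, 9, 3) reduces to the 1:1:3:1 ratio, (3, 4, 6, 3) does not.
print(stage_ratio((3, 4, 6, 3)), stage_ratio((3, 3, 9, 3)))

# Assumed standard ResNet stem: 7x7/2 conv (padding 3) + 3x3/2 max pool (padding 1).
resnet_stem = conv_output_size(conv_output_size(224, 7, 2, 3), 3, 2, 1)
# Patchify stem: a single non-overlapping 4x4 conv with stride 4.
patchify_stem = conv_output_size(224, 4, 4)
print(resnet_stem, patchify_stem)
```

Under these assumptions both stems reduce a 224×224 input by a factor of 4, so the patchify layer is a drop-in replacement as far as the feature-map resolution is concerned.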

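3.2 ResNeXt-ify

In this part, we attempt to adopt the idea of ResNeXt [87], which has a better FLOPs/accuracy trade-off than a vanilla ResNet. The core component is grouped convolution, where the convolutional filters are separated into different groups. At a high level, ResNeXt's guiding principle is to "use more groups, expand width". More precisely, ResNeXt employs grouped convolution for the 3×3 conv layer in a bottleneck block. As this significantly reduces the FLOPs, the network width is expanded to compensate for the capacity loss.

We use depthwise convolution, a special case of grouped convolution in which the number of groups equals the number of channels.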
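To make the relation between grouped and depthwise convolution concrete, here is a small parameter-count sketch. `grouped_conv_params` is a hypothetical helper written for this illustration; the 96 channels and the 7×7 kernel are the ConvNeXt-T values used later in this article.

```python
def grouped_conv_params(in_channels, out_channels, kernel_size, groups=1, bias=True):
    """Number of trainable parameters of a 2D (grouped) convolution."""
    if in_channels % groups or out_channels % groups:
        raise ValueError("channels must be divisible by groups")
    # Each output filter only sees in_channels / groups input channels.
    weights = kernel_size * kernel_size * (in_channels // groups) * out_channels
    return weights + (out_channels if bias else 0)


dense = grouped_conv_params(96, 96, 7)                 # ordinary convolution
depthwise = grouped_conv_params(96, 96, 7, groups=96)  # groups == channels
print(dense, depthwise)
```

With `groups` equal to the number of channels, each filter sees a single input channel, which gives 4800 parameters. That is the same count that the model summary in section 6.7 reports for the first depthwise layer.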

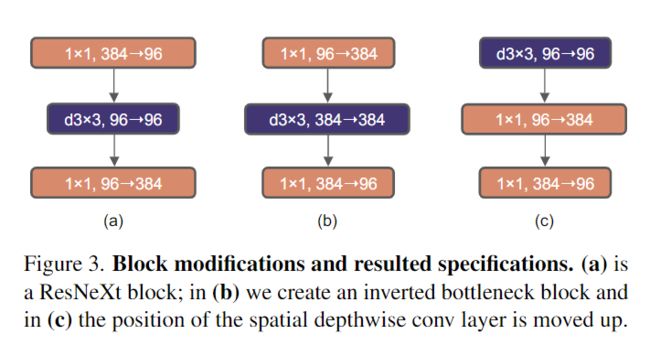

3.3 Inverted Bottleneck

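One important design in every Transformer block is that it creates an inverted bottleneck, i.e., the hidden dimension of the MLP block is four times wider than the input dimension (see Figure 4). Interestingly, this Transformer design is connected to the inverted bottleneck design with an expansion ratio of 4 used in ConvNets.

Here we explore the inverted bottleneck design. Figure 3 (a) to (b) illustrate the configurations. Despite the increased FLOPs of the depthwise convolution layer, this change reduces the FLOPs of the whole network to 4.6G, because the FLOPs of the shortcut 1×1 conv layer in the downsampling residual blocks drop significantly. Interestingly, this slightly improves performance (from 80.5% to 80.6%). In the ResNet-200 / Swin-B regime, this step brings an even larger gain (81.9% to 82.6%), also with reduced FLOPs.

(a) is a ResNeXt block;

in (b), we create an inverted bottleneck block;

in (c), the position of the spatial depthwise conv layer is moved up.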
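The inverted bottleneck with an expansion ratio of 4 can be sketched as two pointwise (1×1) layers, one that widens the channels and one that projects them back. The helper names below are hypothetical and only count parameters; `dim=96` is the first-stage width of ConvNeXt-T.

```python
def pointwise_params(in_channels, out_channels, bias=True):
    """Parameters of a 1x1 convolution (equivalently, a Dense layer on the channel axis)."""
    return in_channels * out_channels + (out_channels if bias else 0)


def inverted_bottleneck_params(dim, expansion=4):
    """Return (hidden width, expand-layer params, project-layer params)."""
    hidden = expansion * dim
    expand = pointwise_params(dim, hidden)   # dim -> 4 * dim
    project = pointwise_params(hidden, dim)  # 4 * dim -> dim
    return hidden, expand, project


hidden, expand, project = inverted_bottleneck_params(96)
print(hidden, expand, project)
```

For `dim=96` this gives a hidden width of 384 and 37248 and 36960 parameters, which agrees with the two pointwise layers of the first block in the model summary in section 6.7.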

3.4 Large kernel

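Although Swin Transformers reintroduced the local window to the self-attention block, the window size is at least 7×7, significantly larger than the ResNe(X)t kernel size of 3×3. Here we revisit the use of large-kernel convolutions in ConvNets.

- Moving up the depthwise conv layer: the position of the depthwise conv layer is moved up. This intermediate step reduces the FLOPs to 4.1G and results in a temporary performance drop to 79.9%.

- Increasing the kernel size: we experimented with several kernel sizes, including 3, 5, 7, 9, and 11. The network's performance increases from 79.9% (3×3) to 80.6% (7×7), while its FLOPs stay roughly the same. Additionally, we observe that the benefit of larger kernel sizes saturates at 7×7. We verified this behavior in the large-capacity model too: a ResNet-200 regime model shows no further gain when the kernel size is increased beyond 7×7.

We will use a 7×7 depthwise conv in each block.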
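One way to see why the FLOPs barely move is to compare, inside a single block, the cost of the depthwise layer with that of the two pointwise layers. The following is a back-of-the-envelope estimate, not a figure from the paper: it counts only multiply-accumulates of the three conv layers, ignores normalization, activations, the stem and the head, and uses hypothetical helper names.

```python
def block_macs(channels, height, width, kernel_size, expansion=4):
    """Multiply-accumulate counts (depthwise, pointwise) for one block."""
    positions = height * width
    # Depthwise: every channel is filtered by its own k x k kernel.
    depthwise = kernel_size * kernel_size * channels * positions
    # Pointwise: C -> 4C followed by 4C -> C.
    pointwise = 2 * channels * (expansion * channels) * positions
    return depthwise, pointwise


def depthwise_share(channels, kernel_size, size=56):
    """Fraction of a block's MACs spent in the depthwise layer."""
    depthwise, pointwise = block_macs(channels, size, size, kernel_size)
    return depthwise / (depthwise + pointwise)


for k in (3, 5, 7, 9, 11):
    print(f"{k}x{k}: depthwise share = {depthwise_share(96, k):.1%}")
```

Because the depthwise cost grows with k² but only linearly with the channel count, while the pointwise cost grows with the square of the channel count, the depthwise share stays small in this estimate, and it shrinks further in the wider later stages.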

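3.5 Micro Design

Most of the explorations here are done at the layer level, focusing on the specific choices of activation functions and normalization layers.

- Replacing ReLU with GELU: the authors find that ReLU can be replaced with GELU in the ConvNet as well, although the accuracy stays unchanged (80.6%).

- Fewer activation functions: it is common practice to append an activation function to each convolutional layer, including the 1×1 convs. Here we examine how performance changes when we instead follow the Transformer strategy of using fewer activations. As shown in Figure 4, we remove all GELU layers from the residual block except for the one between the two 1×1 layers, replicating the style of a Transformer block. This improves the result by 0.7% to 81.3%, practically matching the performance of Swin-T.

- Fewer normalization layers: Transformer blocks usually have fewer normalization layers as well. Here the authors remove two BatchNorm (BN) layers, leaving only one BN layer before the conv 1×1 layers. This further boosts the performance to 81.4%, already surpassing Swin-T's result. Note that we have even fewer normalization layers per block than Transformers, because empirically we find that adding an extra BN layer at the beginning of the block does not improve performance.

- Substituting BN with LN: BatchNorm [38] is an essential component in ConvNets because it improves convergence and reduces overfitting. However, BN also has many intricacies that can hurt the model's performance [84]. There have been numerous attempts at developing alternative normalization techniques [60, 75, 83], but BN has remained the preferred option in most vision tasks. On the other hand, the simpler Layer Normalization [5] (LN) is used in Transformers, with good performance across different application scenarios.

From now on, we will use one LayerNorm as the normalization in each residual block.

- Separate downsampling layers: in ResNet, spatial downsampling is performed by the residual block at the start of each stage, using a 3×3 conv with stride 2 (and a 1×1 conv with stride 2 at the shortcut connection). In Swin Transformers, a separate downsampling layer is added between stages. We explore a similar strategy: **the authors use 2×2 conv layers with stride 2 for spatial downsampling.** Further investigation shows that adding normalization layers wherever the spatial resolution changes helps stabilize training.

In short: Layer Normalization + 2x2 conv, stride=2.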
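The effect of the stem and the separate downsampling layers on the feature-map shape can be traced with a short sketch. `feature_map_shapes` is a hypothetical helper; the channel widths (96, 192, 384, 768) are the ConvNeXt-T values listed in section 4, and 'valid' (unpadded) convolutions are assumed.

```python
def downsample(size, kernel, stride):
    """Output size of an unpadded convolution."""
    return (size - kernel) // stride + 1


def feature_map_shapes(input_size=224, dims=(96, 192, 384, 768)):
    """Channels-last shapes (H, W, C) at the input of each of the four stages."""
    shapes = []
    # Stem: 4x4 conv, stride 4 (patchify).
    size = downsample(input_size, kernel=4, stride=4)
    shapes.append((size, size, dims[0]))
    # Between stages: LayerNorm + 2x2 conv, stride 2.
    for channels in dims[1:]:
        size = downsample(size, kernel=2, stride=2)
        shapes.append((size, size, channels))
    return shapes


shapes = feature_map_shapes()
for stage, shape in enumerate(shapes):
    print(f"stage {stage}: {shape}")
```

Each downsampling layer halves the resolution while the channel count doubles, so a 224×224 input ends at 7×7×768 in the last stage.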

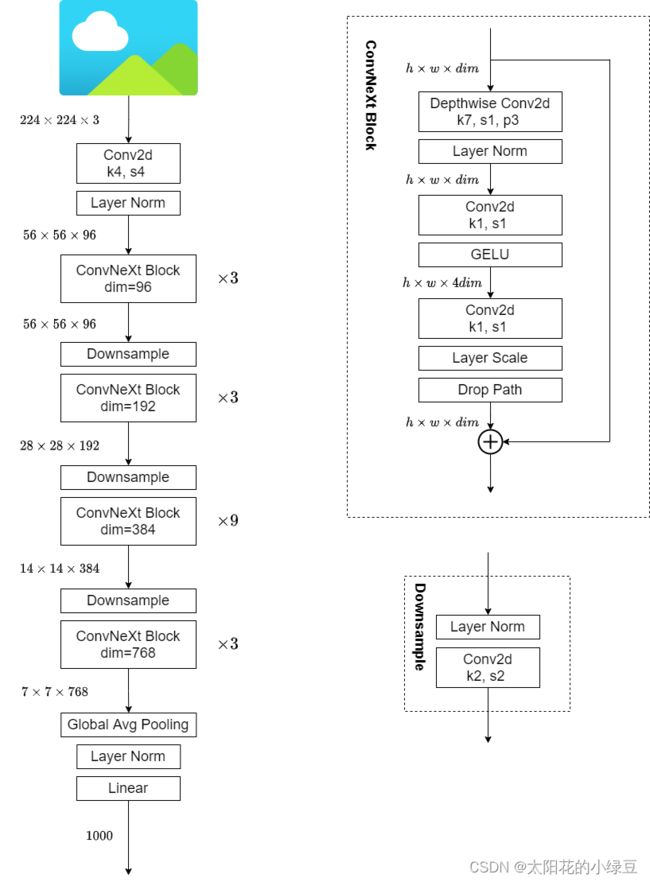

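4. ConvNeXt network structure

The network comes in five configurations:

- ConvNeXt-T: C = (96, 192, 384, 768), B = (3, 3, 9, 3)

- ConvNeXt-S: C = (96, 192, 384, 768), B = (3, 3, 27, 3)

- ConvNeXt-B: C = (128, 256, 512, 1024), B = (3, 3, 27, 3)

- ConvNeXt-L: C = (192, 384, 768, 1536), B = (3, 3, 27, 3)

- ConvNeXt-XL: C = (256, 512, 1024, 2048), B = (3, 3, 27, 3)

C is the number of channels of the feature maps in each stage.

B is the number of times the block is repeated in each stage.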

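5. ConvNeXt-T structure diagram

Image source: "ConvNeXt网络详解" and "13.1 ConvNeXt网络讲解".

The DownSample part of the GitHub source code shows that each DownSample block = LayerNormalization + 2x2 conv, stride=2.

The ConvNeXt Block also contains a Layer Scale operation (the paper only says that its value is set to 1e-6). It multiplies the input feature map by a trainable parameter. This parameter is a vector with as many elements as the feature map has channels, so the data of each channel is scaled separately.

I did not fully understand this part of the source code, but it appears to be just a scaling operation.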
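As a rough illustration of the Layer Scale idea described above, the following framework-free sketch multiplies every channel by its own coefficient. The function name `layer_scale` and the toy data are assumptions made for this example; the TensorFlow layer in section 6.2 does the same thing through broadcasting.

```python
def layer_scale(features, gamma):
    """Scale each channel of channels-last `features` by the matching entry of `gamma`.

    `features` is a list of "pixels", each a list with one value per channel.
    """
    return [[value * g for value, g in zip(pixel, gamma)] for pixel in features]


init_value = 1e-6
num_channels = 3
# One coefficient per channel, all starting at the same small value.
gamma = [init_value] * num_channels

features = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]  # two pixels, three channels
scaled = layer_scale(features, gamma)
print(scaled)

# After training, every channel may end up with a different coefficient.
print(layer_scale(features, [1.0, 0.5, 2.0]))
```

Starting from a very small value means the residual branch contributes almost nothing at initialization, so the block initially behaves close to an identity mapping; the coefficients are then learned like any other weight.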

6. Reproducing the ConvNeXt model in TensorFlow

    import numpy as np
    import tensorflow as tf
    from tensorflow.keras import layers
    from tensorflow.keras.models import Model

6.1 Model configuration

    MODEL_CONFIGS = {
        "tiny": {
            "depths": [3, 3, 9, 3],
            "projection_dims": [96, 192, 384, 768],
            "default_size": 224,
        },
        "small": {
            "depths": [3, 3, 27, 3],
            "projection_dims": [96, 192, 384, 768],
            "default_size": 224,
        },
        "base": {
            "depths": [3, 3, 27, 3],
            "projection_dims": [128, 256, 512, 1024],
            "default_size": 224,
        },
        "large": {
            "depths": [3, 3, 27, 3],
            "projection_dims": [192, 384, 768, 1536],
            "default_size": 224,
        },
        "xlarge": {
            "depths": [3, 3, 27, 3],
            "projection_dims": [256, 512, 1024, 2048],
            "default_size": 224,
        },
    }

Only the tiny configuration is reproduced here. The other variants are built the same way, just with different parameters.

6.2 Layer scale module

    class LayerScale(layers.Layer):
        """Multiplies the input by a trainable per-channel vector `gamma`."""

        def __init__(self, init_values, projection_dim, **kwargs):
            super().__init__(**kwargs)
            self.init_values = init_values
            self.projection_dim = projection_dim

        def build(self, input_shape):
            # One trainable coefficient per channel, initialized to `init_values`.
            self.gamma = tf.Variable(self.init_values * tf.ones((self.projection_dim,)))

        def call(self, x):
            # Broadcasts over the batch and spatial axes (channels-last input).
            return x * self.gamma

        def get_config(self):
            config = super().get_config()
            config.update(
                {
                    "init_values": self.init_values,
                    "projection_dim": self.projection_dim
                }
            )
            return config

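6.3 Stochastic depth module

    class StochasticDepth(layers.Layer):
        """Stochastic depth module.

        Args:
            drop_path_rate (float): probability of dropping the path.
                Should be in [0, 1); a rate of 1 would divide by zero.

        Returns:
            The tensor with the residual path either dropped or kept.
        """

        def __init__(self, drop_path_rate, **kwargs):
            super().__init__(**kwargs)
            self.drop_path_rate = drop_path_rate

        def call(self, x, training=None):
            if training:
                keep_prob = 1 - self.drop_path_rate
                # One random draw per sample, broadcast over the remaining axes.
                shape = (tf.shape(x)[0],) + (1,) * (len(x.shape) - 1)
                random_tensor = keep_prob + tf.random.uniform(shape, 0, 1)
                # floor() yields 1 with probability keep_prob and 0 otherwise.
                random_tensor = tf.floor(random_tensor)
                # Rescale so that the expected output equals the input.
                return (x / keep_prob) * random_tensor
            return x

        def get_config(self):
            config = super().get_config()
            config.update({"drop_path_rate": self.drop_path_rate})
            return config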
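The masking logic of the stochastic depth layer can be checked without TensorFlow. The sketch below is a simplified pure-Python version under the same idea (one keep/drop decision per sample, kept samples rescaled by `1 / keep_prob`); the function name, the nested-list batch and the seeded `random.Random` are assumptions for this illustration.

```python
import random


def stochastic_depth(batch, drop_path_rate, training, rng=random):
    """Drop each sample's residual branch with probability `drop_path_rate`."""
    if not training or drop_path_rate == 0.0:
        return batch
    keep_prob = 1.0 - drop_path_rate
    output = []
    for sample in batch:
        # int(keep_prob + U[0, 1)) is 1 with probability keep_prob, else 0.
        keep = float(int(keep_prob + rng.random()))
        # Dividing by keep_prob keeps the expected value equal to the input.
        output.append([value / keep_prob * keep for value in sample])
    return output


rng = random.Random(0)
batch = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
dropped = stochastic_depth(batch, drop_path_rate=0.5, training=True, rng=rng)
print(dropped)
```

With a drop rate of 0.5, every sample in the output is either all zeros or exactly twice its input, and at inference time (`training=False`) the batch passes through unchanged.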

6.4 ConvNext Block

    def ConvNextBlock(inputs,
                      projection_dim,  # number of filters of the conv layers
                      drop_path_rate=0.0,  # probability of dropping the residual path
                      layer_scale_init_value=1e-6,
                      name=None):
        if name is None:
            # Fall back to a unique default so the name concatenations below do not fail.
            name = 'convnext_block_' + str(tf.keras.backend.get_uid('convnext_block'))
        x = inputs
        # Depthwise convolution is a special case of grouped convolution
        # in which the number of groups equals the number of channels.
        x = layers.Conv2D(filters=projection_dim,
                          kernel_size=(7, 7),
                          padding='same',
                          groups=projection_dim,
                          name=name + '_depthwise_conv')(x)
        x = layers.LayerNormalization(epsilon=1e-6, name=name + '_layernorm')(x)
        # Dense layers act on the channel axis, i.e. they are 1x1 (pointwise) convs.
        x = layers.Dense(4 * projection_dim, name=name + '_pointwise_conv_1')(x)
        x = layers.Activation('gelu', name=name + '_gelu')(x)
        x = layers.Dense(projection_dim, name=name + '_pointwise_conv_2')(x)
        if layer_scale_init_value is not None:
            # Layer scale module
            x = LayerScale(layer_scale_init_value, projection_dim, name=name + '_layer_scale')(x)
        if drop_path_rate:
            # Stochastic depth module
            layer = StochasticDepth(drop_path_rate, name=name + '_stochastic_depth')
        else:
            layer = layers.Activation('linear', name=name + '_identity')
        return layers.Add()([inputs, layer(x)])

6.5 ConvNeXt architecture

    def ConvNext(depths,  # tiny: [3, 3, 9, 3]
                 projection_dims,  # tiny: [96, 192, 384, 768]
                 drop_path_rate=0.0,  # stochastic depth rate; if 0.0, stochastic depth is not used
                 layer_scale_init_value=1e-6,  # initial value of the layer scale
                 default_size=224,  # default input image size
                 model_name='convnext',  # optional name of the model
                 include_preprocessing=True,  # whether to include preprocessing (disabled below)
                 include_top=True,  # whether to include the classification head
                 weights=None,  # unused: weight loading is commented out below
                 input_tensor=None,  # unused in this reproduction
                 input_shape=None,
                 pooling=None,
                 classes=1000,  # number of classes
                 classifier_activation='softmax'):  # activation of the classifier
        if input_shape is None:
            # Fall back to the default resolution with 3 color channels.
            input_shape = (default_size, default_size, 3)
        img_input = layers.Input(shape=input_shape)
        inputs = img_input
        x = inputs

        # if include_preprocessing:
        #     x = PreStem(x, name=model_name)

        # Stem block: 4x4 conv, 96 filters, stride 4
        stem = tf.keras.Sequential(
            [
                layers.Conv2D(projection_dims[0],
                              kernel_size=(4, 4),
                              strides=4,
                              name=model_name + '_stem_conv'),
                layers.LayerNormalization(epsilon=1e-6, name=model_name + '_stem_layernorm')
            ],
            name=model_name + '_stem'
        )

        # Downsampling blocks
        downsample_layers = []
        downsample_layers.append(stem)
        num_downsample_layers = 3
        for i in range(num_downsample_layers):
            downsample_layer = tf.keras.Sequential(
                [
                    layers.LayerNormalization(epsilon=1e-6, name=model_name + '_downsampling_layernorm_' + str(i)),
                    layers.Conv2D(projection_dims[i + 1],
                                  kernel_size=(2, 2),
                                  strides=2,
                                  name=model_name + '_downsampling_conv_' + str(i))
                ],
                name=model_name + '_downsampling_block_' + str(i)
            )
            downsample_layers.append(downsample_layer)

        # Stochastic depth schedule.
        # This is referred from the original ConvNeXt codebase:
        # https://github.com/facebookresearch/ConvNeXt/blob/main/models/convnext.py#L86
        depth_drop_rates = [
            float(rate) for rate in np.linspace(0.0, drop_path_rate, sum(depths))
        ]

        # First apply downsampling blocks and then apply ConvNeXt stages.
        cur = 0
        num_convnext_blocks = 4
        for i in range(num_convnext_blocks):
            x = downsample_layers[i](x)
            for j in range(depths[i]):  # depths: [3, 3, 9, 3]
                x = ConvNextBlock(x,
                                  projection_dim=projection_dims[i],
                                  drop_path_rate=depth_drop_rates[cur + j],
                                  layer_scale_init_value=layer_scale_init_value,
                                  name=model_name + f"_stage_{i}_block_{j}")
            cur += depths[i]

        if include_top:
            x = layers.GlobalAveragePooling2D(name=model_name + '_head_gap')(x)
            x = layers.LayerNormalization(epsilon=1e-6, name=model_name + '_head_layernorm')(x)
            # Pass the activation through; otherwise `classifier_activation` is silently ignored.
            x = layers.Dense(classes,
                             activation=classifier_activation,
                             name=model_name + '_head_dense')(x)
        else:
            if pooling == 'avg':
                x = layers.GlobalAveragePooling2D()(x)
            elif pooling == 'max':
                x = layers.GlobalMaxPooling2D()(x)
            x = layers.LayerNormalization(epsilon=1e-6)(x)

        model = Model(inputs=inputs, outputs=x, name=model_name)

        # Load weights.
        # if weights == "imagenet":
        #     if include_top:
        #         file_suffix = ".h5"
        #         file_hash = WEIGHTS_HASHES[model_name][0]
        #     else:
        #         file_suffix = "_notop.h5"
        #         file_hash = WEIGHTS_HASHES[model_name][1]
        #     file_name = model_name + file_suffix
        #     weights_path = utils.data_utils.get_file(
        #         file_name,
        #         BASE_WEIGHTS_PATH + file_name,
        #         cache_subdir="models",
        #         file_hash=file_hash,
        #     )
        #     model.load_weights(weights_path)
        # elif weights is not None:
        #     model.load_weights(weights)

        return model
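As a quick sanity check of the stem and downsampling blocks built above, their parameter counts can be computed by hand. The helpers `conv_params` and `layer_norm_params` are hypothetical names for this sketch; they assume a bias term in every convolution and a scale plus an offset per channel in every LayerNormalization, which are the Keras defaults.

```python
def conv_params(kernel, in_channels, out_channels):
    """Weights plus biases of an ordinary 2D convolution."""
    return kernel * kernel * in_channels * out_channels + out_channels


def layer_norm_params(channels):
    """LayerNormalization over the channel axis: one scale and one offset per channel."""
    return 2 * channels


dims = [96, 192, 384, 768]  # ConvNeXt-T projection_dims

# Stem: 4x4 conv on an RGB input followed by LayerNormalization.
stem = conv_params(4, 3, dims[0]) + layer_norm_params(dims[0])

# Downsampling blocks: LayerNormalization followed by a 2x2 conv, stride 2.
downsampling = [
    layer_norm_params(dims[i]) + conv_params(2, dims[i], dims[i + 1])
    for i in range(3)
]
print(stem, downsampling)
```

The stem comes out at 4896 parameters and the first two downsampling blocks at 74112 and 295680, the same values that appear for these layers in the model summary in section 6.7.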

6.6 ConvNextTiny model

    def ConvNextTiny(model_name='convnext-tiny',
                     include_top=True,
                     include_processing=True,
                     weights=None,  # pretrained weight loading is not implemented in ConvNext above
                     input_tensor=None,
                     input_shape=None,
                     pooling=None,
                     classes=1000,
                     classifier_activation='softmax'):
        return ConvNext(depths=MODEL_CONFIGS['tiny']['depths'],
                        projection_dims=MODEL_CONFIGS['tiny']['projection_dims'],
                        drop_path_rate=0.0,
                        layer_scale_init_value=1e-6,
                        default_size=MODEL_CONFIGS['tiny']['default_size'],
                        model_name=model_name,
                        include_top=include_top,
                        include_preprocessing=include_processing,
                        weights=weights,
                        input_tensor=input_tensor,
                        input_shape=input_shape,
                        pooling=pooling,
                        classes=classes,
                        classifier_activation=classifier_activation
                        )

6.7 ConvNextTiny model summary

    if __name__ == '__main__':
        model = ConvNextTiny(input_shape=(224, 224, 3))
        model.summary()

Model: "convnext-tiny"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 224, 224, 3) 0

__________________________________________________________________________________________________

convnext-tiny_stem (Sequential) (None, 56, 56, 96) 4896 input_1[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_0_d (None, 56, 56, 96) 4800 convnext-tiny_stem[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_0_l (None, 56, 56, 96) 192 convnext-tiny_stage_0_block_0_dep

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_0_p (None, 56, 56, 384) 37248 convnext-tiny_stage_0_block_0_lay

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_0_g (None, 56, 56, 384) 0 convnext-tiny_stage_0_block_0_poi

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_0_p (None, 56, 56, 96) 36960 convnext-tiny_stage_0_block_0_gel

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_0_l (None, 56, 56, 96) 96 convnext-tiny_stage_0_block_0_poi

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_0_i (None, 56, 56, 96) 0 convnext-tiny_stage_0_block_0_lay

__________________________________________________________________________________________________

add (Add) (None, 56, 56, 96) 0 convnext-tiny_stem[0][0]

convnext-tiny_stage_0_block_0_ide

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_1_d (None, 56, 56, 96) 4800 add[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_1_l (None, 56, 56, 96) 192 convnext-tiny_stage_0_block_1_dep

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_1_p (None, 56, 56, 384) 37248 convnext-tiny_stage_0_block_1_lay

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_1_g (None, 56, 56, 384) 0 convnext-tiny_stage_0_block_1_poi

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_1_p (None, 56, 56, 96) 36960 convnext-tiny_stage_0_block_1_gel

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_1_l (None, 56, 56, 96) 96 convnext-tiny_stage_0_block_1_poi

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_1_i (None, 56, 56, 96) 0 convnext-tiny_stage_0_block_1_lay

__________________________________________________________________________________________________

add_1 (Add) (None, 56, 56, 96) 0 add[0][0]

convnext-tiny_stage_0_block_1_ide

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_2_d (None, 56, 56, 96) 4800 add_1[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_2_l (None, 56, 56, 96) 192 convnext-tiny_stage_0_block_2_dep

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_2_p (None, 56, 56, 384) 37248 convnext-tiny_stage_0_block_2_lay

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_2_g (None, 56, 56, 384) 0 convnext-tiny_stage_0_block_2_poi

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_2_p (None, 56, 56, 96) 36960 convnext-tiny_stage_0_block_2_gel

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_2_l (None, 56, 56, 96) 96 convnext-tiny_stage_0_block_2_poi

__________________________________________________________________________________________________

convnext-tiny_stage_0_block_2_i (None, 56, 56, 96) 0 convnext-tiny_stage_0_block_2_lay

__________________________________________________________________________________________________

add_2 (Add) (None, 56, 56, 96) 0 add_1[0][0]

convnext-tiny_stage_0_block_2_ide

__________________________________________________________________________________________________

convnext-tiny_downsampling_bloc (None, 28, 28, 192) 74112 add_2[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_0_d (None, 28, 28, 192) 9600 convnext-tiny_downsampling_block_

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_0_l (None, 28, 28, 192) 384 convnext-tiny_stage_1_block_0_dep

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_0_p (None, 28, 28, 768) 148224 convnext-tiny_stage_1_block_0_lay

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_0_g (None, 28, 28, 768) 0 convnext-tiny_stage_1_block_0_poi

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_0_p (None, 28, 28, 192) 147648 convnext-tiny_stage_1_block_0_gel

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_0_l (None, 28, 28, 192) 192 convnext-tiny_stage_1_block_0_poi

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_0_i (None, 28, 28, 192) 0 convnext-tiny_stage_1_block_0_lay

__________________________________________________________________________________________________

add_3 (Add) (None, 28, 28, 192) 0 convnext-tiny_downsampling_block_

convnext-tiny_stage_1_block_0_ide

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_1_d (None, 28, 28, 192) 9600 add_3[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_1_l (None, 28, 28, 192) 384 convnext-tiny_stage_1_block_1_dep

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_1_p (None, 28, 28, 768) 148224 convnext-tiny_stage_1_block_1_lay

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_1_g (None, 28, 28, 768) 0 convnext-tiny_stage_1_block_1_poi

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_1_p (None, 28, 28, 192) 147648 convnext-tiny_stage_1_block_1_gel

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_1_l (None, 28, 28, 192) 192 convnext-tiny_stage_1_block_1_poi

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_1_i (None, 28, 28, 192) 0 convnext-tiny_stage_1_block_1_lay

__________________________________________________________________________________________________

add_4 (Add) (None, 28, 28, 192) 0 add_3[0][0]

convnext-tiny_stage_1_block_1_ide

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_2_d (None, 28, 28, 192) 9600 add_4[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_2_l (None, 28, 28, 192) 384 convnext-tiny_stage_1_block_2_dep

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_2_p (None, 28, 28, 768) 148224 convnext-tiny_stage_1_block_2_lay

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_2_g (None, 28, 28, 768) 0 convnext-tiny_stage_1_block_2_poi

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_2_p (None, 28, 28, 192) 147648 convnext-tiny_stage_1_block_2_gel

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_2_l (None, 28, 28, 192) 192 convnext-tiny_stage_1_block_2_poi

__________________________________________________________________________________________________

convnext-tiny_stage_1_block_2_i (None, 28, 28, 192) 0 convnext-tiny_stage_1_block_2_lay

__________________________________________________________________________________________________

add_5 (Add) (None, 28, 28, 192) 0 add_4[0][0]

convnext-tiny_stage_1_block_2_ide

__________________________________________________________________________________________________

convnext-tiny_downsampling_bloc (None, 14, 14, 384) 295680 add_5[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_0_d (None, 14, 14, 384) 19200 convnext-tiny_downsampling_block_

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_0_l (None, 14, 14, 384) 768 convnext-tiny_stage_2_block_0_dep

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_0_p (None, 14, 14, 1536) 591360 convnext-tiny_stage_2_block_0_lay

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_0_g (None, 14, 14, 1536) 0 convnext-tiny_stage_2_block_0_poi

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_0_p (None, 14, 14, 384) 590208 convnext-tiny_stage_2_block_0_gel

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_0_l (None, 14, 14, 384) 384 convnext-tiny_stage_2_block_0_poi

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_0_i (None, 14, 14, 384) 0 convnext-tiny_stage_2_block_0_lay

__________________________________________________________________________________________________

add_6 (Add) (None, 14, 14, 384) 0 convnext-tiny_downsampling_block_

convnext-tiny_stage_2_block_0_ide

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_1_d (None, 14, 14, 384) 19200 add_6[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_1_l (None, 14, 14, 384) 768 convnext-tiny_stage_2_block_1_dep

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_1_p (None, 14, 14, 1536) 591360 convnext-tiny_stage_2_block_1_lay

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_1_g (None, 14, 14, 1536) 0 convnext-tiny_stage_2_block_1_poi

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_1_p (None, 14, 14, 384) 590208 convnext-tiny_stage_2_block_1_gel

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_1_l (None, 14, 14, 384) 384 convnext-tiny_stage_2_block_1_poi

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_1_i (None, 14, 14, 384) 0 convnext-tiny_stage_2_block_1_lay

__________________________________________________________________________________________________

add_7 (Add) (None, 14, 14, 384) 0 add_6[0][0]

convnext-tiny_stage_2_block_1_ide

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_2_d (None, 14, 14, 384) 19200 add_7[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_2_l (None, 14, 14, 384) 768 convnext-tiny_stage_2_block_2_dep

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_2_p (None, 14, 14, 1536) 591360 convnext-tiny_stage_2_block_2_lay

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_2_g (None, 14, 14, 1536) 0 convnext-tiny_stage_2_block_2_poi

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_2_p (None, 14, 14, 384) 590208 convnext-tiny_stage_2_block_2_gel

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_2_l (None, 14, 14, 384) 384 convnext-tiny_stage_2_block_2_poi

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_2_i (None, 14, 14, 384) 0 convnext-tiny_stage_2_block_2_lay

__________________________________________________________________________________________________

add_8 (Add) (None, 14, 14, 384) 0 add_7[0][0]

convnext-tiny_stage_2_block_2_ide

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_3_d (None, 14, 14, 384) 19200 add_8[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_3_l (None, 14, 14, 384) 768 convnext-tiny_stage_2_block_3_dep

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_3_p (None, 14, 14, 1536) 591360 convnext-tiny_stage_2_block_3_lay

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_3_g (None, 14, 14, 1536) 0 convnext-tiny_stage_2_block_3_poi

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_3_p (None, 14, 14, 384) 590208 convnext-tiny_stage_2_block_3_gel

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_3_l (None, 14, 14, 384) 384 convnext-tiny_stage_2_block_3_poi

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_3_i (None, 14, 14, 384) 0 convnext-tiny_stage_2_block_3_lay

__________________________________________________________________________________________________

add_9 (Add) (None, 14, 14, 384) 0 add_8[0][0]

convnext-tiny_stage_2_block_3_ide

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_4_d (None, 14, 14, 384) 19200 add_9[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_4_l (None, 14, 14, 384) 768 convnext-tiny_stage_2_block_4_dep

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_4_p (None, 14, 14, 1536) 591360 convnext-tiny_stage_2_block_4_lay

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_4_g (None, 14, 14, 1536) 0 convnext-tiny_stage_2_block_4_poi

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_4_p (None, 14, 14, 384) 590208 convnext-tiny_stage_2_block_4_gel

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_4_l (None, 14, 14, 384) 384 convnext-tiny_stage_2_block_4_poi

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_4_i (None, 14, 14, 384) 0 convnext-tiny_stage_2_block_4_lay

__________________________________________________________________________________________________

add_10 (Add) (None, 14, 14, 384) 0 add_9[0][0]

convnext-tiny_stage_2_block_4_ide

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_5_d (None, 14, 14, 384) 19200 add_10[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_5_l (None, 14, 14, 384) 768 convnext-tiny_stage_2_block_5_dep

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_5_p (None, 14, 14, 1536) 591360 convnext-tiny_stage_2_block_5_lay

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_5_g (None, 14, 14, 1536) 0 convnext-tiny_stage_2_block_5_poi

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_5_p (None, 14, 14, 384) 590208 convnext-tiny_stage_2_block_5_gel

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_5_l (None, 14, 14, 384) 384 convnext-tiny_stage_2_block_5_poi

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_5_i (None, 14, 14, 384) 0 convnext-tiny_stage_2_block_5_lay

__________________________________________________________________________________________________

add_11 (Add) (None, 14, 14, 384) 0 add_10[0][0]

convnext-tiny_stage_2_block_5_ide

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_6_d (None, 14, 14, 384) 19200 add_11[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_6_l (None, 14, 14, 384) 768 convnext-tiny_stage_2_block_6_dep

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_6_p (None, 14, 14, 1536) 591360 convnext-tiny_stage_2_block_6_lay

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_6_g (None, 14, 14, 1536) 0 convnext-tiny_stage_2_block_6_poi

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_6_p (None, 14, 14, 384) 590208 convnext-tiny_stage_2_block_6_gel

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_6_l (None, 14, 14, 384) 384 convnext-tiny_stage_2_block_6_poi

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_6_i (None, 14, 14, 384) 0 convnext-tiny_stage_2_block_6_lay

__________________________________________________________________________________________________

add_12 (Add) (None, 14, 14, 384) 0 add_11[0][0]

convnext-tiny_stage_2_block_6_ide

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_7_d (None, 14, 14, 384) 19200 add_12[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_7_l (None, 14, 14, 384) 768 convnext-tiny_stage_2_block_7_dep

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_7_p (None, 14, 14, 1536) 591360 convnext-tiny_stage_2_block_7_lay

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_7_g (None, 14, 14, 1536) 0 convnext-tiny_stage_2_block_7_poi

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_7_p (None, 14, 14, 384) 590208 convnext-tiny_stage_2_block_7_gel

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_7_l (None, 14, 14, 384) 384 convnext-tiny_stage_2_block_7_poi

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_7_i (None, 14, 14, 384) 0 convnext-tiny_stage_2_block_7_lay

__________________________________________________________________________________________________

add_13 (Add) (None, 14, 14, 384) 0 add_12[0][0]

convnext-tiny_stage_2_block_7_ide

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_8_d (None, 14, 14, 384) 19200 add_13[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_8_l (None, 14, 14, 384) 768 convnext-tiny_stage_2_block_8_dep

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_8_p (None, 14, 14, 1536) 591360 convnext-tiny_stage_2_block_8_lay

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_8_g (None, 14, 14, 1536) 0 convnext-tiny_stage_2_block_8_poi

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_8_p (None, 14, 14, 384) 590208 convnext-tiny_stage_2_block_8_gel

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_8_l (None, 14, 14, 384) 384 convnext-tiny_stage_2_block_8_poi

__________________________________________________________________________________________________

convnext-tiny_stage_2_block_8_i (None, 14, 14, 384) 0 convnext-tiny_stage_2_block_8_lay

__________________________________________________________________________________________________

add_14 (Add) (None, 14, 14, 384) 0 add_13[0][0]

convnext-tiny_stage_2_block_8_ide

__________________________________________________________________________________________________

convnext-tiny_downsampling_bloc (None, 7, 7, 768) 1181184 add_14[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_0_d (None, 7, 7, 768) 38400 convnext-tiny_downsampling_block_

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_0_l (None, 7, 7, 768) 1536 convnext-tiny_stage_3_block_0_dep

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_0_p (None, 7, 7, 3072) 2362368 convnext-tiny_stage_3_block_0_lay

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_0_g (None, 7, 7, 3072) 0 convnext-tiny_stage_3_block_0_poi

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_0_p (None, 7, 7, 768) 2360064 convnext-tiny_stage_3_block_0_gel

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_0_l (None, 7, 7, 768) 768 convnext-tiny_stage_3_block_0_poi

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_0_i (None, 7, 7, 768) 0 convnext-tiny_stage_3_block_0_lay

__________________________________________________________________________________________________

add_15 (Add) (None, 7, 7, 768) 0 convnext-tiny_downsampling_block_

convnext-tiny_stage_3_block_0_ide

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_1_d (None, 7, 7, 768) 38400 add_15[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_1_l (None, 7, 7, 768) 1536 convnext-tiny_stage_3_block_1_dep

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_1_p (None, 7, 7, 3072) 2362368 convnext-tiny_stage_3_block_1_lay

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_1_g (None, 7, 7, 3072) 0 convnext-tiny_stage_3_block_1_poi

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_1_p (None, 7, 7, 768) 2360064 convnext-tiny_stage_3_block_1_gel

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_1_l (None, 7, 7, 768) 768 convnext-tiny_stage_3_block_1_poi

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_1_i (None, 7, 7, 768) 0 convnext-tiny_stage_3_block_1_lay

__________________________________________________________________________________________________

add_16 (Add) (None, 7, 7, 768) 0 add_15[0][0]

convnext-tiny_stage_3_block_1_ide

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_2_d (None, 7, 7, 768) 38400 add_16[0][0]

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_2_l (None, 7, 7, 768) 1536 convnext-tiny_stage_3_block_2_dep

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_2_p (None, 7, 7, 3072) 2362368 convnext-tiny_stage_3_block_2_lay

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_2_g (None, 7, 7, 3072) 0 convnext-tiny_stage_3_block_2_poi

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_2_p (None, 7, 7, 768) 2360064 convnext-tiny_stage_3_block_2_gel

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_2_l (None, 7, 7, 768) 768 convnext-tiny_stage_3_block_2_poi

__________________________________________________________________________________________________

convnext-tiny_stage_3_block_2_i (None, 7, 7, 768) 0 convnext-tiny_stage_3_block_2_lay

__________________________________________________________________________________________________

add_17 (Add) (None, 7, 7, 768) 0 add_16[0][0]

convnext-tiny_stage_3_block_2_ide

__________________________________________________________________________________________________

convnext-tiny_head_gap (GlobalA (None, 768) 0 add_17[0][0]

__________________________________________________________________________________________________

convnext-tiny_head_layernorm (L (None, 768) 1536 convnext-tiny_head_gap[0][0]

__________________________________________________________________________________________________

convnext-tiny_head_dense (Dense (None, 1000) 769000 convnext-tiny_head_layernorm[0][0

==================================================================================================

Total params: 28,589,128

Trainable params: 28,589,128

Non-trainable params: 0

__________________________________________________________________________________________________

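The per-layer parameter counts in the summary above can be reproduced by hand. The following is a minimal sketch in plain Python (no TensorFlow needed) that recomputes them from the layer shapes. It assumes details that this part of the summary does not show directly: channel widths of (96, 192, 384, 768), a stem made of a 4×4 stride-4 convolution followed by LayerNorm, and downsampling blocks made of LayerNorm followed by a 2×2 stride-2 convolution. All helper names (`layer_norm`, `conv2d`, `depthwise_conv`, `dense`, `block_params`, `convnext_params`) are hypothetical and exist only for this illustration.

```python
# Sanity check of the parameter counts printed by model.summary().
# Assumptions (labelled, not taken from this section): dims = (96, 192, 384, 768),
# stem = 4x4/stride-4 conv + LayerNorm, downsampling = LayerNorm + 2x2/stride-2 conv.


def layer_norm(c):
    """LayerNorm over c channels: one gamma and one beta per channel."""
    return 2 * c


def conv2d(k, c_in, c_out):
    """Standard k x k convolution with bias."""
    return k * k * c_in * c_out + c_out


def depthwise_conv(k, c):
    """Depthwise k x k convolution with bias: one k x k filter per channel."""
    return k * k * c + c


def dense(c_in, c_out):
    """Pointwise (1x1) convolution or Dense layer with bias."""
    return c_in * c_out + c_out


def block_params(c):
    """One ConvNeXt block: 7x7 depthwise conv, LayerNorm, 4x expansion,
    projection back to c channels, and a per-channel layer-scale vector."""
    return (
        depthwise_conv(7, c)
        + layer_norm(c)
        + dense(c, 4 * c)
        + dense(4 * c, c)
        + c  # layer scale (gamma)
    )


def convnext_params(depths=(3, 3, 9, 3), dims=(96, 192, 384, 768), num_classes=1000):
    """Total trainable parameters under the assumptions listed above."""
    total = conv2d(4, 3, dims[0]) + layer_norm(dims[0])  # patchify stem
    for i, (depth, dim) in enumerate(zip(depths, dims)):
        if i > 0:
            # Downsampling block between consecutive stages.
            total += layer_norm(dims[i - 1]) + conv2d(2, dims[i - 1], dim)
        total += depth * block_params(dim)
    total += layer_norm(dims[-1]) + dense(dims[-1], num_classes)  # head
    return total


if __name__ == "__main__":
    print(depthwise_conv(7, 384))                 # 19200
    print(dense(384, 1536))                       # 591360
    print(dense(1536, 384))                       # 590208
    print(layer_norm(384) + conv2d(2, 384, 768))  # 1181184
    print(convnext_params())                      # 28589128
```

Under these assumptions the values agree with the stage 2 rows (19200, 768, 591360, 590208, 384), the last downsampling block (1181184), and the reported total of 28,589,128 parameters.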

References

https://github.com/keras-team/keras/blob/a116637f53c8bf191f4f51853f3ee58d2ec858d9/keras/applications/convnext.py#L300

https://www.tensorflow.org/api_docs/python/tf/keras/applications/convnext/ConvNeXtTiny#returns

A ConvNet for the 2020s

ConvNeXt Network Explained in Detail (ConvNeXt网络详解)

13.1 ConvNeXt Network Walkthrough (13.1 ConvNeXt网络讲解)