【Cut, Paste and Learn】《Cut, Paste and Learn: Surprisingly Easy Synthesis for Instance Detection》

ICCV-2017

文章目录

- 1 Background and Motivation

- 2 Related Work

- 3 Advantages / Contributions

- 4 Method

-

- 4.1 Collecting images

- 4.2 Adding Objects to Images

-

- 4.2.1 Blending

- 4.2.2 Data Augmentation

- 5 Experiments

-

- 5.1 Datasets

- 5.2 Training and Evaluation on the GMU Dataset

- 5.3 Evaluation on the Active Vision Dataset

- 6 Conclusion(own) / Future work

1 Background and Motivation

实例检测(instance detection)之于目标检测,等价于实例分割之于语义分割

不仅要检测出不同类别的目标,还要区分同类别目标的不同个体

Instance detection occurs commonly in robotics, AR/VR etc., and can also be viewed as fine-grained recognition.

显然,这种任务对标签的要求更高

collecting such annotations is a major impediment for rapid deployment of detection systems in robotics or other personalized applications.

针对得到大规模有标签数据比较耗时耗力的问题,本文作者提出 Cut, Paste and Learn 数据生成方法(Sythesizing data),确保生成数据的 only patch-level realism(不 care global consistency,比如杯子一定要在桌子上面等等),即使视觉上看上去仍有瑕疵,但模型跑出来效果不错

The underlying theme is to ‘paste’ real object masks in real images, thus reducing the dependence on graphics renderings.

2 Related Work

instance detection

- local features(SIFT, SURF, MSER)

- shape-based methods

- Modern detection methods(one stage, two stage)

Sythesizing data

- There is a wide spectrum of work where rendered datasets are used for computer vision tasks.(真单目标+真随机背景->全部 render)

3 Advantages / Contributions

提出 Cut, Paste and Learn 数据生成方法,在 instance detection 数据集上提升明显,跨数据集的泛化性能也不错

4 Method

Traditional Dataset Collection:an data curation step + an annotation step

好的 instance detection 模型 have good coverage of viewpoints and scales of the object

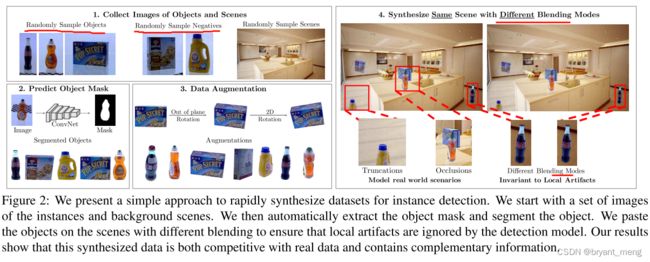

生成数据的大体流程如下图所示

- Collect object instance images

- Collect scene images

- Predict foreground mask for the object

- Paste object instances in scenes

invariance to local artifacts,training algorithm does not focus on subpixel discrepancies at the boundaries.

注意这个 negatives,不仅仅只生成 objects 还引入了负样本的干扰

4.1 Collecting images

(1)Images of objects from different viewpoints

从 BigBIRD Dataset sample,具体介绍见本博客 5.1 小节

(2)Background images of indoor scenes

从 UW Scenes dataset 中 sample

There are 1548 images in the backgrounds dataset.

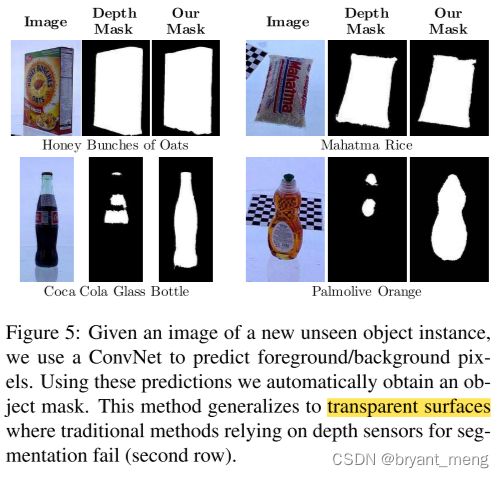

(3)Foreground/Background segmentation

用的 FCN 分割网络,PASCAL VOC 预训练,主干 VGG

The object masks from the depth sensor are used as ground truth for training this model.(BigBIRD Dataset)

还有个后处理操作,用的是 《The Fast Bilateral Solver》(ECCV-2016)方法使分割边缘更加平滑

上图可以看出作者的方法对 transparent 物体也能有很好的分割结果

4.2 Adding Objects to Images

we present steps to generate data thatforces the training algorithm to ignore these artifacts and focus only on the object appearance

(1)Detection Model

Faster RCNN 网络,COCO 预训练,VGG主干

(2)Benchmarking Dataset

use the GMU Kitchen dataset for evaluation

4.2.1 Blending

Poisson blending smooths edges and adds lighting variations

Although these blending methods do not yield visually ‘perfect’ results, they improve performance of the trained detectors.

4.2.2 Data Augmentation

(2)3D Rotation

不引入生成的数据,一些漏检的例子,

(3)Occlusion and Truncation

Truncation,ensuring at least 0.25 of the object box is in the image.

Occlusion,paste the objects with partial overlap with each other (max IOU of 0.75).

(4)Distractor Objects

additional objects from the BigBIRD dataset as distractors.

5 Experiments

We generate a synthetic dataset with approximately 6000 images using all modes of data augmentation.

5.1 Datasets

1)UW Scenes dataset

2)BigBIRD Dataset

each object has 600 images, captured by five cameras with different viewpoints

作者选用了其中的 33 object instances,取目标

3)GMU Kitchen Dataset

9 kitchen scenes with 6, 728 images,训练测试

与作者从 BigBIRD 抽出来的 33 个 instances 有 11 个是重复的

4)Active Vision Dataset

9 scenes and 17,556 images

33 objects in total and 6 objects in overlap with the GMU Kitchen Scenes.

与作者从 BigBIRD 抽出来的 33 个 instances 有 6 个是重复的(11中的6)

5.2 Training and Evaluation on the GMU Dataset

5.3 Evaluation on the Active Vision Dataset

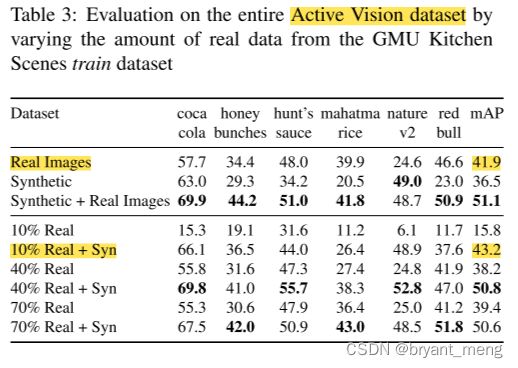

To test generalization across datasets, train on GMU Kitchen,test on Active Vision Dataset

10% 的 Real + Syn 就能匹敌 100% Real,还是很猛哒

6 Conclusion(own) / Future work

-

code:https://github.com/debidatta/syndata-generation

-

《The Fast Bilateral Solver》(ECCV-2016)

a novel algorithm for edge-aware smoothing

-

图像融合(一)Poisson Blending,code:https://github.com/yskmt/pb

-

patch-level realism,not respect global consistency or even obey scene factors such as lighting

-

We showed that patch-based realism is sufficient for training region-proposal based object detectors. 后续要兼顾下 global consistency for placing objects——几何布局真实和全局一致性(比如杯子在桌子上)

-

Cut, Paste and Learn论文阅读

-

Cut Paste and Learn Surprisingly Easy Synthesis for Instance Detection - QuickPeek