Paper reading: AutoAugment: Learning Augmentation Strategies from Data

Table of contents

- 1、Paper overview

- 2、Differences in effective data augmentation between MNIST and ImageNet

- 3、The key difference between our method and GAN

- 4、A search algorithm and a search space.

- 5、 One of the policies found on SVHN

- 6、Search algorithm details: PPO

- 7、 One of the successful policies on ImageNet

- 8、Performance of random policies

- 9、The 16 data augmentation operations

1、Paper overview

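This paper is probably the pioneering work on automatically learning data augmentation, and the authors say they were inspired by work on neural architecture search. Its main idea is to use reinforcement learning to learn the augmentation operations for a given dataset automatically. Because datasets differ in their characteristics and distributions, different datasets need different augmentations. That is also why so much of my day-to-day work goes into this: I have to try augmentations one by one to see which gives a clear improvement on each project's dataset. After all, once the dataset is in good shape, choosing the network model afterwards becomes much easier.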

In this paper, we aim to automate the process of finding an effective data augmentation policy for a target dataset. In our implementation (Section 3), each policy expresses several choices and orders of possible augmentation operations, where each operation is an image processing function (e.g., translation, rotation, or color normalization), the probabilities of applying the function, and the magnitudes with which they are applied. We use a search algorithm to find the best choices and orders of these operations such that training a neural network yields the best validation accuracy. In our experiments, we use Reinforcement Learning [71] as the search algorithm, but we believe the results can be further improved if better algorithms are used [48, 39].

Note: the order of the operations is also a variable to be learned.
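To make the quoted description concrete, here is a minimal sketch of how such a policy could be represented and applied to one image. It assumes, following the paper's description, that a policy is a list of sub-policies and that one sub-policy is picked uniformly at random per image. The names `Operation`, `apply_sub_policy` and `augment`, the toy list-of-lists image type, and the two toy operations are all hypothetical and are not the authors' code.

```python
import random
from dataclasses import dataclass
from typing import Callable, List

# Toy grayscale image: a list of rows, each a list of pixel values in 0..255.
Image = List[List[int]]


@dataclass
class Operation:
    name: str
    fn: Callable[[Image, float], Image]  # the image processing function
    probability: float                   # chance of applying fn
    magnitude: float                     # strength passed to fn


def invert(img: Image, _magnitude: float) -> Image:
    """Invert pixel values; this toy operation ignores the magnitude."""
    return [[255 - p for p in row] for row in img]


def translate_x(img: Image, magnitude: float) -> Image:
    """Shift each row right by int(magnitude) pixels, padding with zeros."""
    out = []
    for row in img:
        shift = max(0, min(int(magnitude), len(row)))
        out.append([0] * shift + row[: len(row) - shift])
    return out


def apply_sub_policy(img: Image, sub_policy: List[Operation],
                     rng: random.Random) -> Image:
    """Apply the operations in order, each with its own probability."""
    for op in sub_policy:
        if rng.random() < op.probability:
            img = op.fn(img, op.magnitude)
    return img


def augment(img: Image, policy: List[List[Operation]],
            rng: random.Random) -> Image:
    """Pick one sub-policy uniformly at random and apply it to the image."""
    return apply_sub_policy(img, rng.choice(policy), rng)
```

Swapping the two operations inside a sub-policy generally changes the output image, which is why the order is part of what gets searched.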

Our extensive experiments show that AutoAugment achieves excellent improvements in two use cases: 1) AutoAugment can be applied directly on the dataset of interest to find the best augmentation policy (AutoAugment-direct) and 2) learned policies can be transferred to new datasets (AutoAugment-transfer).

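The authors say AutoAugment can be used in two ways: it can learn an augmentation policy directly on a given dataset, or a policy learned on one dataset can be transferred to other datasets with a similar data distribution.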

This result suggests that transferring data augmentation policies offers an alternative method for standard weight transfer learning. A summary of our results is shown in Table 1.

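When pretrained weights do not work well, try a pretrained data augmentation policy instead!

This post is worth reading together with this blog post.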

2、Differences in effective data augmentation between MNIST and ImageNet

For example, on MNIST, most top-ranked models use elastic distortions, scale, translation, and rotation [54, 8, 62, 52]. On natural image datasets, such as CIFAR-10 and ImageNet, random cropping, image mirroring and color shifting / whitening are more common [29]. As these methods are designed manually, they require expert knowledge and time. Our approach of learning data augmentation policies from data in principle can be used for any dataset, not just one.

3、The key difference between our method and GAN

Generative adversarial networks have also been used for the purpose of generating additional data (e.g., [45, 41, 70, 2, 56]). The key difference between our method and generative models is that our method generates symbolic transformation operations, whereas generative models, such as GANs, generate the augmented data directly. An exception is work by Ratner et al., who used GANs to generate sequences that describe data augmentation strategies [47]. The difference of our method to theirs is that our method tries to optimize classification accuracy directly whereas their method just tries to make sure the augmented images are similar to the current training images.

In short, the optimization objectives are different.

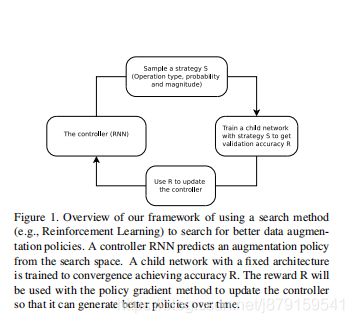

4、A search algorithm and a search space.

We formulate the problem of finding the best augmentation policy as a discrete search problem (see Figure 1). Our method consists of two components: A search algorithm and a search space. At a high level, the search algorithm (implemented as a controller RNN) samples a data augmentation policy S, which has information about what image processing operation to use, the probability of using the operation in each batch, and the magnitude of the operation. Key to our method is the fact that the policy S will be used to train a neural network with a fixed architecture, whose validation accuracy R will be sent back to update the controller. Since R is not differentiable, the controller will be updated by policy gradient methods.
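As a reminder of why a non-differentiable reward is not a problem here, the generic score-function (REINFORCE) form of the policy gradient is written out below. This is the textbook estimator and not a formula taken from the paper, whose experiments use PPO, a method built on the same idea. The symbols $\theta$ (controller parameters), $\pi_\theta$ (the controller's distribution over policies), $a_t$ (the $t$-th of $T$ controller decisions) and $b$ (a baseline) are assumptions introduced for illustration; $S$ and $R$ follow the quoted text.

$$
\nabla_\theta J(\theta) = \mathbb{E}_{S \sim \pi_\theta}\left[\big(R(S) - b\big)\,\nabla_\theta \log \pi_\theta(S)\right],
\qquad
\log \pi_\theta(S) = \sum_{t=1}^{T} \log \pi_\theta\left(a_t \mid a_{1:t-1}\right)
$$

Only $\log \pi_\theta(S)$ is differentiated, so $R(S)$ can be any number obtained by training and validating the child network.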

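The discussion later in the paper also says that the authors' main contribution is the novel approach to data augmentation and the construction of the augmentation search space. The particular search algorithm is not the point, and other algorithms, such as evolutionary algorithms, could be used instead.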

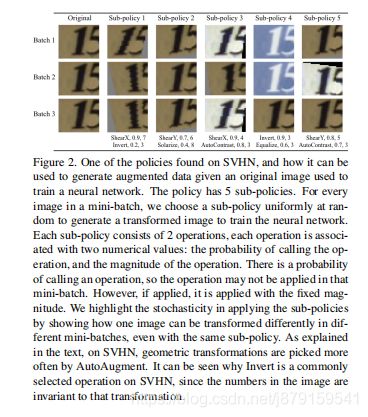

5、 One of the policies found on SVHN

6、Search algorithm details: PPO

The search algorithm that we used in our experiment uses Reinforcement Learning, inspired by [71, 4, 72, 5]. The search algorithm has two components: a controller, which is a recurrent neural network, and the training algorithm, which is the Proximal Policy Optimization algorithm [53]. At each step, the controller predicts a decision produced by a softmax; the prediction is then fed into the next step as an embedding. In total the controller has 30 softmax predictions in order to predict 5 sub-policies, each with 2 operations, and each operation requiring an operation type, magnitude and probability.
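The count of 30 softmax predictions follows directly from 5 × 2 × 3, and the same bookkeeping gives the size of the search space. The short calculation below is only a sketch: the values of 16 operation types, 10 magnitude levels and 11 probability levels come from the paper's description of the search space and are not stated in these notes, and the constant names are made up for illustration.

```python
# Structure of one policy, as described in the quoted passage.
NUM_SUB_POLICIES = 5
OPS_PER_SUB_POLICY = 2
DECISIONS_PER_OP = 3  # operation type, magnitude, probability

# Discretization of each decision (taken from the paper, not from these notes).
NUM_OP_TYPES = 16
NUM_MAGNITUDE_LEVELS = 10
NUM_PROBABILITY_LEVELS = 11

# One softmax per decision.
num_softmaxes = NUM_SUB_POLICIES * OPS_PER_SUB_POLICY * DECISIONS_PER_OP

# Number of distinct (type, magnitude, probability) triples for one operation.
choices_per_op = NUM_OP_TYPES * NUM_MAGNITUDE_LEVELS * NUM_PROBABILITY_LEVELS

# Ordered pairs of operations form a sub-policy; five sub-policies form a policy.
sub_policy_space = choices_per_op ** OPS_PER_SUB_POLICY
policy_space = sub_policy_space ** NUM_SUB_POLICIES

print(num_softmaxes)          # 30
print(sub_policy_space)       # 3097600
print(f"{policy_space:.1e}")  # 2.9e+32
```

With about 2.9 × 10^32 candidate policies, exhaustive evaluation is out of the question, which is why a search algorithm is needed at all.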

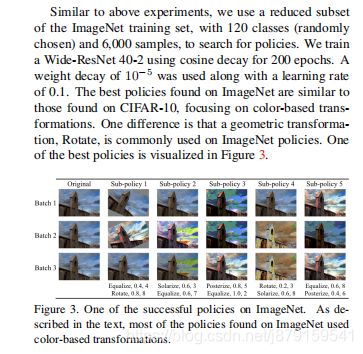

7、 One of the successful policies on ImageNet

As can be seen, a natural-image dataset such as ImageNet needs different data augmentation operations than MNIST does.

8、Performance of random policies

Next, we randomize the whole policy, the operations as well as the probabilities and magnitudes. Averaged over 20 runs, this experiment yields an average error of 3.1% (with a standard deviation of 0.1%), which is slightly worse than randomizing only the probabilities and magnitudes. The best random policy achieves an error of 3.0% (when averaged over 5 independent runs). This shows that even AutoAugment with randomly sampled policy leads to appreciable improvements.

The ablation experiments indicate that even data augmentation policies that are randomly sampled from our search space can lead to improvements on CIFAR-10 over the baseline augmentation policy. However, the improvements exhibited by random policies are less than those shown by the AutoAugment policy (2.6% ± 0.1% vs. 3.0% ± 0.1% error rate). Furthermore, the probability and magnitude information learned within the AutoAugment policy seem to be important, as its effectiveness is reduced significantly when those parameters are randomized. We emphasize again that we trained our controller using RL out of convenience, augmented random search and evolutionary strategies can be used just as well. The main contribution of this paper is in our approach to data augmentation and in the construction of the search space; not in discrete optimization methodology.

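In other words, randomly sampled policies also help, but they are not as good as the parameters found by a learned search strategy.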
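For reference, drawing a fully random policy from the search space might look like the sketch below. It is an illustration under assumptions and not the authors' ablation code: the 16 operation names are the ones listed in the paper, the discretization into 10 magnitude levels and 11 probability levels follows the paper's search space, and `sample_random_policy` and the dictionary layout are hypothetical.

```python
import random

# The 16 operations in the AutoAugment search space, as named in the paper.
OPERATIONS = [
    "ShearX", "ShearY", "TranslateX", "TranslateY", "Rotate",
    "AutoContrast", "Invert", "Equalize", "Solarize", "Posterize",
    "Contrast", "Color", "Brightness", "Sharpness", "Cutout",
    "SamplePairing",
]
NUM_MAGNITUDE_LEVELS = 10    # magnitude index in 0..9
NUM_PROBABILITY_LEVELS = 11  # probability in {0.0, 0.1, ..., 1.0}


def sample_random_policy(rng, num_sub_policies=5, ops_per_sub_policy=2):
    """Sample every decision uniformly, with no controller involved."""
    policy = []
    for _ in range(num_sub_policies):
        sub_policy = []
        for _ in range(ops_per_sub_policy):
            level = rng.randrange(NUM_PROBABILITY_LEVELS)
            sub_policy.append({
                "op": rng.choice(OPERATIONS),
                "probability": level / (NUM_PROBABILITY_LEVELS - 1),
                "magnitude": rng.randrange(NUM_MAGNITUDE_LEVELS),
            })
        policy.append(sub_policy)
    return policy


if __name__ == "__main__":
    # Print one randomly sampled policy, one sub-policy per line.
    for sub_policy in sample_random_policy(random.Random(0)):
        print(sub_policy)
```

Each sampled policy would still have to be evaluated by training the child network with it, exactly as for a policy proposed by the controller.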

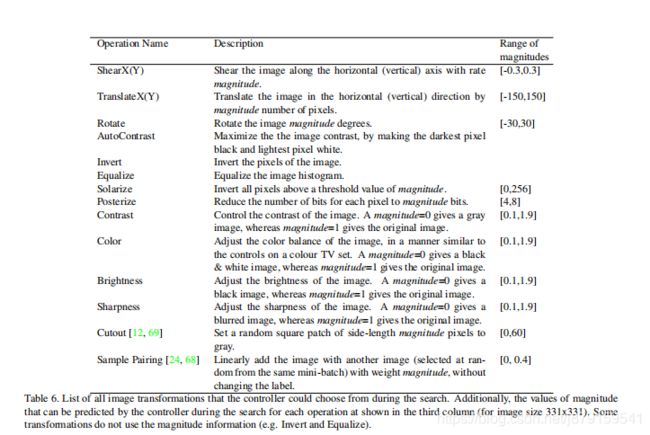

9、The 16 data augmentation operations

Reference blog:

Paper notes: AutoAugment