VGG Explained, with a Hands-On Implementation

Preface

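VGG was proposed in 2014 by the Visual Geometry Group at the University of Oxford. Its main contribution was to show that increasing the depth of a network can, to a certain extent, improve its final performance.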

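Compared with AlexNet, one innovation in VGG16 is that it replaces AlexNet's larger convolution kernels with several consecutive 3x3 kernels. This reduces the number of parameters to train while keeping the same receptive field.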

VGG network structure

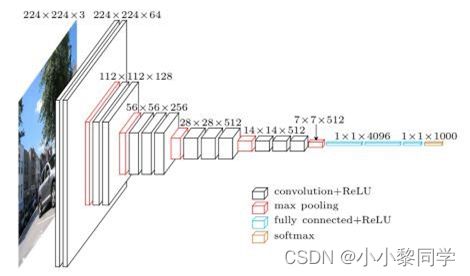

The figure shows the VGG network structure; we take VGG16 as the example.

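VGG16 has 13 convolutional layers and 3 fully connected layers in total. The 13 convolutional layers are usually grouped into 5 convolutional blocks (VGG blocks). The following is a 3D diagram of the VGG16 structure.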

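Note that the last fully connected layer has no activation function; instead, its output goes through a softmax operation to produce a probability distribution. Before going further, let's look at feature maps and receptive fields.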

Feature maps

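A feature map is the result of applying one operation (such as a convolution or a pooling step) to an input. Its output shape is computed as follows: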

$$\lfloor (n_{h} - k_{h} + p_{h} + s_{h}) / s_{h} \rfloor \times \lfloor (n_{w} - k_{w} + p_{w} + s_{w}) / s_{w} \rfloor$$

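$n_{h}$: height of the input

$k_{h}$: height of the convolution kernel

$p_{h}$: total padding along the height

$s_{h}$: vertical stride

The symbols $n_{w}$, $k_{w}$, $p_{w}$, and $s_{w}$ are the corresponding quantities along the width.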

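Note: a padding of 1 usually means that one row is added at both the top and the bottom and one column at both the left and the right, so $p_{h}$ is 2 when plugged into the formula. The result is rounded down. Below we use VGG16 as an example and compute the output shape of each layer.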

First convolutional block: the input is 224×224×3. After 64 kernels of size 3×3×3 with padding=1 and stride=1, the output has shape 224×224×64. To see this, compute the height and width produced by the cross-correlation between conv3-64 and the input: $\lfloor (224-3+2+1)/1 \rfloor \times \lfloor (224-3+2+1)/1 \rfloor = 224 \times 224$. This convolution leaves the height and width unchanged, so the output is 224×224×64 (treating the batch size as 1 for now).

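Max-pooling layer: the input is 224×224×64. Pooling with pool size=2 and stride=2 halves the height and width, giving an output of 112×112×64. The remaining layers follow from the same formula.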
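The shape arithmetic above can be checked with a few lines of plain Python. This is only an illustrative sketch: the helper name `out_size` is made up for this example, and the layer list assumes the standard VGG16 layout (3×3 convolutions with padding 1 and stride 1, followed by 2×2 max pooling with stride 2 at the end of each block).

```python
def out_size(n, k, p_total, s):
    """Output height (or width): floor((n - k + p_total + s) / s).

    p_total is the total padding along the dimension, so padding=1 on
    both sides means p_total=2, as in the note above.
    """
    return (n - k + p_total + s) // s


# Number of 3x3 convolutions in each of the five VGG16 blocks (assumed layout).
convs_per_block = [2, 2, 3, 3, 3]

size = 224
sizes_after_pool = []
for n_convs in convs_per_block:
    for _ in range(n_convs):
        size = out_size(size, k=3, p_total=2, s=1)  # 3x3 conv keeps the size
    size = out_size(size, k=2, p_total=0, s=2)      # 2x2 max pooling halves it
    sizes_after_pool.append(size)

print(sizes_after_pool)  # [112, 56, 28, 14, 7]
```

The final 7×7 spatial size, together with 512 channels, is where the 512*7*7 input size of the first fully connected layer in model.py comes from.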

Receptive field

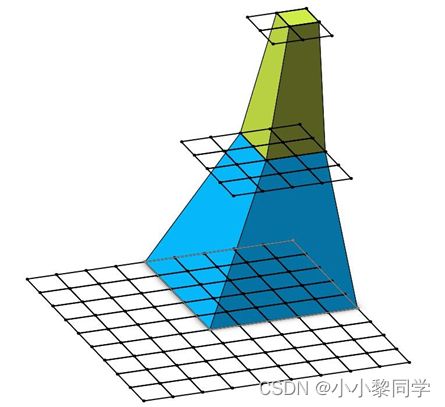

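In a CNN, the receptive field of any element x in a given layer is the set of all elements that can affect the computation of x during forward propagation. Informally, it is the size of the input region that one unit of an output feature map corresponds to. In the figure, for example, one unit in the output layer (the top layer) corresponds to a 2×2 region in the middle layer and to a 5×5 region in the input layer (the bottom layer).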

The receptive field is computed as follows:

$$F(i) = (F(i+1) - 1) \times Stride + Ksize$$

· $F(i)$: the receptive field of layer $i$

· $Stride$: the stride of layer $i$

· $Ksize$: the kernel size of the convolution or pooling layer

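Suppose three stacked 3×3 convolution kernels replace one 7×7 kernel. Is the receptive field the same before and after the replacement?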

· Feature map: $F = 1$

· Conv3x3(3): $F = (1 - 1) \times 1 + 3 = 3$

· Conv3x3(2): $F = (3 - 1) \times 1 + 3 = 5$

· Conv3x3(1): $F = (5 - 1) \times 1 + 3 = 7$

With a stride of 1, three stacked 3×3 kernels have the same receptive field on the original image as a single 7×7 kernel.
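The recurrence can also be run mechanically. The following sketch applies it from the last layer back to the first; the function name `receptive_field` and the `(ksize, stride)` tuple layout are assumptions made for this illustration.

```python
def receptive_field(layers):
    """Receptive field on the input of one unit of the final feature map.

    layers is a list of (ksize, stride) pairs ordered from input to output.
    The recurrence F(i) = (F(i+1) - 1) * stride + ksize is applied from the
    last layer back to the first, starting from F = 1.
    """
    f = 1
    for ksize, stride in reversed(layers):
        f = (f - 1) * stride + ksize
    return f


three_3x3 = receptive_field([(3, 1), (3, 1), (3, 1)])
one_7x7 = receptive_field([(7, 1)])
print(three_3x3, one_7x7)  # 7 7
```

The same function shows that two stacked 3×3 kernels with stride 1 cover a 5×5 region, matching the intermediate step in the list above.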

Does stacking 3×3 kernels reduce the number of learnable parameters?

We omit the bias term here, so the only learnable parameters are the weights. The stride is 1, and both the input and the output have $C$ channels.

· Parameters needed by one 7×7 convolution layer: $7 \times 7 \times C \times C = 49C^{2}$

· Parameters needed by three stacked 3×3 convolution layers: $3 \times 3 \times C \times C + 3 \times 3 \times C \times C + 3 \times 3 \times C \times C = 27C^{2}$
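A quick numeric check of the two counts, as a sketch under the same assumptions (no bias, $C$ input and output channels). The helper `conv_weights` and the choice C = 512 are made up for illustration; any positive C gives the same ratio.

```python
def conv_weights(ksize, in_channels, out_channels):
    """Number of weights in one convolution layer, ignoring the bias."""
    return ksize * ksize * in_channels * out_channels


C = 512  # example channel count, chosen only for illustration

single_7x7 = conv_weights(7, C, C)       # 49 * C^2
stacked_3x3 = 3 * conv_weights(3, C, C)  # 27 * C^2

ratio = stacked_3x3 / single_7x7
print(single_7x7, stacked_3x3, ratio)    # ratio is 27/49, about 0.55
```

So the stacked 3×3 layers need roughly 55% of the weights of a single 7×7 layer while covering the same receptive field.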

Building VGG with PyTorch

1. model.py

# Import the required packages
import torch.nn as nn
import torch


class VGG(nn.Module):
    def __init__(self, features, num_classes=1000, init_weights=False):
        super(VGG, self).__init__()  # call the initializer of the parent class
        self.features = features
        self.classifier = nn.Sequential(
            nn.Linear(512*7*7, 4096),
            nn.ReLU(True),
            nn.Dropout(p=0.5),
            nn.Linear(4096, 4096),
            nn.ReLU(True),
            nn.Dropout(p=0.5),
            nn.Linear(4096, num_classes)
        )
        if init_weights:  # when init_weights=True, run the weight initialization below
            self._initialize_weights()

    def forward(self, x):  # forward pass
        # N x 3 x 224 x 224
        x = self.features(x)
        # N x 512 x 7 x 7
        x = torch.flatten(x, start_dim=1)
        # N x 512*7*7
        x = self.classifier(x)
        return x

    def _initialize_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):  # convolutional layer
                # nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                nn.init.xavier_uniform_(m.weight)
                if m.bias is not None:
                    nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):  # fully connected layer
                nn.init.xavier_uniform_(m.weight)
                # nn.init.normal_(m.weight, 0, 0.01)
                nn.init.constant_(m.bias, 0)


# Numbers are output channels of a 3x3 convolution; 'M' is a max-pooling layer
cfgs = {
    'vgg11': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'vgg13': [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'vgg16': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],
    'vgg19': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],
}


def make_features(cfg: list):
    layers = []
    in_channels = 3
    for v in cfg:
        if v == "M":
            layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
        else:
            conv2d = nn.Conv2d(in_channels, v, kernel_size=3, padding=1)
            layers += [conv2d, nn.ReLU(True)]
            in_channels = v
    return nn.Sequential(*layers)


def vgg(model_name="vgg16", **kwargs):
    assert model_name in cfgs, "Warning: model number {} not in cfgs dict!".format(model_name)
    cfg = cfgs[model_name]
    model = VGG(make_features(cfg), **kwargs)
    return model
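As a sanity check on the configuration table, the numbers in the names vgg11 to vgg19 count the layers that have weights: the convolutions listed in cfgs plus the three fully connected layers. The pure-Python sketch below repeats the cfgs dictionary so that it runs without PyTorch. The helpers `count_weight_layers` and `count_parameters` are hypothetical names introduced for this illustration, and the parameter count assumes 3×3 convolutions with bias and the 512*7*7 → 4096 → 4096 → num_classes classifier defined in model.py.

```python
cfgs = {
    'vgg11': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'vgg13': [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'vgg16': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],
    'vgg19': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],
}


def count_weight_layers(cfg, num_fc=3):
    """Convolution layers in cfg plus the fully connected layers."""
    return sum(1 for v in cfg if v != 'M') + num_fc


def count_parameters(cfg, num_classes=1000):
    """Weights and biases of all conv and fully connected layers."""
    total = 0
    in_channels = 3
    for v in cfg:
        if v == 'M':
            continue  # max pooling has no parameters
        total += 3 * 3 * in_channels * v + v  # 3x3 kernel weights + bias
        in_channels = v
    fc_in = in_channels * 7 * 7  # flattened 512 x 7 x 7 feature map
    for fc_out in (4096, 4096, num_classes):
        total += fc_in * fc_out + fc_out
        fc_in = fc_out
    return total


for name, cfg in cfgs.items():
    print(name, count_weight_layers(cfg), count_parameters(cfg))
```

For vgg16 with the default 1000 classes this gives 138,357,544 parameters, most of them in the first fully connected layer.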

2. train.py

import os
import sys
import json

import torch
import torch.nn as nn
from torchvision import transforms, datasets
import torch.optim as optim
from tqdm import tqdm

from model import vgg


def main():
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    print("using {} device.".format(device))

    data_transform = {
        "train": transforms.Compose([transforms.RandomResizedCrop(224),
                                     transforms.RandomHorizontalFlip(),
                                     transforms.ToTensor(),
                                     transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]),
        "val": transforms.Compose([transforms.Resize((224, 224)),
                                   transforms.ToTensor(),
                                   transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])}

    data_root = os.path.abspath(os.path.join(os.getcwd(), "../.."))  # get data root path
    image_path = os.path.join(data_root, "data_set", "flower_data")  # flower data set path
    assert os.path.exists(image_path), "{} path does not exist.".format(image_path)
    train_dataset = datasets.ImageFolder(root=os.path.join(image_path, "train"),
                                         transform=data_transform["train"])
    train_num = len(train_dataset)

    # {'daisy':0, 'dandelion':1, 'roses':2, 'sunflower':3, 'tulips':4}
    flower_list = train_dataset.class_to_idx
    cla_dict = dict((val, key) for key, val in flower_list.items())
    # write dict into json file
    json_str = json.dumps(cla_dict, indent=4)
    with open('class_indices.json', 'w') as json_file:
        json_file.write(json_str)

    batch_size = 32
    nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8])  # number of workers
    print('Using {} dataloader workers every process'.format(nw))

    train_loader = torch.utils.data.DataLoader(train_dataset,
                                               batch_size=batch_size, shuffle=True,
                                               num_workers=nw)

    validate_dataset = datasets.ImageFolder(root=os.path.join(image_path, "val"),
                                            transform=data_transform["val"])
    val_num = len(validate_dataset)
    validate_loader = torch.utils.data.DataLoader(validate_dataset,
                                                  batch_size=batch_size, shuffle=False,
                                                  num_workers=nw)
    print("using {} images for training, {} images for validation.".format(train_num,
                                                                           val_num))

    # test_data_iter = iter(validate_loader)
    # test_image, test_label = next(test_data_iter)

    model_name = "vgg16"
    net = vgg(model_name=model_name, num_classes=5, init_weights=True)
    net.to(device)
    loss_function = nn.CrossEntropyLoss()
    optimizer = optim.Adam(net.parameters(), lr=0.0001)

    epochs = 30
    best_acc = 0.0
    save_path = './{}Net.pth'.format(model_name)
    train_steps = len(train_loader)
    for epoch in range(epochs):
        # train
        net.train()
        running_loss = 0.0
        train_bar = tqdm(train_loader, file=sys.stdout)
        for step, data in enumerate(train_bar):
            images, labels = data
            optimizer.zero_grad()
            outputs = net(images.to(device))
            loss = loss_function(outputs, labels.to(device))
            loss.backward()
            optimizer.step()

            # print statistics
            running_loss += loss.item()

            train_bar.desc = "train epoch[{}/{}] loss:{:.3f}".format(epoch + 1,
                                                                     epochs,
                                                                     loss)

        # validate
        net.eval()
        acc = 0.0  # accumulate accurate number / epoch
        with torch.no_grad():
            val_bar = tqdm(validate_loader, file=sys.stdout)
            for val_data in val_bar:
                val_images, val_labels = val_data
                outputs = net(val_images.to(device))
                predict_y = torch.max(outputs, dim=1)[1]
                acc += torch.eq(predict_y, val_labels.to(device)).sum().item()

        val_accurate = acc / val_num
        print('[epoch %d] train_loss: %.3f val_accuracy: %.3f' %
              (epoch + 1, running_loss / train_steps, val_accurate))

        # keep only the weights with the best validation accuracy so far
        if val_accurate > best_acc:
            best_acc = val_accurate
            torch.save(net.state_dict(), save_path)

    print('Finished Training')


if __name__ == '__main__':
    main()
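train.py inverts class_to_idx and writes it to class_indices.json, and predict.py later looks classes up with `str(i)`. The reason is that JSON object keys are always strings, so the integer keys come back as strings after a round trip. A small sketch of that behaviour, using the example class names from the comment in train.py (the variable names here are chosen for illustration):

```python
import json

# Same mapping as the example comment in train.py.
class_to_idx = {"daisy": 0, "dandelion": 1, "roses": 2, "sunflower": 3, "tulips": 4}

# Invert it so that the class index maps to the class name.
idx_to_class = dict((val, key) for key, val in class_to_idx.items())

# Round trip through JSON, as train.py (write) and predict.py (read) do.
json_str = json.dumps(idx_to_class, indent=4)
loaded = json.loads(json_str)

print(list(loaded.keys()))  # ['0', '1', '2', '3', '4']
print(loaded[str(2)])       # roses
```

Looking up `loaded[2]` with an integer key would raise a KeyError, which is why the prediction script converts the index to a string first.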

3. predict.py

import os
import json

import torch
from PIL import Image
from torchvision import transforms
import matplotlib.pyplot as plt

from model import vgg


def main():
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

    data_transform = transforms.Compose(
        [transforms.Resize((224, 224)),
         transforms.ToTensor(),
         transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

    # load image
    img_path = "../tulip.jpg"
    assert os.path.exists(img_path), "file: '{}' does not exist.".format(img_path)
    img = Image.open(img_path)
    plt.imshow(img)
    # [N, C, H, W]
    img = data_transform(img)
    # expand batch dimension
    img = torch.unsqueeze(img, dim=0)

    # read class_indict
    json_path = './class_indices.json'
    assert os.path.exists(json_path), "file: '{}' does not exist.".format(json_path)

    with open(json_path, "r") as f:
        class_indict = json.load(f)

    # create model
    model = vgg(model_name="vgg16", num_classes=5).to(device)
    # load model weights
    weights_path = "./vgg16Net.pth"
    assert os.path.exists(weights_path), "file: '{}' does not exist.".format(weights_path)
    model.load_state_dict(torch.load(weights_path, map_location=device))

    model.eval()
    with torch.no_grad():
        # predict class
        output = torch.squeeze(model(img.to(device))).cpu()
        predict = torch.softmax(output, dim=0)
        predict_cla = torch.argmax(predict).numpy()

    print_res = "class: {} prob: {:.3}".format(class_indict[str(predict_cla)],
                                               predict[predict_cla].numpy())
    plt.title(print_res)
    for i in range(len(predict)):
        print("class: {:10} prob: {:.3}".format(class_indict[str(i)],
                                                predict[i].numpy()))
    plt.show()


if __name__ == '__main__':
    main()
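The prediction step turns the raw outputs (logits) of the last fully connected layer into probabilities with softmax and then picks the most likely class. The pure-Python sketch below mirrors what `torch.softmax` followed by `torch.argmax` does for a single sample; the logit values are made up for illustration and do not come from a trained model.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    m = max(logits)                           # subtract the max to avoid overflow
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [1.2, -0.3, 0.5, 3.1, 0.0]  # made-up scores for 5 classes
probs = softmax(logits)

# Index of the largest probability, the counterpart of torch.argmax.
predicted = max(range(len(probs)), key=probs.__getitem__)

print(predicted)             # 3
print(round(sum(probs), 6))  # 1.0
```

Because softmax preserves the ordering of the scores, the predicted class is the same whether the argmax is taken over the logits or over the probabilities; softmax is only needed to report a probability.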

References

1. https://www.bilibili.com/video/BV1q7411T7Y6?spm_id_from=333.999.0.0