Recognizing Plant and Flower Images with GoogLeNet in TensorFlow 2.4

1. Downloading the dataset

Link: https://pan.baidu.com/s/1zs9U76OmGAIwbYr91KQxgg

Extraction code: bhjx

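2. Introduction to GoogLeNet

GoogLeNet is a deep neural network from Google built on the Inception module. It won the 2014 ImageNet competition.

GoogLeNet had two main innovations at the time:

- Model fusion

  GoogLeNet uses many Inception modules. In the figure above, the left side shows the original Inception structure and the right side shows the optimized one. An Inception module applies convolution kernels of different sizes in parallel to extract different features, then fuses the results.

- 1×1 convolutions

  A 1×1 convolution serves two purposes:

  - It adds nonlinearity to the network, because the network gains more layers.

  - It reduces the amount of computation and the number of weights that need training.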
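To make the saving from 1×1 convolutions concrete, here is a small arithmetic sketch. It compares a 5×5 convolution applied directly to a 192-channel input with the same 5×5 convolution preceded by a 1×1 reduction to 16 channels. The channel counts (192 in, 16 reduced, 32 out) are taken from the first Inception block in section 3.4; the helper `conv_params` is a name introduced here for illustration only.

```python
def conv_params(kernel, in_ch, out_ch):
    """Trainable parameters of a kernel x kernel convolution: weights plus biases."""
    return kernel * kernel * in_ch * out_ch + out_ch


in_ch = 192  # channels entering the first Inception block

# 5x5 convolution applied directly to the 192-channel input
direct = conv_params(5, in_ch, 32)

# 1x1 reduction to 16 channels, followed by the 5x5 convolution
reduced = conv_params(1, in_ch, 16) + conv_params(5, 16, 32)

print(direct, reduced)
```

Under these assumptions the reduced branch needs roughly a tenth of the parameters of the direct 5×5 convolution.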

3. Code walkthrough

3.1 Import dependencies

import numpy as np
import tensorflow as tf
from tensorflow.keras.callbacks import EarlyStopping, ModelCheckpoint, LearningRateScheduler, TensorBoard
from tensorflow.keras.layers import Dense, Dropout, Conv2D, MaxPool2D, Flatten, Input, concatenate, AvgPool2D
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.preprocessing.image import ImageDataGenerator

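3.2 Define hyperparameters

classes = 17  # number of classes
batch_size = 32  # batch size
epochs = 100  # number of training epochs
img_size = 224  # images are resized to img_size x img_size
lr = 1e-3  # initial learning rate
datasets = './dataset/data_flower'  # dataset root directory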

3.3 Define the datasets and data augmentation

def data_process_func():
    # ---------------------------------- #
    # Augmentation applied to the training set
    # 1. rotation_range     -> random rotation angle
    # 2. width_shift_range  -> random horizontal shift
    # 3. height_shift_range -> random vertical shift
    # 4. rescale            -> normalize pixel values
    # 5. shear_range        -> random shear transform
    # 6. zoom_range         -> random zoom
    # 7. horizontal_flip    -> random horizontal flip
    # 8. brightness_range   -> random brightness change
    # 9. fill_mode          -> how newly created pixels are filled
    # ---------------------------------- #
    train_data = ImageDataGenerator(
        rotation_range=50,
        width_shift_range=0.1,
        height_shift_range=0.1,
        rescale=1/255.0,
        shear_range=10,
        zoom_range=0.1,
        horizontal_flip=True,
        brightness_range=(0.7, 1.3),
        fill_mode='nearest'
    )

    # ---------------------------------- #
    # The test set is not augmented;
    # normalization is enough
    # ---------------------------------- #
    test_data = ImageDataGenerator(
        rescale=1/255.0
    )

    # ---------------------------------- #
    # Training-set generator
    # Test-set generator
    # ---------------------------------- #
    train_generator = train_data.flow_from_directory(
        f'{datasets}/train',
        target_size=(img_size, img_size),
        batch_size=batch_size
    )
    test_generator = test_data.flow_from_directory(
        f'{datasets}/test',
        target_size=(img_size, img_size),
        batch_size=batch_size
    )

    return train_generator, test_generator
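`flow_from_directory` infers the class labels from the subdirectory names, in alphanumeric order. The following standard-library sketch mimics that mapping so the label order is easy to inspect; it does not call Keras. The helper `class_indices_from_dir` and the folder names are hypothetical and used only for illustration.

```python
import os
import tempfile


def class_indices_from_dir(root):
    """Map each subdirectory name to an index, using sorted (alphanumeric) order."""
    names = sorted(
        d for d in os.listdir(root) if os.path.isdir(os.path.join(root, d))
    )
    return {name: index for index, name in enumerate(names)}


with tempfile.TemporaryDirectory() as root:
    # Hypothetical class folders, created in arbitrary order
    for name in ["flower2", "flower10", "flower1"]:
        os.makedirs(os.path.join(root, name))
    mapping = class_indices_from_dir(root)

print(mapping)
```

Note that string sorting places `flower10` before `flower2`, so any code that maps a predicted index back to a class name should use the same sorted order.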

3.4 Define the network architecture

def Inception(x, filters, name):
    # filters = [1x1, 3x3 reduce, 3x3, 5x5 reduce, 5x5, pool projection]
    # Branch 1: 1x1 convolution
    t1 = Conv2D(filters=filters[0], kernel_size=(1, 1), strides=(1, 1), padding='same', activation='relu', name=f'{name}Inception_1_Conv1')(x)
    # Branch 2: 1x1 reduction followed by a 3x3 convolution
    t2 = Conv2D(filters=filters[1], kernel_size=(1, 1), strides=(1, 1), padding='same', activation='relu', name=f'{name}Inception_2_Conv1')(x)
    t2 = Conv2D(filters=filters[2], kernel_size=(3, 3), strides=(1, 1), padding='same', activation='relu', name=f'{name}Inception_2_Conv2')(t2)
    # Branch 3: 1x1 reduction followed by a 5x5 convolution
    t3 = Conv2D(filters=filters[3], kernel_size=(1, 1), strides=(1, 1), padding='same', activation='relu', name=f'{name}Inception_3_Conv1')(x)
    t3 = Conv2D(filters=filters[4], kernel_size=(5, 5), strides=(1, 1), padding='same', activation='relu', name=f'{name}Inception_3_Conv2')(t3)
    # Branch 4: 3x3 max pooling followed by a 1x1 projection
    pooling = MaxPool2D(pool_size=(3, 3), strides=(1, 1), padding='same', name=f'{name}Inception_4_Pool1')(x)
    pooling = Conv2D(filters=filters[5], kernel_size=(1, 1), strides=(1, 1), padding='same', activation='relu', name=f'{name}Inception_4_Pool2')(pooling)
    # Fuse the four branches along the channel axis
    x = concatenate([t1, t2, t3, pooling], axis=3)
    return x
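Because the four branches are concatenated along the channel axis, the number of output channels of an Inception block is the sum of the last layer of each branch: `filters[0] + filters[2] + filters[4] + filters[5]`. The sketch below checks this arithmetic for three of the filter lists passed to `Inception` in this network; `inception_out_channels` is a helper name introduced here for illustration.

```python
def inception_out_channels(filters):
    """Output channels after concatenating the four Inception branches."""
    # filters[1] and filters[3] are the 1x1 reductions; they do not reach the output
    return filters[0] + filters[2] + filters[4] + filters[5]


blocks = {
    "Inception_block1": [64, 96, 128, 16, 32, 32],
    "Inception_block2": [128, 128, 192, 32, 96, 64],
    "Inception_block9": [384, 192, 384, 48, 128, 128],
}

out_channels = {name: inception_out_channels(f) for name, f in blocks.items()}
print(out_channels)
```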

def Goolenet(inputs, classes):
    # Stem: convolution and pooling layers
    x = Conv2D(filters=64, kernel_size=(7, 7), strides=(2, 2), padding='same', activation='relu', name='Conv1')(inputs)
    x = MaxPool2D(pool_size=(3, 3), strides=(2, 2), padding='same', name='Pool1')(x)
    x = Conv2D(filters=64, kernel_size=(1, 1), strides=(1, 1), padding='same', activation='relu', name='Conv2')(x)
    x = Conv2D(filters=192, kernel_size=(3, 3), strides=(1, 1), padding='same', activation='relu', name='Conv3')(x)
    x = MaxPool2D(pool_size=(3, 3), strides=(2, 2), padding='same', name='Pool2')(x)

    # Inception blocks 1 and 2
    x = Inception(x, filters=[64, 96, 128, 16, 32, 32], name='Inception_block1')
    x = Inception(x, filters=[128, 128, 192, 32, 96, 64], name='Inception_block2')
    x = MaxPool2D(pool_size=(3, 3), strides=(2, 2), padding='same', name='Pool3')(x)

    # Inception blocks 3 to 7
    x = Inception(x, filters=[192, 96, 208, 16, 48, 64], name='Inception_block3')
    x = Inception(x, filters=[160, 112, 224, 24, 64, 64], name='Inception_block4')
    x = Inception(x, filters=[128, 128, 256, 24, 64, 64], name='Inception_block5')
    x = Inception(x, filters=[112, 144, 288, 32, 64, 64], name='Inception_block6')
    x = Inception(x, filters=[256, 160, 320, 32, 128, 128], name='Inception_block7')
    x = MaxPool2D(pool_size=3, strides=2, padding='same', name='Pool4')(x)

    # Inception blocks 8 and 9
    x = Inception(x, filters=[256, 160, 320, 32, 128, 128], name='Inception_block8')
    x = Inception(x, filters=[384, 192, 384, 48, 128, 128], name='Inception_block9')

    # Classification head
    x = AvgPool2D(pool_size=7, strides=7, padding='same', name='AvgPool1')(x)
    x = Flatten()(x)
    x = Dropout(0.4)(x)
    x = Dense(classes, activation='softmax')(x)
    return x
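Every strided layer in this network uses `padding='same'`, for which the output size is `ceil(input_size / stride)`. The following sketch traces the spatial size of a 224×224 input through the strided layers to show why a 7×7 average pool fits at the end. It is plain arithmetic and does not build the model; `same_out` is a helper name introduced here.

```python
import math


def same_out(size, stride):
    """Spatial output size of a layer that uses padding='same'."""
    return math.ceil(size / stride)


size = 224  # img_size from section 3.2
trace = []
# Only layers with stride > 1 change the spatial size
for name, stride in [("Conv1", 2), ("Pool1", 2), ("Pool2", 2),
                     ("Pool3", 2), ("Pool4", 2), ("AvgPool1", 7)]:
    size = same_out(size, stride)
    trace.append((name, size))

print(trace)

# The last Inception block outputs 384 + 384 + 128 + 128 channels
flatten_features = size * size * (384 + 384 + 128 + 128)
print(flatten_features)
```

With these settings the feature map is 7×7 before `AvgPool1` and 1×1 after it, so `Flatten` produces a vector of 1024 features for the final `Dense` layer.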

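3.5 Define the learning-rate schedule

# Learning-rate adjustment.
# LearningRateScheduler passes the current learning rate as the second argument,
# so from epoch 3 onward the rate is multiplied by 0.93 every epoch.
def adjust_lr(epoch, lr=lr):
    if epoch >= 3:
        lr = lr * 0.93
    print("Setting learning rate to %s" % (lr))
    return lr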
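To see what this schedule produces over time, the sketch below replays it in plain Python. It assumes that on each epoch the callback calls the function with the learning rate returned for the previous epoch, which is how `LearningRateScheduler` behaves when the schedule function accepts two arguments. The loop is a stand-in for the training loop, not Keras code.

```python
def adjust_lr(epoch, lr):
    """Keep the rate for the first three epochs, then decay it by 7% per epoch."""
    return lr if epoch < 3 else lr * 0.93


lr = 1e-3  # initial learning rate from section 3.2
history = []
for epoch in range(6):
    lr = adjust_lr(epoch, lr)  # the returned rate is fed back in on the next epoch
    history.append(lr)

for epoch, value in enumerate(history):
    print(epoch, value)
```

Under that assumption the rate stays at 1e-3 for epochs 0 to 2 and then decays geometrically, so at epoch e ≥ 3 it equals 1e-3 × 0.93^(e − 2).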

3.6 Train the model

# Use the GPU and let TensorFlow allocate GPU memory on demand
gpus = tf.config.experimental.list_physical_devices(device_type='GPU')
for gpu in gpus:
    tf.config.experimental.set_memory_growth(gpu, True)

inputs = Input(shape=(img_size, img_size, 3))

# Data generators
train_generator, test_generator = data_process_func()

model = Model(inputs=inputs, outputs=Goolenet(inputs=inputs, classes=classes))

callbacks = [
    # Stop training if val_loss has not decreased for 10 epochs
    EarlyStopping(monitor='val_loss', patience=10, verbose=1),
    # Save a weights file to the logs folder after every epoch
    ModelCheckpoint('logs/ep{epoch:03d}-loss{loss:.3f}-val_loss{val_loss:.3f}.h5', monitor='val_loss',
                    save_weights_only=True, save_best_only=False, save_freq='epoch'),
    LearningRateScheduler(adjust_lr),
    # TensorBoard(log_dir='./logs1')
]

print('---------->epoch0 starting--------->')

model.compile(optimizer=Adam(learning_rate=lr), loss='categorical_crossentropy', metrics=['accuracy'])

history = model.fit(
    x=train_generator,
    validation_data=test_generator,
    epochs=epochs,
    workers=1,
    callbacks=callbacks,
)

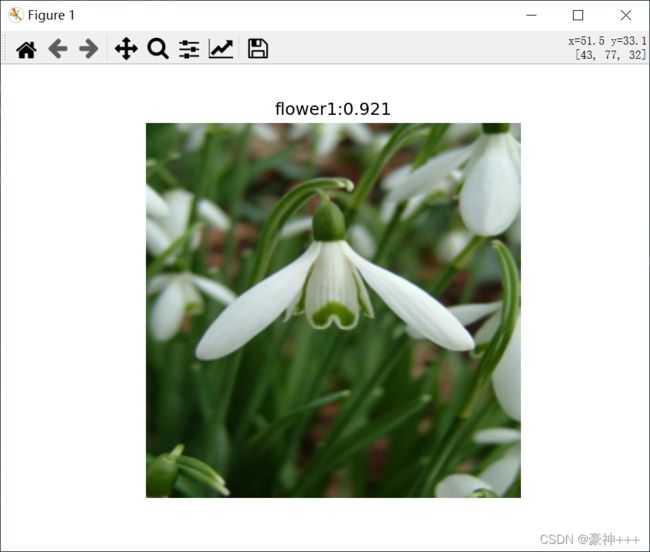
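The checkpoint path given to `ModelCheckpoint` is a format template that is filled in with the epoch number and the logged metrics. The sketch below uses Python's own `str.format` to show what a resulting file name looks like; the epoch and loss values are hypothetical placeholders, not results from this training run.

```python
template = 'logs/ep{epoch:03d}-loss{loss:.3f}-val_loss{val_loss:.3f}.h5'

# Hypothetical values, chosen only to show the formatting
example = template.format(epoch=5, loss=1.2341, val_loss=0.9441)
print(example)
```

The epoch is zero-padded to three digits and the losses are rounded to three decimals, which keeps the files sorted by epoch in a directory listing.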

3.7 Predict images

import os

import matplotlib.pyplot as plt
import numpy as np
from PIL import Image
from tensorflow.keras.layers import Input
from tensorflow.keras.models import Model

# -------------------------------------------------#
# Test-set path
# Weights path
# Network (Goolenet is the function from section 3.4)
# Number of classes
# Image size
# -------------------------------------------------#
datasets = './dataset/data_flower/test'
# Sort the folder names so the index order matches the labels used during training
names = sorted(os.listdir(datasets))
weight = './model_data/test_acc-0.754-Goolenet_flowers_val_loss0.944-1.h5'
classes = 17
img_size = 224

# -------------------------------------------------#
# Normalization
def preprocess_input(x):
    x /= 255
    return x

inputs = Input(shape=(img_size, img_size, 3))
model = Model(inputs=inputs, outputs=Goolenet(inputs=inputs, classes=classes))

# -------------------------------------------------#
# Load the trained weights
# -------------------------------------------------#
model.load_weights(weight)

while True:
    img_path = input('input img_path:')
    try:
        # Convert to RGB so grayscale or RGBA files still give 3 channels
        img = Image.open(img_path).convert('RGB')
        img = img.resize((img_size, img_size))
        image_data = np.expand_dims(preprocess_input(np.array(img, np.float32)), 0)
    except Exception:
        print('The path is invalid!')
        continue
    else:
        plt.imshow(img)
        plt.axis('off')
        # Predict once and reuse the result
        p = model.predict(image_data)[0]
        print(p)
        pred_name = names[np.argmax(p)]
        plt.title('%s:%.3f' % (pred_name, np.max(p)))
        plt.show()

Input: ./dataset/data_flower/test/flower1/image_0082.jpg
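The preprocessing and label lookup in the prediction loop can be checked without a trained model. The sketch below uses a random array in place of a real photo and a made-up probability vector in place of `model.predict`; the class names and probabilities are hypothetical.

```python
import numpy as np

img_size = 224

# Stand-in for a resized RGB photo: uint8 pixels in [0, 255]
rng = np.random.default_rng(0)
fake_img = rng.integers(0, 256, size=(img_size, img_size, 3), dtype=np.uint8)

# Same steps as the prediction loop: cast to float32, scale to [0, 1], add a batch axis
batch = np.expand_dims(fake_img.astype(np.float32) / 255, 0)
print(batch.shape, batch.min(), batch.max())

# Hypothetical sorted class names and a made-up softmax output for three classes
names = ["flower1", "flower10", "flower2"]
p = np.array([0.1, 0.7, 0.2])
pred_name = names[int(np.argmax(p))]
print('%s:%.3f' % (pred_name, np.max(p)))
```

The batch has shape `(1, 224, 224, 3)`, which matches the `Input` layer, and the predicted name is the entry of `names` at the index of the largest probability.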