决策树的生成与剪枝(原理与代码)

所做的实验为快来一起挖掘幸福感!读取数据函数稍微改改就能用

(遇到特征有连续值时(就比如西瓜数据集3.0),参考决策树(decision tree)(三)——连续值处理

依照这篇博客,我自己尝试实现了一下:决策树处理连续值的代码)

文章目录

- 决策树的剪枝(ID3、C4.5)

-

- 1.概述

- 2.两种参照

-

-

- 2.1基于损失函数

- 2.2基于精确度

-

- 3.两种算法

-

- 3.1预剪枝

-

- 3.1.1原理介绍

- 3.1.2实例分析

- 3.1.3代码

- 3.1.4理解与感悟

- 3.2后剪枝

-

- 3.2.1原理介绍

- 3.2.2实例分析

- 3.2.3代码

- 3.2.4理解与感悟

- 各函数代码与注解

-

- 1.得到所有的特征名,删除其中的id、happiness、province, city, county四个无用特征用于后续分析

- 2.分别得到训练数据和测试数据

- 3.计算输入序列信息熵

- 4.计算某特征下的条件信息熵

- 5.计算信息增益

- 6.计算信息增益率

- 7.投票,得到出现次数最多的标签

- 8.通过信息增益或者信息增益比,选择最好的特征

- 9.根据特征,划分数据集得到子集

- 10.创造一颗带内部结点标签的树,便于后剪枝

- 11.由带标签树转化为不带标签树,便于可视化

- 12.后剪枝

- 13.或者直接预剪枝构建决策树

- 14.决策树用于分类

- 15.决策树的可视化

- 16.主函数,采用预剪枝、交叉验证

- 相关拓展

决策树的剪枝(ID3、C4.5)

1.概述

决策树生成算法递归地产生决策树,直到不能继续下去为止。这样产生的树往往 对训练数据的分类很准确,但对未知的测试数据的分类却没有那么准确,即出现过拟 合现象。过拟合的原因在于学习时过多地考虑如何提高对训练数据的正确分类,从而 构建出过于复杂的决策树。解决这个问题的办法是考虑决策树的复杂度,可以考虑在生成决策树时,顺便对其进行剪枝---预剪枝,还有一种方法是对已生成的决策树进行简化。

2.两种参照

2.1基于损失函数

设树T的叶子结点个数为|T|,t是其中一个叶子结点。在这个叶子结点中,有Nt个样本。这些样本中有k类,第I类的样本点有Nti(I = 0、1...k),Ht(T)为叶结点t上的经验熵。定义α为调和参数,当α确定时,|T|越大,树的拟合程度提高的同时复杂度也会提高。若α取很小,此时基本上只考虑拟合程度而不考虑树的复杂度,容易导致过拟合;当α很大,此时树的复杂度占很大的比重,为了使损失函数总体小,树的复杂度会很小。总的来说,α用于平衡损失函数与树的复杂度。损失函数大致表示为所有叶子结点的经验熵与α X 叶子结点个数,具体公式为:

C a ( T ) = ∑ t = 1 ∣ T ∣ N t H t ( T ) + α ∣ T ∣ C_a(T) = \sum_{t=1}^{|T|}{N_t}{H_t(T)}+α|T| Ca(T)=t=1∑∣T∣NtHt(T)+α∣T∣

其中,叶结点t上的经验熵Ht(T)的表达式为

H t ( T ) = − ∑ k N t k N t l o g N t k N t {H_t(T)}=-\sum_{k}\frac{N_{tk}}{N_t}log\frac{N_{tk}}{N_t} Ht(T)=−k∑NtNtklogNtNtk

将经验熵代入损失函数得:

C a ( T ) = ∑ t = 1 ∣ T ∣ N t H t ( T ) + α ∣ T ∣ = − ∑ t = 1 ∣ T ∣ ∑ k = 1 K N t k l o g N t k N t + α ∣ T ∣ C_a(T)= \sum_{t=1}^{|T|}{N_t}{H_t(T)}+α|T|=-\sum_{t=1}^{|T|}\sum_{k=1}^{K}N_{tk}log\frac{N_{tk}}{N_t}+α|T| Ca(T)=t=1∑∣T∣NtHt(T)+α∣T∣=−t=1∑∣T∣k=1∑KNtklogNtNtk+α∣T∣

简化为:

C a ( T ) = C ( T ) + α ∣ T ∣ C_a(T) = C(T) +α|T| Ca(T)=C(T)+α∣T∣

2.2基于精确度

由于损失函数的计算更为复杂,所以可以基于验证集的精确度进行剪枝。文章具体使用的也是这种参照方法。概括性讲,对于训练集的某一特征,可以选择是否将其按照它的取值划分训练集。此时可以利用验证集的精确度来决定。当不划分时,精确度的计算公式表达为:验证集中,类别标签等于当前特征标签的样本个数/验证集中所有样本个数;当划分时,验证集随着该特征向下划分,计算不同取值下类别标签等于当前特征标签的样本个数,所有相等的验证集样本数//验证集中所有样本个数便是划分后的精确度。比较精确度,若划分后的精确度>=划分后的精确度,则划分。两者相等时也决定划分的原因是因为,虽然当前的划分对于精确度没有提升,但划分后不乏会有提升的可能。

具体的步骤在下面的算法中体现。

3.两种算法

3.1预剪枝

3.1.1原理介绍

预剪枝需要将训练集划分成训练集和验证集两部分。在根据训练集生成决策树时,面对一个已经被选中的(信息增益最大)的待划分特征,需要根据验证集在该特征划分前、划分后两种情况下不同的精确度来决定是否划分。若划分后的精确度高于或者等于划分前,则划分;若划分后的精确度小于划分前,则直接给定该特征的叶子结点,其类别标签为训练样例数最多的类别。

3.1.2实例分析

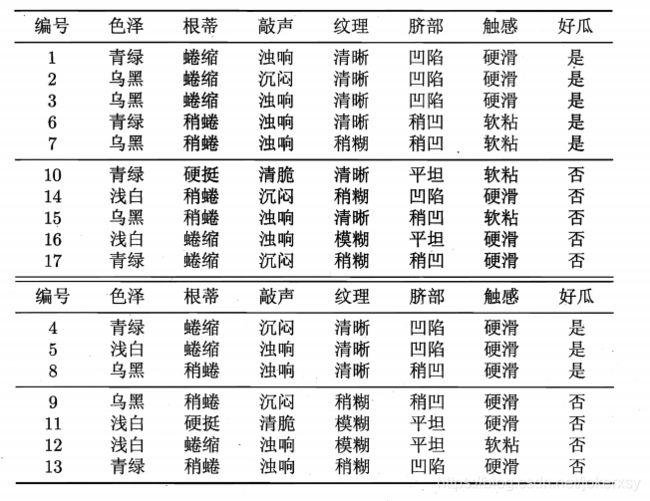

参照上图中,西瓜书的数据集。[1,2,3,6,7,10,14,15,16,17]为训练集,[4,5,8,9,11,12,13]为验证集。

(1)按照训练集计算所有特征的信息增益,得到脐部的信息增益最大,设定为根结点,考虑是否将其进行划分。若不划分,由于10个训练样本中5个为好瓜、5个为坏瓜,规定该内部结点上的类别标签为好瓜。则验证集的精确率为3/7,即4、5、8三个样本判断正确。

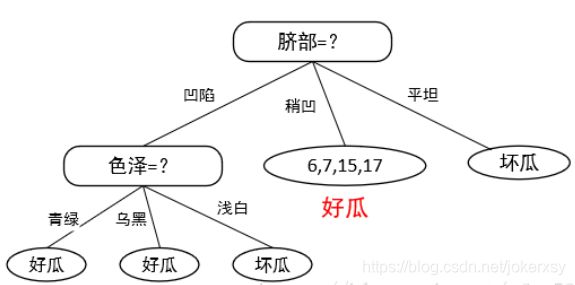

若按照凹陷、稍凹、平坦进行划分,则得到下图:

脐带为凹陷的训练样本中,好瓜占多数,则标为好瓜;剩下的依次标为好瓜、坏瓜。此时验证集的正确率为5/7,具体数据见表格:

5/7大于3/7,决定划分。

(2)考虑脐部为凹陷时,根据[1,2,3,14]组成的子集计算除脐部外所有特征的信息增益,发现色泽最大。则考虑是否要对色泽进行划分。

若不划分,则验证集的精确度和上一步得到的结果完全一样,就是5/7.

若划分,则得到下图:

由于脐部凹陷且色泽青绿的只有1(好瓜),则标为好瓜;剩下的依次为好瓜、坏瓜。在验证集中,脐部为凹陷的有三个样本:4、5、13,其中,4和13由于色泽青绿判为好瓜,5色泽浅白判为坏瓜,其余的验证集由于脐部非凹陷,则被判为与上次一样的结果。具体数据见列表:

由于脐部凹陷且色泽青绿的只有1(好瓜),则标为好瓜;剩下的依次为好瓜、坏瓜。在验证集中,脐部为凹陷的有三个样本:4、5、13,其中,4和13由于色泽青绿判为好瓜,5色泽浅白判为坏瓜,其余的验证集由于脐部非凹陷,则被判为与上次一样的结果。具体数据见列表:

得到精确度4/7<5/7,则不划分,该结点设为叶子结点,标签为好瓜。

得到精确度4/7<5/7,则不划分,该结点设为叶子结点,标签为好瓜。

(3)与上面一样,接下来对脐部为稍凹的情况进行分析,计算得到信息增益的特征,并用验证集来决定是否对其进行划分。

3.1.3代码

# 精确度与上面介绍的有所不同,为计算简单,分母改成了当前验证集样本树,而不是所有。

def createTreePrePruning(dataTrain, labelTrain, dataValid, labelValid, feat_name, method='id3'):

dataTrain = np.asarray(dataTrain)

labelTrain = np.asarray(labelTrain)

dataValid = np.asarray(dataValid)

labelValid = np.asarray(labelValid)

feat_name = np.asarray(feat_name)

# 如果结果为单一结果

if len(set(labelTrain)) == 1:

return labelTrain[0]

# 如果没有待分类特征

elif dataTrain.size == 0:

return voteLabel(labelTrain)

# 其他情况则选取特征

bestFeat, bestEnt = bestFeature(dataTrain, labelTrain, method=method)

# 取特征名称

bestFeatName = feat_name[bestFeat]

# 从特征名称列表删除已取得特征名称

feat_name = np.delete(feat_name, [bestFeat])

# 根据最优特征进行分割

dataTrainSet, labelTrainSet = splitFeatureData(dataTrain, labelTrain, bestFeat)

# 预剪枝评估

# 划分前的分类标签

labelTrainLabelPre = voteLabel(labelTrain)

labelTrainRatioPre = equalNums(labelTrain, labelTrainLabelPre) / labelTrain.size

# 划分后的精度计算

if dataValid is not None:

dataValidSet, labelValidSet = splitFeatureData(dataValid, labelValid, bestFeat)

# 划分前的验证标签正确比例

labelValidRatioPre = equalNums(labelValid, labelTrainLabelPre) / labelValid.size

# 划分后 每个特征值的分类标签正确的数量

labelTrainEqNumPost = 0

for val in labelTrainSet.keys():

labelTrainEqNumPost += equalNums(labelValidSet.get(val), voteLabel(labelTrainSet.get(val))) + 0.0

# 划分后 正确的比例

labelValidRatioPost = labelTrainEqNumPost / labelValid.size

# 如果没有评估数据 但划分前的精度等于最小值0.5 则继续划分,这一步不是很理解

if dataValid is None and labelTrainRatioPre == 0.5:

decisionTree = {bestFeatName: {}}

for featValue in dataTrainSet.keys():

decisionTree[bestFeatName][featValue] = createTreePrePruning(dataTrainSet.get(featValue),

labelTrainSet.get(featValue)

, None, None, feat_name, method)

elif dataValid is None:

return labelTrainLabelPre

# 如果划分后的精度相比划分前的精度下降, 则直接作为叶子节点返回

elif labelValidRatioPost < labelValidRatioPre:

return labelTrainLabelPre

else:

# 根据选取的特征名称创建树节点

decisionTree = {bestFeatName: {}}

# 对最优特征的每个特征值所分的数据子集进行计算

for featValue in dataTrainSet.keys():

decisionTree[bestFeatName][featValue] = createTreePrePruning(dataTrainSet.get(featValue),

labelTrainSet.get(featValue)

, dataValidSet.get(featValue),

labelValidSet.get(featValue)

, feat_name, method)

return decisionTree所做的实验为快来一起挖掘幸福感!

利用预剪枝得到的决策树图像为:

正确率在百分之六十左右

3.1.4理解与感悟

预剪枝的优点是:操作简单,仅需在原有的决策树基础上,每一次选择特征后进行判断是否要划分即可;效率高,能够在创建树的同时进行剪枝;适合大规模问题。但它也有自己的缺点,就是说预剪枝的本质是贪心的,每一次条件不符合就禁止了分支的展开,这样容易出现欠拟合的情况。

3.2后剪枝

3.2.1原理介绍

后剪枝在由训练集生成的决策树上进行,并且,这棵决策树一定是有标记的,即每一个内部结点(非叶子结点)都有类别标记。验证集对已生成的、标记过的决策树进行修剪。每一次找到信息增益最大的特征,利用验证集判断是否要划分。按照已有标记计算未划分时验证集的精确度;假设划分,验证集非空,则将验证集按照特征取值划分,并与已生成决策树比较,计算精确度。比较两者,决定是否裁剪。

3.2.2实例分析

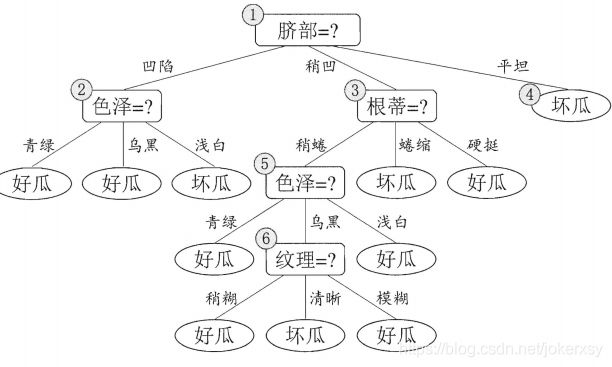

上图为利用训练集构建的未被裁剪的决策树,接着利用验证集对其进行后剪枝。

上图为利用训练集构建的未被裁剪的决策树,接着利用验证集对其进行后剪枝。

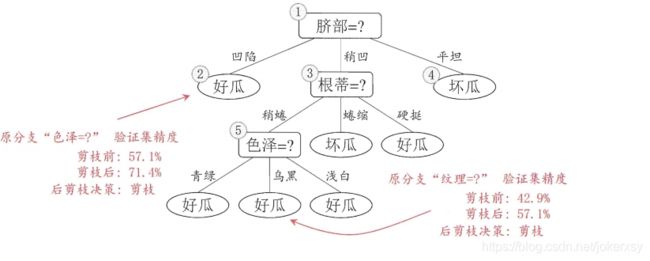

(1)利用未被裁剪的决策树,计算得验证集的精确度为42.9%。现在考虑纹理(⑥)这一结点。若将其领衔的分支剪除,则相当于把⑤替换为叶结点.替换后的叶结点包含编号为 {7 15} 的训练样本,于是该叶结点的类别标记为"好瓜",此时决策树的验证集精度提高至 57.1%. 于是,后剪枝策略决定剪枝。

(2)然后考察结点⑤,若将其领衔的子树替换为叶结点,则替换后的叶结点包含编号为 {6 15} 的训练样例,叶结点类别标记为"好瓜’此时决策树验证集精度仍为 57.1%. 于是,可以不进行剪枝。

(3)接下去分别考虑,色泽、根蒂、脐部,最终得到下图:

剪枝后的决策树对于验证集的精确度为71.4%。

剪枝后的决策树对于验证集的精确度为71.4%。

3.2.3代码

def treePostPruning(labeledTree, dataValid, labelValid, feats):

labelValidSet = {}

newTree = labeledTree.copy()

dataValid = np.asarray(dataValid)

labelValid = np.asarray(labelValid)

feats = np.asarray(feats)

featName = list(labeledTree.keys())[0]

featCol = np.argwhere(feats == featName)[0][0]

feats = np.delete(feats, [featCol])

newTree[featName] = labeledTree[featName].copy()

featValueDict = newTree[featName]

featPreLabel = featValueDict.pop("_vpdl")

# print("当前节点预划分标签:" + featPreLabel)

# 是否为子树的标记

subTreeFlag = 0

# 分割测试数据 如果有数据 则进行测试或递归调用 np的array我不知道怎么判断是否None, 用is None是错的

dataFlag = 1 if sum(dataValid.shape) > 0 else 0

if dataFlag == 1:

# print("当前节点有划分数据!")

dataValidSet, labelValidSet = splitFeatureData(dataValid, labelValid, featCol)

for featValue in featValueDict.keys():

# print("当前节点属性 {0} 的子节点:{1}".format(featValue ,str(featValueDict[featValue])))

if dataFlag == 1 and type(featValueDict[featValue]) == dict:

subTreeFlag = 1

# 如果是子树则递归

newTree[featName][featValue] = treePostPruning(featValueDict[featValue], dataValidSet.get(featValue),

labelValidSet.get(featValue), feats)

# 如果递归后为叶子 则后续进行评估

if type(featValueDict[featValue]) != dict:

subTreeFlag = 0

# 如果没有数据 则转换子树

if dataFlag == 0 and type(featValueDict[featValue]) == dict:

subTreeFlag = 1

# print("当前节点无划分数据!直接转换树:"+str(featValueDict[featValue]))

newTree[featName][featValue] = convertTree(featValueDict[featValue])

# print("转换结果:" + str(convertTree(featValueDict[featValue])))

# 如果全为叶子节点, 评估需要划分前的标签,这里思考两种方法,

# 一是,不改变原来的训练函数,评估时使用训练数据对划分前的节点标签重新打标

# 二是,改进训练函数,在训练的同时为每个节点增加划分前的标签,这样可以保证评估时只使用测试数据,避免再次使用大量的训练数据

# 这里考虑第二种方法 写新的函数 createTreeWithLabel,当然也可以修改createTree来添加参数实现

if subTreeFlag == 0:

ratioPreDivision = equalNums(labelValid, featPreLabel) / labelValid.size

equalNum = 0

for val in labelValidSet.keys():

if val in featValueDict:

equalNum += equalNums(labelValidSet[val], featValueDict[val])

else:

equalNum += len(labelValidSet[val])/5 # 一共五类,随便选一类

ratioAfterDivision = equalNum / labelValid.size

# 如果划分后的测试数据准确率低于划分前的,则划分无效,进行剪枝,即使节点等于预划分标签

# 注意这里取的是小于,如果有需要 也可以取 小于等于

if ratioAfterDivision < ratioPreDivision:

newTree = featPreLabel

return newTree3.2.4理解与感悟

后剪枝决策树通常比预剪枝决策树保留更多的分支。一般情形下,后剪枝决策树的欠拟合风险很小,泛化性能往往优先于预剪枝决策树。但后剪枝过程是在生成完全决策树之后进行的 并且要白底向上地对树中的所有非叶结点进行逐一考察,因此其训练时间开销比未剪枝决策树和预剪枝决策树都要大得多。

各函数代码与注解

1.得到所有的特征名,删除其中的id、happiness、province, city, county四个无用特征用于后续分析

def get_feats(filename):

with open(filename,'r') as fn:

feats_name = fn.read().strip().split()

# 删除id、happiness、province, city, county

for i in ['id','happiness','province', 'city', 'county']:

feats_name.remove(i)

return feats_name2.分别得到训练数据和测试数据

def get_data(filename):

with open(filename,'r') as fn:

all_data = fn.read().strip().split("\n")

all_data_line = []

for i in range(len(all_data)):

all_data_line.append(list(map(int,all_data[i].split())))

all_data_line = np.array(all_data_line)

if filename.startswith("train"):

# 删除id、province、city、county列以及第一列的类别项,并将类别项移到最后一列(代码要求)

tmp = all_data_line[:,1]

all_data_line = np.delete(all_data_line,[0,1,3,4,5],axis=1)

r = all_data_line.shape[1]

all_data_line = np.insert(all_data_line,r,values=tmp, axis=1) # 插入到最后一列

else:# 测试集

all_data_line = np.delete(all_data_line,[0,2,3,4],axis=1)

return all_data_line3.计算输入序列信息熵

def singleEntropy(x):

# 转换为 numpy 矩阵

x = np.asarray(x)

# 取所有不同值

xValues = set(x)

# 计算熵值

entropy = 0

for xValue in xValues:

p = equalNums(x, xValue) / x.size

entropy -= p * log(p, 2)

return entropy4.计算某特征下的条件信息熵

def conditionnalEntropy(feature, y):

"""计算 某特征feature 条件下y的信息熵"""

# 转换为numpy

feature = np.asarray(feature)

y = np.asarray(y)

# 取特征的不同值

featureValues = set(feature)

# 计算熵值

entropy = 0

for feat in featureValues:

# 解释:feature == feat 是得到取feature中所有元素值等于feat的元素的索引(类似这样理解)

# y[feature == feat] 是取y中 feature元素值等于feat的元素索引的 y的元素的子集

p = equalNums(feature, feat) / feature.size

entropy += p * singleEntropy(y[feature == feat])

return entropy5.计算信息增益

def infoGain(feature, y):

return singleEntropy(y) - conditionnalEntropy(feature, y)6.计算信息增益率

def infoGainRatio(feature, y):

return 0 if singleEntropy(feature) == 0 else infoGain(feature, y) / singleEntropy(feature)7.投票,得到出现次数最多的标签

def voteLabel(labels):

uniqLabels = list(set(labels))

labels = np.asarray(labels)

finalLabel = 0

labelNum = []

for label in uniqLabels:

# 统计每个标签值得数量

labelNum.append(equalNums(labels, label))

# 返回数量最大的标签

return uniqLabels[labelNum.index(max(labelNum))]8.通过信息增益或者信息增益比,选择最好的特征

def bestFeature(dataSet, labels, method ='id3'):

assert method in ['id3', 'c45'], "method 须为id3或c45"

dataSet = np.asarray(dataSet)

labels = np.asarray(labels)

# 根据输入的method选取 评估特征的方法:id3 -> 信息增益; c45 -> 信息增益率

def calcEnt(feature, labels):

if method == 'id3':

return infoGain(feature, labels)

elif method == 'c45' :

return infoGainRatio(feature, labels)

# 特征数量 即 data 的列数量

featureNum = dataSet.shape[1]

# 计算最佳特征

bestEnt = 0

bestFeat = -1

for feature in range(featureNum):

ent = calcEnt(dataSet[:, feature], labels)

if ent >= bestEnt:

bestEnt = ent

bestFeat = feature

# print("feature " + str(feature + 1) + " ent: " + str(ent)+ "\t bestEnt: " + str(bestEnt))

return bestFeat, bestEnt9.根据特征,划分数据集得到子集

def splitFeatureData(data, labels, feature):

"""feature 为特征列的索引"""

# 取特征列

print(np.asarray(data).shape)

print(feature)

features = np.asarray(data)[:, feature]

# 数据集中删除特征列

data = np.delete(np.asarray(data), feature, axis=1)

# 标签

labels = np.asarray(labels)

uniqFeatures = set(features)

dataSet = {}

labelSet = {}

for feat in uniqFeatures:

dataSet[feat] = data[features == feat]

labelSet[feat] = labels[features == feat]

return dataSet, labelSet10.创造一颗带内部结点标签的树,便于后剪枝

def createTreeWithLabel(dataSet, labels, feats, method ='id3'):

dataSet = np.asarray(dataSet)

labels = np.asarray(labels)

feats = np.asarray(feats)

# 如果不划分的标签为

votedLabel = voteLabel(labels)

# 如果结果为单一结果

if len(set(labels)) == 1:

return votedLabel

# 如果没有待分类特征

elif dataSet.size == 0:

return votedLabel

# 其他情况则选取特征

bestFeat, bestEnt = bestFeature(dataSet, labels, method = method)

# 取特征名称

bestFeatName = feats[bestFeat]

# 从特征名称列表删除已取得特征名称

feats = np.delete(feats, [bestFeat])

# 根据选取的特征名称创建树节点 划分前的标签votedPreDivisionLabel=_vpdl

decisionTree = {bestFeatName: {"_vpdl": votedLabel}}

# 根据最优特征进行分割

dataSet, labelSet = splitFeatureData(dataSet, labels, bestFeat)

# 对最优特征的每个特征值所分的数据子集进行计算

for featValue in dataSet.keys():

decisionTree[bestFeatName][featValue] = createTreeWithLabel(dataSet.get(featValue), labelSet.get(featValue), feats, method)

return decisionTree11.由带标签树转化为不带标签树,便于可视化

def convertTree(labeledTree):

normalTree = labeledTree.copy()

nodeName = list(labeledTree.keys())[0]

normalTree[nodeName] = labeledTree[nodeName].copy()

for val in list(labeledTree[nodeName].keys()):

if val == "_vpdl":

normalTree[nodeName].pop(val)

elif type(labeledTree[nodeName][val]) == dict:

normalTree[nodeName][val] = convertTree(labeledTree[nodeName][val])

return normalTree12.后剪枝

def treePostPruning(labeledTree, dataValid, labelValid, feats):

labelValidSet = {}

newTree = labeledTree.copy()

dataValid = np.asarray(dataValid)

labelValid = np.asarray(labelValid)

feats = np.asarray(feats)

featName = list(labeledTree.keys())[0]

featCol = np.argwhere(feats == featName)[0][0]

feats = np.delete(feats, [featCol])

newTree[featName] = labeledTree[featName].copy()

featValueDict = newTree[featName]

featPreLabel = featValueDict.pop("_vpdl")

# print("当前节点预划分标签:" + featPreLabel)

# 是否为子树的标记

subTreeFlag = 0

# 分割测试数据 如果有数据 则进行测试或递归调用 np的array我不知道怎么判断是否None, 用is None是错的

dataFlag = 1 if sum(dataValid.shape) > 0 else 0

if dataFlag == 1:

# print("当前节点有划分数据!")

dataValidSet, labelValidSet = splitFeatureData(dataValid, labelValid, featCol)

for featValue in featValueDict.keys():

# print("当前节点属性 {0} 的子节点:{1}".format(featValue ,str(featValueDict[featValue])))

if dataFlag == 1 and type(featValueDict[featValue]) == dict:

subTreeFlag = 1

# 如果是子树则递归

newTree[featName][featValue] = treePostPruning(featValueDict[featValue], dataValidSet.get(featValue),

labelValidSet.get(featValue), feats)

# 如果递归后为叶子 则后续进行评估

if type(featValueDict[featValue]) != dict:

subTreeFlag = 0

# 如果没有数据 则转换子树

if dataFlag == 0 and type(featValueDict[featValue]) == dict:

subTreeFlag = 1

# print("当前节点无划分数据!直接转换树:"+str(featValueDict[featValue]))

newTree[featName][featValue] = convertTree(featValueDict[featValue])

# print("转换结果:" + str(convertTree(featValueDict[featValue])))

# 如果全为叶子节点, 评估需要划分前的标签,这里思考两种方法,

# 一是,不改变原来的训练函数,评估时使用训练数据对划分前的节点标签重新打标

# 二是,改进训练函数,在训练的同时为每个节点增加划分前的标签,这样可以保证评估时只使用测试数据,避免再次使用大量的训练数据

# 这里考虑第二种方法 写新的函数 createTreeWithLabel,当然也可以修改createTree来添加参数实现

if subTreeFlag == 0:

ratioPreDivision = equalNums(labelValid, featPreLabel) / labelValid.size

equalNum = 0

for val in labelValidSet.keys():

if val in featValueDict:

equalNum += equalNums(labelValidSet[val], featValueDict[val])

else:

equalNum += len(labelValidSet[val])/5 # 一共五类,随便选一类

ratioAfterDivision = equalNum / labelValid.size

# 如果划分后的测试数据准确率低于划分前的,则划分无效,进行剪枝,即使节点等于预划分标签

# 注意这里取的是小于,如果有需要 也可以取 小于等于

if ratioAfterDivision < ratioPreDivision:

newTree = featPreLabel

return newTree13.或者直接预剪枝构建决策树

equalNums = lambda x,y: 0 if x is None else x[x==y].size

def createTreePrePruning(dataTrain, labelTrain, dataTest, labelTest, names, method='id3'):

trainData = np.asarray(dataTrain)

labelTrain = np.asarray(labelTrain)

testData = np.asarray(dataTest)

labelTest = np.asarray(labelTest)

names = np.asarray(names)

# 如果结果为单一结果

if len(set(labelTrain)) == 1:

return labelTrain[0]

# 如果没有待分类特征

elif trainData.size == 0:

return voteLabel(labelTrain)

# 其他情况则选取特征

bestFeat, bestEnt = bestFeature(dataTrain, labelTrain, method=method)

# 取特征名称

bestFeatName = names[bestFeat]

# 从特征名称列表删除已取得特征名称

names = np.delete(names, [bestFeat])

# 根据最优特征进行分割

dataTrainSet, labelTrainSet = splitFeatureData(dataTrain, labelTrain, bestFeat)

# 预剪枝评估

# 划分前的分类标签

labelTrainLabelPre = voteLabel(labelTrain)

labelTrainRatioPre = equalNums(labelTrain, labelTrainLabelPre) / labelTrain.size

# 划分后的精度计算

if dataTest is not None:

dataTestSet, labelTestSet = splitFeatureData(dataTest, labelTest, bestFeat)

# 划分前的测试标签正确比例

labelTestRatioPre = equalNums(labelTest, labelTrainLabelPre) / labelTest.size

# 划分后 每个特征值的分类标签正确的数量

labelTrainEqNumPost = 0

for val in labelTrainSet.keys():

labelTrainEqNumPost += equalNums(labelTestSet.get(val), voteLabel(labelTrainSet.get(val))) + 0.0

# 划分后 正确的比例

labelTestRatioPost = labelTrainEqNumPost / labelTest.size

# 如果没有评估数据 但划分前的精度等于最小值0.5 则继续划分

if dataTest is None and labelTrainRatioPre == 0.5:

decisionTree = {bestFeatName: {}}

for featValue in dataTrainSet.keys():

decisionTree[bestFeatName][featValue] = createTreePrePruning(dataTrainSet.get(featValue),

labelTrainSet.get(featValue)

, None, None, names, method)

elif dataTest is None:

return labelTrainLabelPre

# 如果划分后的精度相比划分前的精度下降, 则直接作为叶子节点返回

elif labelTestRatioPost < labelTestRatioPre:

return labelTrainLabelPre

else:

# 根据选取的特征名称创建树节点

decisionTree = {bestFeatName: {}}

# 对最优特征的每个特征值所分的数据子集进行计算

for featValue in dataTrainSet.keys():

decisionTree[bestFeatName][featValue] = createTreePrePruning(dataTrainSet.get(featValue),

labelTrainSet.get(featValue)

, dataTestSet.get(featValue),

labelTestSet.get(featValue)

, names, method)

return decisionTree14.决策树用于分类

def classify(inputTree,featLabels,testVec):

#获取决策树节点

firstStr=next(iter(inputTree))

#下一个字典

secondDict=inputTree[firstStr]

featIndex=featLabels.index(firstStr)

classLabel = 0

for key in secondDict.keys(): # key指的是当前特征可以取到的值

if testVec[featIndex]==key:

if type(secondDict[key]).__name__=='dict':

classLabel=classify(secondDict[key],featLabels,testVec)

else:

classLabel=secondDict[key]

return classLabel15.决策树的可视化

def getNumLeafs(myTree):

numLeafs=0

firstStr=next(iter(myTree))

secondDict=myTree[firstStr]

for key in secondDict.keys():

if type(secondDict[key]).__name__=='dict':

numLeafs+=getNumLeafs(secondDict[key])

else: numLeafs+=1

return numLeafs

def getTreeDepth(myTree):

maxDepth = 0 #初始化决策树深度

firstStr = next(iter(myTree)) #python3中myTree.keys()返回的是dict_keys,不在是list,所以不能使用myTree.keys()[0]的方法获取结点属性,可以使用list(myTree.keys())[0]

secondDict = myTree[firstStr] #获取下一个字典

for key in secondDict.keys():

if type(secondDict[key]).__name__=='dict': #测试该结点是否为字典,如果不是字典,代表此结点为叶子结点

thisDepth = 1 + getTreeDepth(secondDict[key])

else: thisDepth = 1

if thisDepth > maxDepth: maxDepth = thisDepth #更新层数

return maxDepth

def plotNode(nodeTxt, centerPt, parentPt, nodeType):

arrow_args = dict(arrowstyle="<-") #定义箭头格式

font = FontProperties(fname=r"c:\windows\fonts\simsun.ttc", size=14) #设置中文字体

createPlot.ax1.annotate(nodeTxt, xy=parentPt, xycoords='axes fraction', #绘制结点

xytext=centerPt, textcoords='axes fraction',

va="center", ha="center", bbox=nodeType, arrowprops=arrow_args, FontProperties=font)

def plotMidText(cntrPt, parentPt, txtString):

xMid = (parentPt[0]-cntrPt[0])/2.0 + cntrPt[0] #计算标注位置

yMid = (parentPt[1]-cntrPt[1])/2.0 + cntrPt[1]

createPlot.ax1.text(xMid, yMid, txtString, va="center", ha="center", rotation=30)

def plotTree(myTree, parentPt, nodeTxt):

decisionNode = dict(boxstyle="sawtooth", fc="0.8") #设置结点格式

leafNode = dict(boxstyle="round4", fc="0.8") #设置叶结点格式

numLeafs = getNumLeafs(myTree) #获取决策树叶结点数目,决定了树的宽度

depth = getTreeDepth(myTree) #获取决策树层数

firstStr = next(iter(myTree)) #下个字典

cntrPt = (plotTree.xOff + (1.0 + float(numLeafs))/2.0/plotTree.totalW, plotTree.yOff) #中心位置

plotMidText(cntrPt, parentPt, nodeTxt) #标注有向边属性值

plotNode(firstStr, cntrPt, parentPt, decisionNode) #绘制结点

secondDict = myTree[firstStr] #下一个字典,也就是继续绘制子结点

plotTree.yOff = plotTree.yOff - 1.0/plotTree.totalD #y偏移

for key in secondDict.keys():

if type(secondDict[key]).__name__=='dict': #测试该结点是否为字典,如果不是字典,代表此结点为叶子结点

plotTree(secondDict[key],cntrPt,str(key)) #不是叶结点,递归调用继续绘制

else: #如果是叶结点,绘制叶结点,并标注有向边属性值

plotTree.xOff = plotTree.xOff + 1.0/plotTree.totalW

plotNode(secondDict[key], (plotTree.xOff, plotTree.yOff), cntrPt, leafNode)

plotMidText((plotTree.xOff, plotTree.yOff), cntrPt, str(key))

plotTree.yOff = plotTree.yOff + 1.0/plotTree.totalD

def createPlot(inTree):

fig = plt.figure(1, facecolor='white')#创建fig

fig.clf()#清空fig

axprops = dict(xticks=[], yticks=[])

createPlot.ax1 = plt.subplot(111, frameon=False, **axprops)#去掉x、y轴

plotTree.totalW = float(getNumLeafs(inTree))#获取决策树叶结点数目

plotTree.totalD = float(getTreeDepth(inTree))#获取决策树层数

plotTree.xOff = -0.5/plotTree.totalW; plotTree.yOff = 1.0#x偏移

plotTree(inTree, (0.5,1.0), '')#绘制决策树

plt.show()#显示绘制结果16.主函数,采用预剪枝、交叉验证

if __name__=='__main__':

# 得到所有特征名

feats = get_feats("colunm_headers.txt")

# 得到所有数据,情况有变,文件名不应该是train,还没改

all_data = get_data("train.txt")

best_acc = 0

for i in range(0,7): # 共八千行,选择一千行为测试集

all_data_copy = all_data

test_data = all_data_copy[i * 1000:(i + 1) * 1000] # 测试集(含特征、标签)

test_labels = test_data[:, -1].tolist() # 取标签

test_feat = np.delete(test_data, -1, axis=1).tolist() # 取特征(删掉最后一列)

all_data_copy = np.delete(all_data_copy,slice(i * 1000,(i + 1) * 1000),axis = 0) # 删除该行

for j in range(0,6): # 选择一千行为验证集

valid_data = all_data_copy[j * 1000:(j + 1) * 1000]

valid_labels = valid_data[:, -1].tolist()

valid_feat = np.delete(valid_data, -1, axis=1).tolist()

# 剩下的6000行为训练集

train_data = np.delete(all_data_copy, slice(j * 1000,(j + 1) * 1000),axis=0)

train_labels = train_data[:,-1].tolist()

train_feat = np.delete(train_data,-1,axis=1).tolist()

# 后剪枝

# TreeBeforePostPruningLabeled = createTreeWithLabel(train_feat,train_labels,feats)

# TreeBeforePostPruning = convertTree(TreeBeforePostPruningLabeled)

# Tree = treePostPruning(TreeBeforePostPruningLabeled,valid_data,valid_labels,feats)

# createPlot(TreeBeforePostPruning)

Tree = createTreePrePruning(train_feat,train_labels,valid_feat,valid_labels,feats)

# createPlot(Tree)

# 剪枝以后,若全剪光,则只是一个numpy.int32类型的叶子结点;否则为字典

if not isinstance(Tree,dict):

continue

err = 0

for k in range(1000):

result=classify(Tree,feats,test_feat[k])

if result == test_labels[k]:

err += 1

acc = err/1000

if acc > best_acc:

best_acc = acc

best_i = i

best_j = j

print("正确率为:",acc)

print("最优的正确率为:",best_acc)

print("此时,i为:",best_i,"j为:",best_j)预剪枝得到的结果

正确率为: 0.602

正确率为: 0.588

正确率为: 0.577

......

......

正确率为: 0.576

最优的正确率为: 0.64

此时,i为: 5 j为: 2

即选取5000-6000为测试集、2000-3000为验证集,剩下的为训练集的时候,正确率最高,为0.64如果采用后剪枝,结果为

正确率为: 0.445

正确率为: 0.454

正确率为: 0.469

......

......

正确率为: 0.441

最优的正确率为: 0.539

此时,i为: 5 j为: 5

即选取5000-6000为测试集、6000-7000为验证集,剩下的为训练集的时候,正确率最高,为0.539相关拓展

使用sklearn库分析决策树性能:

if __name__ == "__main__":

from sklearn import datasets

from sklearn.tree import DecisionTreeClassifier

from sklearn.model_selection import train_test_split

from sklearn.metrics import r2_score

DTC = DecisionTreeClassifier()

# 得到所有特征名

feats = get_feats("colunm_headers.txt")

# 得到所有数据,情况有变,文件名不应该是train,还没改

all_data = get_data("train.txt")

targets = all_data[:, -1]

features = np.delete(all_data, -1, axis=1)

train_features, test_features, train_targets, test_targets = train_test_split(features, targets, train_size=0.7,

shuffle=0)

DTC.fit(train_features, train_targets)

predict_targets = DTC.predict(test_features)

right = 0

all_len = test_targets.shape[0]

for i in range(all_len):

if predict_targets[i]==test_targets[i]:

right += 1

print("正确率为:", right / all_len)

>>> 正确率为: 0.48375运用决策树对鸢尾花进行分类

from sklearn import datasets

from sklearn.tree import DecisionTreeClassifier

from sklearn.model_selection import train_test_split

from sklearn.metrics import r2_score

iris = datasets.load_iris()

DTC = DecisionTreeClassifier()

targets = iris.target

features = iris.data

train_features,test_features,train_targets,test_targets = train_test_split(features,targets,train_size=0.7,shuffle=42)

DTC.fit(train_features,train_targets)

predict_targets = DTC.predict(test_features)

print(r2_score(predict_targets,test_targets))

>>>0.96875

R^2相关指数平均在0.95左右,分类效果极佳。参考决策树python源码实现(含预剪枝和后剪枝)

决策树的预剪枝与后剪枝

《统计学习方法第二版》