Softmax Multi-Class Classifier

- MNIST数据集

- Fashion-MNIST数据集

- Flatten

- One-Hot Encoding

- Softmax介绍

- 应用Softmax实现多分类

- 交叉熵(Cross Entropy)

- Softmax与逻辑回归的联系

- GPU加速运算原理

- 使用Keras框架实现Softmax多分类

- 代码实现

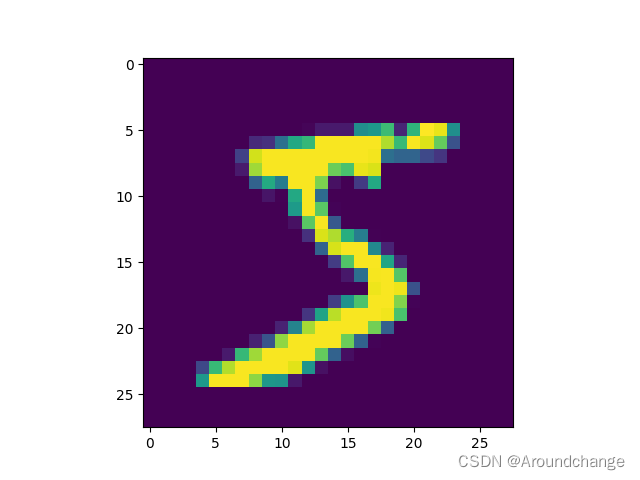

- 相关测试图片

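MNIST Dataset

- The MNIST dataset is a collection of handwritten digit images. Each image is grayscale, with pixel values between 0 and 255, and measures 28×28, that is, 28 rows by 28 columns. As with any dataset, we split it into a training set and a test set: the MNIST training set contains 60,000 images and the test set contains 10,000. Because each image is 28×28, X_train has shape 60000×28×28 and X_test has shape 10000×28×28. Each image has a corresponding label, namely the digit it shows.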

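Fashion-MNIST Dataset

- The Fashion-MNIST dataset has 10 classes, so there are 10 possible labels. As in MNIST, every image is grayscale, with pixel values between 0 and 255, and measures 28×28. The difference is that MNIST images show digits, whereas Fashion-MNIST images show clothing items. Its training set likewise contains 60,000 images and its test set 10,000, so X_train has shape 60000×28×28 and X_test has shape 10000×28×28.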

Flatten

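- In both MNIST and Fashion-MNIST, every image is 28×28, and a grayscale image can be represented as a two-dimensional matrix. Flattening means straightening the image out, turning the 2D matrix into a 1D vector: the first row of the matrix goes at the start of the vector, the second row follows immediately after it, and so on through the 28th row. The result is a vector of 28×28 = 784 values.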

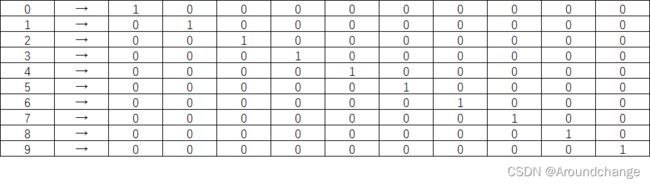
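
As a minimal sketch of the idea, the snippet below flattens a made-up 28×28 array with NumPy. The array contents (the integers 0 to 783) are purely illustrative and are not real image data; they are chosen so the row-by-row ordering is easy to check.

```python
import numpy as np

# A hypothetical 28x28 "image" whose pixel values are simply 0..783,
# so the row-by-row ordering is easy to see after flattening.
image = np.arange(28 * 28).reshape(28, 28)

# reshape(-1) lays the rows end to end (row-major order).
flat = image.reshape(-1)

print(image.shape)  # (28, 28)
print(flat.shape)   # (784,)

# The second row of the image starts right after the first row's 28 values.
print(np.array_equal(flat[28:56], image[1]))  # True
```

The same `reshape` call applied to a whole dataset, for example `X.reshape((n, 784))`, flattens every image at once.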

One-Hot Encoding

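- MNIST labels are the digits 0-9. If we fed them to the model directly, the model would treat them as quantities with numerical meaning: it would consider labels with a small numerical difference to be close together and labels with a large difference to be far apart. But a label only describes the features of a sample; there is no notion of distance between labels. One-Hot Encoding removes this effect. Because MNIST has 10 labels, we initialize a 10-dimensional vector (think of it as an array), set the position whose index equals the label to 1, and set every other position to 0.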

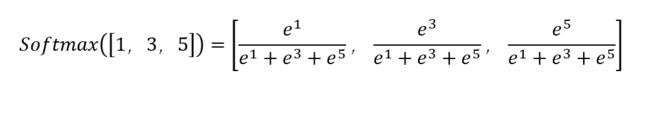
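
The encoding can be sketched in a few lines of NumPy. The helper name `one_hot` and the sample labels are hypothetical, chosen only for illustration.

```python
import numpy as np

def one_hot(label, num_classes=10):
    """Return a vector of zeros with a 1 at index `label`."""
    vector = np.zeros(num_classes)
    vector[label] = 1
    return vector

print(one_hot(3))  # [0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

# Batch version: row k of the identity matrix is the encoding of label k.
labels = np.array([5, 0, 4])
encoded = np.eye(10)[labels]
print(encoded.shape)  # (3, 10)
```

Taking `np.argmax` of an encoded row recovers the original label, which is how predictions are decoded later on.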

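Introduction to Softmax

- Taking the maximum of three numbers can be written as Max([1,3,5]) = 5, whereas Softmax([1,3,5]) ≈ [0.016, 0.117, 0.867]. To compute it, each entry is exponentiated and then divided by the sum of all the exponentials.

- Because 0.016 + 0.117 + 0.867 = 1, we can interpret the outputs as probabilities. Note also that, compared with simply dividing each value by the total (1/9 ≈ 0.111 and 5/9 ≈ 0.556), Softmax makes the smallest share even smaller and the largest share even larger.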

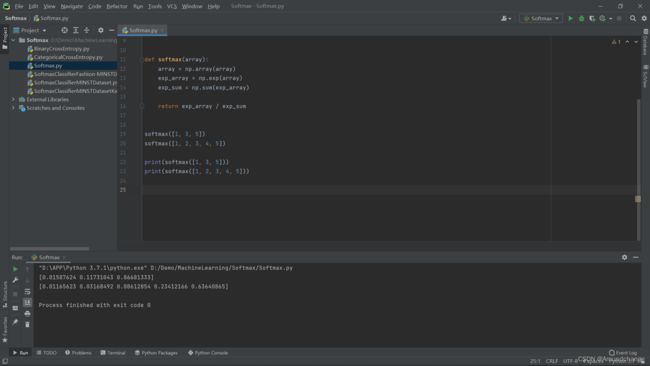
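
Written out for the example [1, 3, 5], the calculation looks like this. The symbol $z$ for the input vector is notation assumed here, and the values are rounded to four decimals.

$$
\mathrm{Softmax}(z)_i = \frac{e^{z_i}}{\sum_{j} e^{z_j}}
$$

$$
e^{1} \approx 2.7183,\qquad e^{3} \approx 20.0855,\qquad e^{5} \approx 148.4132,\qquad e^{1}+e^{3}+e^{5} \approx 171.2170
$$

$$
\mathrm{Softmax}([1,3,5]) \approx \left[\frac{2.7183}{171.2170},\ \frac{20.0855}{171.2170},\ \frac{148.4132}{171.2170}\right] \approx [0.0159,\ 0.1173,\ 0.8668]
$$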

- Softmax function implementation:

import numpy as np

def softmax(array):
    # Convert the input to an ndarray so np.exp is applied element-wise
    array = np.array(array)
    # Exponentiate every entry
    exp_array = np.exp(array)
    # Divide by the sum so the outputs add up to 1
    exp_sum = np.sum(exp_array)
    return exp_array / exp_sum

print(softmax([1, 3, 5]))
print(softmax([1, 2, 3, 4, 5]))

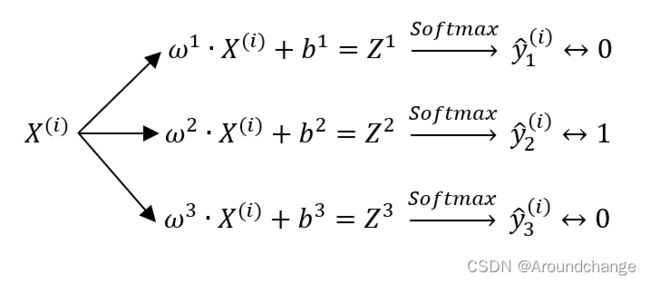
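
One practical caveat: `np.exp` overflows for large inputs, so a direct implementation can return `nan`. A common variant, sketched below, subtracts the maximum before exponentiating. This does not change the result, because Softmax is unaffected by adding the same constant to every input. The function name `stable_softmax` is hypothetical and this variant is not part of the original code.

```python
import numpy as np

def stable_softmax(values):
    """Softmax that subtracts the maximum first to avoid overflow in np.exp."""
    values = np.asarray(values, dtype=float)
    shifted = values - np.max(values)   # the largest entry becomes 0
    exp_values = np.exp(shifted)        # every exponent is now <= 0
    return exp_values / np.sum(exp_values)

print(stable_softmax([1, 3, 5]))
# Same spacing between inputs, so the same probabilities, with no overflow.
print(stable_softmax([1000, 1002, 1004]))
```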

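Multi-Class Classification with Softmax

- We start with a three-class classification task. Take one sample from the training set; its features and its one-hot encoded label are:

- Taking label 1, whose one-hot encoding is [0,1,0], as an example, the corresponding training step is:

- Taking sample 3 as an example, the corresponding prediction is:

- In the expression above, suppose the three predicted values are 0.3, 0.2, and 0.5. We then predict the third class: 0.5 is the largest value, which corresponds to [0,0,1], that is, label 2.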

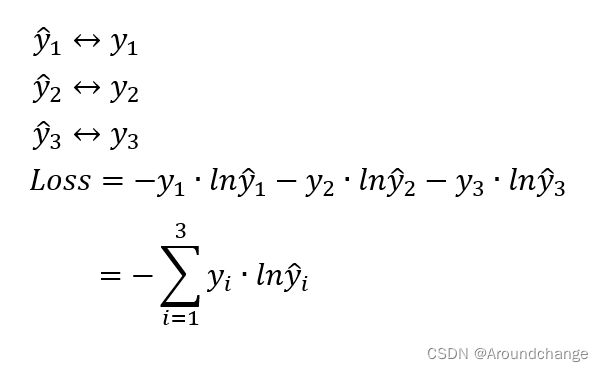

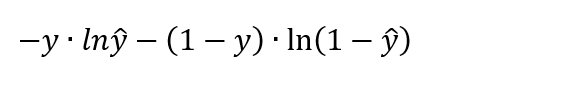
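
The prediction step can be sketched as follows. The feature vector `x`, the weights `W`, and the bias `b` are random, hypothetical values (4 features, 3 classes) used only to show the shapes involved; the last lines reproduce the 0.3, 0.2, 0.5 example from the text.

```python
import numpy as np

def softmax(scores):
    exp_scores = np.exp(scores - np.max(scores))
    return exp_scores / np.sum(exp_scores)

# Hypothetical sample with 4 features and hypothetical parameters for 3 classes.
rng = np.random.default_rng(0)
x = rng.normal(size=4)        # feature vector of one sample
W = rng.normal(size=(4, 3))   # one column of weights per class
b = np.zeros(3)               # one bias per class

probabilities = softmax(x @ W + b)        # three values that sum to 1
predicted_label = int(np.argmax(probabilities))
print(probabilities, predicted_label)

# The example from the text: predictions 0.3, 0.2, 0.5 give label 2.
example = np.array([0.3, 0.2, 0.5])
label = int(np.argmax(example))
print(label, np.eye(3, dtype=int)[label])  # 2 [0 0 1]
```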

Cross Entropy

The Relationship Between Softmax and Logistic Regression

- For logistic regression, see my earlier blog post, "Logistic Regression".

- Categorical Cross Entropy

- Binary Cross Entropy

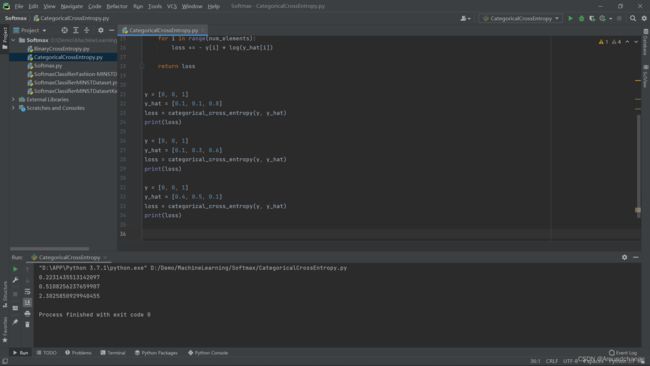
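
For reference, the two losses are usually written as below. The notation is assumed here: $y$ is the true label (one-hot in the categorical case), $\hat{y}$ is the predicted probability, and $K$ is the number of classes.

$$
L_{\mathrm{CCE}} = -\sum_{i=1}^{K} y_i \log \hat{y}_i
$$

$$
L_{\mathrm{BCE}} = -y \log \hat{y} - (1 - y)\log(1 - \hat{y})
$$

With $K = 2$, writing the label as $(y,\ 1-y)$ and the prediction as $(\hat{y},\ 1-\hat{y})$ turns the first formula into the second, which is why logistic regression can be seen as the two-class case of Softmax classification.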

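- Categorical Cross Entropy implementation:

import numpy as np
from numpy import log

def categorical_cross_entropy(y, y_hat):
    # y is the one-hot label, y_hat the predicted probabilities
    num_elements = len(y)
    loss = 0
    for i in range(num_elements):
        # Only the position where y[i] == 1 contributes to the sum
        loss += -y[i] * log(y_hat[i])
    return loss

# The more probability the model assigns to the true class, the smaller the loss
y = [0, 0, 1]
y_hat = [0.1, 0.1, 0.8]
loss = categorical_cross_entropy(y, y_hat)
print(loss)  # about 0.223

y = [0, 0, 1]
y_hat = [0.1, 0.3, 0.6]
loss = categorical_cross_entropy(y, y_hat)
print(loss)  # about 0.511

y = [0, 0, 1]
y_hat = [0.4, 0.5, 0.1]
loss = categorical_cross_entropy(y, y_hat)
print(loss)  # about 2.303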

- Binary Cross Entropy implementation:

import numpy as np
from numpy import log

def binary_cross_entropy(y, y_hat):
    # y is the true label (0 or 1), y_hat the predicted probability of class 1
    loss = -y * log(y_hat) - (1 - y) * log(1 - y_hat)
    return loss

print(binary_cross_entropy(0, 0.01))  # about 0.010
print(binary_cross_entropy(1, 0.99))  # about 0.010
print(binary_cross_entropy(0, 0.3))   # about 0.357
print(binary_cross_entropy(0, 0.8))   # about 1.609

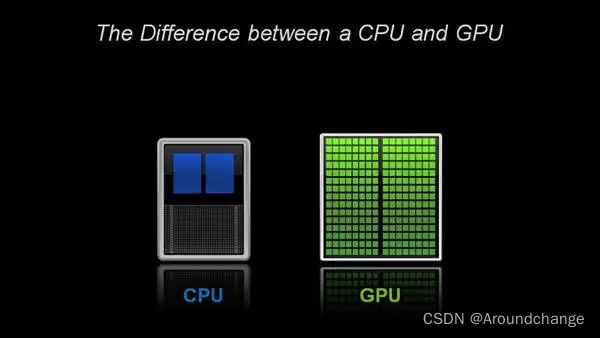
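
The link between Softmax and logistic regression can also be checked numerically. The sketch below assumes a single hypothetical logit `z = 1.5` and compares a two-class Softmax over `[z, 0]` with the sigmoid of `z`, and then the two cross-entropy losses. It illustrates the equivalence and is not code from the original post.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(scores):
    exp_scores = np.exp(scores - np.max(scores))
    return exp_scores / np.sum(exp_scores)

z = 1.5  # hypothetical logit for the positive class

# Two-class Softmax over the logits [z, 0] matches sigmoid(z).
two_class = softmax(np.array([z, 0.0]))
print(np.isclose(two_class[0], sigmoid(z)))  # True

# With label y = 1, the binary and categorical cross entropies agree.
y, y_hat = 1, sigmoid(z)
bce = -y * np.log(y_hat) - (1 - y) * np.log(1 - y_hat)
cce = -np.sum(np.array([y, 1 - y]) * np.log(two_class))
print(np.isclose(bce, cce))  # True
```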

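How GPUs Accelerate Computation

- First, GPUs and CPUs differ enormously in the number of cores.

- In matrix multiplication, each row-by-column product is independent of all the others, so the products can be distributed across the GPU's cores and computed at the same time, that is, in parallel. A single GPU core may well be slower than a single CPU core, but a CPU has only a limited number of cores while a GPU has far more, so the GPU has the advantage on large-scale matrix operations.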
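
The independence of the row-by-column products can be illustrated on the CPU with NumPy. The matrices `A` and `B` are small random placeholders. The loop below runs sequentially, but no iteration reads a result written by another, which is the property that lets a GPU hand each entry to a different core.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.normal(size=(3, 4))
B = rng.normal(size=(4, 2))

C = np.empty((3, 2))
for i in range(3):
    for j in range(2):
        # Entry (i, j) uses only row i of A and column j of B,
        # so all six entries could be computed at the same time.
        C[i, j] = np.dot(A[i, :], B[:, j])

print(np.allclose(C, A @ B))  # True
```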

Softmax Multi-Class Classification with Keras

Code Implementation

- SoftmaxClassifierMINSTDataset

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from tensorflow.keras.datasets import mnist
from tensorflow.keras.utils import to_categorical  # One-Hot Encoding

(X_train, y_train), (X_test, y_test) = mnist.load_data()

# print(X_train.shape)
# print(X_test.shape)
# print(y_train.shape)
# print(y_test.shape)
# plt.imshow(X_train[0])
# plt.show()
# print(y_train[0])

n_train = X_train.shape[0]  # 60000
n_test = X_test.shape[0]  # 10000
flatten_size = 28 * 28

# Flatten: (60000, 28, 28) --> (60000, 784)
X_train = X_train.reshape((n_train, flatten_size))
# Scale pixel values: 0 ~ 255 --> 0 ~ 1
X_train = X_train / 255
# 0 ~ 9 --> One-Hot Encoding
# 3 --> [0, 0, 0, 1, 0, 0, 0, 0, 0, 0]
y_train = to_categorical(y_train)

X_test = X_test.reshape((n_test, flatten_size))
X_test = X_test / 255
y_test = to_categorical(y_test)

# print(X_train.shape)
# print(y_train.shape)
# print(y_train[0])

def softmax(x):
    # Row-wise softmax: each row of x holds the logits of one sample
    exp_x = np.exp(x)
    sum_e = np.sum(exp_x, axis=1)
    for i in range(x.shape[0]):
        # Normalize row i by the sum of row i
        exp_x[i, :] = exp_x[i, :] / sum_e[i]
    return exp_x

W = np.zeros((784, 10))
b = np.zeros((1, 10))
N = 100  # number of gradient-descent iterations
lr = 0.00001  # learning rate

for i in range(N):
    # Forward pass over the whole training set
    logits = np.dot(X_train, W) + b
    y_hat = softmax(logits)
    # Gradients of the summed cross-entropy loss
    det_w = np.dot(X_train.T, (y_hat - y_train))
    det_b = np.sum((y_hat - y_train), axis=0)
    # Gradient-descent update
    W = W - lr * det_w
    b = b - lr * det_b

# Accuracy on the training set
logits_train = np.dot(X_train, W) + b
y_train_hat = softmax(logits_train)
y_hat = np.argmax(y_train_hat, axis=1)
y = np.argmax(y_train, axis=1)
count = 0
for i in range(len(y_hat)):
    if y[i] == y_hat[i]:
        count += 1
print('Accuracy On Training Set Is {}%'.format(round(count / n_train, 2) * 100))

# Accuracy on the test set
logits_test = np.dot(X_test, W) + b
y_test_hat = softmax(logits_test)
y_hat = np.argmax(y_test_hat, axis=1)
y = np.argmax(y_test, axis=1)
count = 0
for i in range(len(y_hat)):
    if y[i] == y_hat[i]:
        count += 1
print('Accuracy On Testing Set Is {}%'.format(round(count / n_test, 2) * 100))

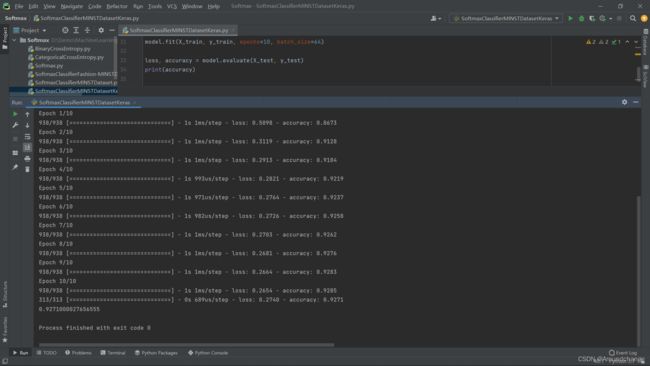
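
The gradient expressions in the training loop of the NumPy implementation follow from differentiating the summed cross-entropy loss. In the sketch below the notation is assumed: $X$ is the $N \times 784$ matrix of flattened images, $Y$ the one-hot labels, $\hat{Y}$ the row-wise Softmax output, and $\eta$ the learning rate `lr`.

$$
Z = XW + b,\qquad \hat{Y} = \mathrm{softmax}(Z),\qquad L = -\sum_{n=1}^{N}\sum_{k=1}^{10} Y_{nk}\log \hat{Y}_{nk}
$$

$$
\frac{\partial L}{\partial Z} = \hat{Y} - Y,\qquad \frac{\partial L}{\partial W} = X^{\top}(\hat{Y} - Y),\qquad \frac{\partial L}{\partial b} = \sum_{n=1}^{N}\left(\hat{Y}_{n} - Y_{n}\right)
$$

$$
W \leftarrow W - \eta\,\frac{\partial L}{\partial W},\qquad b \leftarrow b - \eta\,\frac{\partial L}{\partial b}
$$

Here $\partial L/\partial W$ corresponds to `det_w` and $\partial L/\partial b$ to `det_b`. Because the loss is a sum over all 60,000 samples rather than a mean, the learning rate has to be small.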

import numpy as np
import pandas as pd
from tensorflow.keras.datasets import mnist
from tensorflow.keras.utils import to_categorical
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Flatten
from tensorflow.keras.optimizers import RMSprop

(X_train, y_train), (X_test, y_test) = mnist.load_data()

# Scale pixel values to 0 ~ 1 and one-hot encode the labels
X_train = X_train / 255
X_test = X_test / 255
y_train = to_categorical(y_train)
y_test = to_categorical(y_test)

# Flatten each 28x28 image, then a single Dense layer with Softmax over 10 classes
model = Sequential()
model.add(Flatten(input_shape=(28, 28)))
model.add(Dense(units=10, activation='softmax'))
# model.summary()

model.compile(loss='categorical_crossentropy',
              metrics=['accuracy'],
              optimizer=RMSprop())

model.fit(X_train, y_train, epochs=10, batch_size=64)

loss, accuracy = model.evaluate(X_test, y_test)
print(accuracy)

import numpy as np
import pandas as pd
from tensorflow.keras.datasets import fashion_mnist
import matplotlib.pyplot as plt
from tensorflow.keras.utils import to_categorical
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Flatten
from tensorflow.keras.optimizers import RMSprop

(X_train, y_train), (X_test, y_test) = fashion_mnist.load_data()

# Scale pixel values to 0 ~ 1 and one-hot encode the labels
X_train = X_train / 255
X_test = X_test / 255
y_train = to_categorical(y_train)
y_test = to_categorical(y_test)

# plt.imshow(X_train[0])
# plt.show()
# plt.imshow(X_train[100])
# plt.show()
# print(X_train.shape)
# print(X_test.shape)

# Same model as for MNIST: Flatten followed by a 10-way Softmax layer
model = Sequential()
model.add(Flatten(input_shape=(28, 28)))
model.add(Dense(units=10, activation='softmax'))
# model.summary()

model.compile(loss='categorical_crossentropy',
              metrics=['accuracy'],
              optimizer=RMSprop())

model.fit(X_train, y_train, epochs=10, batch_size=64)

loss, accuracy = model.evaluate(X_test, y_test)
print(accuracy)

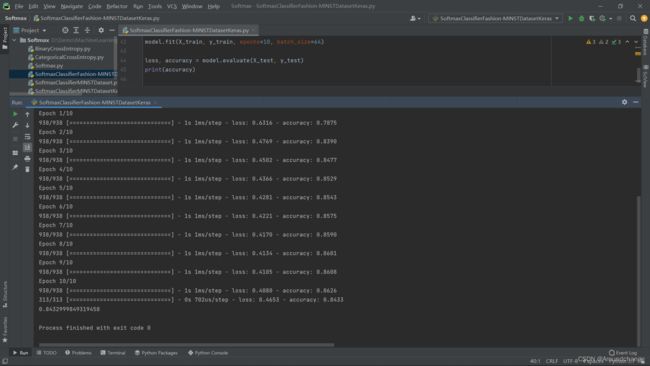

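- Results:

- The results above show that, after training, accuracy only reaches a little over 80%. In other words, a plain Softmax classifier struggles on the Fashion-MNIST dataset, and deep learning methods can be used to improve on it. Softmax is typically used as the last layer of a deep network to perform classification. Placing DNN, CNN, or RNN layers in front of it to extract features lets the Softmax layer classify much more accurately.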

Related Test Images

The complete code has been uploaded to GitHub. If you download it, a follow and a star would be much appreciated. Thank you!

Link: SoftmaxMulti-ClassClassifier