Splitting and Using the COCO and VOC Datasets

I. COCO 2017 directory structure

The full COCO dataset is very large, so here it is cut down to a quarter of its original size (see the reference link):

```python
# coding: utf8
__first_version_author__ = 'tylin'
__second_version_author__ = 'wfnian'
# Interface for accessing the Microsoft COCO dataset.
# Microsoft COCO Toolbox. version 2.0
# Data, paper, and tutorials available at: http://mscoco.org/
# Code written by Piotr Dollar and Tsung-Yi Lin, 2014.
# Licensed under the Simplified BSD License [see bsd.txt]
import json
import os
import shutil
import time
from collections import defaultdict
from pathlib import Path


class COCO:
    def __init__(self, annotation_file=None, origin_img_dir=""):
        """
        Constructor of Microsoft COCO helper class for reading and visualizing annotations.
        :param annotation_file (str): location of annotation file
        :param origin_img_dir (str): location of the folder that hosts the original images
        """
        self.origin_dir = origin_img_dir
        # imgToAnns: one image -> many annotations (masks); catToImgs: one category -> many images
        self.dataset, self.anns, self.cats, self.imgs = dict(), dict(), dict(), dict()
        self.imgToAnns, self.catToImgs = defaultdict(list), defaultdict(list)
        if annotation_file is not None:
            print('loading annotations into memory...')
            tic = time.time()
            with open(annotation_file, 'r') as f:
                dataset = json.load(f)
            assert isinstance(dataset, dict), 'annotation file format {} not supported'.format(type(dataset))
            print('Done (t={:0.2f}s)'.format(time.time() - tic))
            self.dataset = dataset
            self.createIndex()

    def createIndex(self):
        # build the image -> annotations and category -> images indexes
        print('creating index...')
        anns, cats, imgs = {}, {}, {}
        imgToAnns, catToImgs = defaultdict(list), defaultdict(list)
        if 'annotations' in self.dataset:
            for ann in self.dataset['annotations']:
                imgToAnns[ann['image_id']].append(ann)
                anns[ann['id']] = ann
        if 'images' in self.dataset:
            for img in self.dataset['images']:
                imgs[img['id']] = img
        if 'categories' in self.dataset:
            for cat in self.dataset['categories']:
                cats[cat['id']] = cat
        if 'annotations' in self.dataset and 'categories' in self.dataset:
            for ann in self.dataset['annotations']:
                catToImgs[ann['category_id']].append(ann['image_id'])
        print('index created!')
        # create class members
        self.anns = anns
        self.imgToAnns = imgToAnns
        self.catToImgs = catToImgs
        self.imgs = imgs
        self.cats = cats

    def build(self, tarDir, tarFile='./new.json', N=1000):
        """Copy the first N images into tarDir and write their annotations to tarFile."""
        # reuse info/licenses from the source file instead of hard-coding the 2014 metadata
        load_json = {'images': [], 'annotations': [], 'categories': [], 'type': 'instances',
                     'info': self.dataset.get('info', {}), 'licenses': self.dataset.get('licenses', [])}
        Path(tarDir).mkdir(parents=True, exist_ok=True)
        total = min(N, len(self.imgs))
        for count, img in enumerate(self.imgs.values(), start=1):
            if count > total:
                break
            tic = time.time()
            load_json['images'].append(img)
            load_json['annotations'].extend(self.imgToAnns[img['id']])
            fname = os.path.join(tarDir, img['file_name'])
            if not os.path.exists(fname):
                shutil.copy(os.path.join(self.origin_dir, img['file_name']), tarDir)
            print('copy {}/{} images (t={:0.1f}s)'.format(count, total, time.time() - tic))
        load_json['categories'].extend(self.cats.values())
        with open(tarFile, 'w') as f:
            json.dump(load_json, f, indent=4)


if __name__ == '__main__':
    # paths to the images and annotations of the full COCO dataset
    coco = COCO('../../datasets/coco/annotations/instances_train2017.json',
                origin_img_dir='../../datasets/coco/train2017')
    coco.build('./mini_train2017', './mini_instances_train2017.json', 29568)  # output image folder, output json, image count
    coco = COCO('../../datasets/coco/annotations/instances_val2017.json',
                origin_img_dir='../../datasets/coco/val2017')
    coco.build('./mini_val2017', './mini_instances_val2017.json', 1250)

# COCO 2017 has 118287 training, 5000 validation and 40670 test images.
# 118287 / 4 is about 29572 (the script uses 29568); 5000 / 4 = 1250
```
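Note that build() keeps the first N images in the order they appear in the annotation file rather than a random sample. Before training on the result, it can be worth checking that the mini JSON is self-consistent. The sketch below is a hypothetical helper (check_mini_coco is not part of any library): it assumes the output layout produced above, confirms every annotation points at a kept image, and lists image files missing from the output folder.

```python
import json
import os


def check_mini_coco(json_path, img_dir):
    """Return (num_images, num_annotations, missing_files) for a mini COCO split."""
    with open(json_path) as f:
        data = json.load(f)
    image_ids = {img['id'] for img in data['images']}
    # every annotation must refer to an image that was kept in the split
    orphans = [a['id'] for a in data['annotations'] if a['image_id'] not in image_ids]
    if orphans:
        raise ValueError('annotations without a kept image: %s' % orphans[:5])
    # images listed in the JSON but not copied into img_dir
    missing = [img['file_name'] for img in data['images']
               if not os.path.isfile(os.path.join(img_dir, img['file_name']))]
    return len(data['images']), len(data['annotations']), missing
```

For example, `check_mini_coco('./mini_instances_train2017.json', './mini_train2017')` should report 29568 images and an empty missing list if the copy finished.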

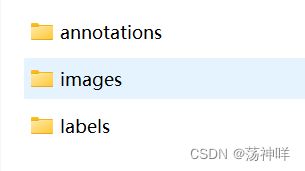

The structure after splitting is as follows:

In the coco.yaml file of the YOLOv5 code, change the following lines:

```yaml
path: E:\code\yolo\yolov5-2\coco\images\  # dataset root dir (alternatives: /coco, E:/dataset/coco/)
train: train2017  # train images (relative to 'path'); 118287 in the full set, 29568 after the split
val: val2017  # val images (relative to 'path'); 5000 in the full set, 1250 after the split
# test: test-dev2017.txt  # 20288 of 40670 images, submit to https://competitions.codalab.org/competitions/20794
```
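As far as I know, YOLOv5 training does not read the COCO JSON; it expects one YOLO-format .txt label per image and derives that label's path by replacing the last `images` directory in the image path with `labels` and the extension with `.txt`. The sketch below mirrors that rule (it is modeled on YOLOv5's `img2label_paths` helper; the function names here are hypothetical) so you can list images under coco/images/train2017 that have no label under coco/labels/train2017 before training.

```python
import os


def img2label_path(img_path):
    """Map .../images/xxx.jpg to .../labels/xxx.txt (assumed YOLOv5 convention)."""
    sa, sb = os.sep + 'images' + os.sep, os.sep + 'labels' + os.sep
    # replace only the last "images" directory, then swap the extension for .txt
    return sb.join(img_path.rsplit(sa, 1)).rsplit('.', 1)[0] + '.txt'


def find_unlabeled(img_dir):
    """Return the image file names in img_dir whose label file does not exist."""
    img_dir = os.path.abspath(img_dir)  # make sure the path contains a leading separator
    missing = []
    for name in sorted(os.listdir(img_dir)):
        if name.lower().endswith(('.jpg', '.jpeg', '.png')):
            if not os.path.isfile(img2label_path(os.path.join(img_dir, name))):
                missing.append(name)
    return missing
```

Images without a label file are treated as background by YOLOv5, so a long list here usually means the labels folder is missing or misplaced.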

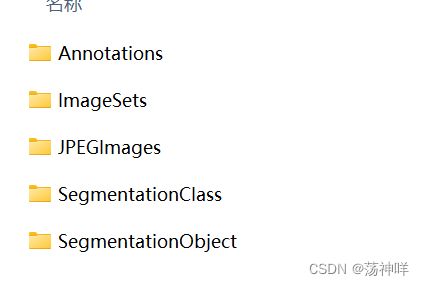

II. VOC 2012

1. The directory structure is as follows:

Object detection only needs the first three folders: Annotations, ImageSets and JPEGImages.

Inside ImageSets, every folder except Main can be deleted, and all the txt files in Main can be deleted too, because we usually create our own split.

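Before going further, rename the JPEGImages folder to images. (This step is essential: YOLOv5 finds each label file by replacing the images directory in the image path with labels, so without it the labels will not be found.)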
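The two preparation steps above can be scripted. The sketch below is a hypothetical helper (`prepare_voc` is not from the referenced posts) that assumes the standard VOC2012 layout, where ImageSets contains Action, Layout, Main and Segmentation. It deletes files, so run it on a copy of the dataset.

```python
import os
import shutil


def prepare_voc(root):
    """Keep only ImageSets/Main (emptied of .txt files) and rename JPEGImages to images."""
    image_sets = os.path.join(root, 'ImageSets')
    main = os.path.join(image_sets, 'Main')
    os.makedirs(main, exist_ok=True)
    # remove every other ImageSets subfolder (e.g. Action, Layout, Segmentation)
    for name in os.listdir(image_sets):
        path = os.path.join(image_sets, name)
        if name != 'Main' and os.path.isdir(path):
            shutil.rmtree(path)
    # drop the official split lists; split_train_val.py writes new ones
    for name in os.listdir(main):
        if name.endswith('.txt'):
            os.remove(os.path.join(main, name))
    # rename only once, so running the function twice is harmless
    src, dst = os.path.join(root, 'JPEGImages'), os.path.join(root, 'images')
    if os.path.isdir(src) and not os.path.exists(dst):
        os.rename(src, dst)
```

Usage, with the root from this post: `prepare_voc('E:/dataset/VOC2012ok')`.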

The directory structure is now: Annotations, ImageSets (containing an empty Main folder) and images.

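In the root directory (the folder that contains these three folders), create split_train_val.py, which splits VOC into training, validation and test sets at 8:1:1 (see the reference link):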

```python
# -*- coding: utf-8 -*-
"""
Author: smile
Date: 2022/09/11 10:00
Order: script A1
Summary: split the data into training, validation and test sets at 8:1:1
(training 8, validation 1, test 1).
"""
import argparse
import os
import random

parser = argparse.ArgumentParser()
# folder with the xml labels; adjust to your data (xml files usually live in Annotations)
parser.add_argument('--xml_path', default='E:/dataset/VOC2012ok/Annotations/', type=str, help='input xml label path')
# output folder for the split lists; use ImageSets/Main under your dataset
parser.add_argument('--txt_path', default='E:/dataset/VOC2012ok/ImageSets/Main/', type=str, help='output txt label path')
opt = parser.parse_args()

train_percent = 0.8  # share of the training set
val_percent = 0.1    # share of the validation set
# the test set gets whatever remains (about 0.1)

xmlfilepath = opt.xml_path
txtsavepath = opt.txt_path
# keep only .xml files so that stripping the extension below is safe
total_xml = [f for f in os.listdir(xmlfilepath) if f.endswith('.xml')]
os.makedirs(txtsavepath, exist_ok=True)

num = len(total_xml)
indices = list(range(num))  # do not shadow the built-in `list`
t_train = int(num * train_percent)
t_val = int(num * val_percent)

train = random.sample(indices, t_train)
train_set = set(train)
rest = [i for i in indices if i not in train_set]
val = random.sample(rest, t_val)
val_set = set(val)
test = [i for i in rest if i not in val_set]


def write_split(filename, idx):
    # one image id (xml file name without extension) per line
    with open(os.path.join(txtsavepath, filename), 'w') as f:
        for i in idx:
            f.write(os.path.splitext(total_xml[i])[0] + '\n')


write_split('train.txt', train)
write_split('val.txt', val)
write_split('test.txt', test)
```

When the script finishes, three txt files (train.txt, val.txt and test.txt) are generated in the ImageSets/Main folder.
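With N xml files the script produces int(0.8N) training ids, int(0.1N) validation ids and the remainder as test ids, so 10 files give 8/1/1. A quick sanity check, sketched below as a hypothetical helper (`check_split` is not from the referenced posts), confirms the three lists do not overlap and together cover every annotation file.

```python
import os


def check_split(xml_dir, main_dir, names=('train', 'val', 'test')):
    """Check the split lists are disjoint and cover every xml file; return their sizes."""
    all_ids = {os.path.splitext(f)[0] for f in os.listdir(xml_dir) if f.endswith('.xml')}
    seen, sizes = set(), {}
    for name in names:
        with open(os.path.join(main_dir, name + '.txt')) as f:
            ids = f.read().split()
        overlap = seen.intersection(ids)
        if overlap:
            raise ValueError('%s overlaps an earlier split: %s' % (name, sorted(overlap)[:5]))
        seen.update(ids)
        sizes[name] = len(ids)
    if seen != all_ids:
        raise ValueError('split lists do not match the xml files')
    return sizes
```

Usage: `check_split('E:/dataset/VOC2012ok/Annotations', 'E:/dataset/VOC2012ok/ImageSets/Main')`.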

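Next, create voc_label.py in the same root directory and run it from there (it uses the current working directory as the dataset root):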

```python
# -*- coding: utf-8 -*-
import os
import xml.etree.ElementTree as ET

sets = ['train', 'val', 'test']  # if your Main folder has no test.txt, remove 'test'
# classes = ["a", "b"]  # replace with your own classes; the VOC dataset has the 20 classes below
classes = ["aeroplane", 'bicycle', 'bird', 'boat', 'bottle', 'bus', 'car', 'cat', 'chair', 'cow', 'diningtable', 'dog',
           'horse', 'motorbike', 'person', 'pottedplant', 'sheep', 'sofa', 'train', 'tvmonitor']  # class names
abs_path = os.getcwd()  # run this script from the dataset root


def convert(size, box):
    """VOC box (xmin, xmax, ymin, ymax) in pixels -> YOLO (x_center, y_center, w, h) normalized to [0, 1]."""
    dw = 1. / size[0]
    dh = 1. / size[1]
    x = (box[0] + box[1]) / 2.0 - 1  # -1: VOC pixel coordinates are 1-based
    y = (box[2] + box[3]) / 2.0 - 1
    w = box[1] - box[0]
    h = box[3] - box[2]
    return x * dw, y * dh, w * dw, h * dh


def convert_annotation(image_id):
    tree = ET.parse(os.path.join(abs_path, 'Annotations', '%s.xml' % image_id))
    root = tree.getroot()
    size = root.find('size')
    w = int(size.find('width').text)
    h = int(size.find('height').text)
    with open(os.path.join(abs_path, 'labels', '%s.txt' % image_id), 'w') as out_file:
        for obj in root.iter('object'):
            # some files spell the tag 'Difficult' or omit it entirely
            diff_node = obj.find('difficult')
            if diff_node is None:
                diff_node = obj.find('Difficult')
            difficult = int(diff_node.text) if diff_node is not None else 0
            cls = obj.find('name').text
            if cls not in classes or difficult == 1:
                continue
            cls_id = classes.index(cls)
            xmlbox = obj.find('bndbox')
            b1, b2, b3, b4 = (float(xmlbox.find(k).text) for k in ('xmin', 'xmax', 'ymin', 'ymax'))
            # clip boxes that extend past the image border
            b2 = min(b2, w)
            b4 = min(b4, h)
            bb = convert((w, h), (b1, b2, b3, b4))
            out_file.write(str(cls_id) + " " + " ".join(str(a) for a in bb) + '\n')


os.makedirs(os.path.join(abs_path, 'labels'), exist_ok=True)
for image_set in sets:
    with open(os.path.join(abs_path, 'ImageSets', 'Main', '%s.txt' % image_set)) as f:
        image_ids = f.read().strip().split()
    with open(os.path.join(abs_path, '%s.txt' % image_set), 'w') as list_file:
        for image_id in image_ids:
            # write the full image path; a partial path may cause errors later
            list_file.write(os.path.join(abs_path, 'images', '%s.jpg' % image_id) + '\n')
            convert_annotation(image_id)
```
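To see what `convert()` produces, here is a worked example on a made-up 500x375 image with one box at xmin=48, xmax=195, ymin=240, ymax=371 (illustrative numbers, not from the dataset). The center becomes ((48+195)/2 - 1)/500 = 0.241 and ((240+371)/2 - 1)/375 = 0.812, the size becomes 147/500 = 0.294 and 131/375 ≈ 0.3493. The hypothetical `to_voc()` inverse is included only to show the conversion loses nothing.

```python
def convert(size, box):
    """(width, height), (xmin, xmax, ymin, ymax) -> normalized (x_center, y_center, w, h)."""
    dw, dh = 1.0 / size[0], 1.0 / size[1]
    x = (box[0] + box[1]) / 2.0 - 1  # same -1 offset as voc_label.py
    y = (box[2] + box[3]) / 2.0 - 1
    w = box[1] - box[0]
    h = box[3] - box[2]
    return x * dw, y * dh, w * dw, h * dh


def to_voc(size, yolo):
    """Inverse of convert(): normalized YOLO box -> (xmin, xmax, ymin, ymax) in pixels."""
    x, y = yolo[0] * size[0], yolo[1] * size[1]
    w, h = yolo[2] * size[0], yolo[3] * size[1]
    return x + 1 - w / 2, x + 1 + w / 2, y + 1 - h / 2, y + 1 + h / 2


if __name__ == '__main__':
    yolo = convert((500, 375), (48.0, 195.0, 240.0, 371.0))
    print(['%.4f' % v for v in yolo])  # ['0.2410', '0.8120', '0.2940', '0.3493']
    print(to_voc((500, 375), yolo))   # approximately (48.0, 195.0, 240.0, 371.0)
```

Each line that voc_label.py writes to labels/*.txt is the class index followed by these four numbers.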

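From now on the dataset is used through the three txt files in the root directory, train.txt, val.txt and test.txt; each line holds the full path of one image.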

To use it, modify the voc.yaml file as follows:

```yaml
path: E:\dataset\VOC2012ok  # dataset root dir (originally /VOC)
train: train.txt  # train image list (relative to 'path'); size depends on your 8:1:1 split
val: val.txt  # val image list (relative to 'path')
test: test.txt  # test image list (optional)
```
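YOLOv5's VOC.yaml already lists the 20 class names, so only the lines above need changing. If you start from a blank file or use your own classes, a small hypothetical helper like the one below can write the whole file. It assumes the YOLOv5 data-yaml keys `path`, `train`, `val`, `test`, `nc` and `names` (the list form of `names`), and it quotes names naively, so class names must not contain apostrophes.

```python
VOC_CLASSES = ['aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'bus', 'car', 'cat', 'chair', 'cow',
               'diningtable', 'dog', 'horse', 'motorbike', 'person', 'pottedplant', 'sheep', 'sofa',
               'train', 'tvmonitor']


def make_data_yaml(root, classes, train='train.txt', val='val.txt', test='test.txt'):
    """Return the text of a YOLOv5-style dataset yaml for the split lists in root."""
    names = ', '.join("'%s'" % c for c in classes)
    return ('path: %s\ntrain: %s\nval: %s\ntest: %s\nnc: %d\nnames: [%s]\n'
            % (root, train, val, test, len(classes), names))


if __name__ == '__main__':
    with open('voc.yaml', 'w') as f:
        f.write(make_data_yaml('E:/dataset/VOC2012ok', VOC_CLASSES))
```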

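References:

1. Copyright notice: this is an original article by CSDN blogger "zstar-_", published under the CC 4.0 BY-SA license; reprints must include a link to the original and this notice.
   Original article: https://blog.csdn.net/qq1198768105/article/details/125645443

2. https://zhuanlan.zhihu.com/p/423898204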