torch注册自定义算子并导出onnx(Enet, Centernet)

torch注册自定义算子

- 定义算子计算函数

-

- 定义算子的由input到output的计算函数(op_custom.cpp)

- 将函数注册到torch中(op_custom.cpp)

- 创建setup.py

- 编译生成.so文件

-

- 开始编译

- 测试是否注册成功

-

- 进行测试->加载模型(test.py)

-

- 第一种:

- 第二种:

- 进行测试->将算子通过symbol注册到torch/onnx(test.py)

- 进行测试->导出模型到onnx(test.py)

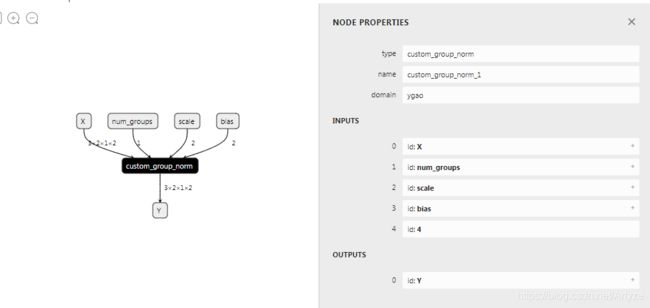

- 查看onnx

- 9. Enet转onnx

- centernet转onnx

定义算子计算函数

定义算子的由input到output的计算函数(op_custom.cpp)

,这一步是非常关键的,一定要确保正确,最好要多测试几遍

#include 将函数注册到torch中(op_custom.cpp)

static auto registry = torch::RegisterOperators("ygao::custom_group_norm", &custom_group_norm);

其中ygao是指定的你的算子所在的域,大致可以理解成命名空间,比如torch的nn或者jit

创建setup.py

torch是通过cpp_externsion来指定编译的,所以我们此时写一个类似cmakelist.txt的setup.py

from setuptools import setup, Extension

from torch.utils import cpp_extension

setup(name='custom_group_norm',

ext_modules=[cpp_extension.CppExtension('custom_group_norm', ['op_custom.cpp'])],

include_dirs = ["/workspace/ygao/software_backup/eigen-eigen-b3f3d4950030"],

cmdclass={'build_ext': cpp_extension.BuildExtension})

在里面要指定你的生成的库文件的名字”custom_group_norm”;需要的所有的源文件,头文件等等,cmdclass都是一样的,不用修改

编译生成.so文件

开始编译

执行如下命令:

python setup.py install

经过一段时间的编译之后(中途缺啥头文件或者源文件在setup.py中补齐即可),就可以在当前目录下得到build文件夹,其中有一个lib.linux-x86_64-3.7的文件夹,里面就是我们需要的.so文件

测试是否注册成功

进行测试->加载模型(test.py)

创建一个test.py,首先把刚才的库文件load进来,这一步有两种方式

第一种:

torch.ops.load_library("build/lib.linux-x86_64-3.7/dcn_v2_cpu.cpython-37m-x86_64-linux-gnu.so")

这种方式的op调用方式是

torch.ops.ygao.custom_group_norm(x, num_groups, scale, bias, torch.tensor([0.]))

第二种:

import custom_group_norm as cop

这种方式的op调用方式是:

cop.ygao.custom_group_norm(x, num_groups, scale, bias, torch.tensor([0.]))

进行测试->将算子通过symbol注册到torch/onnx(test.py)

def my_group_norm(g, input, num_groups, scale, bias, eps):

return g.op("ygao::my_group_norm", input, num_groups, scale, bias, epsilon_f=eps)

register_custom_op_symbolic('ygao::custom_group_norm', my_group_norm, 11)

这里需要注意的一点是,这种情况下,即使没有第六步,也可以成功的将模型转出到onnx,但是会报一个未知算子的warning。而这一步就是相当于将my_group_norm这个函数放到了symbol_opset11.py中,并且将函数名换成ygao::custom_group_norm,也就是只要碰到运算ygao::custom_group_norm,就会输出ygao::custom_group_norm类型的算子

进行测试->导出模型到onnx(test.py)

def export_custom_op():

class CustomModel(torch.nn.Module):

def forward(self, x, num_groups, scale, bias):

return torch.ops.ygao.custom_group_norm(x, num_groups, scale, bias, torch.tensor([0.]))

X = torch.randn(3, 2, 1, 2)

num_groups = torch.tensor([2.])

scale = torch.tensor([2., 1.])

bias = torch.tensor([1., 0.])

inputs = (X, num_groups, scale, bias)

f = './model.onnx'

torch.onnx.export(CustomModel(), inputs, f,

opset_version=9,

example_outputs=None,

input_names=["X", "num_groups", "scale", "bias"], output_names=["Y"],

custom_opsets={"ygao": 11})

export_custom_op()

这一步就相对比较简单,首先创建一个model,这个model只有custom_group_norm这一个算子,然后定义好input后,通过torch.onnx.export导出即可

查看onnx

9. Enet转onnx

Enet本身网络难点有三:

- max_unpool2d,这是torch支持,但是torch/onnx及onnx, onnxruntime都不支持的算子

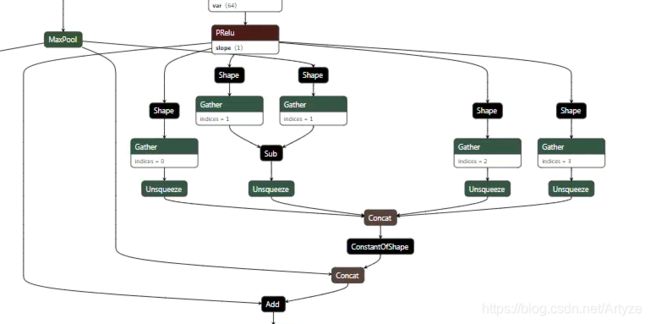

- 得到max_unpool2d的output_shape的一套组合

- 非常复杂的两张通道不同的feature map的add组合

所以开始解决问题:

- 在torch/onnx中添加算子max_unpool2d

这里有一个torch的bug需要提一下,除了torch == 1.1.0这个版本外,即使添加了max_unpool2d的转化支持,也是不能成功转化的,这个问题torch的老哥还没有给出具体的解决方法,所以要将torch的版本降为1.1.0

然后在torch/onnx/symbolic.py中添加如下代码:def max_unpool2d(g, self, indices, output_size): return g.op("MaxUnpool", self, indices, output_size)

之后开始转换即可得到最初版本的enet.onnx

- 替换得到max_unpool2d的output_size的代码块

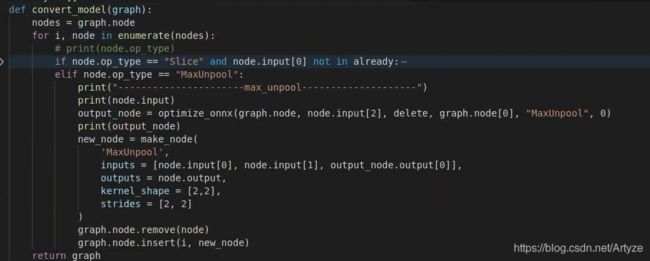

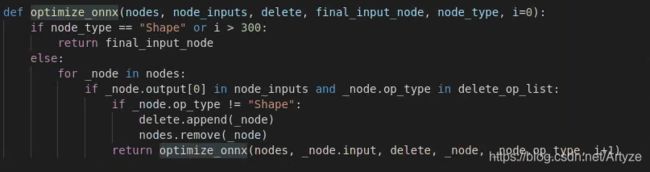

本身onnx和onnxruntime是支持MaxUnpool算子的,但是区别在于MaxUnpool需要attribute:kernel_shape, 以及MaxUnpool的output_size需要的是NCHW,而并不是像上图那样仅有HW,也就是上图是torch/onnx画蛇添足了,因此通过递归即可将这部分去掉,代码如下:

因为是远程,代码不好拷贝,就贴图了

因为是远程,代码不好拷贝,就贴图了 - 将通道不同的两组feature map相加的算子组合优化使用的是现成的工具,链接如下

onnx-simplifier

注意这两个顺序不能错,否则是不会成功的

最终就可以得到简化版的Enet

是不是简洁了许多

具体的处理python脚本,我上传到了github上,可自行下载使用:

选择opt_maxunpool即可

centernet转onnx

centernet主要的问题就是如何在torch/onnx添加可变形卷积的支持,解决方式也很简单,只要按照我上面写的添加新算子的步骤即可