The ConvE Model

Contents

- Paper Walkthrough: "Convolutional 2D Knowledge Graph Embeddings"
- Research Problem
- Motivation
- Model Details
- Overall Architecture Diagram
- Experiments
- Datasets
- Results
- Reference

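Paper Walkthrough: "Convolutional 2D Knowledge Graph Embeddings"

Research Problem

Existing knowledge graphs all suffer from missing attributes, entities, and relations. Because knowledge graphs serve many real-world applications, including question answering and recommendation, research on knowledge graph completion is especially important.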

Motivation

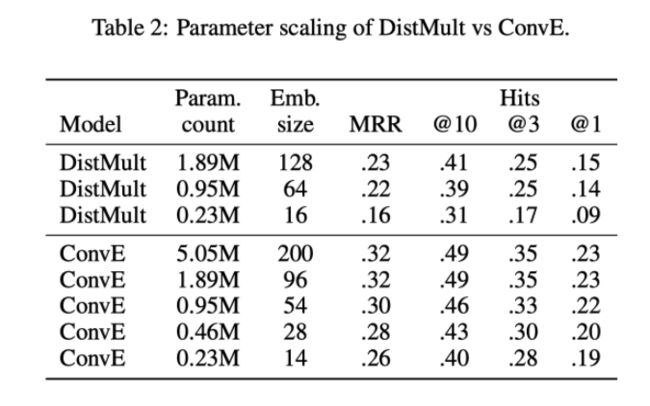

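The paper approaches knowledge graph link prediction from a neural-network perspective. Shallow models are commonly used for link prediction, but they lack the capacity to extract deep features, and blindly increasing the embedding size leads to overfitting. Motivated by this, the paper designs a parameter-efficient, fast convolutional neural network for knowledge graph representation learning.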

Model Details

The scoring function is defined as

$$\psi_r(e_s, e_o) = f\big(\mathrm{vec}\big(f([\hat{e}_s; \hat{r}_r] * \omega)\big)\, W\big)\, e_o$$

where $\hat{e}_s$ and $\hat{r}_r$ are the 2D reshapings of the head-entity and relation embeddings, $*$ denotes convolution with the filters $\omega$, $W$ is the projection matrix of the fully connected layer, and $f$ is a nonlinear function, with ReLU used as the activation. The score is turned into a probability by $p = \sigma(\psi_r(e_s, e_o))$.
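To make the stacking and convolution step concrete, here is a minimal pure-Python sketch; it is not the authors' implementation. The helper names `reshape_2d`, `conv2d_valid`, and `relu`, the toy 6-dimensional embeddings, and the all-ones 3 x 3 filter are assumptions chosen for illustration. As with `Conv2d` in deep learning frameworks, the sketch slides the filter without flipping it (a cross-correlation) and uses no padding.

```python
def reshape_2d(vec, rows, cols):
    """Reshape a flat embedding of length rows * cols into a rows x cols grid."""
    assert len(vec) == rows * cols
    return [vec[r * cols:(r + 1) * cols] for r in range(rows)]


def conv2d_valid(image, kernel):
    """Slide the kernel over the image with stride 1 and no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(grid):
    """Apply max(0, x) element-wise."""
    return [[max(0.0, v) for v in row] for row in grid]


# Toy embeddings (hypothetical values), each reshaped to 2 x 3.
e_s_hat = reshape_2d([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], 2, 3)
r_r_hat = reshape_2d([-1.0, -2.0, -3.0, -4.0, -5.0, -6.0], 2, 3)

# Stack along the row axis: [e_s_hat; r_r_hat] becomes a 4 x 3 "image".
stacked = e_s_hat + r_r_hat

# A single 3 x 3 filter (hypothetical); the real model learns many filters.
omega = [[1.0, 1.0, 1.0] for _ in range(3)]

# f([e_s_hat; r_r_hat] * omega), followed by vec(.) to flatten the feature map.
feature_map = relu(conv2d_valid(stacked, omega))
flat = [v for row in feature_map for v in row]
```

Because the entity rows sit directly above the relation rows, each filter window that straddles the boundary mixes entity and relation values, which is the interaction the 2D reshaping is meant to expose.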

The loss function is binary cross-entropy:

$$L(p, t) = -\frac{1}{N}\sum_{i}\big(t_i \cdot \log(p_i) + (1 - t_i) \cdot \log(1 - p_i)\big)$$
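As a worked illustration of this loss, the sketch below evaluates it for a handful of candidate entities in plain Python. The function names `sigmoid` and `bce_loss`, the `eps` clamp, and the example scores and labels are assumptions made for illustration, not values from the paper.

```python
import math


def sigmoid(x):
    """Logistic function that maps a raw score to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def bce_loss(probs, targets, eps=1e-12):
    """Mean binary cross-entropy over N (probability, label) pairs."""
    total = 0.0
    for p, t in zip(probs, targets):
        # Clamp to avoid log(0); eps is an illustrative choice.
        p = min(max(p, eps), 1.0 - eps)
        total += t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return -total / len(probs)


# Hypothetical raw scores for three candidate tail entities;
# only the first candidate is a true tail (label 1).
scores = [2.0, -1.0, 0.0]
targets = [1.0, 0.0, 0.0]

probs = [sigmoid(s) for s in scores]
loss = bce_loss(probs, targets)
```

The loss shrinks as the true candidate's probability approaches 1 and the false candidates' probabilities approach 0; predicting 0.5 everywhere gives exactly log 2.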

ConvE model source code

    import torch
    import torch.nn.functional as F
    from torch.nn import Parameter
    from torch.nn.init import xavier_normal_


    class ConvE(torch.nn.Module):
        def __init__(self, args, num_entities, num_relations):
            super(ConvE, self).__init__()
            # Embedding tables for entities and relations.
            self.emb_e = torch.nn.Embedding(num_entities, args.embedding_dim, padding_idx=0)
            self.emb_rel = torch.nn.Embedding(num_relations, args.embedding_dim, padding_idx=0)
            # Dropout on the input, the hidden layer, and the feature maps.
            self.inp_drop = torch.nn.Dropout(args.input_drop)
            self.hidden_drop = torch.nn.Dropout(args.hidden_drop)
            self.feature_map_drop = torch.nn.Dropout2d(args.feat_drop)
            self.loss = torch.nn.BCELoss()
            # Each embedding is reshaped to emb_dim1 x emb_dim2.
            self.emb_dim1 = args.embedding_shape1
            self.emb_dim2 = args.embedding_dim // self.emb_dim1

            # 32 filters of size 3 x 3, stride 1, no padding.
            self.conv1 = torch.nn.Conv2d(1, 32, (3, 3), 1, 0, bias=args.use_bias)
            self.bn0 = torch.nn.BatchNorm2d(1)
            self.bn1 = torch.nn.BatchNorm2d(32)
            self.bn2 = torch.nn.BatchNorm1d(args.embedding_dim)
            # Per-entity bias added to the final scores.
            self.register_parameter('b', Parameter(torch.zeros(num_entities)))
            # Projects the flattened feature maps back to the embedding size.
            self.fc = torch.nn.Linear(args.hidden_size, args.embedding_dim)
            print(num_entities, num_relations)

        def init(self):
            xavier_normal_(self.emb_e.weight.data)
            xavier_normal_(self.emb_rel.weight.data)

        def forward(self, e1, rel):
            # Reshape both embeddings to 2D and stack them along the height axis.
            e1_embedded = self.emb_e(e1).view(-1, 1, self.emb_dim1, self.emb_dim2)
            rel_embedded = self.emb_rel(rel).view(-1, 1, self.emb_dim1, self.emb_dim2)
            stacked_inputs = torch.cat([e1_embedded, rel_embedded], 2)

            stacked_inputs = self.bn0(stacked_inputs)
            x = self.inp_drop(stacked_inputs)
            x = self.conv1(x)
            x = self.bn1(x)
            x = F.relu(x)
            x = self.feature_map_drop(x)
            # Flatten the feature maps and project to the embedding size.
            x = x.view(x.shape[0], -1)
            x = self.fc(x)
            x = self.hidden_drop(x)
            x = self.bn2(x)
            x = F.relu(x)
            # Score against every entity embedding at once, then add the bias.
            x = torch.mm(x, self.emb_e.weight.transpose(1, 0))
            x += self.b.expand_as(x)
            pred = torch.sigmoid(x)

            return pred

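ConvE first reshapes the entity and relation embeddings into 2D matrices and concatenates them. The stacked input is batch-normalized and passed through dropout, followed by convolution, batch normalization, ReLU, and feature-map dropout. The result is flattened and projected by a fully connected layer, then multiplied with the entity embedding matrix to score every candidate tail entity, and a sigmoid turns these scores into probabilities.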
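The input size of the fully connected layer (`args.hidden_size` in the code above) follows from the shapes produced by this pipeline. The sketch below derives it in plain Python; the function name `conve_feature_size` and the example values `embedding_dim=200` and `embedding_shape1=20` are assumptions for illustration, while the 3 x 3 kernel, 32 output channels, stride 1, and zero padding match the `Conv2d` call in the code.

```python
def conve_feature_size(embedding_dim, embedding_shape1,
                       kernel=(3, 3), out_channels=32, stride=1, padding=0):
    """Number of features after stacking, convolving, and flattening."""
    emb_dim1 = embedding_shape1
    emb_dim2 = embedding_dim // emb_dim1
    # Entity and relation grids are concatenated along the height axis.
    in_h, in_w = 2 * emb_dim1, emb_dim2
    # Standard convolution output-size formula.
    out_h = (in_h + 2 * padding - kernel[0]) // stride + 1
    out_w = (in_w + 2 * padding - kernel[1]) // stride + 1
    return out_channels * out_h * out_w


# Example values (assumed): 200-dimensional embeddings reshaped to 20 x 10.
# Stacked input: 40 x 10; after the 3 x 3 convolution: 38 x 8 per channel.
hidden_size = conve_feature_size(embedding_dim=200, embedding_shape1=20)
# 32 * 38 * 8 = 9728
```

If `args.hidden_size` does not equal this product for the chosen embedding shape, the `view` and `fc` steps in `forward` will fail with a shape mismatch.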

Overall Architecture Diagram

Experiments

Datasets

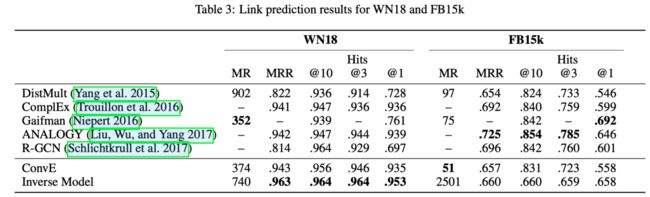

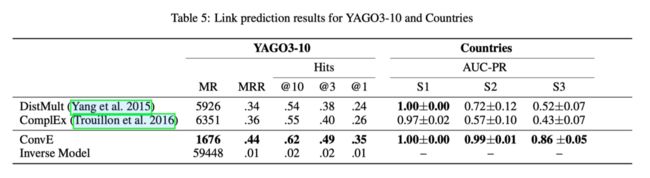

To validate the model's effectiveness, the authors compare it against other methods on public datasets including WN18, FB15k, YAGO3-10, and Countries.

Results

Reference

http://nysdy.com/post/Convolutional%202D%20Knowledge%20Graph%20Embeddings/

Source code repository