torch2caffe

Contents

- Bilinear upsampling

- InstanceNorm

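Bilinear upsampling

Semantic segmentation networks usually include an upsampling step, and stock Caffe does not support the commonly used bilinear interpolation. The goal is either to copy Caffe's bilinear interpolation weights into torch, or to compute the bilinear interpolation weights directly and assign them to both torch and Caffe.

Come to think of it, couldn't I just compute the weights and assign them directly? Why wrestle with Caffe for so long?

After looking into it, my current understanding is that interpolation and deconvolution are not the same thing, possibly because of two points: 1. deconvolution pads outward at the border; 2. it is unclear how the interpolation itself is actually implemented.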

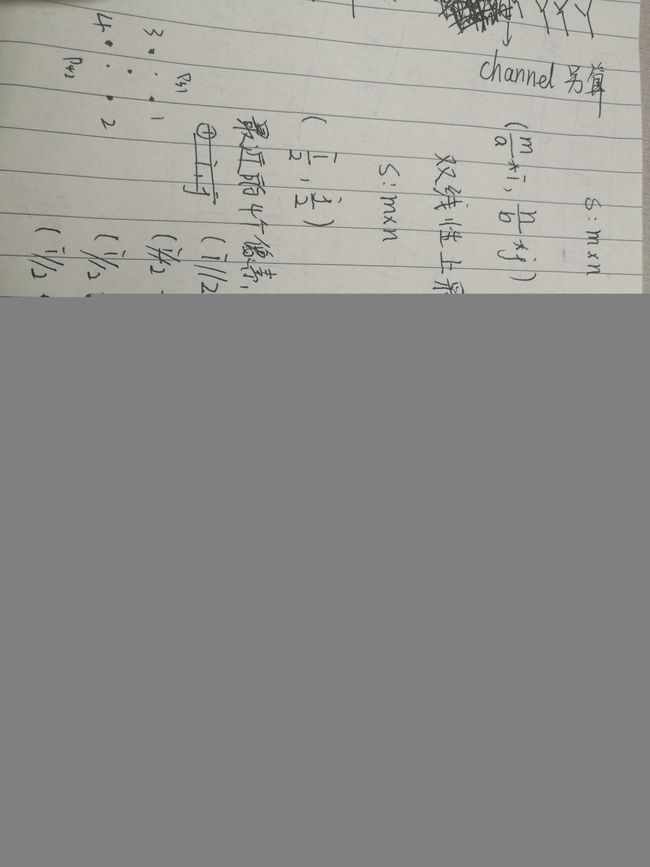

int x=(i+0.5)*m/a-0.5

int y=(j+0.5)*n/b-0.5

instead of

int x=i*m/a

int y=j*n/b
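To make the difference between the two mappings concrete, here is a minimal sketch along one axis. It assumes that `m` is the source size and `a` the destination size (the formulas above do not say so explicitly), and the function names are made up for illustration.

```python
def src_coord_centered(i, src_size, dst_size):
    """Map destination index i to a source coordinate by aligning pixel centers."""
    return (i + 0.5) * src_size / dst_size - 0.5


def src_coord_naive(i, src_size, dst_size):
    """Map destination index i to a source coordinate by aligning only the origin."""
    return i * src_size / dst_size


m, a = 4, 8  # assumed example: source width 4, destination width 8
centered = [src_coord_centered(i, m, a) for i in range(a)]
naive = [src_coord_naive(i, m, a) for i in range(a)]
print(centered)  # starts below 0 and ends at 3.25, symmetric around the center
print(naive)     # starts at 0 and ends at 3.5, shifted towards the right edge
```

The centered mapping spreads the destination samples symmetrically over the source, while the naive one is biased to one side.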

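- Comparing bilinear interpolation in torch and in Caffe

- First, get clear on what align_corners changes during interpolation; see this Zhihu answer and this blog post

Honestly, I only half understand it. With align_corners=False the result follows the usual definition of bilinear interpolation: the output is not spaced evenly between the corner values, but computed from the actual positions of the pixel centers. With align_corners=True the corner pixels of input and output coincide, so more of the original values are preserved.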
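The two settings can be compared in one dimension with a few lines of plain Python. This is only a sketch of the commonly described coordinate rules (corner alignment versus pixel-center alignment with clamping), not torch's actual implementation; `resize_linear_1d` is a hypothetical helper and assumes `out_size > 1`.

```python
import math


def resize_linear_1d(values, out_size, align_corners):
    """Linearly resample a 1-D list to out_size samples (out_size > 1)."""
    in_size = len(values)
    out = []
    for i in range(out_size):
        if align_corners:
            # First and last samples of input and output coincide.
            src = i * (in_size - 1) / (out_size - 1)
        else:
            # Pixel centers are aligned; coordinates outside the input are clamped.
            src = (i + 0.5) * in_size / out_size - 0.5
            src = min(max(src, 0.0), in_size - 1)
        lo = int(math.floor(src))
        hi = min(lo + 1, in_size - 1)
        t = src - lo
        out.append((1 - t) * values[lo] + t * values[hi])
    return out


row = [1.0, 2.0, 3.0, 4.0]
row_true = [round(v, 4) for v in resize_linear_1d(row, 8, align_corners=True)]
row_false = [round(v, 4) for v in resize_linear_1d(row, 8, align_corners=False)]
print(row_true)
print(row_false)
```

For the input row 1..4 these two lists should agree with the first rows of the two `F.interpolate` results printed in the torch experiment further down.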

- Comparing Caffe's deconv implementation with torch bilinear

Build a deconvolution network with pycaffe

- pycaffe net

import caffe
from caffe import layers, params

def mmnet():
    # construct net
    net = caffe.NetSpec()

    # infer/deploy
    # placeholder: the input blob is declared by hand in deploy_str below
    input_name = "data"
    dim1 = 1
    dim2 = 1
    dim3 = 4
    dim4 = 4
    # dummy layer so that later layers can use net.data as bottom;
    # it is stripped from the generated text in the return statement
    net.data = layers.Layer()

    # up 2
    #net.conv0_1 = layers.Convolution(net.data, num_output=64, kernel_size=1, stride=1, pad=0, bias_term=False, group=1, weight_filler=dict(type='constant'))
    #net.conv0_2 = layers.Deconvolution(net.conv0_1, convolution_param=dict(num_output=64, kernel_size=2, stride=2, pad=0, bias_term=False, group=64, weight_filler=dict(type='bilinear')), param=dict(lr_mult=0, decay_mult=0))

    # test
    net.up = layers.Deconvolution(net.data, convolution_param=dict(num_output=1, kernel_size=4, stride=2, pad=1, bias_term=False, group=1, weight_filler=dict(type='bilinear')), param=dict(lr_mult=0, decay_mult=0))

    # write to file
    deploy_str = 'input: {}\ninput_dim: {}\ninput_dim: {}\ninput_dim: {}\ninput_dim: {}'.format('"' + input_name + '"', dim1, dim2, dim3, dim4)
    # drop the first 'layer {' block (the dummy data layer) and keep the rest
    return deploy_str + '\n' + 'layer {' + 'layer {'.join(str(net.to_proto()).split('layer {')[2:])

with open('deploy.prototxt', 'w') as f:
    f.write(str(mmnet()))

- prototxt

input: "data"
input_dim: 1
input_dim: 1
input_dim: 4
input_dim: 4
layer {
  name: "up"
  type: "Deconvolution"
  bottom: "data"
  top: "up"
  param {
    lr_mult: 0.0
    decay_mult: 0.0
  }
  convolution_param {
    num_output: 1
    bias_term: false
    pad: 1
    kernel_size: 4
    group: 1
    stride: 2
    weight_filler {
      type: "bilinear"
    }
  }
}
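The `bilinear` weight_filler in the prototxt above fills the kernel with a separable triangle-shaped weight. The sketch below reproduces that in plain Python using the formula as I recall it from Caffe's `BilinearFiller` (`f = ceil(k / 2)`, `c = (k - 1) / (2 f)`, weight `1 - |x / f - c|` per axis); treat the formula as an assumption and check it against your Caffe version. `bilinear_kernel` is a hypothetical helper name.

```python
import math


def bilinear_kernel(kernel_size):
    """Return a kernel_size x kernel_size bilinear kernel as nested lists."""
    f = math.ceil(kernel_size / 2.0)
    c = (kernel_size - 1) / (2.0 * f)
    # 1-D triangle weights; the 2-D kernel is their outer product.
    taps = [1 - abs(x / f - c) for x in range(kernel_size)]
    return [[ty * tx for tx in taps] for ty in taps]


kernel = bilinear_kernel(4)
for kernel_row in kernel:
    print(kernel_row)
```

For `kernel_size=4` this should give the same 4x4 weights that pycaffe prints for the `up` layer in the inference step below, which is a quick way to confirm the filler did what was expected.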

- Inference with pycaffe

import caffe
import numpy as np

net_file = 'deploy.prototxt'
model_file = 'deploy.caffemodel'

# Instantiate once without weights so the fillers run, then save the result as a caffemodel
net = caffe.Net(net_file, caffe.TEST)
net.save(model_file)

# Reload the network together with the saved weights
net = caffe.Net(net_file, model_file, caffe.TEST)

for param in net.params:
    print(param)
    print(net.params[param][0].data.shape)
    print(net.params[param][0].data[0:4,0,:,:])

im = np.arange(1, 17).reshape((1, 1, 4, 4))
print(im)

net.blobs['data'].data[...] = im
out = net.forward()
#print(out.shape)
print(out['up'].shape)
print(out['up'])

Output:

up
(1, 1, 4, 4)
[[[0.0625 0.1875 0.1875 0.0625]
  [0.1875 0.5625 0.5625 0.1875]
  [0.1875 0.5625 0.5625 0.1875]
  [0.0625 0.1875 0.1875 0.0625]]]
[[[[ 1 2 3 4]
   [ 5 6 7 8]
   [ 9 10 11 12]
   [13 14 15 16]]]]
(1, 1, 8, 8)
[[[[ 0.5625 0.9375 1.3125 1.6875 2.0625 2.4375 2.8125 2.25 ]
   [ 1.5 2.25 2.75 3.25 3.75 4.25 4.75 3.75 ]
   [ 3. 4.25 4.75 5.25 5.75 6.25 6.75 5.25 ]
   [ 4.5 6.25 6.75 7.25 7.75 8.25 8.75 6.75 ]
   [ 6. 8.25 8.75 9.25 9.75 10.25 10.75 8.25 ]
   [ 7.5 10.25 10.75 11.25 11.75 12.25 12.75 9.75 ]
   [ 9. 12.25 12.75 13.25 13.75 14.25 14.75 11.25 ]
   [ 7.3125 9.9375 10.3125 10.6875 11.0625 11.4375 11.8125 9. ]]]]

- Experiment in torch

import torch
import torch.nn.functional as F

x = torch.arange(1, 17).view(1, 1, 4, 4).float()
print(x)

# bilinear interpolation with both align_corners settings
y1 = F.interpolate(x, scale_factor=2, mode='bilinear', align_corners=True)
print(y1.shape)
print(y1)
y2 = F.interpolate(x, scale_factor=2, mode='bilinear', align_corners=False)
print(y2)

# transposed convolution with the same 4x4 bilinear kernel that Caffe produced
w = torch.tensor([[0.0625, 0.1875, 0.1875, 0.0625], [0.1875, 0.5625, 0.5625, 0.1875], [0.1875, 0.5625, 0.5625, 0.1875], [0.0625, 0.1875, 0.1875, 0.0625]]).view((1, 1, 4, 4)).float()
print(w.shape)
print(w)
z = F.conv_transpose2d(x, w, stride=2, padding=1)
print(z.shape)
print(z)

Output:

tensor([[[[ 1.,  2.,  3.,  4.],
          [ 5.,  6.,  7.,  8.],
          [ 9., 10., 11., 12.],
          [13., 14., 15., 16.]]]])
torch.Size([1, 1, 8, 8])
tensor([[[[ 1.0000,  1.4286,  1.8571,  2.2857,  2.7143,  3.1429,  3.5714,  4.0000],
          [ 2.7143,  3.1429,  3.5714,  4.0000,  4.4286,  4.8571,  5.2857,  5.7143],
          [ 4.4286,  4.8571,  5.2857,  5.7143,  6.1429,  6.5714,  7.0000,  7.4286],
          [ 6.1429,  6.5714,  7.0000,  7.4286,  7.8571,  8.2857,  8.7143,  9.1429],
          [ 7.8571,  8.2857,  8.7143,  9.1429,  9.5714, 10.0000, 10.4286, 10.8571],
          [ 9.5714, 10.0000, 10.4286, 10.8571, 11.2857, 11.7143, 12.1429, 12.5714],
          [11.2857, 11.7143, 12.1429, 12.5714, 13.0000, 13.4286, 13.8571, 14.2857],
          [13.0000, 13.4286, 13.8571, 14.2857, 14.7143, 15.1429, 15.5714, 16.0000]]]])
tensor([[[[ 1.0000,  1.2500,  1.7500,  2.2500,  2.7500,  3.2500,  3.7500,  4.0000],
          [ 2.0000,  2.2500,  2.7500,  3.2500,  3.7500,  4.2500,  4.7500,  5.0000],
          [ 4.0000,  4.2500,  4.7500,  5.2500,  5.7500,  6.2500,  6.7500,  7.0000],
          [ 6.0000,  6.2500,  6.7500,  7.2500,  7.7500,  8.2500,  8.7500,  9.0000],
          [ 8.0000,  8.2500,  8.7500,  9.2500,  9.7500, 10.2500, 10.7500, 11.0000],
          [10.0000, 10.2500, 10.7500, 11.2500, 11.7500, 12.2500, 12.7500, 13.0000],
          [12.0000, 12.2500, 12.7500, 13.2500, 13.7500, 14.2500, 14.7500, 15.0000],
          [13.0000, 13.2500, 13.7500, 14.2500, 14.7500, 15.2500, 15.7500, 16.0000]]]])
torch.Size([1, 1, 4, 4])
tensor([[[[0.0625, 0.1875, 0.1875, 0.0625],
          [0.1875, 0.5625, 0.5625, 0.1875],
          [0.1875, 0.5625, 0.5625, 0.1875],
          [0.0625, 0.1875, 0.1875, 0.0625]]]])
torch.Size([1, 1, 8, 8])
tensor([[[[ 0.5625,  0.9375,  1.3125,  1.6875,  2.0625,  2.4375,  2.8125,  2.2500],
          [ 1.5000,  2.2500,  2.7500,  3.2500,  3.7500,  4.2500,  4.7500,  3.7500],
          [ 3.0000,  4.2500,  4.7500,  5.2500,  5.7500,  6.2500,  6.7500,  5.2500],
          [ 4.5000,  6.2500,  6.7500,  7.2500,  7.7500,  8.2500,  8.7500,  6.7500],
          [ 6.0000,  8.2500,  8.7500,  9.2500,  9.7500, 10.2500, 10.7500,  8.2500],
          [ 7.5000, 10.2500, 10.7500, 11.2500, 11.7500, 12.2500, 12.7500,  9.7500],
          [ 9.0000, 12.2500, 12.7500, 13.2500, 13.7500, 14.2500, 14.7500, 11.2500],
          [ 7.3125,  9.9375, 10.3125, 10.6875, 11.0625, 11.4375, 11.8125,  9.0000]]]])

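The two are not the same. The interior of the deconvolution output agrees with the align_corners=False result, but the border rows and columns differ (for example 0.5625 versus 1.0000 in the top-left corner), and neither matches align_corners=True. I tried to implement bilinear interpolation with a deconvolution, but did not come up with a good way to do it.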
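One possible way to close the border gap, which is not part of the original experiment: since only the border differs, replicate the input border by one pixel before the deconvolution and crop the extra ring afterwards. The NumPy sketch below checks this for the 4x4 example with scale factor 2 only; `deconv2d` is a hypothetical single-channel helper, and the sketch does not address how to express the replicate padding in Caffe.

```python
import numpy as np


def deconv2d(x, w, stride, pad):
    """Single-channel transposed convolution via scatter-add, then crop by pad."""
    h, wd = x.shape
    k = w.shape[0]
    full = np.zeros(((h - 1) * stride + k, (wd - 1) * stride + k))
    for i in range(h):
        for j in range(wd):
            full[i * stride:i * stride + k, j * stride:j * stride + k] += x[i, j] * w
    return full[pad:full.shape[0] - pad, pad:full.shape[1] - pad]


taps = np.array([0.25, 0.75, 0.75, 0.25])
w = np.outer(taps, taps)                       # the 4x4 bilinear kernel printed above
x = np.arange(1, 17, dtype=float).reshape(4, 4)

zero_border = deconv2d(x, w, stride=2, pad=1)  # plain deconvolution, as in the experiment
x_rep = np.pad(x, 1, mode="edge")              # replicate the border by one pixel
replicated = deconv2d(x_rep, w, stride=2, pad=1)[2:-2, 2:-2]  # crop the extra ring

print(zero_border[0])
print(replicated[0])
print(replicated[1])
```

With the replicated border, the rows should line up with the align_corners=False output shown above; it does not reproduce align_corners=True, whose sample spacing cannot be written as a fixed stride-2 kernel.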

- Nearest-neighbor interpolation, however, can be implemented with a Caffe deconvolution

Change the layer definition to

net.up = layers.Deconvolution(net.data, convolution_param=dict(num_output=1, kernel_size=2, stride=2, pad=0, bias_term=False, group=1, weight_filler=dict(type='constant', value=1.0)), param=dict(lr_mult=0, decay_mult=0))

Output:

up
(1, 1, 2, 2)
[[[1. 1.]
  [1. 1.]]]
[[[[ 1 2 3 4]
   [ 5 6 7 8]
   [ 9 10 11 12]
   [13 14 15 16]]]]
(1, 1, 8, 8)
[[[[ 1. 1. 2. 2. 3. 3. 4. 4.]
   [ 1. 1. 2. 2. 3. 3. 4. 4.]
   [ 5. 5. 6. 6. 7. 7. 8. 8.]
   [ 5. 5. 6. 6. 7. 7. 8. 8.]
   [ 9. 9. 10. 10. 11. 11. 12. 12.]
   [ 9. 9. 10. 10. 11. 11. 12. 12.]
   [13. 13. 14. 14. 15. 15. 16. 16.]
   [13. 13. 14. 14. 15. 15. 16. 16.]]]]
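Why this works: with `kernel_size=2`, `stride=2` and `pad=0` the 2x2 output tiles do not overlap, so each input pixel is simply copied into its own tile. A small NumPy sketch of the same operation, using the Kronecker product as a stand-in for the deconvolution (my choice for illustration, not something Caffe does internally):

```python
import numpy as np

x = np.arange(1, 17, dtype=float).reshape(4, 4)
# Each input pixel is multiplied by a 2x2 block of ones and written to its own
# non-overlapping 2x2 tile, which is nearest-neighbor upsampling by a factor of 2.
up = np.kron(x, np.ones((2, 2)))
print(up.shape)
print(up)
```

The result should be identical to repeating every row and every column twice.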

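The experiments above are based on:

- Building a network in Caffe

- Loading network parameters and running inference in Caffe

For how to compute the output size of a deconvolution, see:

- The torch official documentation

- This blog post

- This Zhihu answer (although the blog post and the Zhihu answer do not agree with each other...)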
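For reference, the output-size formula given in the torch documentation for `ConvTranspose2d` can be written as a small function. The function name `deconv_out_size` is made up for this sketch; the two calls use the parameters of the two experiments above.

```python
def deconv_out_size(in_size, kernel_size, stride=1, padding=0,
                    output_padding=0, dilation=1):
    """Output length along one axis of a transposed convolution."""
    return ((in_size - 1) * stride - 2 * padding
            + dilation * (kernel_size - 1) + output_padding + 1)


# bilinear experiment: kernel 4, stride 2, pad 1
size_bilinear = deconv_out_size(4, kernel_size=4, stride=2, padding=1)
# nearest-neighbor experiment: kernel 2, stride 2, pad 0
size_nearest = deconv_out_size(4, kernel_size=2, stride=2, padding=0)
print(size_bilinear, size_nearest)
```

Both configurations turn a 4x4 input into an 8x8 output, which agrees with the shapes printed by pycaffe and torch.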

InstanceNorm

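In image generation, restoration and enhancement networks, the normalization layer of choice is usually InstanceNorm. So how can it be implemented in Caffe?

See the implementation in this pytorch2caffe GitHub repository.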
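As a reminder of what has to be reproduced on the Caffe side, here is a minimal NumPy sketch of the InstanceNorm computation itself: every (sample, channel) plane is normalized with its own mean and biased variance over H and W. It assumes no affine parameters and `eps=1e-5`, and it is not taken from the linked repository; `instance_norm` is a hypothetical helper name.

```python
import numpy as np


def instance_norm(x, eps=1e-5):
    """Normalize each (sample, channel) plane of an NCHW array over H and W."""
    mean = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)  # biased variance (divide by H*W)
    return (x - mean) / np.sqrt(var + eps)


x = np.random.RandomState(0).randn(2, 3, 4, 4)
y = instance_norm(x)
print(y.mean(axis=(2, 3)))  # close to 0 for every plane
print(y.std(axis=(2, 3)))   # close to 1 for every plane
```

A useful check after conversion is to feed the same random tensor through both frameworks and compare against this reference.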