Spark应用之日志数据清洗--实例解析

Spark应用之日志数据清洗

- 一、日志数据

- 二、准备工作

- 三、数据清洗

-

- 3.1 清洗数据 user.csv

- 3.2 清洗数据 event.csv

- 3.3 清洗数据 user_friends.csv

- 3.4 清洗数据 event_attendees.csv

- 3.5 清洗数据 train.csv

一、日志数据

下载链接:events

提取码: ngs3

二、准备工作

- 下载日志数据

- 将下载的日志数据上传至 hdfs:/eventData 文件夹

- linux 启动spark,spark安装根目录输入:

sbin/start-all.sh - 为了方便展示,使用Zeppelin插件,导入spark sql 相关工具包:

import org.apache.spark.sql.types._

import org.apache.spark.sql.functions._

三、数据清洗

3.1 清洗数据 user.csv

- 查看数据(前10行):

hdfs dfs -cat /eventData/users.csv |head -n 10

- 使用 spark 载入数据:

val dfUsers = spark.read.format("csv").option("header","true").load("hdfs:///eventData/users.csv")

- 查看表数据和表头

dfUsers.show

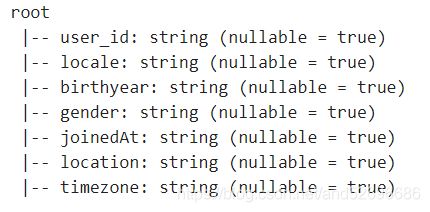

dfUsers.printSchema

需求1:查看 user.csv 是否有重复数据

查看总行数与 distinct usre_id 的数量是否一致,一致说明无重复数据,user_id数量少说明有重复数据

dfUsers.count

dfUsers.select("user_id").distinct.count

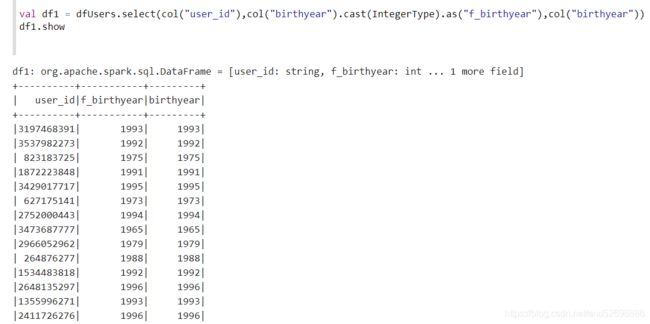

需求2:有多少用户没有输入或输入了无效的出生年份?

- 创建df1,查询三个字段:user_id ,f_birthyear,birthyear

val df1 = dfUsers.select(col("user_id"),col("birthyear").cast(IntegerType).as("f_birthyear"),col("birthyear"))

df1.show

- 创建df2,查询用户birthyear为空

val df2 = df1.filter(col("f_birthyear").isNull)

df2.show

- 查询df1的数据总量和df2中 birthyear 为空或者无效的数据量

df1.count

df2.count

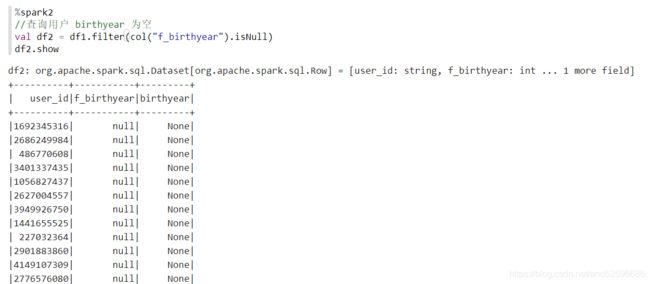

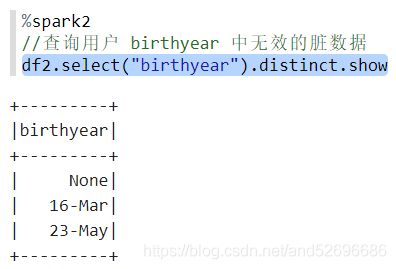

- 查询用户 birthyear 中无效的脏数据

df2.select("birthyear").distinct.show

注: 这里解释以下为什么 df2 要查询三个字段 user_id , f_birthyear , birthyear

- user_id主键不解释;

- 使用birthyear转型为IntegerType的字段"f_birthyear"是因为birthyear可能存在脏数据,不是整形的数字,可能是字符串之类的例如这里查询出来的"16-Mar"、“23-May”,无法转为为整形,强行转化显示为null,所以此处增加一个字段 f_birthyear 转化为整形,筛选一次 f_birthyear isNull 可以把 birthyear 所有空的或者无效脏数据找出来;

- 增加原有的字段 birthyear 是因为 使用filter过滤 f_birthyear isNull 时,把"birthyear"中所有空的None、错误类型字符串"16-Mar"都找了出来,统一转为Null,此时在单独查找 birthyear,birthyear中就只剩None类型以及脏数据,在去重查找即可;

需求3:使用用户的平均出生年份来替换用户无效的、缺失的birthyear数据

- ①首先将birtyear转型 IntergerType

val df3 = dfUsers.withColumn("birthyear",col("birthyear").cast(IntegerType))

df3.show

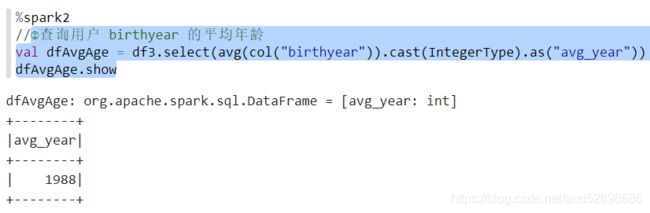

- ②查询用户 birthyear 的平均年龄

val dfAvgAge = df3.select(avg(col("birthyear")).cast(IntegerType).as("avg_year"))

dfAvgAge.show

- ③用平均年龄 avg_year 替换缺失、无效的数据

val df4 = df3.crossJoin(dfAvgAge).withColumn("new_birthyear",when(col("birthyear").isNull,col("avg_year")).otherwise(col("birthyear")))

df4.show

- 或者简单一点,在查询出结果的基础上将平均出生年份1988直接写死,将birthyear中 Null直接全部替换为1988

val df5 = df3.withColumn("birthyear",when(col("birthyear").isNull,lit(1988)).otherwise(col("birthyear")))

df5.show

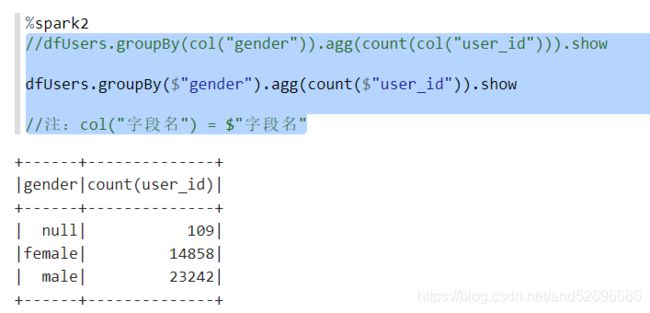

需求4:查询性别,发现"gender"字段中显示为 null,female,male,规范化,将null替换为unknown

dfUsers.groupBy($"gender").agg(count($"user_id")).show

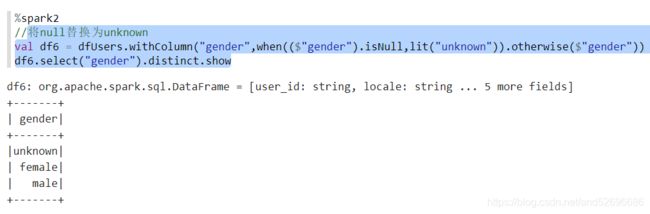

- 将null替换为unknown

val df6 = dfUsers.withColumn("gender",when(($"gender").isNull,lit("unknown")).otherwise($"gender"))

df6.select("gender").distinct.show

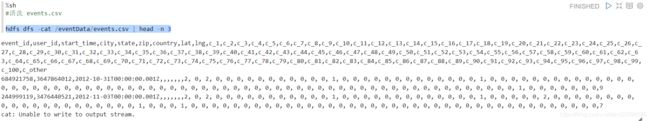

3.2 清洗数据 event.csv

- 查看数据(前3行):

hdfs dfs -cat /eventData/events.csv | head -n 3

- 将 events.csv 数据加载到spark中

val dfEvents = spark.read.format("csv").option("header","true").load("hdfs:///eventData/events.csv")

需求1:查询数据总量(不重复)

dfEvents.cache.count

dfEvents.select("event_id").distinct.count

需求2:查询事件表中有没有用户id与用户表用户id一致

- 创建临时表 events

dfEvents.createOrReplaceTempView("events")

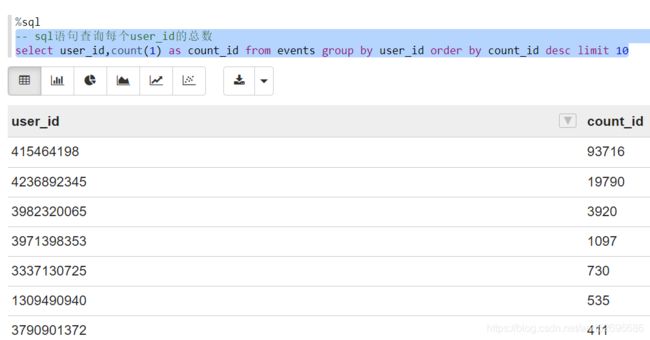

- sql语句查询每个user_id的总数

select user_id,count(1) as count_id from events group by user_id order by count_id desc limit 10

- 创建临时表 users

dfUsers.createOrReplaceTempView("users")

- sql语句查询询事件表中有没有用户id与用户表用户id一致数量

select count(1) from events e join users u on e.user_id = u.user_id

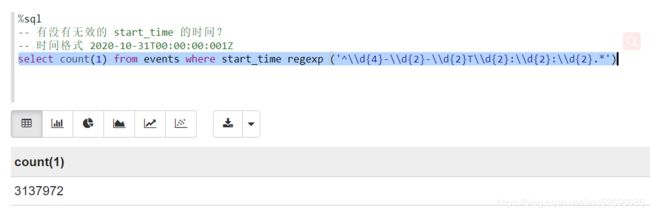

需求3:查询有没有无效的 start_time 的时间

时间格式为:2020-10-31T00:00:00:001Z

由需求1查询 event.csv 数据总量为:3137972

- sql 查询有效的起始时间数据总量:

select count(1) from events where start_time regexp ('^\\d{4}-\\d{2}-\\d{2}T\\d{2}:\\d{2}:\\d{2}.*')

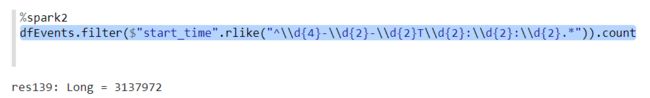

注: 使用 spark 查询有效的起始时间数据总量:

dfEvents.filter($"start_time".rlike("^\\d{4}-\\d{2}-\\d{2}T\\d{2}:\\d{2}:\\d{2}.*")).count

3.3 清洗数据 user_friends.csv

- 查看数据(前3行)

hdfs dfs -cat /eventData/user_friends.csv | head -n 3

- 将 user_friends.csv 数据加载到 spark 中

val dfUserFriends = spark.read.format("csv").option("header","true").load("hdfs:///eventData/user_friends.csv")

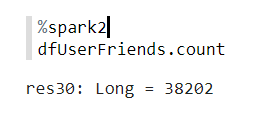

需求1:查询数据总量

dfUserFriends.count

需求2:如何实现数据查询 每行数据中user_id,friends_id 一 一对应

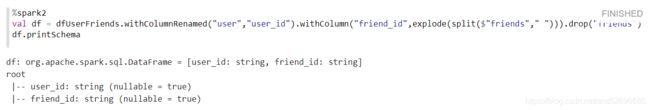

- 行转列,使用 explode 算子,将 friends 字段中的friend_id 行转列,与user_id 一 一对应

val df = dfUserFriends.withColumnRenamed("user","user_id").withColumn("friend_id",explode(split($"friends"," "))).drop("friends")

//查看表头

df.printSchema

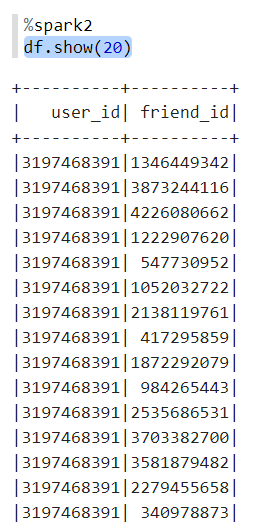

- 查看行转列后 user_id, friend_id 信息

df.show(20)

- 创建临时表:

dfUserFriends.createOrReplaceTempView("user_friends")

- sql 语句实现行转列

select

user user_id,

friend_id

from user_friends

lateral view explode(split(friends,' ')) as friend_id

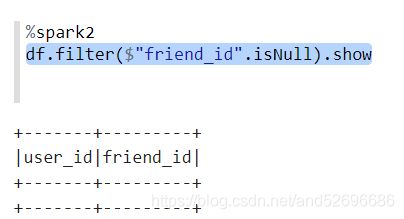

需求3:查询行转列之后的表中有多少有效数据 (friend_id非空)

- 查看 friend_id 有没有空数据

df.filter($"friend_id".isNull).show

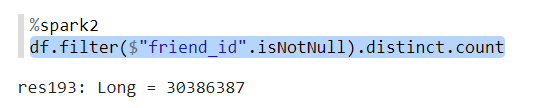

- 查询 friend_id 有多少有效数据

df.filter($"friend_id".isNotNull).distinct.count

- 查询 df 总数据量

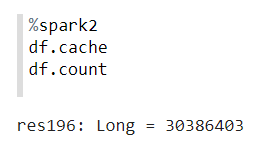

df.cache

df.count

可以看到 friend_id 有效数据比总数据少16条,说明 friend_id 中存在重复数据

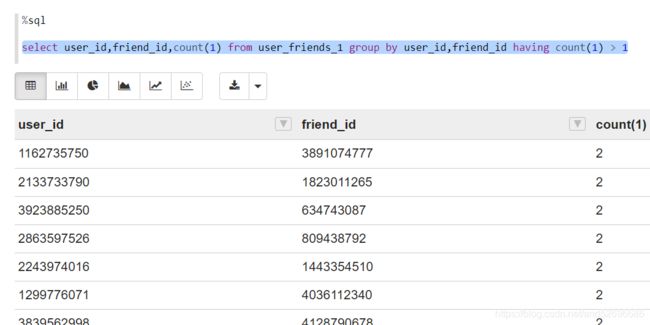

- 用 sql 语句查询 friend_id 中的重复数据,创建临时表

df.createOrReplaceTempView("user_friends_1")

- sql 语句查询

select user_id,friend_id,count(1) from user_friends_1 group by user_id,friend_id having count(1) > 1

df.groupBy($"user_id",$"friend_id").agg(count($"user_id").as("cnt")).filter($"cnt">lit(1)).show

需求4:统计列表中谁拥有最多的朋友

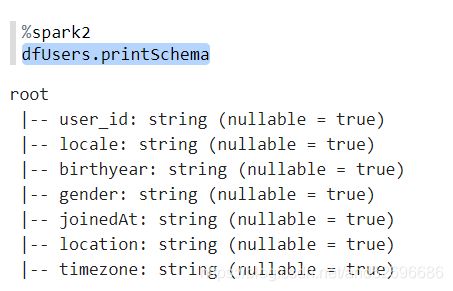

- 查看 df 的表头信息

df.printSchema

- 查看 dfUsers 的表头信息

dfUsers.printSchema

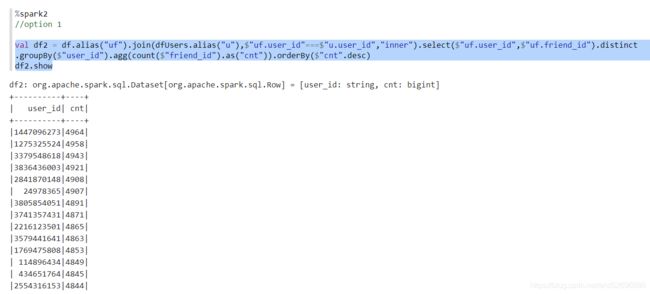

- 方法①

val df2 = df.alias("uf").join(dfUsers.alias("u"),$"uf.user_id"===$"u.user_id","inner").select($"uf.user_id",$"uf.friend_id").distinct

.groupBy($"user_id").agg(count($"friend_id").as("cnt")).orderBy($"cnt".desc)

df2.show

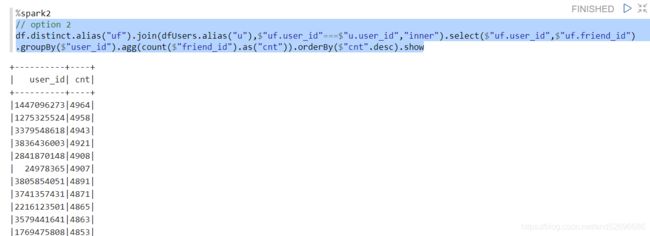

- 方法②

df.distinct.alias("uf").join(dfUsers.alias("u"),$"uf.user_id"===$"u.user_id","inner").select($"uf.user_id",$"uf.friend_id")

.groupBy($"user_id").agg(count($"friend_id").as("cnt")).orderBy($"cnt".desc).show

- 方法③

df.distinct.groupBy($"user_id").agg(count($"friend_id").as("cnt"))

.join(dfUsers,"user_id").select("user_id","cnt")

.orderBy($"cnt".desc).show

注: 这里推荐使用方法③ ,sql语句 join 查询核心思想是减少数据量

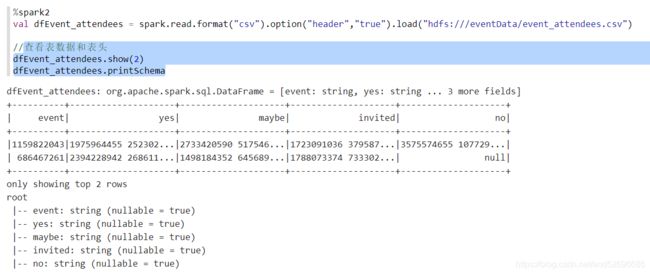

3.4 清洗数据 event_attendees.csv

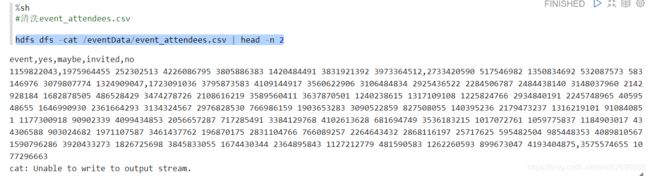

- 查看数据(前2行)

hdfs dfs -cat /eventData/event_attendees.csv | head -n 2

- 将数据加载至spark

val dfEvent_attendees = spark.read.format("csv").option("header","true").load("hdfs:///eventData/event_attendees.csv")

- 查看表数据和表头

dfEvent_attendees.show(2)

dfEvent_attendees.printSchema

需求1:表头为event,yes,maybe,invited, no, 将表头格式改为event_id,user_id,attend_type

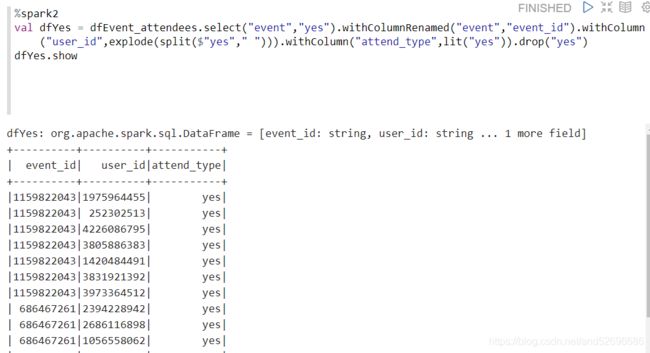

- 方法① 查询 event,yes 、 event,maybe 、 event,invited 、event,no 这四种字段组合,分别进行 行转列 ,最后在join组合一起即可

// event,yes

val dfYes = dfEvent_attendees.select("event","yes").withColumnRenamed("event","event_id").withColumn("user_id",explode(split($"yes"," "))).withColumn("attend_type",lit("yes")).drop("yes")

dfYes.show

//event,maybe

val dfMaybe = dfEvent_attendees.select("event","maybe").withColumnRenamed("event","event_id").withColumn("user_id",explode(split($"maybe"," "))).withColumn("attend_type",lit("maybe")).drop("maybe")

//event,invited

val dfInvited= dfEvent_attendees.select("event","invited").withColumnRenamed("event","event_id").withColumn("user_id",explode(split($"invited"," "))).withColumn("attend_type",lit("invited")).drop("invited")

//event,no

val dfNo = dfEvent_attendees.select("event","no").withColumnRenamed("event","event_id").withColumn("user_id",explode(split($"no"," "))).withColumn("attend_type",lit("no")).drop("no")

- 多表联合

val dfResult = dfYes.union(dfMaybe).union(dfInvited).union(dfNo)

- 查看有无重复数据

dfResult.count

dfResult.distinct.count

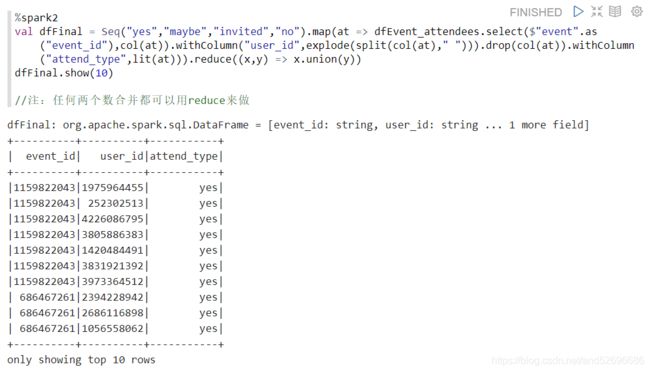

- 方法② 直接全部查询 yes,maybe,invited,no 四种受邀状态类型,放到1个集合Seq中,分类、聚合,一步到位

val dfFinal = Seq("yes","maybe","invited","no").map(at => dfEvent_attendees.select($"event".as("event_id"),col(at)).withColumn("user_id",explode(split(col(at)," "))).drop(col(at)).withColumn("attend_type",lit(at))).reduce((x,y) => x.union(y))

dfFinal.show(10)

- 查看去重后数据总量

dfFinal.distinct.count

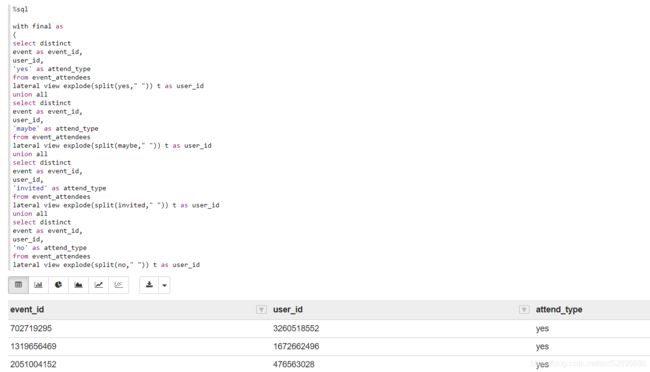

- 方法③ sql语句实现

- 创建临时表

dfEvent_attendees.createOrReplaceTempView("event_attendees")

- sql 语句

%sql

with final as

(

select distinct

event as event_id,

user_id,

'yes' as attend_type

from event_attendees

lateral view explode(split(yes," ")) t as user_id

union all

select distinct

event as event_id,

user_id,

'maybe' as attend_type

from event_attendees

lateral view explode(split(maybe," ")) t as user_id

union all

select distinct

event as event_id,

user_id,

'invited' as attend_type

from event_attendees

lateral view explode(split(invited," ")) t as user_id

union all

select distinct

event as event_id,

user_id,

'no' as attend_type

from event_attendees

lateral view explode(split(no," ")) t as user_id

) select * from final

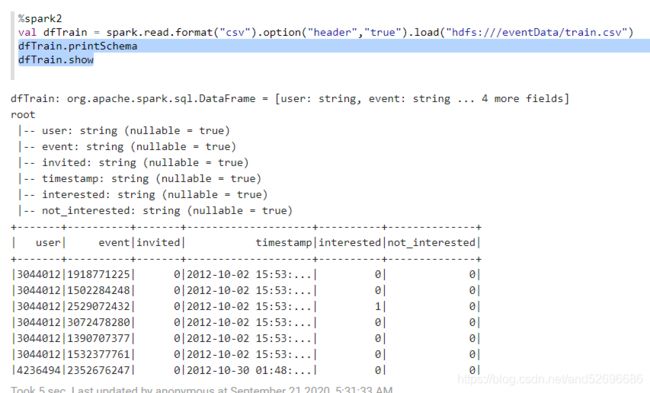

3.5 清洗数据 train.csv

- 查看数据(前3行)

hdfs dfs -cat /eventData/train.csv | head -n 3

- 将数据加载至spark

val dfTrain = spark.read.format("csv").option("header","true").load("hdfs:///eventData/train.csv")

- 查看表头和数据

dfTrain.printSchema

dfTrain.show

dfTrain.count

dfTrain.select("user","event").distinct.count

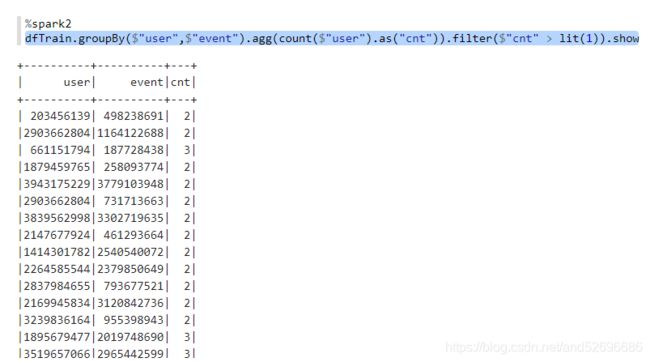

需求2:如果存在,找出他们并且进行分析

dfTrain.groupBy($"user",$"event").agg(count($"user").as("cnt")).filter($"cnt" > lit(1)).show

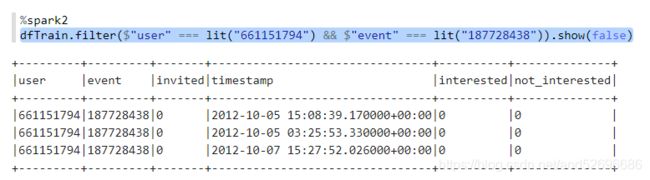

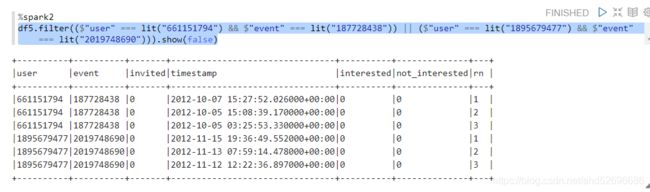

- 例如分析第三行数据,show(false) 展示字段全部信息

dfTrain.filter($"user" === lit("661151794") && $"event" === lit("187728438")).show(false)

- 对时间进行排序

dfTrain.filter($"user" === lit("661151794") && $"event" === lit("187728438")).orderBy($"timestamp").show(false)

- 去重操作①:上来就去重

dfTrain.dropDuplicates("user","event").filter($"user" === lit("661151794") && $"event" === lit("187728438")).orderBy($"timestamp".asc).show(false)

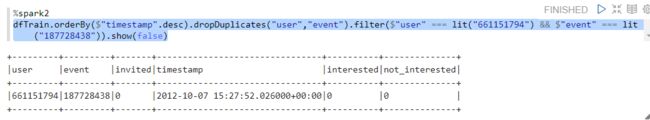

- 去重操作②:先对时间进行排序,再去重

dfTrain.orderBy($"timestamp".desc).dropDuplicates("user","event").filter($"user" === lit("661151794") && $"event" === lit("187728438")).show(false)

-

dropDuplicates() 算子去重,可以指定具体字段,无论多少条重复数据,默认保留第一条

-

distinct 去重,根据每一条数据,进行完整内容的比对和去重

-

去重操作③:使用窗口函数排序,指定序号去重

导入窗口函数:

import org.apache.spark.sql.expressions.Window

使用窗口函数查询:

指定保留 rn=1

val df5 = dfTrain.withColumn("rn",row_number() over Window.partitionBy($"user",$"event").orderBy($"timestamp".desc))//.filter($"rn" === lit(1).drop("rn"))

df5.filter($"user" === lit("661151794") && $"event" === lit("187728438")).show(false)

- 一次查询多行

df5.filter(($"user" === lit("661151794") && $"event" === lit("187728438")) || ($"user" === lit("1895679477") && $"event" === lit("2019748690"))).show(false)

- 使用正则查询时间数据总量

dfTrain.filter($"timestamp".rlike("^\\d{4}-\\d{2}-\\d{2}\\s\\d{2}:\\d{2}:\\d{2}.*")).count

注:

- “abc” || lit(“abc”) -> col(“abc”)

- collect 少用,数据量大的话内存会溢出, 除非确定数据量很小的情况下可以使用

- 以上 Zeppelin -spark 源码:pr_events.json

提取码:0gtv