From 0 to 1: Inverse Distance Weighted (IDW) Interpolation for Image Warping

Paper:

Image Warping with Scattered Data Interpolation

Local warping algorithms: liquify, bulge (inflate)

Global warping algorithms: IDW, MLS, feature-line warping

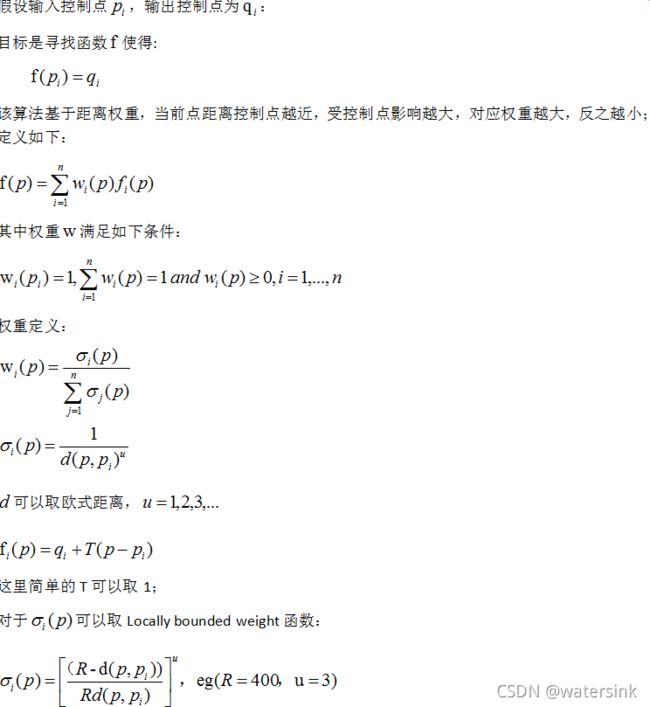

Algorithm idea:
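Written out from the code in this post (so the notation is an assumption, not the paper's), each result pixel $\mathbf{p}$ gets a sampling position from $n$ control-point pairs $(\mathbf{p}_i, \mathbf{q}_i)$, where $\mathbf{p}_i$ are `input_points` and $\mathbf{q}_i$ are `output_points`:

$$
f(\mathbf{p}) = \mathbf{p} + \frac{\sum_{i=1}^{n} w_i(\mathbf{p})\,(\mathbf{q}_i - \mathbf{p}_i)}{\sum_{i=1}^{n} w_i(\mathbf{p})},
\qquad
w_i(\mathbf{p}) = \frac{1}{\lVert \mathbf{p} - \mathbf{p}_i \rVert^{2u}}
$$

The basic and Numba versions use $u = 2$; the SciPy and PyTorch versions use `power=3`, i.e. a distance exponent of 6. A larger exponent makes each control point's influence fall off faster with distance.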

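Pros and cons:

Pros: simple to implement, and maps well to both CPU and GPU.

Cons: the running time is proportional to the number of control points, the image height and the image width, so more points or a larger image means slower warping. With too few control points the deformation is not smooth.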
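To put the cost in numbers: every pixel is compared with every control point, and the basic implementation below keeps four tiled (H, W, n) coordinate arrays plus `v_tmp` and `v` alive at the same time. The helpers below are hypothetical and give only a rough lower bound, assuming six float32 arrays and ignoring expression temporaries.

```python
def distance_evaluations(height, width, n_points):
    # Every pixel is compared with every control point
    return height * width * n_points


def naive_idw_memory_bytes(height, width, n_points, bytes_per_value=4, n_arrays=6):
    """Rough lower bound on memory for the basic (tiled) implementation.

    Assumes n_arrays full (height, width, n_points) float32 arrays are alive:
    the four tiled coordinate arrays plus v_tmp and v. Temporaries created
    while evaluating expressions are not counted.
    """
    return height * width * n_points * bytes_per_value * n_arrays


# Example: a 1920x1080 frame with 100 control points
print(distance_evaluations(1080, 1920, 100))          # 207360000
print(naive_idw_memory_bytes(1080, 1920, 100) / 1e9)  # about 4.98 (GB)
```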

Use cases:

Face enlarging, face slimming, eye enlarging, and essentially any other warping task.

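Basic implementation:

Advantage: the code follows the formula closely, so the principle is easy to see. Disadvantage: it is very slow.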
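For comparison with the formula, here is a pure-Python sketch that computes the sampling position of a single pixel. `idw_map_point` is a hypothetical helper, not part of the original code. It returns q_i directly when p lands exactly on p_i (the usual convention in Shepard's IDW), whereas the image code handles that case by adjusting a weight instead.

```python
def idw_map_point(p, src_pts, dst_pts, u=2):
    """Sampling position f(p) for one pixel p = (x, y).

    src_pts / dst_pts play the roles of input_points / output_points:
    equal-length lists of (x, y) pairs.
    """
    num_x = num_y = den = 0.0
    for (px, py), (qx, qy) in zip(src_pts, dst_pts):
        sq_dist = (p[0] - px) ** 2 + (p[1] - py) ** 2
        if sq_dist == 0:
            # p sits exactly on a control point: interpolate it exactly
            return (float(qx), float(qy))
        w = 1.0 / sq_dist ** u          # w_i = 1 / |p - p_i|^(2u)
        num_x += w * (qx - px)
        num_y += w * (qy - py)
        den += w
    # p plus the weighted average of the control-point displacements
    return (p[0] + num_x / den, p[1] + num_y / den)


src = [(10, 10), (50, 10)]
dst = [(12, 10), (50, 10)]
print(idw_map_point((10, 10), src, dst))  # (12.0, 10.0)
print(idw_map_point((30, 10), src, dst))  # (31.0, 10.0): equidistant, so the offsets are averaged
```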

```python
import time

import cv2
import numpy as np


class IDW(object):

    def __init__(self):
        pass

    def IDW(self, srcImg, input_points, output_points):
        start = time.time()

        height, width, _ = srcImg.shape
        keypoints_num = len(input_points)
        u = 2  # weight = 1 / (squared distance) ** u

        # Pixel coordinate grids, shape (height, width, 1)
        UX = np.expand_dims(np.vstack([np.arange(width).astype(np.float32).reshape(1, -1)] * height), axis=2)
        UY = np.expand_dims(np.hstack([np.arange(height).astype(np.float32).reshape(-1, 1)] * width), axis=2)

        # Control-point coordinates tiled to (height, width, keypoints_num)
        input_points_X = np.ones((height, width, keypoints_num), np.float32) * input_points[:, 0]
        input_points_Y = np.ones((height, width, keypoints_num), np.float32) * input_points[:, 1]
        output_points_X = np.ones((height, width, keypoints_num), np.float32) * output_points[:, 0]
        output_points_Y = np.ones((height, width, keypoints_num), np.float32) * output_points[:, 1]

        # (squared distance) ** u from every pixel to every control point
        v_tmp = np.power((UX - input_points_X) * (UX - input_points_X)
                         + (UY - input_points_Y) * (UY - input_points_Y), u)

        # A pixel lying exactly on a control point has distance 0: give that
        # point weight 1 instead of dividing by zero (only that point's entry
        # is changed, the other points keep their weights)
        v_tmp[v_tmp == 0] = 1

        v = 1 / v_tmp
        ww = np.expand_dims(1 / np.sum(v, axis=2), axis=2)  # 1 / sum of weights

        # map(p) = sum_i w_i * (q_i + p - p_i) / sum_i w_i
        UX = np.squeeze(np.sum(v * (output_points_X + UX - input_points_X), axis=2, keepdims=True) * ww).astype(np.float32)
        UY = np.squeeze(np.sum(v * (output_points_Y + UY - input_points_Y), axis=2, keepdims=True) * ww).astype(np.float32)

        # Backward mapping: dst(x, y) = src(UX[y, x], UY[y, x])
        copyImg = cv2.remap(srcImg, UX, UY, interpolation=cv2.INTER_LINEAR)

        end = time.time()
        print("IDW time cost:{} s".format(end - start))
        return copyImg
```

Numba-vectorized implementation:

Advantage: vectorized, so it is faster. Disadvantage: still not fast enough.
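Much of this version's memory saving comes from NumPy broadcasting: the control-point coordinates stay as shape (n,) and are expanded against the (H, W, 1) pixel grid only inside the arithmetic, instead of being tiled into full (H, W, n) arrays first. The sketch below uses plain NumPy (no Numba), and the function names are hypothetical; it checks on random data that both layouts produce the same sampling maps.

```python
import numpy as np


def idw_maps_tiled(h, w, src, dst, u=2):
    # Basic-version layout: every coordinate array is materialised as (h, w, n)
    n = len(src)
    ux = np.tile(np.arange(w, dtype=np.float64)[None, :, None], (h, 1, 1))
    uy = np.tile(np.arange(h, dtype=np.float64)[:, None, None], (1, w, 1))
    sx = np.ones((h, w, n)) * src[:, 0]
    sy = np.ones((h, w, n)) * src[:, 1]
    dx = np.ones((h, w, n)) * dst[:, 0]
    dy = np.ones((h, w, n)) * dst[:, 1]
    v = 1.0 / ((ux - sx) ** 2 + (uy - sy) ** 2) ** u
    map_x = np.sum(v * (dx + ux - sx), axis=2) / np.sum(v, axis=2)
    map_y = np.sum(v * (dy + uy - sy), axis=2) / np.sum(v, axis=2)
    return map_x, map_y


def idw_maps_broadcast(h, w, src, dst, u=2):
    # Broadcast layout: (1, w, 1) and (h, 1, 1) grids against (n,) coordinates
    ux = np.arange(w, dtype=np.float64)[None, :, None]
    uy = np.arange(h, dtype=np.float64)[:, None, None]
    v = 1.0 / ((ux - src[:, 0]) ** 2 + (uy - src[:, 1]) ** 2) ** u  # (h, w, n)
    ww = 1.0 / np.sum(v, axis=2)
    map_x = ux[..., 0] + np.sum(v * (dst[:, 0] - src[:, 0]), axis=2) * ww
    map_y = uy[..., 0] + np.sum(v * (dst[:, 1] - src[:, 1]), axis=2) * ww
    return map_x, map_y


rng = np.random.default_rng(0)
h, w, n = 20, 30, 5
# Non-integer control points, so no pixel has distance exactly 0
src = rng.uniform(0.5, 19.5, size=(n, 2))
dst = src + rng.uniform(-3, 3, size=(n, 2))
a = idw_maps_tiled(h, w, src, dst)
b = idw_maps_broadcast(h, w, src, dst)
print(np.allclose(a[0], b[0]) and np.allclose(a[1], b[1]))  # True
```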

```python
import time

import cv2
import numpy as np
from numba import float64, int64, vectorize


# Defined at module level so Numba compiles these ufuncs once, not on every
# call. Inputs are cast to the declared float64 / int64 types.
@vectorize([float64(float64, float64, float64, float64, int64)], target='parallel')
def l2_power_numba(UX, input_points_X, UY, input_points_Y, u):
    # (squared distance) ** u between one pixel and one control point
    tmp_x = UX - input_points_X
    tmp_y = UY - input_points_Y
    sq_dist = tmp_x * tmp_x + tmp_y * tmp_y
    if u == 1:
        return sq_dist
    return sq_dist ** u


@vectorize([float64(float64)], target='parallel')
def div_numba(v_tmp):
    return 1 / v_tmp


# Replacement for the IDW method of the class above (self is unused)
def IDW(self, srcImg, input_points, output_points):
    start = time.time()

    height, width, _ = srcImg.shape
    u = 2

    # Pixel grids, shape (height, width, 1)
    UX = np.expand_dims(np.vstack([np.arange(width).astype(np.float32).reshape(1, -1)] * height), axis=2)
    UY = np.expand_dims(np.hstack([np.arange(height).astype(np.float32).reshape(-1, 1)] * width), axis=2)

    # Shape (keypoints_num,): broadcasting against (height, width, 1) gives
    # (height, width, keypoints_num) without tiling these arrays first
    input_points_X = input_points[:, 0]
    input_points_Y = input_points[:, 1]
    output_points_X = output_points[:, 0]
    output_points_Y = output_points[:, 1]

    v_tmp = l2_power_numba(UX, input_points_X, UY, input_points_Y, u)

    # Zero distance (pixel on a control point): weight 1 for that point
    v_tmp[v_tmp == 0] = 1

    v = div_numba(v_tmp)
    ww = np.expand_dims(1 / np.sum(v, axis=2), axis=2)

    # Merged form: map(p) = p + sum_i w_i * (q_i - p_i) / sum_i w_i
    UX = np.squeeze(UX + np.sum(v * (output_points_X - input_points_X), axis=2, keepdims=True) * ww).astype(np.float32)
    UY = np.squeeze(UY + np.sum(v * (output_points_Y - input_points_Y), axis=2, keepdims=True) * ww).astype(np.float32)

    copyImg = cv2.remap(srcImg, UX, UY, interpolation=cv2.INTER_LINEAR)

    end = time.time()
    print("IDW FAST time cost:{} s".format(end - start))
    return copyImg
```

Best CPU implementation:

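Advantage: distances are computed with SciPy's `cdist`, the formula is merged so one multiplication is saved, and the pixel grid is flattened from 2D matrices into a 1D list of points.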
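If SciPy is not available, the matrix that `cdist(..., metric='sqeuclidean')` returns can also be built in NumPy from the expansion ||a − b||² = ||a||² + ||b||² − 2a·b, which turns most of the work into one matrix product. This is an alternative, not the post's method, and `sq_dists` is a hypothetical helper. For integer pixel coordinates of ordinary image sizes the float64 result is exact; with arbitrary float coordinates, rounding can leave small errors, which matters for the `dist == 0.0` check.

```python
import numpy as np


def sq_dists(a, b):
    """Squared Euclidean distances between rows of a (m, d) and b (n, d).

    Meant to give the same values as
    scipy.spatial.distance.cdist(a, b, 'sqeuclidean').
    """
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    aa = np.sum(a * a, axis=1)[:, None]   # (m, 1)
    bb = np.sum(b * b, axis=1)[None, :]   # (1, n)
    d = aa + bb - 2.0 * (a @ b.T)         # (m, n)
    return np.maximum(d, 0.0)             # clip tiny negatives from rounding


mesh = np.array([[0, 0], [3, 4], [10, 10]])
ctrl = np.array([[0, 0], [10, 10]])
print(sq_dists(mesh, ctrl))
```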

```python
import time

import cv2
import numpy as np
from scipy.spatial.distance import cdist


class IDW(object):

    def __init__(self,
                 original_control_points=None,
                 deformed_control_points=None,
                 power=1):
        # The defaults are the 8 corners of the unit cube (3D points);
        # for image warping, pass (n, 2) arrays explicitly
        if original_control_points is None:
            self.original_control_points = np.array([[0., 0., 0.], [0., 0., 1.],
                                                     [0., 1., 0.], [1., 0., 0.],
                                                     [0., 1., 1.], [1., 0., 1.],
                                                     [1., 1., 0.], [1., 1., 1.]])
        else:
            self.original_control_points = original_control_points

        if deformed_control_points is None:
            self.deformed_control_points = np.array([[0., 0., 0.], [0., 0., 1.],
                                                     [0., 1., 0.], [1., 0., 0.],
                                                     [0., 1., 1.], [1., 0., 1.],
                                                     [1., 1., 0.], [1., 1., 1.]])
        else:
            self.deformed_control_points = deformed_control_points

        self.power = power

    def __call__(self, src_pts):
        # src_pts: (m, d) query points; returns the displaced (m, d) points
        displ = self.deformed_control_points - self.original_control_points
        # Squared Euclidean distances, shape (m, n); ** power gives |p - p_i| ** (2 * power)
        dist = cdist(src_pts, self.original_control_points, metric='sqeuclidean')
        dist = dist ** self.power
        # Weights are the reciprocal of the distance where it is nonzero,
        # and 1.0 where a query point coincides with a control point
        dist[dist == 0.0] = 1
        weights = 1. / dist
        offset = np.dot(weights, displ) / np.sum(weights, axis=1, keepdims=True)
        return src_pts + offset


# Meant as a method of the warper class (self is unused)
def IDW_cpu(self, srcImg, input_points, output_points):
    start = time.time()

    idw = IDW(original_control_points=input_points.astype(np.float64),
              deformed_control_points=output_points.astype(np.float64),
              power=3)

    h, w = srcImg.shape[:-1]
    x = np.empty((h, w), np.float64)
    x[:, :] = np.arange(w)
    y = np.empty((h, w), np.float64)
    y[:, :] = np.arange(h)[:, np.newaxis]

    # Flatten the pixel grid into an (h * w, 2) list of (x, y) points
    mesh = np.array([x.ravel(), y.ravel()]).T

    new_mesh = idw(mesh)

    UX = new_mesh[:, 0].reshape(h, w).astype(np.float32)
    UY = new_mesh[:, 1].reshape(h, w).astype(np.float32)

    copyImg = cv2.remap(srcImg, UX, UY, interpolation=cv2.INTER_LINEAR)

    end = time.time()
    print("IDW time cost:{} s".format(end - start))
    return copyImg
```

PyTorch implementation with CUDA acceleration:

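Advantage: it follows the best CPU version but runs on PyTorch, so it is blazingly fast. Disadvantage: with large images, watch the GPU memory usage. One remedy is to use PyTorch 1.8 or later and cap the memory the process may use. A TensorFlow implementation with static-graph optimization is also an option.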
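Another way to bound memory, not from the original post, is to split the flattened pixel list into chunks so the full (pixels × points) weight matrix never exists at once. The sketch below is a NumPy version of the CPU `__call__` logic with a hypothetical `chunk` parameter; the same slicing loop could be applied to torch tensors on the GPU.

```python
import numpy as np


def idw_displace_chunked(src_pts, ctrl_src, ctrl_dst, power=3, chunk=65536):
    """IDW-displace (m, 2) points, processing at most `chunk` rows at a time.

    Peak size of the weight matrix is chunk * n instead of m * n.
    """
    src_pts = np.asarray(src_pts, dtype=np.float64)
    ctrl_src = np.asarray(ctrl_src, dtype=np.float64)
    displ = np.asarray(ctrl_dst, dtype=np.float64) - ctrl_src
    out = np.empty_like(src_pts)
    for start in range(0, len(src_pts), chunk):
        pts = src_pts[start:start + chunk]
        # Squared distances (rows, n), raised to `power` as in the CPU version
        diff = pts[:, None, :] - ctrl_src[None, :, :]
        dist = np.sum(diff * diff, axis=2) ** power
        dist[dist == 0.0] = 1.0  # weight 1 where a point sits on a control point
        weights = 1.0 / dist
        offset = weights @ displ / np.sum(weights, axis=1, keepdims=True)
        out[start:start + chunk] = pts + offset
    return out


rng = np.random.default_rng(0)
pts = rng.uniform(0, 100, size=(1000, 2))
c_src = rng.uniform(0, 100, size=(8, 2))
c_dst = c_src + rng.uniform(-5, 5, size=(8, 2))
full = idw_displace_chunked(pts, c_src, c_dst, chunk=len(pts))
small = idw_displace_chunked(pts, c_src, c_dst, chunk=97)
print(np.allclose(full, small))  # True
```

The chunk size trades peak memory against per-chunk overhead; any value gives the same result because each pixel's displacement depends only on its own row of weights.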

```python
torch.cuda.set_per_process_memory_fraction(0.5, 0)
```

Parameter 1: `fraction`, the upper limit as a fraction of total GPU memory; 0.5 means half of it. Any float between 0 and 1 is allowed.

Parameter 2: `device`, the device index; 0 means GPU 0.

```python
import time

import cv2
import numpy as np
import torch


class IDW_TORCH(object):

    def __init__(self,
                 original_control_points=None,
                 deformed_control_points=None,
                 power=1):
        # Run on the GPU when one is available
        device = "cuda" if torch.cuda.is_available() else "cpu"
        # Control points: (n, 2) float tensors
        self.original_control_points = original_control_points.to(device)
        self.deformed_control_points = deformed_control_points.to(device)
        self.power = power
        self.device = device

    def __call__(self, src_pts):
        src_pts = src_pts.to(self.device)
        displ = self.deformed_control_points - self.original_control_points
        # torch.cdist returns Euclidean (not squared) distances, so raise to
        # 2 * power to match the CPU version's squared distance ** power
        dist = torch.cdist(src_pts, self.original_control_points)
        dist = dist ** (self.power * 2)
        # Weight 1.0 where a pixel coincides with a control point
        dist[dist == 0.0] = 1
        weights = 1. / dist
        offset = torch.matmul(weights, displ) / torch.sum(weights, dim=1, keepdim=True)
        return src_pts + offset


# Meant as a method of the warper class; the maps are cached on self
def IDW_torch(self, srcImg, input_points, output_points):
    start = time.time()

    idw = IDW_TORCH(original_control_points=torch.from_numpy(input_points.astype(np.float32)),
                    deformed_control_points=torch.from_numpy(output_points.astype(np.float32)),
                    power=3)

    h, w = srcImg.shape[:-1]
    x = torch.empty((h, w))
    x[:, :] = torch.arange(w)
    y = torch.empty((h, w))
    y[:, :] = torch.arange(h)[:, None]

    # (h * w, 2) list of (x, y) pixel coordinates
    mesh = torch.stack([x.flatten(), y.flatten()], dim=1)

    new_mesh = idw(mesh.float()).cpu().numpy()

    UX = new_mesh[:, 0].reshape(h, w).astype(np.float32)
    UY = new_mesh[:, 1].reshape(h, w).astype(np.float32)

    self.idwUX = UX
    self.idwUY = UY

    copyImg = cv2.remap(srcImg, UX, UY, interpolation=cv2.INTER_LINEAR)

    end = time.time()
    print("IDW TORCH time cost:{} s".format(end - start))
    return copyImg
```

All of the code above expects `input_points` and `output_points` as (n, 2) arrays, where n is the number of points and each row holds one point's x, y coordinates. Take the coordinates of the facial points before the transformation (`input_points`) and after it (`output_points`) and call any of the functions above. The output image is generated with OpenCV's built-in `cv2.remap`, which is efficient. Note that `cv2.remap` maps backward, dst(x, y) = src(map_x(x, y), map_y(x, y)), so the result pixel at `input_points[k]` is (approximately) sampled from `output_points[k]` in the source image.
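To see the lookup direction of `cv2.remap` concretely, here is a pure-NumPy nearest-neighbour stand-in. `remap_nearest` is hypothetical, not OpenCV's implementation, and it clamps out-of-range samples to the border, unlike OpenCV's default constant border. It shows that adding +1 to the x map moves the picture one pixel to the left, because each output pixel reads from its right-hand neighbour.

```python
import numpy as np


def remap_nearest(src, map_x, map_y):
    """dst[y, x] = src[round(map_y[y, x]), round(map_x[y, x])], clamped to the image."""
    h, w = src.shape[:2]
    xs = np.clip(np.rint(map_x).astype(int), 0, w - 1)
    ys = np.clip(np.rint(map_y).astype(int), 0, h - 1)
    return src[ys, xs]


src = np.arange(12).reshape(3, 4)                 # pixel values double as pixel ids
grid_y, grid_x = np.mgrid[0:3, 0:4].astype(np.float64)

# Identity map: the output equals the input
same = remap_nearest(src, grid_x, grid_y)

# map_x = x + 1: each output pixel reads its right-hand neighbour
shifted = remap_nearest(src, grid_x + 1, grid_y)
print(shifted[0])  # [1 2 3 3]
```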

Results for eye enlarging and face slimming: