第13章 半监督学习

第13章 半监督学习

13.1 未标记样本

有标记样本:样本的类别标记已知

未标记样本:样本的类别标记未知

主动学习的目标是 使用尽量少的查询来获得尽量号的性能

半监督学习(semi-supervised learning):让学习器不依赖外界交互,自动地利用未标记样本来提升学习性能

聚类假设(cluster assumption):假设数据存在簇结构,同一簇的样本属于同一类别

流形假设(manifold assumption):假设数据分布在一个流形结构上,邻近的样本拥有相似的输出值。

纯半监督学习:假定训练数据中的未标记样本并待预测的数据

直推学习:假定学习过程中所考虑的未标记样本恰是待预测数据

13.2 生成式方法

生成式方法(generative methods)是直接基于生成式模型的方法。假设所有数据都是由同一潜在的模型生成的。这个假设通过潜在模型的参数将未标记数据与学习目标联系起来,而未标记数据的标记可看作模型的缺失参数。

给定样本x,其真实类别标记为 y ∈ Y y\mathcal{\in Y} y∈Y,其中 Y = { 1 , 2 , … , N } \mathcal{Y} = \left\{ 1,2,\ldots,N \right\} Y={1,2,…,N}为所有可能的类别,则概率密度生成

p ( x ) = ∑ i = 1 N α i ∗ p ( x ∣ μ i , Σ i ) p\left( x \right) = \sum_{i = 1}^{N}{\alpha_{i}*p\left( x \middle| \mu_{i},\Sigma_{i} \right)} p(x)=i=1∑Nαi∗p(x∣μi,Σi)

其中混合系数 α i ≥ 0 , ∑ i = 1 N α i = 1 ; p ( x ∣ μ i , Σ i ) \alpha_{i} \geq 0,\sum_{i = 1}^{N}{\alpha_{i} = 1;p\left( x \middle| \mu_{i},\Sigma_{i} \right)} αi≥0,∑i=1Nαi=1;p(x∣μi,Σi)是样本 x x x属于第 i i i个高斯混合成分的概率; μ i \mu_{i} μi和 Σ i \Sigma_{i} Σi为该高斯混合成分的参数

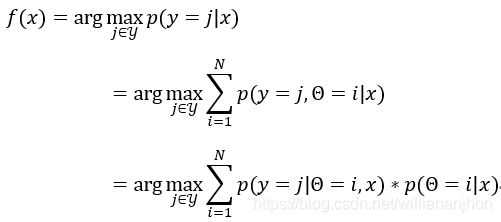

令 f ( x ) ∈ Y f\left( x \right)\mathcal{\in Y} f(x)∈Y表示模型 f f f对 x x x的预测标记, Θ = { 1 , 2 , … , N } \Theta = \left\{ 1,2,\ldots,N \right\} Θ={1,2,…,N}表示样本 x x x隶属的高斯混合成分。由最大化后验概率可知

其中

p ( Θ = i ∣ x ) = α i ∗ p ( x ∣ μ i , Σ i ) ∑ i = 1 N α i ∗ p ( x ∣ μ i , Σ i ) p\left( \Theta = i \middle| x \right) = \frac{\alpha_{i}*p\left( x \middle| \mu_{i},\Sigma_{i} \right)}{\sum_{i = 1}^{N}{\alpha_{i}*p\left( x \middle| \mu_{i},\Sigma_{i} \right)}} p(Θ=i∣x)=∑i=1Nαi∗p(x∣μi,Σi)αi∗p(x∣μi,Σi)

为样本 x x x由第 i i i个高斯成分生成的后验概率, p ( y = j ∣ Θ = i , x ) p\left( y = j \middle| \Theta = i,x \right) p(y=j∣Θ=i,x)为 x x x由第 i i i个高斯成分生成且其类别为 j j j的概率

给定有标记样本集 D l = { ( x 1 , y 1 ) , ( x 2 , y 2 ) , … , ( x l , y l ) } D_{l} = \left\{ \left( x_{1},y_{1} \right),\left( x_{2},y_{2}\right),\ldots,\left( x_{l},y_{l} \right) \right\} Dl={(x1,y1),(x2,y2),…,(xl,yl)}和未标记样本集 D u = { x l + 1 , x l + 2 , … , x l + u } , l ≪ u , l + u = m D_{u} =\left\{ x_{l + 1},x_{l + 2},\ldots,x_{l + u} \right\},l \ll u,l + u =m Du={xl+1,xl+2,…,xl+u},l≪u,l+u=m,假设所有样本独立同分布,且都是由同一高斯混合模型生成的。用极大似然法来估计高斯混合模型的参数 { α i , μ i , Σ i ∣ 1 ≤ i ≤ N } , D l ∪ D u \left\{ \alpha_{i},\mu_{i},\Sigma_{i} \middle| 1 \leq i \leq N \right\},D_{l} \cup D_{u} {αi,μi,Σi∣1≤i≤N},Dl∪Du的对数似然为

LL ( D l ∪ D u ) = ∑ ( x j , y j ) ∈ D l ln ( ∑ i = 1 N α i ∗ p ( x j ∣ μ i , Σ i ) ∗ p ( y j ∣ Θ = i , x ) ) + ∑ x j ∈ D u ln ( ∑ i = 1 N α i ∗ p ( x j ∣ μ i , Σ i ) ) \text{LL}\left( D_{l} \cup D_{u} \right) = \sum_{\left( x_{j},y_{j} \right) \in D_{l}}^{}{\ln\left( \sum_{i = 1}^{N}{\alpha_{i}*p\left( x_{j} \middle| \mu_{i},\Sigma_{i} \right)}*p\left( y_{j} \middle| \Theta = i,x \right) \right)} + \sum_{x_{j} \in D_{u}}^{}{\ln\left( \sum_{i = 1}^{N}{\alpha_{i}*p\left( x_{j} \middle| \mu_{i},\Sigma_{i} \right)} \right)} LL(Dl∪Du)=(xj,yj)∈Dl∑ln(i=1∑Nαi∗p(xj∣μi,Σi)∗p(yj∣Θ=i,x))+xj∈Du∑ln(i=1∑Nαi∗p(xj∣μi,Σi))

上式由两项组成:基于有标记数据 D l D_{l} Dl的有监督项和基于未标记数据 D u D_{u} Du的无监督项

高斯混合模型参数估计可用EM算法求解,迭代更新式如下:

E步:根据当前模型参数计算未标记样本 x j x_{j} xj属于各高斯混合成分的概率

γ ji = α i ∗ p ( x j ∣ μ i , Σ i ) ∑ i = 1 N α i ∗ p ( x j ∣ μ i , Σ i ) \gamma_{\text{ji}} = \frac{\alpha_{i}*p\left( x_{j} \middle| \mu_{i},\Sigma_{i} \right)}{\sum_{i = 1}^{N}{\alpha_{i}*p\left( x_{j} \middle| \mu_{i},\Sigma_{i} \right)}} γji=∑i=1Nαi∗p(xj∣μi,Σi)αi∗p(xj∣μi,Σi)

M步:基于 γ ji \gamma_{\text{ji}} γji更新模型参数,其中 l i l_{i} li表示第 i i i类的有标记样本数目

μ i = 1 ∑ x j ∈ D u γ ji + l i ( ∑ x j ∈ D u γ ji x j + ∑ ( x j , y j ) ∈ D l ∧ y j = i x j ) \mu_{i} = \frac{1}{\sum_{x_{j} \in D_{u}}^{}{\gamma_{\text{ji}} + l_{i}}}\left( \sum_{x_{j} \in D_{u}}^{}{\gamma_{\text{ji}}x_{j}} + \sum_{\left( x_{j},y_{j} \right) \in D_{l} \land y_{j} = i}^{}x_{j} \right) μi=∑xj∈Duγji+li1⎝⎛xj∈Du∑γjixj+(xj,yj)∈Dl∧yj=i∑xj⎠⎞

Σ i = 1 ∑ x j ∈ D u γ ji + l i ( ∑ x j ∈ D u γ ji ( x j − μ i ) ( x j − μ i ) T + ∑ ( x j , y j ) ∈ D l ∧ y j = i ( x j − μ i ) ( x j − μ i ) T ) \Sigma_{i} = \frac{1}{\sum_{x_{j} \in D_{u}}^{}{\gamma_{\text{ji}} + l_{i}}}\left( \sum_{x_{j} \in D_{u}}^{}{\gamma_{\text{ji}}\left( x_{j} - \mu_{i} \right)}\left( x_{j} - \mu_{i} \right)^{T} + \sum_{\left( x_{j},y_{j} \right) \in D_{l} \land y_{j} = i}^{}{\left( x_{j} - \mu_{i} \right)\left( x_{j} - \mu_{i} \right)^{T}} \right) Σi=∑xj∈Duγji+li1⎝⎛xj∈Du∑γji(xj−μi)(xj−μi)T+(xj,yj)∈Dl∧yj=i∑(xj−μi)(xj−μi)T⎠⎞

α i = 1 m ( ∑ x j ∈ D u γ ji + l i ) \alpha_{i} = \frac{1}{m}\left( \sum_{x_{j} \in D_{u}}^{}{\gamma_{\text{ji}} + l_{i}} \right) αi=m1⎝⎛xj∈Du∑γji+li⎠⎞

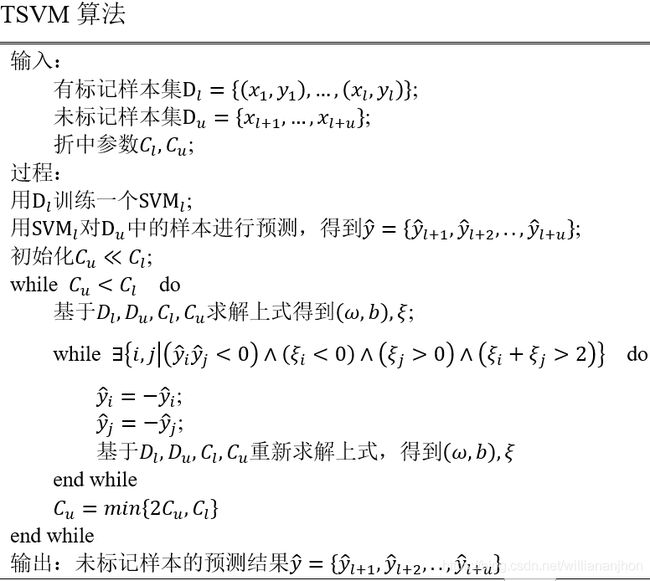

13.3 半监督SVM

半监督支持向量机(Semi-Supervised Support Vector Machine,S3VM)是支持向量机在半监督学习上的推广。

TSVM(Transductive Support Vector Machine)试图考虑对未标记样本进行各种可能的标记指派(label assignment)。

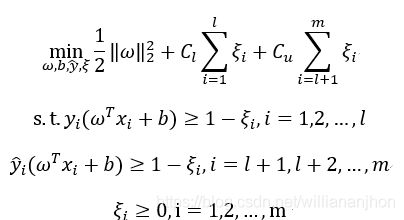

给定 D l = { ( x 1 , y 1 ) , … , ( x l , y l ) } D_{l} = \left\{ \left( x_{1},y_{1} \right),\ldots,\left( x_{l},y_{l} \right) \right\} Dl={(x1,y1),…,(xl,yl)}和 D u = { x l + 1 , … , x l + u } D_{u} = \left\{ x_{l + 1},\ldots,x_{l + u} \right\} Du={xl+1,…,xl+u},其中 y i ∈ { − 1 , + 1 } , l ≪ u , l + u = m y_{i} \in \left\{ - 1, + 1 \right\},l \ll u,l + u = m yi∈{−1,+1},l≪u,l+u=m。TSVM的学校目标是为 D u D_{u} Du中的样本给出预测标记 y ^ = { y ^ l + 1 , y ^ l + 2 , . . , y ^ l + u } , y ^ i ∈ { − 1 , + 1 } \hat{y} = \left\{ {\hat{y}}_{l + 1},{\hat{y}}_{l + 2},..,{\hat{y}}_{l + u} \right\},{\hat{y}}_{i} \in \left\{ - 1, + 1 \right\} y^={y^l+1,y^l+2,..,y^l+u},y^i∈{−1,+1},使得

其中 ( ω , b ) \left( \omega,b \right) (ω,b)确定一个划分超平面; ξ \xi ξ为松弛向量, ξ i ( i = 1 , 2 , … , l ) \xi_{i}\left( i = 1,2,\ldots,l \right) ξi(i=1,2,…,l)对应于有标记样本, ξ i ( i = l + 1 , l + 2 , … , m ) \xi_{i}\left( i = l + 1,l + 2,\ldots,m \right) ξi(i=l+1,l+2,…,m)对应于未标记样本; C l C_{l} Cl和 C u C_{u} Cu是由樱花指定的用于平衡模型复杂度、有标记样本与未标记样本重要程度的折中参数

13.4 图半监督学习

给定 D l = { ( x 1 , y 1 ) , … , ( x l , y l ) } D_{l} = \left\{ \left( x_{1},y_{1} \right),\ldots,\left( x_{l},y_{l} \right) \right\} Dl={(x1,y1),…,(xl,yl)}和 D u = { x l + 1 , x l + 2 , … , x l + u } , l ≪ u , D_{u} = \left\{ x_{l + 1},x_{l + 2},\ldots,x_{l + u} \right\},l \ll u, Du={xl+1,xl+2,…,xl+u},l≪u,

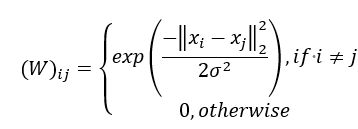

l + u = m l + u = m l+u=m,先基于 D l ∪ D u D_{l} \cup D_{u} Dl∪Du构建一个图 G = ( V , E ) G = \left( V,E \right) G=(V,E),其中结点集 V = { x 1 , … , x l , x l + 1 , … , x l + u } V = \left\{ x_{1},\ldots,x_{l},x_{l + 1},\ldots,x_{l + u} \right\} V={x1,…,xl,xl+1,…,xl+u},边集 E E E可表示为一个亲和矩阵(affinity matrix),长基于高斯函数定义为

其中 i , j ∈ { 1 , 2 , … , m } , σ > 0 i,j \in \left\{ 1,2,\ldots,m \right\},\sigma >0 i,j∈{1,2,…,m},σ>0是用户指定的高斯函数带宽参数。

假定从图 G = ( V , E ) G = \left( V,E \right) G=(V,E)将学得一个实值函数 f : V → R f:V\mathbb{\rightarrow R} f:V→R,其对于的分类规则为 y i = sign ( f ( x i ) ) , y i ∈ { − 1. + 1 } y_{i} = \operatorname{sign}\left( f\left( x_{i} \right) \right),y_{i} \in \left\{ - 1. + 1 \right\} yi=sign(f(xi)),yi∈{−1.+1}。关于 f f f的能量函数

E ( f ) = 1 2 ∑ i = 1 m ∑ j = 1 m ( W ) ij ( f ( x i ) − f ( x j ) ) 2 E\left( f \right) = \frac{1}{2}\sum_{i = 1}^{m}{\sum_{j = 1}^{m}{\left( W \right)_{\text{ij}}\left( f\left( x_{i} \right) - f\left( x_{j} \right) \right)^{2}}} E(f)=21i=1∑mj=1∑m(W)ij(f(xi)−f(xj))2

= 1 2 ( ∑ i = 1 m d i f 2 ( x i ) + ∑ j = 1 m d j f 2 ( x j ) − 2 ∑ i = 1 m ∑ j = 1 m ( W ) ij f ( x i ) f ( x j ) ) = \frac{1}{2}\left( \sum_{i = 1}^{m}{d_{i}f^{2}\left( x_{i} \right)} + \sum_{j = 1}^{m}{d_{j}f^{2}\left( x_{j} \right)} - 2\sum_{i = 1}^{m}{\sum_{j = 1}^{m}\left( W \right)_{\text{ij}}}f\left( x_{i} \right)f\left( x_{j} \right) \right) =21(i=1∑mdif2(xi)+j=1∑mdjf2(xj)−2i=1∑mj=1∑m(W)ijf(xi)f(xj))

= ∑ i = 1 m d i f 2 ( x i ) − ∑ i = 1 m ∑ j = 1 m ( W ) ij f ( x i ) f ( x j ) = f T ( D − W ) f = \sum_{i = 1}^{m}{d_{i}f^{2}\left( x_{i} \right)} - \sum_{i = 1}^{m}{\sum_{j = 1}^{m}\left( W \right)_{\text{ij}}}f\left( x_{i} \right)f\left( x_{j} \right) = \mathbf{f}^{\mathbf{T}}\left( \mathbf{D - W} \right)\mathbf{f} =i=1∑mdif2(xi)−i=1∑mj=1∑m(W)ijf(xi)f(xj)=fT(D−W)f

其中 f = ( f l T ; f u T ) , f l = ( f ( x 1 ) , f ( x 2 ) , … , f ( x l ) ) \mathbf{f}\mathbf{=}\left( \mathbf{f}_{l}^{T}\mathbf{;}\mathbf{f}_{u}^{T} \right)\mathbf{,}\mathbf{f}_{l}\mathbf{=}\left( f\left( x_{1} \right),f\left( x_{2} \right),\ldots,f\left( x_{l} \right) \right) f=(flT;fuT),fl=(f(x1),f(x2),…,f(xl)), f u = ( f ( x l + 1 ) , f ( x l + 2 ) , … , f ( x l + u ) ) \mathbf{f}_{u}\mathbf{=}\left( f\left( x_{l + 1} \right),f\left( x_{l + 2} \right),\ldots,f\left( x_{l + u} \right) \right) fu=(f(xl+1),f(xl+2),…,f(xl+u)),分别为 f f f在有标记样本和未标记样本上的预测结果, D = diag ( d 1 , d 2 , … , d l + u ) \mathbf{D} = \text{di}\text{ag}\left( d_{1},d_{2},\ldots,d_{l + u} \right) D=diag(d1,d2,…,dl+u)是对角矩阵,其对角元素 d i = ∑ j = 1 l + u ( W ) ij d_{i} = \sum_{j = 1}^{l + u}\left( W \right)_{\text{ij}} di=∑j=1l+u(W)ij为矩阵 W \mathbf{W} W的第 i i i行元素之和。

具有最小能量的函数 f f f在有标记样本上满足 f ( x i ) = y i ( i = 1 , 2 , . . , l ) f\left( x_{i} \right) = y_{i}\left( i = 1,2,..,l\right) f(xi)=yi(i=1,2,..,l),在未标记样本上满足 △ f = 0 \mathbf{\bigtriangleup}\mathbf{f}\mathbf{= 0} △f=0,其中 △ = D − W \mathbf{\bigtriangleup}\mathbf{=}\mathbf{D -W} △=D−W为拉普拉斯矩阵(Laplacian matrix),以第l行于第l列为界,采用分块矩阵表示方法 W = [ W ll W lu W ul W uu ] \mathbf{W}\mathbf{=}\begin{bmatrix} \mathbf{W}_{\text{ll}} & \mathbf{W}_{\text{lu}} \\ \mathbf{W}_{\text{ul}} & \mathbf{W}_{\text{uu}} \\ \end{bmatrix} W=[WllWulWluWuu],则上式为

E ( f ) = ( f l T ; f u T ) ( [ D ll D lu D ul D uu ] − [ W ll W lu W ul W uu ] ) [ f l f u ] E\left( f \right) = \left( \mathbf{f}_{l}^{T}\mathbf{;}\mathbf{f}_{u}^{T} \right)\left( \begin{bmatrix} \mathbf{D}_{\text{ll}} & \mathbf{D}_{\text{lu}} \\ \mathbf{D}_{\text{ul}} & \mathbf{D}_{\text{uu}} \\ \end{bmatrix}\mathbf{-}\begin{bmatrix} \mathbf{W}_{\text{ll}} & \mathbf{W}_{\text{lu}} \\ \mathbf{W}_{\text{ul}} & \mathbf{W}_{\text{uu}} \\ \end{bmatrix} \right)\begin{bmatrix} \mathbf{f}_{l} \\ \mathbf{f}_{u} \\ \end{bmatrix} E(f)=(flT;fuT)([DllDulDluDuu]−[WllWulWluWuu])[flfu]

= f l T ( D ll − W ll ) f l − 2 f u T W ul f l + f u T ( D uu − W uu ) f u = \mathbf{f}_{l}^{T}\left( \mathbf{D}_{\text{ll}}\mathbf{-}\mathbf{W}_{\text{ll}} \right)\mathbf{f}_{l}\mathbf{-}2\mathbf{f}_{u}^{T}\mathbf{W}_{\text{ul}}\mathbf{f}_{l}\mathbf{+}\mathbf{f}_{u}^{T}\left( \mathbf{D}_{\text{uu}}\mathbf{-}\mathbf{W}_{\text{uu}} \right)\mathbf{f}_{u} =flT(Dll−Wll)fl−2fuTWulfl+fuT(Duu−Wuu)fu

由 ∂ E ( f ) ∂ f u = 0 \frac{\partial E\left( f \right)}{\partial\mathbf{f}_{u}} = 0 ∂fu∂E(f)=0,得

f u = ( D uu − W uu ) − 1 W ul f l \mathbf{f}_{u}\mathbf{=}\left( \mathbf{D}_{\text{uu}}\mathbf{-}\mathbf{W}_{\text{uu}} \right)^{- 1}\mathbf{W}_{\text{ul}}\mathbf{f}_{l} fu=(Duu−Wuu)−1Wulfl

令

P = D − 1 W = [ D ll − 1 D lu D ul D uu − 1 ] [ W ll W lu W ul W uu ] = [ D ll − 1 W ll D ll − 1 W lu D uu − 1 W ul D uu − 1 W uu ] \mathbf{P} = \mathbf{D}^{- 1}\mathbf{W}\mathbf{=}\begin{bmatrix} \mathbf{D}_{\text{ll}}^{- 1} & \mathbf{D}_{\text{lu}} \\ \mathbf{D}_{\text{ul}} & \mathbf{D}_{\text{uu}}^{- 1} \\ \end{bmatrix}\begin{bmatrix} \mathbf{W}_{\text{ll}} & \mathbf{W}_{\text{lu}} \\ \mathbf{W}_{\text{ul}} & \mathbf{W}_{\text{uu}} \\ \end{bmatrix}\mathbf{=}\begin{bmatrix} \mathbf{D}_{\text{ll}}^{- 1}\mathbf{W}_{\text{ll}} & {\mathbf{D}_{\text{ll}}^{- 1}\mathbf{W}}_{\text{lu}} \\ \mathbf{D}_{\text{uu}}^{- 1}\mathbf{W}_{\text{ul}} & \mathbf{D}_{\text{uu}}^{- 1}\mathbf{W}_{\text{uu}} \\ \end{bmatrix} P=D−1W=[Dll−1DulDluDuu−1][WllWulWluWuu]=[Dll−1WllDuu−1WulDll−1WluDuu−1Wuu]

即 P uu = D uu − 1 W uu , P ul = D uu − 1 W ul \mathbf{P}_{\text{uu}} = \mathbf{D}_{\text{uu}}^{- 1}\mathbf{W}_{\text{uu}}\mathbf{,}\mathbf{P}_{\text{ul}} = \mathbf{D}_{\text{uu}}^{- 1}\mathbf{W}_{\text{ul}} Puu=Duu−1Wuu,Pul=Duu−1Wul,则

f u = ( D uu ( I − D uu − 1 W uu ) ) − 1 W ul f l = ( I − D uu − 1 W uu ) D uu − 1 W ul f l = ( I − P uu ) − 1 P ul f l \mathbf{f}_{u}\mathbf{=}\left( \mathbf{D}_{\text{uu}}\left( \mathbf{I}\mathbf{-}\mathbf{D}_{\text{uu}}^{- 1}\mathbf{W}_{\text{uu}} \right) \right)^{- 1}\mathbf{W}_{\text{ul}}\mathbf{f}_{l}\mathbf{=}\left( \mathbf{I}\mathbf{-}\mathbf{D}_{\text{uu}}^{- 1}\mathbf{W}_{\text{uu}} \right)\mathbf{D}_{\text{uu}}^{- 1}\mathbf{W}_{\text{ul}}\mathbf{f}_{l}\mathbf{=}\left( \mathbf{I -}\mathbf{P}_{\text{uu}} \right)^{\mathbf{-}1}\mathbf{P}_{\text{ul}}\mathbf{f}_{l} fu=(Duu(I−Duu−1Wuu))−1Wulfl=(I−Duu−1Wuu)Duu−1Wulfl=(I−Puu)−1Pulfl

定义一个 ( l + u ) × ∣ Y ∣ \left( l + u \right) \times \left| \mathcal{Y} \right| (l+u)×∣Y∣的非负标记矩阵 F = ( F 1 T , F 2 T , … , F l + u T ) T F = \left( F_{1}^{T},F_{2}^{T},\ldots,F_{l + u}^{T} \right)^{T} F=(F1T,F2T,…,Fl+uT)T,其中第 i i i行元素 F i = ( ( F ) i 1 , ( F ) i 2 , … , ( F ) i ∣ Y ∣ ) F_{i} = \left( \left( F \right)_{i1},\left( F \right)_{i2},\ldots,\left( F \right)_{i\left| \mathcal{Y} \right|} \right) Fi=((F)i1,(F)i2,…,(F)i∣Y∣)为示例 x i x_{i} xi的标记向量,相应的分类规则为

对 i = 1 , 2 , … , m , j = 1 , 2 , … , ∣ Y ∣ i = 1,2,\ldots,m,j = 1,2,\ldots,\left| \mathcal{Y} \right| i=1,2,…,m,j=1,2,…,∣Y∣,将F初始化为

F ( 0 ) = ( Y ) ij = { 1 , i f ( 1 ≤ i ≤ l ) ∧ ( y i = j ) 0 , o t h e r w i s e F\left( 0 \right) = \left( Y \right)_{\text{ij}} = \left\{ \begin{matrix} 1,if\left( 1 \leq i \leq l \right) \land \left( y_{i} = j \right) \\ 0,otherwise \\ \end{matrix} \right.\ F(0)=(Y)ij={1,if(1≤i≤l)∧(yi=j)0,otherwise

基于W构造一个传播矩阵 S = D − 1 2 W D − 1 2 S = D^{- \frac{1}{2}}WD^{- \frac{1}{2}} S=D−21WD−21,其中 D − 1 2 = diag ( 1 d 1 , 1 d 2 , … , 1 d l + u ) D^{- \frac{1}{2}} = \text{diag}\left( \frac{1}{\sqrt{d_{1}}},\frac{1}{\sqrt{d_{2}}},\ldots,\frac{1}{\sqrt{d_{l + u}}} \right) D−21=diag(d11,d21,…,dl+u1),有迭代计算式

F ( t + 1 ) = α S F ( t ) + ( 1 − α ) Y F\left( t + 1 \right) = \alpha SF\left( t \right) + \left( 1 - \alpha \right)Y F(t+1)=αSF(t)+(1−α)Y

其中 α ∈ ( 0 , 1 ) \alpha \in \left( 0,1 \right) α∈(0,1)为用户指定的参数,用于对标记传播 SF ( t ) \text{SF}\left( t \right) SF(t)与初始化项Y的重要性进行折中,上式迭代至收敛可得

![]()

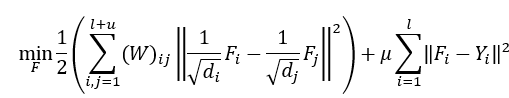

该算法对应于正则化框架

其中 μ > 0 \mu > 0 μ>0为正则化参数

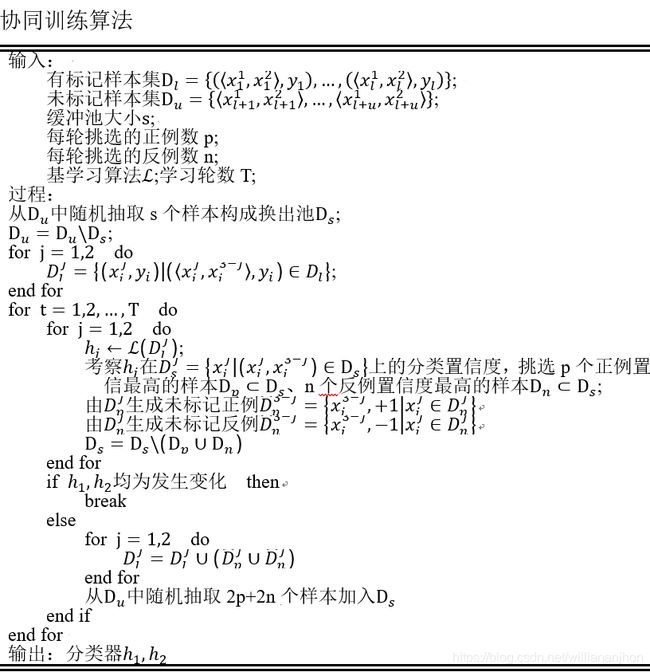

13.5 基于分歧的方法

基于分歧的方法(disagreement-based methods)使用多学习器,而学习器直接的分歧对未标记数据的利用至关重要。

协同训练正是很好地利用了多视图的相容互补性。

充分:每个视图都包含足以产生最优学习器的信息。

13.6 半监督聚类

聚类任务中获得监督信息的类型,第一种类型是必连和勿连约束;第二种类型的监督信息则是少量的有标记样本

约束k均值算法是利用第一类监督信息。给定样本集 D = { x 1 , x 2 , … , x m } D = \left\{ x_{1},x_{2},\ldots,x_{m} \right\} D={x1,x2,…,xm}以及必连关系集合 M \mathcal{M} M和勿连关系集合 C , ( x i , x j ) ∈ M \mathcal{C,}\left( x_{i},x_{j} \right)\mathcal{\in M} C,(xi,xj)∈M表示 x i x_{i} xi和 x j x_{j} xj必属于同簇, ( x i , x j ) ∈ C \left( x_{i},x_{j} \right) \in \mathcal{C} (xi,xj)∈C表示 x i x_{i} xi和 x j x_{j} xj必不属于同簇。

给定样本集 D = { x 1 , x 2 , … , x m } D = \left\{ x_{1},x_{2},\ldots,x_{m} \right\} D={x1,x2,…,xm},假定少量的有标记样本集为 S = ⋃ j = 1 k S j ⊂ D S = \bigcup_{j = 1}^{k}{S_{j} \subset D} S=⋃j=1kSj⊂D,其中 S j ≠ ∅ S_{j} \neq \varnothing Sj̸=∅为隶属第 j j j个聚类簇的样本。