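Handwritten Digit Recognition with sklearn: Finding the Learning Rate and Penalty Coefficient (A Recommended Beginner Machine Learning Example, Part 1)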

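Theory on its own can feel dry and tedious. So how are the learning rate and the penalty coefficient actually chosen? A practical approach is enumeration: score every combination of candidate values with cross-validation, then keep the combination with the highest score as the model's parameters.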
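For reference, the scikit-learn documentation for `SGDClassifier` describes the regularized objective and the per-sample SGD update roughly as below. Here $L$ is the log loss, $R(w)=\tfrac12\lVert w\rVert_2^2$ is the L2 penalty, $\alpha$ is the penalty coefficient (`alpha`), and $\eta$ is the constant learning rate (`eta0`); for the 10 digit classes, one such binary classifier is trained per class (one-vs-all). The enumeration then picks the pair with the highest mean accuracy over $k$ cross-validation folds:

$$E(w,b) = \frac{1}{n}\sum_{i=1}^{n} L\big(w^\top x_i + b,\ y_i\big) + \alpha\, R(w)$$

$$w \leftarrow w - \eta\left(\alpha\,\frac{\partial R(w)}{\partial w} + \frac{\partial L\big(w^\top x_i + b,\ y_i\big)}{\partial w}\right)$$

$$(\eta^*, \alpha^*) = \arg\max_{\eta,\ \alpha}\ \frac{1}{k}\sum_{j=1}^{k} \operatorname{acc}_j(\eta, \alpha)$$

where $\operatorname{acc}_j(\eta,\alpha)$ is the fraction of correctly classified samples in validation fold $j$.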

1. Loading the handwritten digits dataset

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
import matplotlib.pyplot as plt

def load_data():
    data = load_digits()
    x, y = data.data, data.target
    # Split into training and test sets (70% / 30%)
    train_x, test_x, train_y, test_y = train_test_split(x, y, test_size=0.3, random_state=10)
    # Standardize the features: fit the scaler on the training set only,
    # then apply the same transform to the test set
    ss = StandardScaler()
    ss.fit(train_x)
    train_x = ss.transform(train_x)
    test_x = ss.transform(test_x)
    return train_x, test_x, train_y, test_y
```

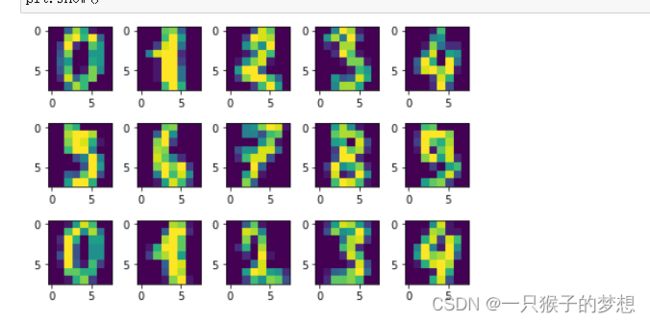
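A quick sanity check of what `load_data()` produces may help. This is a minimal sketch, assuming a recent scikit-learn; it repeats the same split and scaling steps outside the function so the shapes can be printed directly. The 30% test split of 1797 samples leaves 1257 for training and 540 for testing.

```python
# Sketch: inspect the digits dataset and the 70/30 split used in load_data()
import numpy as np
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

digits = load_digits()
x, y = digits.data, digits.target
print(x.shape, y.shape)          # (1797, 64) (1797,)
print(np.unique(y))              # [0 1 2 3 4 5 6 7 8 9]

train_x, test_x, train_y, test_y = train_test_split(x, y, test_size=0.3, random_state=10)
print(train_x.shape, test_x.shape)   # (1257, 64) (540, 64)

# The scaler is fit on the training split only, so no test-set statistics leak in
ss = StandardScaler().fit(train_x)
train_x_std = ss.transform(train_x)
test_x_std = ss.transform(test_x)

# Every standardized training column now has (numerically) zero mean
print(np.abs(train_x_std.mean(axis=0)).max())
```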

With the data prepared, we can display a few samples to see what the images look like:

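```python
def show_img(X):
    # Input shape is (-1, 64); each row is one 8 x 8 image, flattened
    images = X.reshape(-1, 8, 8)
    # Build a 3 x 5 grid of subplots and draw the first 15 images
    fig, axi = plt.subplots(3, 5)
    for i, ax in enumerate(axi.flat):
        img = images[i]
        ax.imshow(img, cmap='gray')
        ax.set(xticks=[], yticks=[])  # hide the axis ticks
    plt.tight_layout()
    plt.show()
```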
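The `reshape(-1, 8, 8)` in `show_img` works because each row of `data` is an 8 x 8 image flattened row by row. `load_digits()` also exposes the unflattened images as the `images` attribute, so the reshape can be checked directly. A small sketch:

```python
import numpy as np
from sklearn.datasets import load_digits

digits = load_digits()
# data holds flattened 64-pixel rows; images holds the same pixels as 8 x 8 arrays
print(digits.data.shape, digits.images.shape)   # (1797, 64) (1797, 8, 8)

# Reshaping the flat rows gives back exactly the 8 x 8 images
restored = digits.data.reshape(-1, 8, 8)
print(np.array_equal(restored, digits.images))  # True

# Raw pixel intensities are small integers (0..16); print one sample as a grid
print("label:", digits.target[0])
print(restored[0].astype(int))
```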

Next, search for the best learning rate and penalty coefficient:

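```python
import numpy as np
from sklearn.linear_model import SGDClassifier
from sklearn.model_selection import KFold

def model_fit(X, y, k=5):
    learning_rates = [0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1, 3]
    penalties = [0, 0.03, 0.01, 0.1, 0.3, 1, 3]  # penalty coefficients (alpha)
    all_models = []
    for learning_rate in learning_rates:
        for penalty in penalties:
            print('Training model: learning rate = %s, regularization = %s' % (learning_rate, penalty))
            # Logistic regression trained with SGD
            # ('log_loss' was called 'log' before scikit-learn 1.1)
            model_score = []
            # Sample the folds with k-fold cross-validation
            kf = KFold(n_splits=k, shuffle=True, random_state=20)
            model = SGDClassifier(loss='log_loss', learning_rate='constant', penalty='l2',
                                  eta0=learning_rate, alpha=penalty)
            for train_idx, dev_idx in kf.split(X):
                x_train, x_dev = X[train_idx], X[dev_idx]
                y_train, y_dev = y[train_idx], y[dev_idx]
                model.fit(x_train, y_train)       # train on k-1 folds
                s = model.score(x_dev, y_dev)     # accuracy on the held-out fold
                model_score.append(s)
            # Record the mean validation accuracy for this (learning rate, penalty) pair
            all_models.append([np.mean(model_score), learning_rate, penalty])
    # Lists sort by their first element, so the highest mean score comes first
    print("Best model:", sorted(all_models, reverse=True)[0])
```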

Test run:

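```python
from sklearn.linear_model import SGDClassifier

if __name__ == "__main__":
    train_x, test_x, train_y, test_y = load_data()
    print(train_x.shape)
    print(train_y.shape)
    # model_fit(train_x, train_y)  # uncomment to run the grid search
    # Train with a fixed learning rate (eta0) and penalty coefficient (alpha)
    model = SGDClassifier(loss='log_loss', penalty='l2', learning_rate="constant", alpha=0.01, eta0=0.01)
    model.fit(train_x, train_y)
    y_pred = model.predict(test_x)
    print(test_y)
    print(y_pred)
```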

Full source code:

```python
# -*- coding: utf-8 -*-
"Model regularization and hyperparameter search"

# 1. Import the required packages
import numpy as np
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split, KFold
from sklearn.preprocessing import StandardScaler
from sklearn.linear_model import SGDClassifier

# 2. Split the data into training and test sets
def load_data():
    data = load_digits()
    x, y = data.data, data.target
    train_x, test_x, train_y, test_y = train_test_split(x, y, test_size=0.3, random_state=20)
    # 3. Standardize the data (fit the scaler on the training set only)
    ss = StandardScaler()
    ss.fit(train_x)
    train_x = ss.transform(train_x)
    test_x = ss.transform(test_x)
    return train_x, test_x, train_y, test_y

# 4. Cross-validate the models to find the best learning rate and regularization parameter
def model_fit(x, y, k=5):
    learning_rates = [0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1, 3]
    penalties = [0, 0.03, 0.01, 0.1, 0.3, 1, 3]
    all_models = []
    for learning_rate in learning_rates:
        for penalty in penalties:
            print('Training model: learning rate = %s, regularization = %s' % (learning_rate, penalty))
            # Logistic regression trained with SGD ('log_loss' was called 'log' before scikit-learn 1.1)
            model_score = []
            model = SGDClassifier(loss='log_loss', penalty='l2', learning_rate='constant',
                                  eta0=learning_rate, alpha=penalty)
            kf = KFold(n_splits=k, shuffle=True, random_state=10)
            for train_idx, dev_idx in kf.split(x):
                x_train, x_dev = x[train_idx], x[dev_idx]
                y_train, y_dev = y[train_idx], y[dev_idx]
                model.fit(x_train, y_train)
                s = model.score(x_dev, y_dev)
                model_score.append(s)
            all_models.append([np.mean(model_score), learning_rate, penalty])
    print('Best model:', sorted(all_models, reverse=True)[0])

if __name__ == "__main__":
    train_x, test_x, train_y, test_y = load_data()
    print(train_x.shape)
    print(train_y.shape)
    # model_fit(train_x, train_y)
    model = SGDClassifier(loss='log_loss', penalty='l2', learning_rate="constant", alpha=0.01, eta0=0.01)
    model.fit(train_x, train_y)
    y_pred = model.predict(test_x)
    print(test_y)
    print(y_pred)
```