caffe源码阅读6-vision_layers.hpp+各cpp

vision_layers.hpp:

ConvolutionLayer类,CuDNNConvolutionLayer类;

Im2colLayer类;

LRNLayer类;

PoolingLayer类,CuDNNPoolingLayer类。

在这个文件中一共包含了上面的4种层。因为在卷积的时候,caffe为了加速处理,使用了小技巧im2col,所以我们先来看看im2colLayer:

1. im2colLayer:

1.1. 原理介绍:

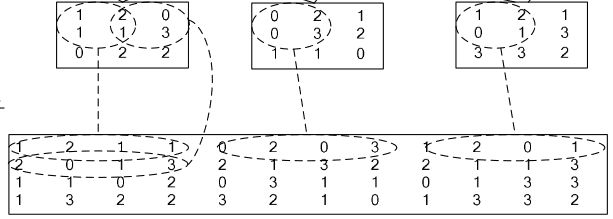

用两个图来进行说明:

如上图,相当于是把很多个小矩阵行化之后合并成一个大矩阵。

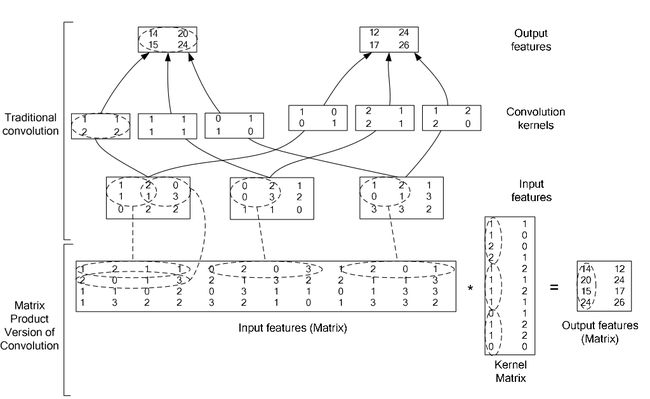

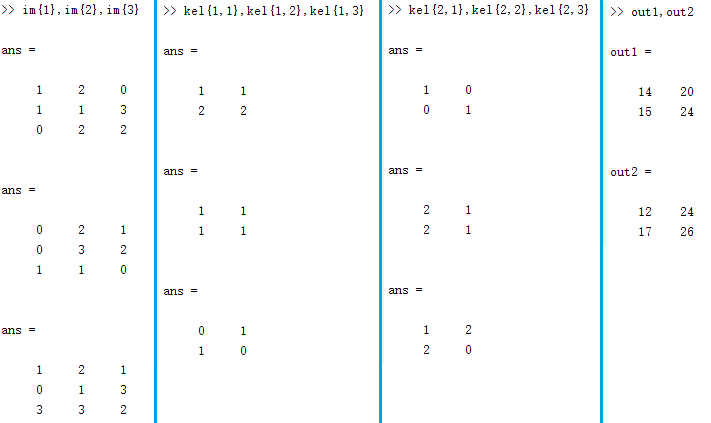

这个图演示了卷积的过程。可能稍微有些看不太明白,我用matlab简单的演示一下:

clear,clc

im = {[1,2,0;1,1,3;0,2,2;],[0,2,1;0,3,2;1,1,0;],[1,2,1;0,1,3;3,3,2]};

kel = {[1,1;2,2;],[1,1;1,1;],[0,1;1,0;];[1,0;0,1;],[2,1;2,1;],[1,2;2,0;]};

out1 = zeros(2,2);

out2 = zeros(2,2);

for i = 1:2

for j = 1:2

for k = 1:3

out1(i,j) = out1(i,j) + sum(sum(kel{1,k}.*im{k}(i:i+1,j:j+1)));

out2(i,j) = out2(i,j) + sum(sum(kel{2,k}.*im{k}(i:i+1,j:j+1)));

end

end

end

out1

out2上图中一共3个输入矩阵,6个卷积核。以上的代码演示的是传统卷积部分,也就是图片的上半部分:

然后,下半部分的卷积过程应该就好理解了。那么其实我们可以想象得到,两种方式做的乘法加法次数应该都是相同的,为什么下面版本的卷积会起到加速的作用呢?还多了一个转换的过程多麻烦啊!我暂时的理解是:这个应该与计算的多级缓存有关系,弄成一个大矩阵之后,换页的次数应该会大大降低,从而提高了速度。(后来发现还有另外一个好处,以后分析道全连接层的代码时会发现跟卷积层很像,卷积层的计算其实可以就看成一种局部全连接层嘛,而经过im2col转换之后,就变得和全连接层的计算一样了)

1.2. 源码分析:

A helper for image operations that rearranges image regions into column vectors. Used by ConvolutionLayer to perform convolution by matrix multiplication.

1.2.1. 属性变量:

int kernel_h_, kernel_w_;

int stride_h_, stride_w_;

int channels_;

int height_, width_;

int pad_h_, pad_w_;对跑过caffe的人来说,这几个变量并不陌生吧。因为在配置文件(例如:train_val.prototxt)中常常见到这几个东西。但是我们一般可能设置的都是单个值,因为我们一般使用的都是方形。从这里来看,原来可以使用矩形的。其实这些东西在caffe.proto中也可以看得到。

1.2.2. 构造函数:

explicit Im2colLayer(const LayerParameter& param)

: Layer(param) {}

virtual void LayerSetUp(const vector*>& bottom,

vector*>* top);

virtual void Reshape(const vector*>& bottom,

vector*>* top); 虽然这里只有一个是构造函数, Im2colLayer()。感觉这三个放在这里一起介绍合适一些。

在im2col_layer.cpp中找到对应的实现。

其中Im2colLayer()直接继承使用了Layer工厂类中的Layer()。

LayerSetUp()的实现如下:

template

void Im2colLayer::LayerSetUp(const vector*>& bottom,

vector*>* top) {

ConvolutionParameter conv_param = this->layer_param_.convolution_param();

CHECK(!conv_param.has_kernel_size() !=

!(conv_param.has_kernel_h() && conv_param.has_kernel_w()))

<< "Filter size is kernel_size OR kernel_h and kernel_w; not both";

CHECK(conv_param.has_kernel_size() ||

(conv_param.has_kernel_h() && conv_param.has_kernel_w()))

<< "For non-square filters both kernel_h and kernel_w are required.";

CHECK((!conv_param.has_pad() && conv_param.has_pad_h()

&& conv_param.has_pad_w())

|| (!conv_param.has_pad_h() && !conv_param.has_pad_w()))

<< "pad is pad OR pad_h and pad_w are required.";

CHECK((!conv_param.has_stride() && conv_param.has_stride_h()

&& conv_param.has_stride_w())

|| (!conv_param.has_stride_h() && !conv_param.has_stride_w()))

<< "Stride is stride OR stride_h and stride_w are required.";

if (conv_param.has_kernel_size()) {

kernel_h_ = kernel_w_ = conv_param.kernel_size();

} else {

kernel_h_ = conv_param.kernel_h();

kernel_w_ = conv_param.kernel_w();

}

CHECK_GT(kernel_h_, 0) << "Filter dimensions cannot be zero.";

CHECK_GT(kernel_w_, 0) << "Filter dimensions cannot be zero.";

if (!conv_param.has_pad_h()) {

pad_h_ = pad_w_ = conv_param.pad();

} else {

pad_h_ = conv_param.pad_h();

pad_w_ = conv_param.pad_w();

}

if (!conv_param.has_stride_h()) {

stride_h_ = stride_w_ = conv_param.stride();

} else {

stride_h_ = conv_param.stride_h();

stride_w_ = conv_param.stride_w();

}

} 该方法函数首先调用的是:layer_param_.convolution_param()

还记得在Layer工厂类中的属性变量就有layer_param_吗?其定义如下:

LayerParameter layer_param_;那么LayerParameter是怎样的一个类型呢?可以在caffe.proto中找到(详见: caffe源码阅读4-layer.hpp)

那么这个类中的方法convolution_param()是个什么东西?

从上面的源码返回类型来看,返回值是ConvolutionParameter类型的:

// Message that stores parameters used by ConvolutionLayer

message ConvolutionParameter {

optional uint32 num_output = 1; // The number of outputs for the layer

optional bool bias_term = 2 [default = true]; // whether to have bias terms

// Pad, kernel size, and stride are all given as a single value for equal

// dimensions in height and width or as Y, X pairs.

optional uint32 pad = 3 [default = 0]; // The padding size (equal in Y, X)

optional uint32 pad_h = 9 [default = 0]; // The padding height

optional uint32 pad_w = 10 [default = 0]; // The padding width

optional uint32 kernel_size = 4; // The kernel size (square)

optional uint32 kernel_h = 11; // The kernel height

optional uint32 kernel_w = 12; // The kernel width

optional uint32 group = 5 [default = 1]; // The group size for group conv

optional uint32 stride = 6 [default = 1]; // The stride (equal in Y, X)

optional uint32 stride_h = 13; // The stride height

optional uint32 stride_w = 14; // The stride width

optional FillerParameter weight_filler = 7; // The filler for the weight

optional FillerParameter bias_filler = 8; // The filler for the bias

enum Engine {

DEFAULT = 0;

CAFFE = 1;

CUDNN = 2;

}

optional Engine engine = 15 [default = DEFAULT];

}所以 convolution_param()差不多相当于返回了本层参数的详细信息。

LayerSetUp()中接着就是检测本层初始化的时候,该有的属性变量是否都具有,是否正确,例如需要包含kernel_size的话,就不用kernel_h和kernel_w了。

然后就开始对这些属性变量进行赋值。有点奇怪的是,LayerSetUp()代码中根本没有使用参数const vector

下来来看看Reshape()函数:

template

void Im2colLayer::Reshape(const vector*>& bottom,

vector*>* top) {

channels_ = bottom[0]->channels();

height_ = bottom[0]->height();

width_ = bottom[0]->width();

(*top)[0]->Reshape(

bottom[0]->num(), channels_ * kernel_h_ * kernel_w_,

(height_ + 2 * pad_h_ - kernel_h_) / stride_h_ + 1,

(width_ + 2 * pad_w_ - kernel_w_) / stride_w_ + 1);

}

该函数实现的是将本层的图像(或者说矩阵吧)大小改成和bottom一样。将top的也修改的和bottom一致,不过是做了变换之后的,与卷积核大小,步长等等有关。

1.2.3. 前馈反馈函数:

vision_layers.hpp中对这里的声明是:

protected:

virtual void Forward_cpu(const vector*>& bottom,

vector*>* top);

virtual void Forward_gpu(const vector*>& bottom,

vector*>* top);

virtual void Backward_cpu(const vector*>& top,

const vector& propagate_down, vector*>* bottom);

virtual void Backward_gpu(const vector*>& top,

const vector& propagate_down, vector*>* bottom); 而在im2col_layer.cpp中的实现是:

template

void Im2colLayer::Forward_cpu(const vector*>& bottom,

vector*>* top) {

const Dtype* bottom_data = bottom[0]->cpu_data();

Dtype* top_data = (*top)[0]->mutable_cpu_data();

for (int n = 0; n < bottom[0]->num(); ++n) {

im2col_cpu(bottom_data + bottom[0]->offset(n), channels_, height_,

width_, kernel_h_, kernel_w_, pad_h_, pad_w_,

stride_h_, stride_w_, top_data + (*top)[0]->offset(n));

}

}

template

void Im2colLayer::Backward_cpu(const vector*>& top,

const vector& propagate_down, vector*>* bottom) {

const Dtype* top_diff = top[0]->cpu_diff();

Dtype* bottom_diff = (*bottom)[0]->mutable_cpu_diff();

for (int n = 0; n < top[0]->num(); ++n) {

col2im_cpu(top_diff + top[0]->offset(n), channels_, height_, width_,

kernel_h_, kernel_w_, pad_h_, pad_w_,

stride_h_, stride_w_, bottom_diff + (*bottom)[0]->offset(n));

}

} 这两个代码看起来很简单,因为具体的操作过程都在 im2col_cpu()和 col2im_cpu()中。这里有没有感觉到有点奇怪?奇怪的有两个地方:

1 为什么只有cpu的计算呢?不应该也有gpu的计算吗?难道这里的计算不需要gpu?其实不然,在im2col_layer.cu中实现了gpu的部分,和这里cpu的代码基本长得一样。只是调用的是im2col_gpu()和col2im_gpu()。

2 为什么只对bottom[0]和top[0]操作呢?不应该可能有多个bottom和多个top吗?呃,这里我也懵了,先不管,继续往后面看吧。

然后关于im2col_layer中的其余几个函数,呃,暂时看上去没有什么特别的用处一样。

1.3 im2col, col2im的实现

在im2col.hpp+cpp中,一共包含4个函数:

#ifndef _CAFFE_UTIL_IM2COL_HPP_

#define _CAFFE_UTIL_IM2COL_HPP_

namespace caffe {

template

void im2col_cpu(const Dtype* data_im, const int channels,

const int height, const int width, const int kernel_h, const int kernel_w,

const int pad_h, const int pad_w, const int stride_h,

const int stride_w, Dtype* data_col);

template

void col2im_cpu(const Dtype* data_col, const int channels,

const int height, const int width, const int patch_h, const int patch_w,

const int pad_h, const int pad_w, const int stride_h,

const int stride_w, Dtype* data_im);

template

void im2col_gpu(const Dtype* data_im, const int channels,

const int height, const int width, const int kernel_h, const int kernel_w,

const int pad_h, const int pad_w, const int stride_h,

const int stride_w, Dtype* data_col);

template

void col2im_gpu(const Dtype* data_col, const int channels,

const int height, const int width, const int patch_h, const int patch_w,

const int pad_h, const int pad_w, const int stride_h,

const int stride_w, Dtype* data_im);

} // namespace caffe

#endif // CAFFE_UTIL_IM2COL_HPP_

gpu和cpu的实现基本一样,所以我们只看cpu的实现吧:

1.3.1 im2col_cpu():

template

void im2col_cpu(const Dtype* data_im, const int channels,

const int height, const int width, const int kernel_h, const int kernel_w,

const int pad_h, const int pad_w,

const int stride_h, const int stride_w,

Dtype* data_col) {

int height_col = (height + 2 * pad_h - kernel_h) / stride_h + 1;

int width_col = (width + 2 * pad_w - kernel_w) / stride_w + 1;

int channels_col = channels * kernel_h * kernel_w;

for (int c = 0; c < channels_col; ++c) {

int w_offset = c % kernel_w;

int h_offset = (c / kernel_w) % kernel_h;

int c_im = c / kernel_h / kernel_w;

for (int h = 0; h < height_col; ++h) {

for (int w = 0; w < width_col; ++w) {

int h_pad = h * stride_h - pad_h + h_offset;

int w_pad = w * stride_w - pad_w + w_offset;

if (h_pad >= 0 && h_pad < height && w_pad >= 0 && w_pad < width)

data_col[(c * height_col + h) * width_col + w] =

data_im[(c_im * height + h_pad) * width + w_pad];

else

data_col[(c * height_col + h) * width_col + w] = 0;

}

}

}

} 原理已经在最开始介绍过了,那么这里的代码读起来应该不是那么费劲。

这里是将输入数据data_im经过im2col之后,将新的数据存入data_col中。至于说data_col的通道数,高,宽的计算,自己稍微推理一下吧。

需要注意的是:pad_h乘了2,pad_w也乘了2,我们首先需要明确pad_h的意思是在输入数据的高度方向两边各增加pad_h个单位长度,所以乘了2。那么pad_w也是一样的道理。

下面我画一个图来便于理解:(参考https://www.zhihu.com/question/28385679)

在源码当中还有一个小细节:

// Explicit instantiation

template void im2col_cpu(const float* data_im, const int channels,

const int height, const int width, const int kernel_h, const int kernel_w,

const int pad_h, const int pad_w, const int stride_h,

const int stride_w, float* data_col);

template void im2col_cpu(const double* data_im, const int channels,

const int height, const int width, const int kernel_h, const int kernel_w,

const int pad_h, const int pad_w, const int stride_h,

const int stride_w, double* data_col); 扎眼一看,还以为两个长一样,其实不然。

第一个的data_im, data_col是float*类型的;第一个的data_im, data_col是double*类型的;同时也说明了不支持整型。

1.3.2 col2im_cpu():

template

void col2im_cpu(const Dtype* data_col, const int channels,

const int height, const int width, const int patch_h, const int patch_w,

const int pad_h, const int pad_w,

const int stride_h, const int stride_w,

Dtype* data_im) {

caffe_set(height * width * channels, Dtype(0), data_im);

int height_col = (height + 2 * pad_h - patch_h) / stride_h + 1;

int width_col = (width + 2 * pad_w - patch_w) / stride_w + 1;

int channels_col = channels * patch_h * patch_w;

for (int c = 0; c < channels_col; ++c) {

int w_offset = c % patch_w;

int h_offset = (c / patch_w) % patch_h;

int c_im = c / patch_h / patch_w;

for (int h = 0; h < height_col; ++h) {

for (int w = 0; w < width_col; ++w) {

int h_pad = h * stride_h - pad_h + h_offset;

int w_pad = w * stride_w - pad_w + w_offset;

if (h_pad >= 0 && h_pad < height && w_pad >= 0 && w_pad < width)

data_im[(c_im * height + h_pad) * width + w_pad] +=

data_col[(c * height_col + h) * width_col + w];

}

}

}

} 有了前面的介绍,加上我画的那个图,反过来的过程就很容易理解了吧。

2 ConvolutionLayer:

param provides ConvolutionParameter convolution_param, with ConvolutionLayer options:

- num_output. The number of filters.

- kernel_size / kernel_h / kernel_w. The filter dimensions, given by kernel_size for square filters or kernel_h and kernel_w for rectangular filters.

- stride / stride_h / stride_w (\b optional, default 1). The filter stride, given by stride_size for equal dimensions or stride_h and stride_w for different strides. By default the convolution is dense with stride 1.

- pad / pad_h / pad_w (\b optional, default 0). The zero-padding for convolution, given by pad for equal dimensions or pad_h and pad_w for different padding. Input padding is computed implicitly instead of actually padding.

- group (\b optional, default 1). The number of filter groups. Group convolution is a method for reducing parameterization by selectively connecting input and output channels. The input and output channel dimensions must be divisible by the number of groups. For group @f$ \geq 1 @f$, the convolutional filters' input and output channels are separated s.t. each group takes 1 / group of the input channels and makes 1 / group of the output channels. Concretely 4 input channels, 8 output channels, and 2 groups separate input channels 1-2 and output channels 1-4 into the first group and input channels 3-4 and output channels 5-8 into the second group.

- bias_term (\b optional, default true). Whether to have a bias.

- engine: convolution has CAFFE (matrix multiplication) and CUDNN (library kernels + stream parallelism) engines.以上是ConvolutionLayer类的源码注释:主要介绍各参数,其中有一个参数需要注意一下:group,如果该参数赋值为2,假设输入的channel有4个,而输出的channel为8,则输出的8个channel中的前4个来源于输入的前2个,输出的后4个来源于输入的后2个。

2.1 原理介绍:

可以参考:

caffe源码阅读(2)卷积层

cnn_tutorial

前馈:y = w*x + b

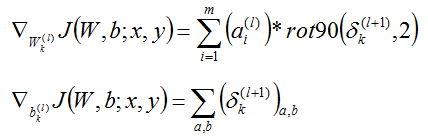

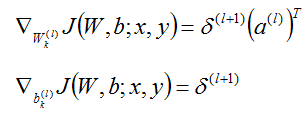

反馈:

卷积层参数传递求解:

但实际上经过im2col之后转换成了全连接的计算方式:(其实卷积层的参数传递计算与全连接层的计算本质上是一样的)

2.2 源码分析:

2.2.1 属性变量:

int kernel_h_, kernel_w_;

int stride_h_, stride_w_;

int num_;

int channels_;

int pad_h_, pad_w_;

int height_, width_;

int group_;

int num_output_;

int height_out_, width_out_;

bool bias_term_;

/// M_ is the channel dimension of the output for a single group, which is the

/// leading dimension of the filter matrix.

int M_;

/// K_ is the dimension of an unrolled input for a single group, which is the

/// leading dimension of the data matrix.

int K_;

/// N_ is the spatial dimension of the output, the H x W, which are the last

/// dimensions of the data and filter matrices.

int N_;

Blob col_buffer_;

Blob bias_multiplier_; 前面一堆就没什么好说的了吧,关键来看看后面的几个:( http://blog.csdn.net/xiaoyezi_1834/article/details/50786363)

M_:相当于是卷积核的数量;

K_:kernel_h × kernel_w,相当于是卷积核元素个数

N_:((height + 2*pad_h – kernel_h)/stride_h+ 1)*((weight +2*pad_w – kernel_w)/stride_w + 1),而不是简单的height×weight

col_buffer_:存储在前面介绍的im2col时的结果

2.2.2 前馈和反馈:

构造函数,SetUp(),Reshpe()这里就不做说明了。直接看前馈和反馈吧:

首先来看前馈函数:

template

void ConvolutionLayer::Forward_cpu(const vector*>& bottom,

vector*>* top) {

for (int i = 0; i < bottom.size(); ++i) {

const Dtype* bottom_data = bottom[i]->cpu_data();

Dtype* top_data = (*top)[i]->mutable_cpu_data();

Dtype* col_data = col_buffer_.mutable_cpu_data();

const Dtype* weight = this->blobs_[0]->cpu_data();

int weight_offset = M_ * K_; // number of filter parameters in a group

int col_offset = K_ * N_; // number of values in an input region / column

int top_offset = M_ * N_; // number of values in an output region / column

for (int n = 0; n < num_; ++n) {

// im2col transformation: unroll input regions for filtering

// into column matrix for multiplication.

im2col_cpu(bottom_data + bottom[i]->offset(n), channels_, height_,

width_, kernel_h_, kernel_w_, pad_h_, pad_w_, stride_h_, stride_w_,

col_data);

int offsetN = (*top)[i]->offset(n);

// Take inner products for groups.

for (int g = 0; g < group_; ++g) {

caffe_cpu_gemm(CblasNoTrans, CblasNoTrans, M_, N_, K_,

(Dtype)1., weight + weight_offset * g, col_data + col_offset * g,

(Dtype)0., top_data + (*top)[i]->offset(n) + top_offset * g);

}

// Add bias.

if (bias_term_) {

caffe_cpu_gemm(CblasNoTrans, CblasNoTrans, num_output_,

N_, 1, (Dtype)1., this->blobs_[1]->cpu_data(),

bias_multiplier_.cpu_data(),

(Dtype)1., top_data + (*top)[i]->offset(n));

}

}

}

} 这个原理已经在上面介绍过了,然后在代码中用到了 im2col_cpu(),这个函数已经在前面介绍过了。接下来代码中先做的事情是:y = y + w * x,最后的if判断是否需要加上偏置,如果要加上,则再进行 y = y + b。

其中调用了caffe_cpu_gemm()来实现代数运算:(源码实现在math_functions.cpp中)

template<>

void caffe_cpu_gemm(const CBLAS_TRANSPOSE TransA,

const CBLAS_TRANSPOSE TransB, const int M, const int N, const int K,

const float alpha, const float* A, const float* B, const float beta,

float* C) {

int lda = (TransA == CblasNoTrans) ? K : M;

int ldb = (TransB == CblasNoTrans) ? N : K;

cblas_sgemm(CblasRowMajor, TransA, TransB, M, N, K, alpha, A, lda, B,

ldb, beta, C, N);

}

template<>

void caffe_cpu_gemm(const CBLAS_TRANSPOSE TransA,

const CBLAS_TRANSPOSE TransB, const int M, const int N, const int K,

const double alpha, const double* A, const double* B, const double beta,

double* C) {

int lda = (TransA == CblasNoTrans) ? K : M;

int ldb = (TransB == CblasNoTrans) ? N : K;

cblas_dgemm(CblasRowMajor, TransA, TransB, M, N, K, alpha, A, lda, B,

ldb, beta, C, N);

} 这里提供了两个版本的caffe_cpu_gemm(),这个时候你会发现,原来代数运算真正的实现者是BLAS:

官方API:

http://www.netlib.org/lapack/explore-html/db/d66/cblas__sgemm_8c_a584e7569aee83c27b2b2bf22ea4f9f23.html#a584e7569aee83c27b2b2bf22ea4f9f23

博客园的介绍:(尽管这里是MKL,其实他们的参数,功能都是一样的,估计实现代码也差不多)

http://www.cnblogs.com/darkknightzh/p/5553336.html

CSDN某人的介绍:(比较详细)

http://blog.csdn.net/cleverysm/article/details/1925549

再来看反馈部分:

template

void ConvolutionLayer::Backward_cpu(const vector*>& top,

const vector& propagate_down, vector*>* bottom) {

const Dtype* weight = NULL;

Dtype* weight_diff = NULL;

if (this->param_propagate_down_[0]) {

weight = this->blobs_[0]->cpu_data();

weight_diff = this->blobs_[0]->mutable_cpu_diff();

caffe_set(this->blobs_[0]->count(), Dtype(0), weight_diff);

}

Dtype* bias_diff = NULL;

if (bias_term_ && this->param_propagate_down_[1]) {

bias_diff = this->blobs_[1]->mutable_cpu_diff();

caffe_set(this->blobs_[1]->count(), Dtype(0), bias_diff);

}

const int weight_offset = M_ * K_;

const int col_offset = K_ * N_;

const int top_offset = M_ * N_;

for (int i = 0; i < top.size(); ++i) {

const Dtype* top_diff = NULL;

// Bias gradient, if necessary.

if (bias_term_ && this->param_propagate_down_[1]) {

top_diff = top[i]->cpu_diff();

for (int n = 0; n < num_; ++n) {

caffe_cpu_gemv(CblasNoTrans, num_output_, N_,

1., top_diff + top[0]->offset(n),

bias_multiplier_.cpu_data(), 1.,

bias_diff);

}

}

if (this->param_propagate_down_[0] || propagate_down[i]) {

if (!top_diff) {

top_diff = top[i]->cpu_diff();

}

Dtype* col_data = col_buffer_.mutable_cpu_data();

Dtype* col_diff = col_buffer_.mutable_cpu_diff();

const Dtype* bottom_data = (*bottom)[i]->cpu_data();

Dtype* bottom_diff = (*bottom)[i]->mutable_cpu_diff();

for (int n = 0; n < num_; ++n) {

// Since we saved memory in the forward pass by not storing all col

// data, we will need to recompute them.

im2col_cpu(bottom_data + (*bottom)[i]->offset(n), channels_, height_,

width_, kernel_h_, kernel_w_, pad_h_, pad_w_,

stride_h_, stride_w_, col_data);

// gradient w.r.t. weight. Note that we will accumulate diffs.

if (this->param_propagate_down_[0]) {

for (int g = 0; g < group_; ++g) {

caffe_cpu_gemm(CblasNoTrans, CblasTrans, M_, K_, N_,

(Dtype)1., top_diff + top[i]->offset(n) + top_offset * g,

col_data + col_offset * g, (Dtype)1.,

weight_diff + weight_offset * g);

}

}

// gradient w.r.t. bottom data, if necessary.

if (propagate_down[i]) {

if (weight == NULL) {

weight = this->blobs_[0]->cpu_data();

}

for (int g = 0; g < group_; ++g) {

caffe_cpu_gemm(CblasTrans, CblasNoTrans, K_, N_, M_,

(Dtype)1., weight + weight_offset * g,

top_diff + top[i]->offset(n) + top_offset * g,

(Dtype)0., col_diff + col_offset * g);

}

// col2im back to the data

col2im_cpu(col_diff, channels_, height_, width_,

kernel_h_, kernel_w_, pad_h_, pad_w_,

stride_h_, stride_w_, bottom_diff + (*bottom)[i]->offset(n));

}

}

}

}

} 该函数最开始的部分对需要计算的参数(权重,偏置)的指针初始化,相当于是指向了需要存储的位置。

for循环,才是真正计算的主体:

其中用到了caffe_cpu_gemv(),可以查看文档,相当于实现了:y = alpha*A*x + beta*y,其中x, y表示向量,而A表示矩阵。

那么for循环的开始处理偏置的问题,根据计算原理,直接将top_diff+top[0]->offset(n)累加到bias_diff中即可。程序中实现的是:

bias_diff = 1.0 * [top_diff+top[0]->offset(n)] * bias_multiplier_.cpu_data() + 1.0 * bias_diff

而bias_mutiplier_在caffe中默认被设置为1,所以相当于是实现了一个累加的过程。

for循环后面就接着处理权重的问题了,这个时候因为可能批量处理时有很多个图,所以又涉及到一个for循环。

根据计算原理,在程序中对应的相当于是:

weight_diff+weight_offset*g = 1.0 * [top_diff+top[i]->offset(n)+top_offset*g] * [col_data+col_offset*g] + 1.0 * [weight_diff+weight_offset*g]

其中的g表示分组处理。

最后将误差传递到低层:(相当于是delta(l) = W * delta(l+1))

在程序中的体现是:col_diff+col_offset*g = 1.0 * [weight + weight_offset*g] * [top_diff + top[i]->offset(n) + top_offset * g] + 0.0 * [col_diff+col_offset*g]

引用caffe源码阅读(2)卷积层的一句话:

卷积运算是先要将kernel旋转180度之后再扫过去的。可以看出Caffe源码是没有这一步的,所以最后学出来的“kernel”实际上是应该还要旋转回来才是正确的卷积核。

CuDNNConvolutionLayer就跳过了,其实现的方式大致和ConvolutionLayer差不多。下面来看LRNLayer

3 LRNLayer:

Normalize the input in a local region across or within feature maps.

3.1 原理介绍:

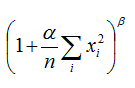

局部相应归一化(local response normalization):每个输入的值都除以:

其中alpha, beta都在源码和配置文件中都有定义;

而n则表示局部相应范围的大小,在源码中使用size_来表达(在配置文件中用local_size),实际上n和size_是一种函数映射关系:(where n is the size of each local region)

如果在通道间计算归一化,则n=size_;

如果是通道内计算归一化,则n=size_*size_;

从配置文件说起,:

一半我们的网络配置文件中的LRN层参数如下:(caffeNet中截取的一段)

那么其中的local_size:(在源码中用size_)layer { name: "norm1" type: "LRN" bottom: "pool1" top: "norm1" lrn_param { local_size: 5 alpha: 0.0001 beta: 0.75 } }1)通道间归一化时表示求和的通道数;

2)通道内归一化时表示求和区间的边长;

默认值为5。

alpha,beta也就是上面公式中的两个参数。

其实在写这个配置文件的时候还有一个可选参数:

norm_region: 选择对相邻通道间归一化还是通道内空间区域归一化,默认为ACROSS_CHANNELS,即通道间归一化;在通道间归一化模式中,局部区域范围在相邻通道间,但没有空间扩展(即尺寸为 local_size x 1 x 1);

在通道内归一化模式中,局部区域在空间上扩展,但只针对独立通道进行(即尺寸为 1 x local_size x local_size);

3.2 源码分析:

3.2.1 属性变量:

int size_;

int pre_pad_;

Dtype alpha_;

Dtype beta_;

int num_;

int channels_;

int height_;

int width_;上面的变量当中,基本都是熟悉的部分。其余的变量在原理介绍中已经说过了。

再来看看别的变量:

// Fields used for normalization ACROSS_CHANNELS

// scale_ stores the intermediate summing results

Blob scale_;

// Fields used for normalization WITHIN_CHANNEL

shared_ptr > split_layer_;

vector*> split_top_vec_;

shared_ptr > square_layer_;

Blob square_input_;

Blob square_output_;

vector*> square_bottom_vec_;

vector*> square_top_vec_;

shared_ptr > pool_layer_;

Blob pool_output_;

vector*> pool_top_vec_;

shared_ptr > power_layer_;

Blob power_output_;

vector*> power_top_vec_;

shared_ptr > product_layer_;

Blob product_input_;

vector*> product_bottom_vec_; 看上去很多东西,但实际上根据我们的配置文件,基本不会用到WITHIN_CHANNEL,所以下面一堆变量暂时不用关心。

3.2.2 构造函数:

template

void LRNLayer::LayerSetUp(const vector*>& bottom,

vector*>* top) {

size_ = this->layer_param_.lrn_param().local_size();

CHECK_EQ(size_ % 2, 1) << "LRN only supports odd values for local_size";

pre_pad_ = (size_ - 1) / 2;

alpha_ = this->layer_param_.lrn_param().alpha();

beta_ = this->layer_param_.lrn_param().beta();

if (this->layer_param_.lrn_param().norm_region() ==

LRNParameter_NormRegion_WITHIN_CHANNEL) {

// Set up split_layer_ to use inputs in the numerator and denominator.

split_top_vec_.clear();

split_top_vec_.push_back(&product_input_);

split_top_vec_.push_back(&square_input_);

LayerParameter split_param;

split_layer_.reset(new SplitLayer(split_param));

split_layer_->SetUp(bottom, &split_top_vec_);

// Set up square_layer_ to square the inputs.

square_bottom_vec_.clear();

square_top_vec_.clear();

square_bottom_vec_.push_back(&square_input_);

square_top_vec_.push_back(&square_output_);

LayerParameter square_param;

square_param.mutable_power_param()->set_power(Dtype(2));

square_layer_.reset(new PowerLayer(square_param));

square_layer_->SetUp(square_bottom_vec_, &square_top_vec_);

// Set up pool_layer_ to sum over square neighborhoods of the input.

pool_top_vec_.clear();

pool_top_vec_.push_back(&pool_output_);

LayerParameter pool_param;

pool_param.mutable_pooling_param()->set_pool(

PoolingParameter_PoolMethod_AVE);

pool_param.mutable_pooling_param()->set_pad(pre_pad_);

pool_param.mutable_pooling_param()->set_kernel_size(size_);

pool_layer_.reset(new PoolingLayer(pool_param));

pool_layer_->SetUp(square_top_vec_, &pool_top_vec_);

// Set up power_layer_ to compute (1 + alpha_/N^2 s)^-beta_, where s is

// the sum of a squared neighborhood (the output of pool_layer_).

power_top_vec_.clear();

power_top_vec_.push_back(&power_output_);

LayerParameter power_param;

power_param.mutable_power_param()->set_power(-beta_);

power_param.mutable_power_param()->set_scale(alpha_);

power_param.mutable_power_param()->set_shift(Dtype(1));

power_layer_.reset(new PowerLayer(power_param));

power_layer_->SetUp(pool_top_vec_, &power_top_vec_);

// Set up a product_layer_ to compute outputs by multiplying inputs by the

// inverse demoninator computed by the power layer.

product_bottom_vec_.clear();

product_bottom_vec_.push_back(&product_input_);

product_bottom_vec_.push_back(&power_output_);

LayerParameter product_param;

EltwiseParameter* eltwise_param = product_param.mutable_eltwise_param();

eltwise_param->set_operation(EltwiseParameter_EltwiseOp_PROD);

product_layer_.reset(new EltwiseLayer(product_param));

product_layer_->SetUp(product_bottom_vec_, top);

}

} 看上去这么大一堆,实际上如果我们忽略if里面的内容,只有很少一部分的初始化过程。因为if里面的一堆设置都是在通道内做归一化,而我们通常是做通道间归一化的,所以暂时也不用关心。

值得注意的是,在最开始对size_做了一个奇偶性的判断,只能够接受奇数!

Reshape()这里就暂时过掉了,基本都是那么回事。

3.2.3 前馈函数:

template

void LRNLayer::Forward_cpu(const vector*>& bottom,

vector*>* top) {

switch (this->layer_param_.lrn_param().norm_region()) {

case LRNParameter_NormRegion_ACROSS_CHANNELS:

CrossChannelForward_cpu(bottom, top);

break;

case LRNParameter_NormRegion_WITHIN_CHANNEL:

WithinChannelForward(bottom, top);

break;

default:

LOG(FATAL) << "Unknown normalization region.";

}

} 后面的前馈反馈函数都涉及到两个模式,我们这里都只看通道间归一化模式:

通道间归一化的前馈函数:

template

void LRNLayer::CrossChannelForward_cpu(

const vector*>& bottom, vector*>* top) {

const Dtype* bottom_data = bottom[0]->cpu_data();

Dtype* top_data = (*top)[0]->mutable_cpu_data();

Dtype* scale_data = scale_.mutable_cpu_data();

// start with the constant value

for (int i = 0; i < scale_.count(); ++i) {

scale_data[i] = 1.;

}

Blob padded_square(1, channels_ + size_ - 1, height_, width_);

Dtype* padded_square_data = padded_square.mutable_cpu_data();

caffe_set(padded_square.count(), Dtype(0), padded_square_data);

Dtype alpha_over_size = alpha_ / size_;

// go through the images

for (int n = 0; n < num_; ++n) {

// compute the padded square

caffe_sqr(channels_ * height_ * width_,

bottom_data + bottom[0]->offset(n),

padded_square_data + padded_square.offset(0, pre_pad_));

// Create the first channel scale

for (int c = 0; c < size_; ++c) {

caffe_axpy(height_ * width_, alpha_over_size,

padded_square_data + padded_square.offset(0, c),

scale_data + scale_.offset(n, 0));

}

for (int c = 1; c < channels_; ++c) {

// copy previous scale

caffe_copy(height_ * width_,

scale_data + scale_.offset(n, c - 1),

scale_data + scale_.offset(n, c));

// add head

caffe_axpy(height_ * width_, alpha_over_size,

padded_square_data + padded_square.offset(0, c + size_ - 1),

scale_data + scale_.offset(n, c));

// subtract tail

caffe_axpy(height_ * width_, -alpha_over_size,

padded_square_data + padded_square.offset(0, c - 1),

scale_data + scale_.offset(n, c));

}

}

// In the end, compute output

caffe_powx(scale_.count(), scale_data, -beta_, top_data);

caffe_mul(scale_.count(), top_data, bottom_data, top_data);

} 改程序前面会涉及到一个变量scale_data,这个数据来源于属性变量中的scale_,该变量初始化在 Reshape()中,相当于是创建了一个跟bottom[0]一样尺寸大小的Blob。

然后接着对scale_data全部赋初值1,注意这里为什么要赋初值为1!!这是因为在原理介绍中的公式括号里面的1.往后看完应该会明白。

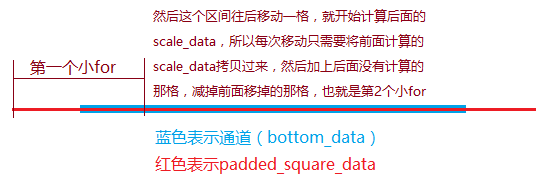

接着创建了一个对象padded_square,它的大小和bottom一样,除了在通道维上比bottom多了size_-1。为什么要多size_-1呢?

还是否记得前面介绍过做局部归一化的时候,每个点要除以一个值(暂时我们设为x_centre),而这个值得计算当中涉及到一个求和,这个求和的范围是以x_centre为中心,边长为size_的区域。如果是对通道间做归一化,那么这个区域相当于可以理解成1维的;如果是通道内做归一化,那么这个区域可以理解成2维的。

因为这里的代码是通道间的,我们就以1维的为例来说明为什么要多size_-1:

通过上面这个图来解释,应该就相当清楚了,图中的蓝色线条相当于是源码中的channels_,红色线条相当于是channels_ + size_ - 1.

源码接着对对象padded_square的数据部分赋初值0。

这里涉及到一些数学函数,在math_function.cpp中能够找到:

templatevoid caffe_set(const int N, const Dtype alpha, Dtype* Y) { if (alpha == 0) { memset(Y, 0, sizeof(Dtype) * N); // NOLINT(caffe/alt_fn) return; } for (int i = 0; i < N; ++i) { Y[i] = alpha; } }

这个就不做说明了吧。template <> void caffe_sqr(const int n, const float* a, float* y) { vsSqr(n, a, y); } template <> void caffe_sqr (const int n, const double* a, double* y) { vdSqr(n, a, y); }

如果光看sqr还真不知道是计算什么,可能是平方,也可能是开根。然后网上也没有找到vsSqr(),不知道是什么库里面的。在Caffe源码(一):math_functions分析中基本将里面的函数全部都讲了,暂时无法考证是否全部正确,就默认他是对的吧。根据这篇博客的介绍,这里是计算平方的,也就是实现了:y = a^2

template <> void caffe_axpy(const int N, const float alpha, const float* X, float* Y) { cblas_saxpy(N, alpha, X, 1, Y, 1); } template <> void caffe_axpy (const int N, const double alpha, const double* X, double* Y) { cblas_daxpy(N, alpha, X, 1, Y, 1); }

可以在BLAS API中查到,这里实现的是:Y = alpha*X + Y (X, Y都是向量,alpha是一个值)templatevoid caffe_copy(const int N, const Dtype* X, Dtype* Y) { if (X != Y) { if (Caffe::mode() == Caffe::GPU) { #ifndef CPU_ONLY // NOLINT_NEXT_LINE(caffe/alt_fn) CUDA_CHECK(cudaMemcpy(Y, X, sizeof(Dtype) * N, cudaMemcpyDefault)); #else NO_GPU; #endif } else { memcpy(Y, X, sizeof(Dtype) * N); // NOLINT(caffe/alt_fn) } } } template void caffe_copy (const int N, const int* X, int* Y); template void caffe_copy (const int N, const unsigned int* X, unsigned int* Y); template void caffe_copy (const int N, const float* X, float* Y); template void caffe_copy (const int N, const double* X, double* Y);

这里有几种copy函数,相当于是将X复制到Y里面去。

template <> void caffe_powx(const int n, const float* a, const float b, float* y) { vsPowx(n, a, b, y); } template <> void caffe_powx (const int n, const double* a, const double b, double* y) { vdPowx(n, a, b, y); }

相当于是计算幂:y = a^b,其中y, a是向量,b是一个值template <> void caffe_mul(const int n, const float* a, const float* b, float* y) { vsMul(n, a, b, y); } template <> void caffe_mul (const int n, const double* a, const double* b, double* y) { vdMul(n, a, b, y); }

相当于是向量乘法,按元素乘,也就是:y[i] = a[i]*b[i]

涉及到的这个函数介绍完了,再来接着看前馈函数CrossChannelForward_cpu():

计算alpha_over_size好理解,也就是原理介绍中的alpha/n。

接下来的大for循环:

将bottom中的数据平方之后存入padded_square_data中;相当于是原理介绍中的x_i^2

接下来的两个for循环,想画图来说明,还真不知道怎么画合适:

尽量用语言描述清楚吧:

第一个for注释上说的是计算第一个通道的scale:

我将大for看完之后,才明白scale是原理介绍中公式部分的括号部分,也就是(1 + alpha/n * sum(x_i^2))。

而scale已经赋值为1了,所以剩下的部分就是累加 “加号” 后面的部分。

大for遍历的是图像个数,第一个小for遍历的是size_也就是原理介绍中的求和范围大小。

需要注意的是,这里的计算直接作用于每个通道的所有数据(height_*weight_),最后累加到scale_data里面,注意scale_data的尺寸大小和bottom大小是一样的,所以我这里的描述为了不引起歧义,再啰嗦一点,尽管是对每个通道的所有数据都操作了,但是也分别累加到scale_data对应的位置的。

第二个for:有了前面的那张图 + 前面第一个for的解释,我想应该能够理解了。

经过这个大for之后,相当于是把原理介绍中的括号部分计算完了。

这个前馈函数的最后两行:第一个是计算幂,注意参数是 -beta。为什么要用负的呢?还记得原理介绍中是这样说的:“每个输入的值都除以”,所以为了将除转换成乘,就用负的幂。

最后一行也就是实现乘法,也就是原始数据乘以scale_data。

哎,终于把这个前馈弄完了,下面接着研究它的反馈函数。

3.2.4 反馈函数:

template

void LRNLayer::Backward_cpu(const vector*>& top,

const vector& propagate_down, vector*>* bottom) {

switch (this->layer_param_.lrn_param().norm_region()) {

case LRNParameter_NormRegion_ACROSS_CHANNELS:

CrossChannelBackward_cpu(top, propagate_down, bottom);

break;

case LRNParameter_NormRegion_WITHIN_CHANNEL:

WithinChannelBackward(top, propagate_down, bottom);

break;

default:

LOG(FATAL) << "Unknown normalization region.";

}

} 同样也只看通道间的反馈:

template

void LRNLayer::CrossChannelBackward_cpu(

const vector*>& top, const vector& propagate_down,

vector*>* bottom) {

const Dtype* top_diff = top[0]->cpu_diff();

const Dtype* top_data = top[0]->cpu_data();

const Dtype* bottom_data = (*bottom)[0]->cpu_data();

const Dtype* scale_data = scale_.cpu_data();

Dtype* bottom_diff = (*bottom)[0]->mutable_cpu_diff();

Blob padded_ratio(1, channels_ + size_ - 1, height_, width_);

Blob accum_ratio(1, 1, height_, width_);

Dtype* padded_ratio_data = padded_ratio.mutable_cpu_data();

Dtype* accum_ratio_data = accum_ratio.mutable_cpu_data();

// We hack a little bit by using the diff() to store an additional result

Dtype* accum_ratio_times_bottom = accum_ratio.mutable_cpu_diff();

caffe_set(padded_ratio.count(), Dtype(0), padded_ratio_data);

Dtype cache_ratio_value = 2. * alpha_ * beta_ / size_;

caffe_powx(scale_.count(), scale_data, -beta_, bottom_diff);

caffe_mul(scale_.count(), top_diff, bottom_diff, bottom_diff);

// go through individual data

int inverse_pre_pad = size_ - (size_ + 1) / 2;

for (int n = 0; n < num_; ++n) {

int block_offset = scale_.offset(n);

// first, compute diff_i * y_i / s_i

caffe_mul(channels_ * height_ * width_,

top_diff + block_offset, top_data + block_offset,

padded_ratio_data + padded_ratio.offset(0, inverse_pre_pad));

caffe_div(channels_ * height_ * width_,

padded_ratio_data + padded_ratio.offset(0, inverse_pre_pad),

scale_data + block_offset,

padded_ratio_data + padded_ratio.offset(0, inverse_pre_pad));

// Now, compute the accumulated ratios and the bottom diff

caffe_set(accum_ratio.count(), Dtype(0), accum_ratio_data);

for (int c = 0; c < size_ - 1; ++c) {

caffe_axpy(height_ * width_, 1.,

padded_ratio_data + padded_ratio.offset(0, c), accum_ratio_data);

}

for (int c = 0; c < channels_; ++c) {

caffe_axpy(height_ * width_, 1.,

padded_ratio_data + padded_ratio.offset(0, c + size_ - 1),

accum_ratio_data);

// compute bottom diff

caffe_mul(height_ * width_,

bottom_data + top[0]->offset(n, c),

accum_ratio_data, accum_ratio_times_bottom);

caffe_axpy(height_ * width_, -cache_ratio_value,

accum_ratio_times_bottom, bottom_diff + top[0]->offset(n, c));

caffe_axpy(height_ * width_, -1.,

padded_ratio_data + padded_ratio.offset(0, c), accum_ratio_data);

}

}

} 这里相当于是在计算反函数!具体的自己研究吧~

这里的解释应该有误,误差传递通常应该是遵循:

4 PoolingLayer:

Pools the input image by taking the max, average, etc. within regions.

4.1 原理介绍:

在官网的介绍中,说提供了三种方式:MAX, AVG, STOCHASTIC. 但实际上在源码中只看到前两种,最后一种随机的没有。

前馈的过程比较简单:MAX就是将“卷积核”(过滤器)覆盖范围最大的那个值传递下去;而AVG就是将覆盖范围的平均值传递下去。

反馈的过程:

MAX:这个在前馈的时候需要记录传递下去的值的坐标,所以反馈的时候也只反馈给对应的坐标,其余的位置误差设定为0.

AVG:直接将top层的误差分成N份,反馈回去。N通常等于过滤器的大小(kernel_h * kernel_w)。边角部分的N可能会小一些,这是因为当过滤器去覆盖的时候,可能超出了边界。

4.2 源码分析:

LayerSetUp(), Reshape()都基本差不多的。

4.2.1 属性变量:

int kernel_h_, kernel_w_;

int stride_h_, stride_w_;

int pad_h_, pad_w_;

int channels_;

int height_, width_;

int pooled_height_, pooled_width_;

Blob rand_idx_;

Blob max_idx_; 重点来看看最后三行变量。pooled_height_, pooled_width_这两个好理解吧,也就是降采样(或者说池化)之后的大小。

rand_idx_应该是随机降采样时用的,可惜在源码中没有看到有这种降采样的实现。

那么max_idx_就是用于max pooling咯?

4.2.2 前馈反馈函数:

前馈函数:

// TODO(Yangqing): Is there a faster way to do pooling in the channel-first

// case?

template

void PoolingLayer::Forward_cpu(const vector*>& bottom,

vector*>* top) {

const Dtype* bottom_data = bottom[0]->cpu_data();

Dtype* top_data = (*top)[0]->mutable_cpu_data();

const int top_count = (*top)[0]->count();

// We'll output the mask to top[1] if it's of size >1.

const bool use_top_mask = top->size() > 1;

int* mask = NULL; // suppress warnings about uninitalized variables

Dtype* top_mask = NULL;

// Different pooling methods. We explicitly do the switch outside the for

// loop to save time, although this results in more code.

switch (this->layer_param_.pooling_param().pool()) {

case PoolingParameter_PoolMethod_MAX:

// Initialize

if (use_top_mask) {

top_mask = (*top)[1]->mutable_cpu_data();

caffe_set(top_count, Dtype(-1), top_mask);

} else {

mask = max_idx_.mutable_cpu_data();

caffe_set(top_count, -1, mask);

}

caffe_set(top_count, Dtype(-FLT_MAX), top_data);

// The main loop

for (int n = 0; n < bottom[0]->num(); ++n) {

for (int c = 0; c < channels_; ++c) {

for (int ph = 0; ph < pooled_height_; ++ph) {

for (int pw = 0; pw < pooled_width_; ++pw) {

int hstart = ph * stride_h_ - pad_h_;

int wstart = pw * stride_w_ - pad_w_;

int hend = min(hstart + kernel_h_, height_);

int wend = min(wstart + kernel_w_, width_);

hstart = max(hstart, 0);

wstart = max(wstart, 0);

const int pool_index = ph * pooled_width_ + pw;

for (int h = hstart; h < hend; ++h) {

for (int w = wstart; w < wend; ++w) {

const int index = h * width_ + w;

if (bottom_data[index] > top_data[pool_index]) {

top_data[pool_index] = bottom_data[index];

if (use_top_mask) {

top_mask[pool_index] = static_cast(index);

} else {

mask[pool_index] = index;

}

}

}

}

}

}

// compute offset

bottom_data += bottom[0]->offset(0, 1);

top_data += (*top)[0]->offset(0, 1);

if (use_top_mask) {

top_mask += (*top)[0]->offset(0, 1);

} else {

mask += (*top)[0]->offset(0, 1);

}

}

}

break;

case PoolingParameter_PoolMethod_AVE:

for (int i = 0; i < top_count; ++i) {

top_data[i] = 0;

}

// The main loop

for (int n = 0; n < bottom[0]->num(); ++n) {

for (int c = 0; c < channels_; ++c) {

for (int ph = 0; ph < pooled_height_; ++ph) {

for (int pw = 0; pw < pooled_width_; ++pw) {

int hstart = ph * stride_h_ - pad_h_;

int wstart = pw * stride_w_ - pad_w_;

int hend = min(hstart + kernel_h_, height_ + pad_h_);

int wend = min(wstart + kernel_w_, width_ + pad_w_);

int pool_size = (hend - hstart) * (wend - wstart);

hstart = max(hstart, 0);

wstart = max(wstart, 0);

hend = min(hend, height_);

wend = min(wend, width_);

for (int h = hstart; h < hend; ++h) {

for (int w = wstart; w < wend; ++w) {

top_data[ph * pooled_width_ + pw] +=

bottom_data[h * width_ + w];

}

}

top_data[ph * pooled_width_ + pw] /= pool_size;

}

}

// compute offset

bottom_data += bottom[0]->offset(0, 1);

top_data += (*top)[0]->offset(0, 1);

}

}

break;

case PoolingParameter_PoolMethod_STOCHASTIC:

NOT_IMPLEMENTED;

break;

default:

LOG(FATAL) << "Unknown pooling method.";

}

} 看着这么大一堆,别被吓着,其实仔细看看内容,里面实现了MAX 和 AVG的降采样,都比较好理解,具体的坐标对应关系有兴趣的自己研究研究。

反馈函数:

template

void PoolingLayer::Backward_cpu(const vector*>& top,

const vector& propagate_down, vector*>* bottom) {

if (!propagate_down[0]) {

return;

}

const Dtype* top_diff = top[0]->cpu_diff();

Dtype* bottom_diff = (*bottom)[0]->mutable_cpu_diff();

// Different pooling methods. We explicitly do the switch outside the for

// loop to save time, although this results in more codes.

caffe_set((*bottom)[0]->count(), Dtype(0), bottom_diff);

// We'll output the mask to top[1] if it's of size >1.

const bool use_top_mask = top.size() > 1;

const int* mask = NULL; // suppress warnings about uninitialized variables

const Dtype* top_mask = NULL;

switch (this->layer_param_.pooling_param().pool()) {

case PoolingParameter_PoolMethod_MAX:

// The main loop

if (use_top_mask) {

top_mask = top[1]->cpu_data();

} else {

mask = max_idx_.cpu_data();

}

for (int n = 0; n < top[0]->num(); ++n) {

for (int c = 0; c < channels_; ++c) {

for (int ph = 0; ph < pooled_height_; ++ph) {

for (int pw = 0; pw < pooled_width_; ++pw) {

const int index = ph * pooled_width_ + pw;

const int bottom_index =

use_top_mask ? top_mask[index] : mask[index];

bottom_diff[bottom_index] += top_diff[index];

}

}

bottom_diff += (*bottom)[0]->offset(0, 1);

top_diff += top[0]->offset(0, 1);

if (use_top_mask) {

top_mask += top[0]->offset(0, 1);

} else {

mask += top[0]->offset(0, 1);

}

}

}

break;

case PoolingParameter_PoolMethod_AVE:

// The main loop

for (int n = 0; n < top[0]->num(); ++n) {

for (int c = 0; c < channels_; ++c) {

for (int ph = 0; ph < pooled_height_; ++ph) {

for (int pw = 0; pw < pooled_width_; ++pw) {

int hstart = ph * stride_h_ - pad_h_;

int wstart = pw * stride_w_ - pad_w_;

int hend = min(hstart + kernel_h_, height_ + pad_h_);

int wend = min(wstart + kernel_w_, width_ + pad_w_);

int pool_size = (hend - hstart) * (wend - wstart);

hstart = max(hstart, 0);

wstart = max(wstart, 0);

hend = min(hend, height_);

wend = min(wend, width_);

for (int h = hstart; h < hend; ++h) {

for (int w = wstart; w < wend; ++w) {

bottom_diff[h * width_ + w] +=

top_diff[ph * pooled_width_ + pw] / pool_size;

}

}

}

}

// offset

bottom_diff += (*bottom)[0]->offset(0, 1);

top_diff += top[0]->offset(0, 1);

}

}

break;

case PoolingParameter_PoolMethod_STOCHASTIC:

NOT_IMPLEMENTED;

break;

default:

LOG(FATAL) << "Unknown pooling method.";

}

} 因为反馈的原理在前面介绍过了,所以这个代码看起来也不是那么复杂。