[Python Crawler] Scraping Lipstick Listings from JD.com with Python

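I picked up a bit of Python a few days ago, then looked into how web scraping works, and out of boredom decided to scrape some lipstick data.

I originally wanted to scrape Taobao and Tmall, because per-item sales figures are directly visible there, but Taobao's anti-scraping login verification stumped me. Not wanting the hassle, I switched to JD, whose anti-scraping measures are, well, rather... you know (no wonder so many scraping tutorials online use JD). Below is how to scrape JD's product data.

If you're interested in my analysis of the data, see my other post: [Data Analysis] A Straight Guy Walks You Through 100 Pages of JD Lipstick Data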

1. Analyzing JD's data endpoints

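Search for 口红 (lipstick) directly on JD.

Then press F12 to open the developer tools, tick Preserve log on the Network tab, and refresh the page. The document (doc) request for the current page now appears in the Network list.

Here we find that JD search only exposes 100 pages of results, with 60 products per page, and that the page number is carried by the page query parameter, which increases by 2 per page (page=1 for the first page, page=3 for the second). So we can jump to any page simply by changing the value of page in the URL.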
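The page arithmetic above can be sketched as a small helper. This is only an illustration: the name build_search_url is made up here, the keyword and enc parameters are taken from the search URL used later in this post, and the mapping assumes page really does go 1, 3, 5, ... as observed.

```python
from urllib.parse import urlencode

SEARCH_URL = "https://search.jd.com/Search"  # base of the search URL used in this post


def build_search_url(keyword, page_no):
    """Return the search URL for the page_no-th visible result page (1-based)."""
    if page_no < 1:
        raise ValueError("page_no must be >= 1")
    params = {
        "keyword": keyword,
        "enc": "utf-8",
        "page": 2 * page_no - 1,  # visible page n maps to page=2n-1 (1, 3, 5, ...)
    }
    return SEARCH_URL + "?" + urlencode(params)


for n in (1, 2, 3):
    print(build_search_url("口红", n))
```

urlencode percent-encodes the keyword as UTF-8, which matches the encoded keyword seen in the browser's address bar.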

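Next, right-click a product in the search result list and choose Inspect. We find that

the ids of the 60 products shown on a page sit in the data-sku attribute of each li.

Click into a few more products and we also find that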


every product page follows the same URL pattern: https://item.jd.com/xxxxxx.html

So once we have a product's id, we can go straight to its page and grab the data.
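To make the id-to-URL step concrete, here is a minimal standard-library sketch. The HTML fragment and its ids are made-up placeholders shaped like the list markup described above, and SkuCollector and item_urls_from_html are hypothetical names, not part of the crawler built later (which uses lxml instead).

```python
from html.parser import HTMLParser

ITEM_URL = "https://item.jd.com/{}.html"  # product page pattern noted above


class SkuCollector(HTMLParser):
    """Collect the data-sku attribute of every <li> that has one."""

    def __init__(self):
        super().__init__()
        self.skus = []

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            sku = dict(attrs).get("data-sku")
            if sku:
                self.skus.append(sku)


def item_urls_from_html(html_text):
    """Return one product URL per data-sku found in html_text."""
    parser = SkuCollector()
    parser.feed(html_text)
    return [ITEM_URL.format(sku) for sku in parser.skus]


# Made-up fragment; the two ids are placeholders, not real products.
sample = """
<ul class="gl-warp clearfix">
  <li class="gl-item" data-sku="1000001">...</li>
  <li class="gl-item" data-sku="1000002">...</li>
</ul>
"""
print(item_urls_from_html(sample))
```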

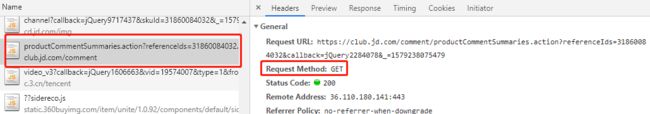

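Then, on the product detail page, press F12 again and, as in the first step, look for the backend endpoint it calls. That is how I found this:

Hey, isn't this exactly the data I want? So I immediately clicked Headers next to Preview, and sure enough there was a request URL for the data: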

https://club.jd.com/comment/productCommentSummaries.action?referenceIds=xxxxxx

It is a GET request too, so entering this URL in the browser is enough to see the JSON it returns.
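As a rough picture of what that JSON looks like and how it can be read, here is a sketch on a made-up payload. The key names are the ones the crawler reads later in this post; every value is a placeholder rather than real JD data, and summarize is a hypothetical helper.

```python
import json

# Made-up payload: key names match those read later in this post,
# but all values are placeholders, not real JD data.
sample_text = """
{"CommentsCount": [{"CommentCount": 1200, "DefaultGoodCount": 800,
  "GoodCount": 1150, "GeneralCount": 30, "PoorCount": 20,
  "AfterCount": 15, "GoodRate": 0.96}]}
"""


def summarize(resp_text):
    """Return a small dict from the first CommentsCount entry, or None if empty."""
    data = json.loads(resp_text)
    entries = data.get("CommentsCount") or []
    if not entries:
        return None
    first = entries[0]
    return {
        "comment_count": first.get("CommentCount"),
        "good_rate": first.get("GoodRate"),
    }


print(summarize(sample_text))
```

Using .get instead of indexing means a missing key yields None rather than an exception, which may be convenient when a response comes back incomplete.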

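PS: JD aggregates its statistics per product series. A series contains many individual items, and JD only reports statistics for the series as a whole, not for each item (which is exactly why JD wasn't my first choice...). Also, when someone buys a product and never reviews it, JD records a default positive review, which is why a single series can show 130,000+ default positive reviews.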

2. Scraping a single page

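JD's product list page uses lazy loading: the full content only loads once the scrollbar reaches a certain position. After getting nowhere with Splash, I fell back to the slowest approach... yes, driving the page myself with Selenium's webdriver. I won't cover how to use Selenium here; if you're interested, you can look it up online.

Back to the topic. First we define the global variables that rarely change.

Note! Everything below must be adapted to your own setup.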

    # Path to the webdriver executable
    DRIVER_PATH = r'F:\ChromeDriver\chromedriver.exe'

    # First page of the search results for 口红 (lipstick)
    FIRST_PATH = r'https://search.jd.com/Search?keyword=%E5%8F%A3%E7%BA%A2&enc=utf-8&wq=%E5%8F%A3%E7%BA%A2&pvid=6be9016dfc5540edbef74a51f2c2a273'

    # Comment-summary endpoint for a product
    COMMENTS_PATH = r'https://club.jd.com/comment/productCommentSummaries.action?referenceIds={}'

    # Request headers
    HEADERS = {
        'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.117 Safari/537.36',
        'cookie': 'shshshfpa=04032253-4191-5900-7bc8-664ce874ea19-1564630009; shshshfpb=aBXeSWUEdSZyGsicpC%2F9zZw%3D%3D; unpl=V2_ZzNtbUUERRN9CxEBfhwLA2IARVQRUBQVcwBHXCxMXwZgBEEOclRCFnQUR1RnGFgUZwsZXkpcQRxFCEdkeBBVAWMDE1VGZxBFLV0CFSNGF1wjU00zQwBBQHcJFF0uSgwDYgcaDhFTQEJ2XBVQL0oMDDdRFAhyZ0AVRQhHZHIfXA1nABtZRmdzEkU4dlJ6GVQAYDMTbUNnAUEpC0JUfRpbSG4FElVCVEoRcThHZHg%3d; user-key=0cddf1e3-7e4c-458f-a258-13c777bfb684; cn=0; xtest=6082.cf6b6759; ipLoc-djd=19-1704-37734-0; areaId=19; qrsc=3; rkv=V0000; __jdu=871912855; __jdv=76161171|baidu|-|organic|not set|1578915642730; __jdc=122270672; __jda=122270672.871912855.1556255082.1578963146.1578965142.14; shshshfp=0ec44d3b52c16ee1648e3119b9a5b39c; 3AB9D23F7A4B3C9B=LH2BN4LNQMSBEZOSKTIQF6FSY6G632CD4V43AVJPMIME573PKI4R4FOSP7OXAR6AVGFTEMS34GNI3TUVEF7MSVUXSM; shshshsID=9012381ec3bd3c7dcf09f15b9e835832_4_1578965247902; __jdb=122270672.4.871912855|14.1578965142'
    }

    # executable_path is the Selenium 3 API; Selenium 4 expects
    # webdriver.Chrome(service=Service(DRIVER_PATH)) instead
    driver = webdriver.Chrome(executable_path=DRIVER_PATH)

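Next, define a function that takes a list page's URL and returns a list of dicts, each holding one product's id, title and store name. Because this information is only available once the page has fully loaded, we have webdriver scroll the browser down automatically to trigger the loading.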

    # Parse a search-result (list) page given its URL
    def parse_list_page(url):
        driver.get(url)
        # Scroll down so the lazily loaded items get rendered
        for i in range(2):
            driver.execute_script('document.documentElement.scrollTop=6000')
            time.sleep(3)  # wait 3 seconds before scrolling again
        # Grab the full page source
        resp = driver.page_source
        html = etree.HTML(resp)
        goods_ids = html.xpath('.//ul[@class="gl-warp clearfix"]/li[@class="gl-item"]/@data-sku')
        goods_names_tag = html.xpath('.//div[@class="p-name p-name-type-2"]/a/em')
        goods_stores_tag = html.xpath('.//div[@class="p-shop"]')
        # string(.) flattens each node to its text content
        goods_names = []
        for goods_name in goods_names_tag:
            goods_names.append(goods_name.xpath('string(.)').strip())
        goods_stores = []
        for goods_store in goods_stores_tag:
            goods_stores.append(goods_store.xpath('string(.)').strip())
        # One dict per product: id, title, store name
        goods_infos = list()
        for i in range(0, len(goods_ids)):
            goods_info = dict()
            goods_info['goods_id'] = goods_ids[i]
            goods_info['goods_name'] = goods_names[i]
            goods_info['goods_store'] = goods_stores[i]
            goods_infos.append(goods_info)
        return goods_infos
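The function above scrolls a fixed two times with a fixed 3-second wait. One possible variation, shown here only as a sketch, is to keep scrolling until the page height stops growing. scroll_to_bottom is a hypothetical helper that accepts any object with an execute_script method, and FakeDriver is a stand-in so the example runs without a browser; whether JD's page behaves well with this strategy is an assumption, not something tested in this post.

```python
import time


def scroll_to_bottom(driver, pause=1.0, max_rounds=10):
    """Scroll until the page height stops growing; return the number of scrolls."""
    last_height = driver.execute_script("return document.body.scrollHeight")
    for round_no in range(1, max_rounds + 1):
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight)")
        time.sleep(pause)  # give the lazy loader time to append items
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            return round_no
        last_height = new_height
    return max_rounds


class FakeDriver:
    """Stand-in for a Selenium driver so the sketch runs without a browser."""

    def __init__(self, heights):
        self.heights = list(heights)
        self.pos = 0

    def execute_script(self, script):
        if script.startswith("return"):
            return self.heights[self.pos]
        # a scroll makes the fake page grow to its next height, if there is one
        self.pos = min(self.pos + 1, len(self.heights) - 1)


print(scroll_to_bottom(FakeDriver([3000, 6000, 9000]), pause=0))
```

With a real driver, the call would simply be scroll_to_bottom(driver) placed right after driver.get(url).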

With the product ids in hand, and the URL we worked out above, we can use the requests library to fetch each product's detailed data ourselves.

    # Fetch the review-count summary for a product id
    def get_goods_comment(goods_id):
        url = COMMENTS_PATH.format(goods_id)
        resp_text = requests.get(url=url, headers=HEADERS).text
        comment_json = json.loads(resp_text)
        # print(comment_json)
        comments_count = comment_json['CommentsCount'][0]
        # Total number of reviews
        comment_count = comments_count['CommentCount']
        # Default positive reviews
        default_good_count = comments_count['DefaultGoodCount']
        # Positive reviews
        good_count = comments_count['GoodCount']
        # Neutral reviews
        general_count = comments_count['GeneralCount']
        # Negative reviews
        poor_count = comments_count['PoorCount']
        # Follow-up reviews
        after_count = comments_count['AfterCount']
        # Positive review rate
        good_rate = comments_count['GoodRate']
        comment_info = dict()
        comment_info['comment_count'] = comment_count
        comment_info['default_good_count'] = default_good_count
        comment_info['good_count'] = good_count
        comment_info['general_count'] = general_count
        comment_info['poor_count'] = poor_count
        comment_info['after_count'] = after_count
        comment_info['good_rate'] = good_rate
        return comment_info
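This post notes further down that some requests to JD come back failed. One way to soften that, sketched here under assumptions, is to wrap each call in a small retry helper. fetch_with_retry and its parameters are hypothetical, and the flaky function only simulates failures so the example runs offline.

```python
import time


def fetch_with_retry(fetch, attempts=3, delay=1.0):
    """Call fetch() until it succeeds or attempts run out; re-raise the last error."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return fetch()
        except Exception as exc:  # broad on purpose for a sketch; narrow it in real use
            last_error = exc
            if attempt < attempts:
                time.sleep(delay * attempt)  # wait a little longer each time
    raise last_error


calls = {"n": 0}


def flaky():
    """Simulated request that fails twice, then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ValueError("simulated failure")
    return {"comment_count": 1}


print(fetch_with_retry(flaky, delay=0))
```

In the crawler it could be used as fetch_with_retry(lambda: get_goods_comment(goods_id)), so that a bad response for one product does not abort the whole page.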

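3. Paging through the results

As mentioned above, JD's search page is lazily loaded, so we have to let webdriver turn the pages itself and keep scraping the next page.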

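    def get_next_page_url():
        try:
            # find_element_by_xpath is the Selenium 3 API;
            # Selenium 4 uses driver.find_element(By.XPATH, ...)
            next_btn = driver.find_element_by_xpath('.//a[@class="pn-next"]')
            next_btn.click()
            cur_url = driver.current_url
            return cur_url
        except NoSuchElementException:
            # No "next" button: this was the last page
            return ""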

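4. Merging the data

As seen above, the data arrives in two parts (that is, two dicts), and they must be merged to get one product's complete record. So in the crawler's run function we merge the two parts, and once a page is merged we move on to scrape the next page.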

    i = 1  # page counter, used in the CSV file name
    info_list = list()

    def spider_run(url):
        global i
        goods_infos = parse_list_page(url)
        for goods_info in goods_infos:
            comment_info = get_goods_comment(goods_info['goods_id'])
            # Merge both dicts into one complete record for the product
            info = dict(goods_info, **comment_info)
            print(info)
            info_list.append(info)
        print(len(info_list))
        # Save this page's records to their own CSV file
        pandas.DataFrame(info_list).to_csv('jd_kh_{}.csv'.format(i))
        i += 1
        info_list.clear()
        # Move on to the next page, if there is one
        next_page_url = get_next_page_url()
        if next_page_url != "":
            spider_run(next_page_url)
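spider_run calls itself once per page. With at most 100 pages that stays well below Python's default recursion limit, but the same flow can also be written as a plain loop. The sketch below is one way to do that: crawl_all and its parameters are hypothetical, and the three callables are faked so it runs without a browser. In this post's terms, parse_page would combine parse_list_page and get_goods_comment, and next_url would be get_next_page_url.

```python
def crawl_all(first_url, parse_page, next_url, save_page, max_pages=100):
    """Visit pages one after another; return how many pages were saved."""
    url = first_url
    page_no = 0
    while url and page_no < max_pages:
        page_no += 1
        save_page(page_no, parse_page(url))
        url = next_url()  # expected to return "" when there is no next page
    return page_no


# Fakes standing in for the browser-driven functions.
pages = {"p1": ["a", "b"], "p2": ["c"]}
order = iter(["p2", ""])
saved = {}

count = crawl_all("p1", pages.get, lambda: next(order), saved.__setitem__)
print(count, saved)
```

max_pages defaults to 100 only because that is the number of result pages JD search was observed to expose.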

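PS: I save each page's data to its own file first, and at the end use Python to merge, deduplicate and clean everything. It's more work, but with this much data some requests to JD come back failed. With per-page files, a glance at the file sizes shows which page is short on rows. After the full crawl finishes, that page can be re-scraped by editing the URL, which yields a more complete dataset.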
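The merge-and-check step described here might look roughly like the following. This is a standard-library sketch rather than the pandas code actually used for the cleanup: merge_pages is a hypothetical helper, it compares row counts instead of file sizes, and the demo writes two tiny made-up CSV files into a temporary directory.

```python
import csv
import glob
import os
import tempfile


def merge_pages(pattern, key="goods_id", expected_rows=60):
    """Merge per-page CSVs, drop duplicate keys, and list files with too few rows."""
    merged, seen, short_files = [], set(), []
    for path in sorted(glob.glob(pattern)):
        with open(path, newline="", encoding="utf-8") as f:
            rows = list(csv.DictReader(f))
        if len(rows) < expected_rows:
            short_files.append(os.path.basename(path))  # candidate for re-scraping
        for row in rows:
            if row[key] not in seen:
                seen.add(row[key])
                merged.append(row)
    return merged, short_files


# Demo on made-up data: page 1 has 2 rows, page 2 has 3 rows, id "2" repeats.
with tempfile.TemporaryDirectory() as tmp:
    for name, ids in (("jd_kh_1.csv", ["1", "2"]), ("jd_kh_2.csv", ["2", "3", "4"])):
        with open(os.path.join(tmp, name), "w", newline="", encoding="utf-8") as f:
            writer = csv.DictWriter(f, fieldnames=["goods_id"])
            writer.writeheader()
            writer.writerows({"goods_id": g} for g in ids)
    merged, short_files = merge_pages(os.path.join(tmp, "jd_kh_*.csv"), expected_rows=3)

print([row["goods_id"] for row in merged], short_files)
```

The default expected_rows=60 comes from the 60 products per result page noted earlier.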

And with that, a small crawler is done!

Finally, here is the complete source code.

    import json
    import pandas
    import requests
    from lxml import etree
    from selenium import webdriver
    import time
    from selenium.common.exceptions import NoSuchElementException

    # Path to the webdriver executable
    DRIVER_PATH = r'F:\ChromeDriver\chromedriver.exe'
    # First page of the search results for 口红 (lipstick)
    FIRST_PATH = r'https://search.jd.com/Search?keyword=%E5%8F%A3%E7%BA%A2&enc=utf-8&wq=%E5%8F%A3%E7%BA%A2&pvid=6be9016dfc5540edbef74a51f2c2a273'
    # Comment-summary endpoint for a product
    COMMENTS_PATH = r'https://club.jd.com/comment/productCommentSummaries.action?referenceIds={}'
    # Request headers
    HEADERS = {
        'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.117 Safari/537.36',
        'cookie': 'shshshfpa=04032253-4191-5900-7bc8-664ce874ea19-1564630009; shshshfpb=aBXeSWUEdSZyGsicpC%2F9zZw%3D%3D; unpl=V2_ZzNtbUUERRN9CxEBfhwLA2IARVQRUBQVcwBHXCxMXwZgBEEOclRCFnQUR1RnGFgUZwsZXkpcQRxFCEdkeBBVAWMDE1VGZxBFLV0CFSNGF1wjU00zQwBBQHcJFF0uSgwDYgcaDhFTQEJ2XBVQL0oMDDdRFAhyZ0AVRQhHZHIfXA1nABtZRmdzEkU4dlJ6GVQAYDMTbUNnAUEpC0JUfRpbSG4FElVCVEoRcThHZHg%3d; user-key=0cddf1e3-7e4c-458f-a258-13c777bfb684; cn=0; xtest=6082.cf6b6759; ipLoc-djd=19-1704-37734-0; areaId=19; qrsc=3; rkv=V0000; __jdu=871912855; __jdv=76161171|baidu|-|organic|not set|1578915642730; __jdc=122270672; __jda=122270672.871912855.1556255082.1578963146.1578965142.14; shshshfp=0ec44d3b52c16ee1648e3119b9a5b39c; 3AB9D23F7A4B3C9B=LH2BN4LNQMSBEZOSKTIQF6FSY6G632CD4V43AVJPMIME573PKI4R4FOSP7OXAR6AVGFTEMS34GNI3TUVEF7MSVUXSM; shshshsID=9012381ec3bd3c7dcf09f15b9e835832_4_1578965247902; __jdb=122270672.4.871912855|14.1578965142'
    }

    # executable_path is the Selenium 3 API; Selenium 4 expects
    # webdriver.Chrome(service=Service(DRIVER_PATH)) instead
    driver = webdriver.Chrome(executable_path=DRIVER_PATH)


    # Parse a search-result (list) page given its URL
    def parse_list_page(url):
        driver.get(url)
        # Scroll down so the lazily loaded items get rendered
        for i in range(2):
            driver.execute_script('document.documentElement.scrollTop=6000')
            time.sleep(3)  # wait 3 seconds before scrolling again
        # Grab the full page source
        resp = driver.page_source
        html = etree.HTML(resp)
        goods_ids = html.xpath('.//ul[@class="gl-warp clearfix"]/li[@class="gl-item"]/@data-sku')
        goods_names_tag = html.xpath('.//div[@class="p-name p-name-type-2"]/a/em')
        goods_stores_tag = html.xpath('.//div[@class="p-shop"]')
        # string(.) flattens each node to its text content
        goods_names = []
        for goods_name in goods_names_tag:
            goods_names.append(goods_name.xpath('string(.)').strip())
        goods_stores = []
        for goods_store in goods_stores_tag:
            goods_stores.append(goods_store.xpath('string(.)').strip())
        # One dict per product: id, title, store name
        goods_infos = list()
        for i in range(0, len(goods_ids)):
            goods_info = dict()
            goods_info['goods_id'] = goods_ids[i]
            goods_info['goods_name'] = goods_names[i]
            goods_info['goods_store'] = goods_stores[i]
            goods_infos.append(goods_info)
        return goods_infos


    # Fetch the review-count summary for a product id
    def get_goods_comment(goods_id):
        url = COMMENTS_PATH.format(goods_id)
        resp_text = requests.get(url=url, headers=HEADERS).text
        comment_json = json.loads(resp_text)
        # print(comment_json)
        comments_count = comment_json['CommentsCount'][0]
        # Total number of reviews
        comment_count = comments_count['CommentCount']
        # Default positive reviews
        default_good_count = comments_count['DefaultGoodCount']
        # Positive reviews
        good_count = comments_count['GoodCount']
        # Neutral reviews
        general_count = comments_count['GeneralCount']
        # Negative reviews
        poor_count = comments_count['PoorCount']
        # Follow-up reviews
        after_count = comments_count['AfterCount']
        # Positive review rate
        good_rate = comments_count['GoodRate']
        comment_info = dict()
        comment_info['comment_count'] = comment_count
        comment_info['default_good_count'] = default_good_count
        comment_info['good_count'] = good_count
        comment_info['general_count'] = general_count
        comment_info['poor_count'] = poor_count
        comment_info['after_count'] = after_count
        comment_info['good_rate'] = good_rate
        return comment_info


    def get_next_page_url():
        try:
            # find_element_by_xpath is the Selenium 3 API;
            # Selenium 4 uses driver.find_element(By.XPATH, ...)
            next_btn = driver.find_element_by_xpath('.//a[@class="pn-next"]')
            next_btn.click()
            cur_url = driver.current_url
            return cur_url
        except NoSuchElementException:
            # No "next" button: this was the last page
            return ""


    i = 1  # page counter, used in the CSV file name
    info_list = list()


    def spider_run(url):
        global i
        goods_infos = parse_list_page(url)
        for goods_info in goods_infos:
            comment_info = get_goods_comment(goods_info['goods_id'])
            # Merge both dicts into one complete record for the product
            info = dict(goods_info, **comment_info)
            print(info)
            info_list.append(info)
        print(len(info_list))
        # Save this page's records to their own CSV file
        pandas.DataFrame(info_list).to_csv('jd_kh_{}.csv'.format(i))
        i += 1
        info_list.clear()
        # Move on to the next page, if there is one
        next_page_url = get_next_page_url()
        if next_page_url != "":
            spider_run(next_page_url)


    if __name__ == '__main__':
        spider_run(FIRST_PATH)