Understanding keypoint annotations in the COCO dataset

I. Keypoint annotation format in COCO

Open the file person_keypoints_val2017.json.

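From top to bottom, the file is divided into the following sections, in this order. The layout is much the same as the object-detection annotation file.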

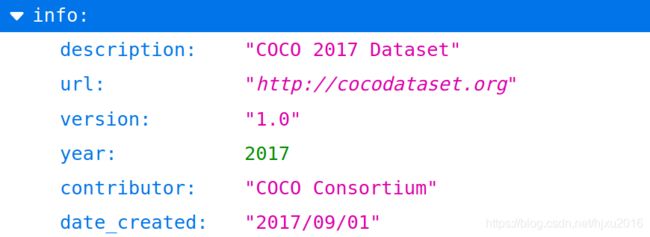

1. info: little to say here; it just records some general information about the dataset, as shown below.

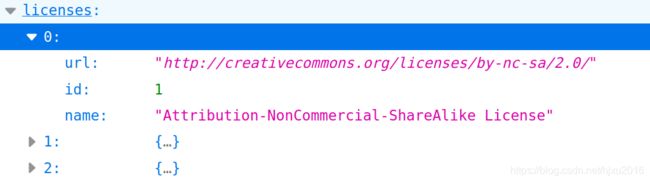

2. licenses: an array of license entries; you can think of each one as a copyright license.

3. images: records key information about each image, such as its file name, width, height, and image ID.

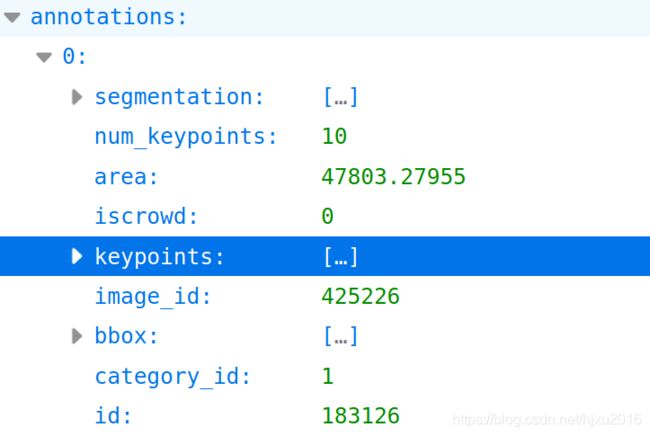
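An annotation does not repeat the image metadata; it points to its image through `image_id`, which matches the `id` of an entry in `images`. The sketch below shows that join with plain dicts. The toy dataset is hypothetical: only the field names and the ids 425226 and 183126 come from this article, and the zero-padded file name is an assumption based on the usual COCO 2017 naming. pycocotools builds a similar image-to-annotations index internally when it loads the file.

```python
from collections import defaultdict


def index_dataset(dataset):
    """Map image id -> image record, and image id -> list of its annotations."""
    images = {img["id"]: img for img in dataset["images"]}
    anns_by_image = defaultdict(list)
    for ann in dataset["annotations"]:
        # image_id in an annotation refers to the "id" field of an entry in images
        anns_by_image[ann["image_id"]].append(ann)
    return images, anns_by_image


# Hypothetical toy dataset; a real file has many more fields and entries.
toy = {
    "images": [{"id": 425226, "file_name": "000000425226.jpg"}],
    "annotations": [{"id": 183126, "image_id": 425226, "category_id": 1}],
}

images, anns_by_image = index_dataset(toy)
for image_id, anns in anns_by_image.items():
    print(images[image_id]["file_name"], [a["id"] for a in anns])
```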

4. annotations: each annotation contains all the fields of an object-detection annotation, plus 2 extra fields, keypoints and num_keypoints.

The new keypoints field is an array of length $3k$, where $k$ is the total number of keypoints defined in the category's keypoints list. Each keypoint occupies 3 consecutive entries: the first two are its $x$ and $y$ coordinates, and the third is a visibility flag $v$. $v=0$ means the keypoint is not labeled (in which case $x=y=v=0$); $v=1$ means it is labeled but not visible (occluded); $v=2$ means it is labeled and visible.

num_keypoints is the number of labeled keypoints on the object (those with $v>0$); small objects may have no keypoints labeled at all.
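To make the flat layout concrete, here is a small sketch that splits the keypoints array into $(x, y, v)$ triples and recomputes num_keypoints as the count of triples with $v>0$. The function names are hypothetical; the keypoints values are copied from the annotation example (image_id 425226) shown in this section.

```python
def decode_keypoints(flat):
    """Split COCO's flat [x1, y1, v1, x2, y2, v2, ...] list into (x, y, v) triples."""
    if len(flat) % 3 != 0:
        raise ValueError("keypoints length must be a multiple of 3")
    return [tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)]


def count_labeled(triples):
    """num_keypoints counts keypoints with v > 0 (labeled, visible or occluded)."""
    return sum(1 for _, _, v in triples if v > 0)


# keypoints array of the annotation example (image_id 425226) in this section
keypoints = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
             142, 309, 1, 177, 320, 2, 191, 398, 2, 237, 317, 2,
             233, 426, 2, 306, 233, 2, 92, 452, 2, 123, 468, 2,
             0, 0, 0, 251, 469, 2, 0, 0, 0, 162, 551, 2]

triples = decode_keypoints(keypoints)
print(len(triples), count_labeled(triples))  # 17 10
```

The result matches the example's "num_keypoints": 10. The sixth triple, (142, 309, 1), has $v=1$: the left shoulder is labeled but occluded.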

Here is one annotation taken from person_keypoints_val2017.json:

```json
{
  "segmentation": [[125.12,539.69,140.94,522.43,100.67,496.54,84.85,469.21,73.35,450.52,104.99,342.65,168.27,290.88,179.78,288,189.84,286.56,191.28,260.67,202.79,240.54,221.48,237.66,248.81,243.42,257.44,256.36,253.12,262.11,253.12,275.06,299.15,233.35,329.35,207.46,355.24,206.02,363.87,206.02,365.3,210.34,373.93,221.84,363.87,226.16,363.87,237.66,350.92,237.66,332.22,234.79,314.97,249.17,271.82,313.89,253.12,326.83,227.24,352.72,214.29,357.03,212.85,372.85,208.54,395.87,228.67,414.56,245.93,421.75,266.07,424.63,276.13,437.57,266.07,450.52,284.76,464.9,286.2,479.28,291.96,489.35,310.65,512.36,284.76,549.75,244.49,522.43,215.73,546.88,199.91,558.38,204.22,565.57,189.84,568.45,184.09,575.64,172.58,578.52,145.26,567.01,117.93,551.19,133.75,532.49]],
  "num_keypoints": 10,
  "area": 47803.27955,
  "iscrowd": 0,
  "keypoints": [0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,142,309,1,177,320,2,191,398,2,237,317,2,233,426,2,306,233,2,92,452,2,123,468,2,0,0,0,251,469,2,0,0,0,162,551,2],
  "image_id": 425226,
  "bbox": [73.35,206.02,300.58,372.5],
  "category_id": 1,
  "id": 183126
}
```

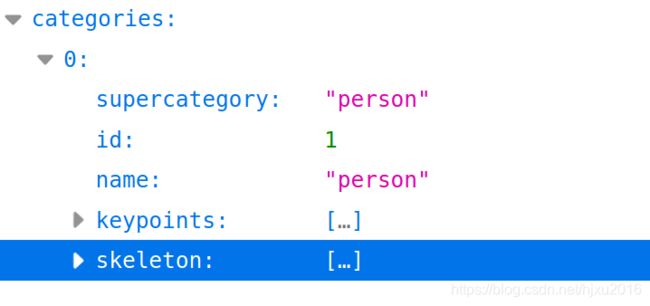
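The bbox field follows the same [x, y, width, height] convention as COCO object detection, with (x, y) the top-left corner. As a quick sanity check on this one annotation (a sketch with hypothetical helper names, not a rule guaranteed for every COCO annotation), the box can be converted to corner form and tested against the labeled keypoints:

```python
def bbox_to_corners(bbox):
    """Convert COCO [x, y, w, h] to (x_min, y_min, x_max, y_max)."""
    x, y, w, h = bbox
    return x, y, x + w, y + h


def labeled_inside(keypoints, bbox):
    """True if every keypoint with v > 0 lies inside the box (edges inclusive)."""
    x0, y0, x1, y1 = bbox_to_corners(bbox)
    for i in range(0, len(keypoints), 3):
        x, y, v = keypoints[i:i + 3]
        if v > 0 and not (x0 <= x <= x1 and y0 <= y <= y1):
            return False
    return True


# bbox and keypoints copied from the annotation example above
bbox = [73.35, 206.02, 300.58, 372.5]
keypoints = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
             142, 309, 1, 177, 320, 2, 191, 398, 2, 237, 317, 2,
             233, 426, 2, 306, 233, 2, 92, 452, 2, 123, 468, 2,
             0, 0, 0, 251, 469, 2, 0, 0, 0, 162, 551, 2]

print(labeled_inside(keypoints, bbox))  # True
```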

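5. categories: compared with a category entry in object detection, each category entry adds 2 fields. keypoints is an array of length $k$ holding the name of each keypoint; skeleton defines the connectivity between keypoints as pairs of 1-based indices into keypoints (for example, the left wrist is connected to the left elbow, but not to the right wrist). Currently, COCO provides keypoint annotations only for the person category.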

Here is one category taken from person_keypoints_val2017.json:

```json
{
  "supercategory": "person",
  "id": 1,
  "name": "person",
  "keypoints": ["nose","left_eye","right_eye","left_ear","right_ear","left_shoulder","right_shoulder","left_elbow","right_elbow","left_wrist","right_wrist","left_hip","right_hip","left_knee","right_knee","left_ankle","right_ankle"],
  "skeleton": [[16,14],[14,12],[17,15],[15,13],[12,13],[6,12],[7,13],[6,7],[6,8],[7,9],[8,10],[9,11],[2,3],[1,2],[1,3],[2,4],[3,5],[4,6],[5,7]]
}
```
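Because skeleton entries are 1-based positions in the keypoints list, each pair can be translated into a pair of names by subtracting 1. A minimal sketch (the helper name is hypothetical; the lists are copied from the category above):

```python
KEYPOINT_NAMES = ["nose", "left_eye", "right_eye", "left_ear", "right_ear",
                  "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
                  "left_wrist", "right_wrist", "left_hip", "right_hip",
                  "left_knee", "right_knee", "left_ankle", "right_ankle"]

SKELETON = [[16, 14], [14, 12], [17, 15], [15, 13], [12, 13], [6, 12], [7, 13],
            [6, 7], [6, 8], [7, 9], [8, 10], [9, 11], [2, 3], [1, 2], [1, 3],
            [2, 4], [3, 5], [4, 6], [5, 7]]


def skeleton_edges(names, skeleton):
    """Translate 1-based skeleton index pairs into (name, name) pairs."""
    return [(names[a - 1], names[b - 1]) for a, b in skeleton]


for a, b in skeleton_edges(KEYPOINT_NAMES, SKELETON)[:3]:
    print(a, "-", b)
# left_ankle - left_knee
# left_knee - left_hip
# right_ankle - right_knee
```

Forgetting the off-by-one shift would silently connect the wrong joints, which is why the drawing code in the next part subtracts 1 from the skeleton array.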

II. Reading the COCO dataset and drawing the keypoints on the image

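There are two ways to display them. One is to call COCO.showAnns() from pycocotools.coco directly. It does draw the keypoints on the image, but unless you read its source code, it is hard to understand what the keypoint annotations actually contain.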

Here I provide another approach: load the image with OpenCV and draw the keypoints directly on the original image.

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""
@File     : KeyPointStu/showPoint.py
@Date     : 2020/12/3 7:07 PM
@Require  : numpy, pycocotools, opencv
@Author   : hjxu2016, https://blog.csdn.net/hjxu2016/
@Function : Read the COCO dataset and draw the keypoints on the image
"""
import numpy as np
from pycocotools.coco import COCO
import cv2

# Colors picked at random for limbs and points (OpenCV uses BGR order)
aColor = [(0, 255, 0, 0), (255, 0, 0, 0), (0, 0, 255, 0), (0, 255, 255, 0)]

annFile = './coco/2017/annotations/person_keypoints_val2017.json'
img_prefix = './coco/2017/val2017'

# Initialize the COCO API for the keypoint annotations
coco = COCO(annFile)

# getCatIds(catNms=[], supNms=[], catIds=[])
# Filter category ids by category name, supercategory name, or category id
catIds = coco.getCatIds(catNms=['person'])
print("catIds: ", catIds)

# getImgIds(imgIds=[], catIds=[])
# Get image ids from image ids or category ids; with an image id,
# loadImgs returns that image's info.
# Here we use a fixed image id: the image of the annotation example above.
imgIds = 425226
img = coco.loadImgs(imgIds)[0]
matImg = cv2.imread('%s/%s' % (img_prefix, img['file_name']))
if matImg is None:
    raise FileNotFoundError('cannot read %s/%s' % (img_prefix, img['file_name']))

# Get annotation ids by image id, category id, area range, and crowd flag
annIds = coco.getAnnIds(imgIds=img['id'], catIds=catIds, iscrowd=None)
# Load the annotations for those ids
anns = coco.loadAnns(annIds)
print(anns)

for ann in anns:
    # Skeleton indices are 1-based; subtract 1 to index the keypoint arrays
    sks = np.array(coco.loadCats(ann['category_id'])[0]['skeleton']) - 1
    kp = np.array(ann['keypoints'])
    x = kp[0::3]
    y = kp[1::3]
    v = kp[2::3]
    for sk in sks:
        c = aColor[np.random.randint(0, 4)]
        # Draw a limb only if both endpoints are labeled (v > 0)
        if np.all(v[sk] > 0):
            # OpenCV expects plain Python ints for point coordinates
            cv2.line(matImg, (int(x[sk[0]]), int(y[sk[0]])),
                     (int(x[sk[1]]), int(y[sk[1]])), c, 1)
    for i in range(x.shape[0]):
        # Skip unlabeled keypoints, which are stored as (0, 0, 0)
        if v[i] == 0:
            continue
        c = aColor[np.random.randint(0, 4)]
        cv2.circle(matImg, (int(x[i]), int(y[i])), 2, c, lineType=cv2.LINE_AA)

cv2.imshow("show", matImg)
cv2.waitKey(0)
```
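The selection logic behind the drawing can be checked without OpenCV or the dataset files: a limb is worth drawing only when both of its endpoints have $v>0$, and only keypoints with $v>0$ are worth marking, since unlabeled ones are stored as (0, 0). The sketch below (hypothetical helper `drawable`, pure Python) applies that rule to the annotation and skeleton examples from Part I:

```python
def drawable(keypoints, skeleton):
    """Return (points, limbs) worth drawing for one annotation.

    keypoints: flat COCO list [x1, y1, v1, x2, y2, v2, ...]
    skeleton:  1-based index pairs from the category entry
    """
    xs, ys, vs = keypoints[0::3], keypoints[1::3], keypoints[2::3]
    points = [(x, y) for x, y, v in zip(xs, ys, vs) if v > 0]
    limbs = []
    for a, b in skeleton:
        i, j = a - 1, b - 1  # convert 1-based skeleton indices to 0-based
        if vs[i] > 0 and vs[j] > 0:
            limbs.append(((xs[i], ys[i]), (xs[j], ys[j])))
    return points, limbs


keypoints = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
             142, 309, 1, 177, 320, 2, 191, 398, 2, 237, 317, 2,
             233, 426, 2, 306, 233, 2, 92, 452, 2, 123, 468, 2,
             0, 0, 0, 251, 469, 2, 0, 0, 0, 162, 551, 2]
skeleton = [[16, 14], [14, 12], [17, 15], [15, 13], [12, 13], [6, 12], [7, 13],
            [6, 7], [6, 8], [7, 9], [8, 10], [9, 11], [2, 3], [1, 2], [1, 3],
            [2, 4], [3, 5], [4, 6], [5, 7]]

points, limbs = drawable(keypoints, skeleton)
print(len(points), len(limbs))  # 10 10
```

For this person the head keypoints, the left knee, and the left ankle are unlabeled, so all face limbs and the left-leg limbs are skipped; 10 of the 19 skeleton edges remain.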