Implementing FCN Semantic Segmentation in Keras and Training on Your Own Data: FCN-16s and FCN-8s


- 1. Transposed Convolution

- 2. FCN-16s

- 3. FCN-8s

- 4. Summary

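The previous article, "Implementing FCN Semantic Segmentation in Keras and Training on Your Own Data: FCN-32s", built the simplest form of FCN semantic segmentation, but its results were far from satisfactory. This article improves the segmentation quality following the approach described in the paper.

1. Transposed Convolution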

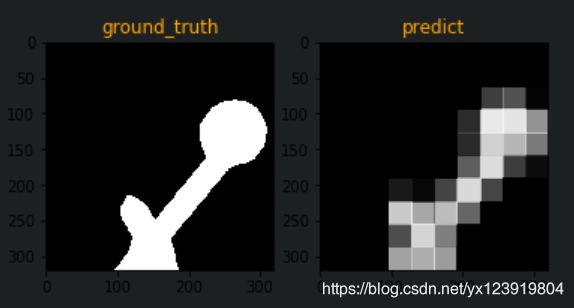

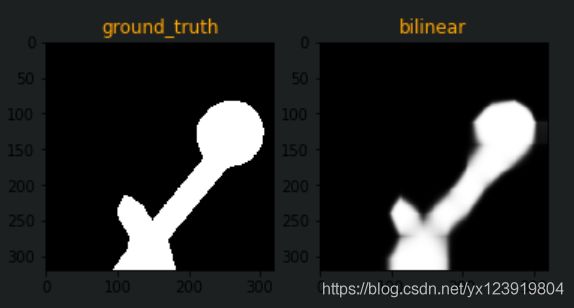

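The FCN-32s article restored the original image size in a crude way: a single 32x upsampling by interpolation, which has no learnable parameters. The interpolation mode was nearest, which is the default for UpSampling2D, and the results were poor. Simply switching the interpolation mode to bilinear improves the results considerably: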

model.add(keras.layers.UpSampling2D(size = (32, 32), interpolation = "bilinear",
                                    name = "upsamping_6"))
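To make the difference between the two interpolation modes concrete, here is a small NumPy sketch that upsamples a 2 x 2 map by a factor of 2 in both ways. It is an illustration only: the helper names `upsample_nearest` and `upsample_bilinear` are made up for this example, and the bilinear version assumes half-pixel-centre sampling with clamping at the edges, which may differ in detail from what UpSampling2D does internally.

```python
import numpy as np

def upsample_nearest(x, scale):
    """Repeat every pixel `scale` times along both axes (blocky result)."""
    return np.repeat(np.repeat(x, scale, axis=0), scale, axis=1)

def upsample_bilinear(x, scale):
    """Separable linear interpolation, first along rows, then along columns."""
    def interp_axis(a, axis):
        n = a.shape[axis]
        # Source coordinate of every output pixel (half-pixel centres, assumed).
        src = (np.arange(n * scale) + 0.5) / scale - 0.5
        # np.interp clamps coordinates that fall outside [0, n - 1].
        return np.apply_along_axis(
            lambda v: np.interp(src, np.arange(n), v), axis, a)
    return interp_axis(interp_axis(x.astype(float), 0), 1)

coarse = np.array([[0.0, 1.0],
                   [1.0, 0.0]])
nearest = upsample_nearest(coarse, 2)
bilinear = upsample_bilinear(coarse, 2)
print(nearest)   # only the original values 0 and 1, in 2 x 2 blocks
print(bilinear)  # intermediate values give a smooth transition
```

With a factor of 32 the blocks produced by nearest are 32 x 32 pixels wide, which is why the mask edges look so coarse in that mode.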

Result with nearest interpolation

Result with bilinear interpolation

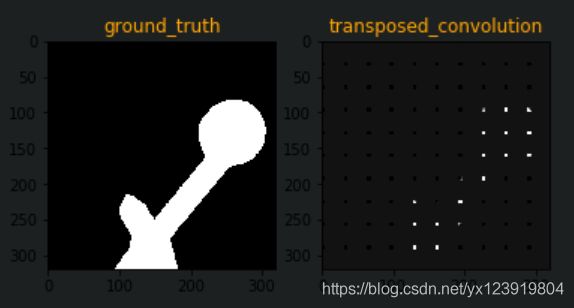

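The paper mentions transposed convolution. Its parameters are learned, so in principle it should do better than interpolation. To check this, replace the UpSampling2D layer above with a transposed convolution: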

# model.add(keras.layers.UpSampling2D(size = (32, 32), interpolation = "bilinear",
#                                     name = "upsamping_6"))
model.add(keras.layers.Conv2DTranspose(filters = 512,
                                       kernel_size = (3, 3),
                                       strides = (32, 32),
                                       padding = "same",
                                       kernel_initializer = "he_uniform",
                                       name = "Conv2DTranspose_6"))

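As you can see, the result is even worse, most likely because the parameters are a poor fit: with a 3 x 3 kernel and a stride of 32, each input pixel only writes to a 3 x 3 patch of its 32 x 32 output block, so most output pixels receive no learned weight at all. Try changing the parameters and see what happens. For the final upsampling step we will keep bilinear interpolation, and use transposed convolution only for the earlier upsampling steps that are fused with the shallower layers.

2. FCN-16s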
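One way to see why these particular parameters work badly is to count how many output pixels a kernel can reach at all. The sketch below is a simplified 1-D model, not the Keras implementation: it assumes the output length is `n_in * stride` and that input pixel `i` writes its kernel into the output starting at position `i * stride`. The function name `covered_fraction_1d` is hypothetical.

```python
import numpy as np

def covered_fraction_1d(n_in, kernel, stride):
    """Fraction of output positions touched by at least one kernel weight."""
    covered = np.zeros(n_in * stride, dtype=bool)
    for i in range(n_in):
        start = i * stride
        # Slicing clips automatically at the end of the output.
        covered[start:start + kernel] = True
    return covered.mean()

# For a square kernel the 2-D coverage is the 1-D coverage squared.
frac_3 = covered_fraction_1d(10, kernel=3, stride=32) ** 2
frac_64 = covered_fraction_1d(10, kernel=64, stride=32) ** 2
print(f"kernel 3,  stride 32: {frac_3:.2%} of output pixels covered")
print(f"kernel 64, stride 32: {frac_64:.2%} of output pixels covered")
```

Under these assumptions, a kernel at least as large as the stride is needed before every output pixel is reached, so increasing `kernel_size` is a natural first parameter to experiment with.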

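FCN-32s segments poorly mainly because the repeated convolution and downsampling lose the detail of the original image. The fix is to make use of the information in the shallower convolutional layers, the so-called skip architecture. Using a skip architecture requires changing how the network is built: because we need access to intermediate layers, the model is rewritten with the functional API as follows.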

# Define the model
from tensorflow import keras  # assumed import; use the same one as in the FCN-32s article

project_name = "fcn_segment"
channels = 3
std_shape = (320, 320, channels) # input size, std_shape[0]: img_rows, std_shape[1]: img_cols
# Set this to match your images. If your images vary in size, set both
# img_rows and img_cols to None. If your Generator pads the borders when
# loading data, std_shape is the size after padding.

img_input = keras.layers.Input(shape = std_shape, name = "input")

conv_1 = keras.layers.Conv2D(32, kernel_size = (3, 3), activation = "relu",
                             padding = "same", name = "conv_1")(img_input)
max_pool_1 = keras.layers.MaxPool2D(pool_size = (2, 2), strides = (2, 2),
                                    name = "max_pool_1")(conv_1)

conv_2 = keras.layers.Conv2D(64, kernel_size = (3, 3), activation = "relu",
                             padding = "same", name = "conv_2")(max_pool_1)
max_pool_2 = keras.layers.MaxPool2D(pool_size = (2, 2), strides = (2, 2),
                                    name = "max_pool_2")(conv_2)

conv_3 = keras.layers.Conv2D(128, kernel_size = (3, 3), activation = "relu",
                             padding = "same", name = "conv_3")(max_pool_2)
max_pool_3 = keras.layers.MaxPool2D(pool_size = (2, 2), strides = (2, 2),
                                    name = "max_pool_3")(conv_3)

conv_4 = keras.layers.Conv2D(256, kernel_size = (3, 3), activation = "relu",
                             padding = "same", name = "conv_4")(max_pool_3)
max_pool_4 = keras.layers.MaxPool2D(pool_size = (2, 2), strides = (2, 2),
                                    name = "max_pool_4")(conv_4)

conv_5 = keras.layers.Conv2D(512, kernel_size = (3, 3), activation = "relu",
                             padding = "same", name = "conv_5")(max_pool_4)
max_pool_5 = keras.layers.MaxPool2D(pool_size = (2, 2), strides = (2, 2),
                                    name = "max_pool_5")(conv_5)
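It helps to know the shape of every pooling output before wiring up the skip connections. The pure-Python sketch below traces the encoder above for a 320 x 320 input, assuming the "same"-padded convolutions keep the spatial size and each 2 x 2 pooling with stride 2 halves it (rounding down). The function name `trace_encoder` is hypothetical.

```python
def trace_encoder(height, width, filters=(32, 64, 128, 256, 512)):
    """Return the (height, width, channels) of each pooling output."""
    shapes = []
    for n_filters in filters:
        # A "same" convolution keeps height and width; pooling halves them.
        height, width = height // 2, width // 2
        shapes.append((height, width, n_filters))
    return shapes

shapes = trace_encoder(320, 320)
for idx, shape in enumerate(shapes, start=1):
    print(f"max_pool_{idx}: {shape}")
```

The trace shows that max_pool_5 is half the size of max_pool_4 and has twice as many channels, so any layer that brings the two together has to double the spatial size and produce 256 channels.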

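Built this way, the output of every intermediate layer is easy to access. Next, upsample max_pool_5 by a factor of 2 with a transposed convolution so that it has the same size as max_pool_4, then add the two together element-wise. The sum is the 16s feature map.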

# Upsample max_pool_5 by 2 with a transposed convolution to match the size of max_pool_4
up6 = keras.layers.Conv2DTranspose(256, kernel_size = (3, 3),
                                   strides = (2, 2),
                                   padding = "same",
                                   kernel_initializer = "he_normal",
                                   name = "upsamping_6")(max_pool_5)

_16s = keras.layers.add([max_pool_4, up6])

# Upsampling _16s by 16 gives the same size as the input
up7 = keras.layers.UpSampling2D(size = (16, 16), interpolation = "bilinear",
                                name = "upsamping_7")(_16s)

# The kernel here is also 3 x 3; it can be changed as in the FCN-32s article
conv_7 = keras.layers.Conv2D(1, kernel_size = (3, 3), activation = "sigmoid",
                             padding = "same", name = "conv_7")(up7)
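The element-wise addition only works when both tensors have exactly the same shape, which is why up6 uses 256 filters and a stride of 2. The NumPy sketch below mimics the fusion on random data. It is not the Keras computation: a random 1 x 1 projection followed by nearest-neighbour repetition stands in for the learned transposed convolution, purely to show the shapes.

```python
import numpy as np

rng = np.random.default_rng(0)
max_pool_4 = rng.random((20, 20, 256))  # 1/16 of a 320 x 320 input
max_pool_5 = rng.random((10, 10, 512))  # 1/32 of a 320 x 320 input

# Stand-in for Conv2DTranspose(256, strides = (2, 2)):
# reduce 512 channels to 256, then double height and width.
projection = rng.random((512, 256)) / 512
reduced = max_pool_5 @ projection                           # (10, 10, 256)
up6 = np.repeat(np.repeat(reduced, 2, axis=0), 2, axis=1)   # (20, 20, 256)

# Shapes now match, so the skip connection can be added element-wise.
fused_16s = max_pool_4 + up6
print(up6.shape, fused_16s.shape)
```

If the filter count or the stride of the upsampling layer were different, the two shapes would no longer agree and the addition would fail.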

Finally, build the model from the input to the output:

model = keras.Model(img_input, conv_7, name = project_name)

model.compile(optimizer = "adam",
              loss = "binary_crossentropy",
              metrics = ["accuracy"])

model.summary()

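That completes FCN-16s. Training and prediction work the same way as in the FCN-32s article, and the predictions are noticeably better.

3. FCN-8s

The difference between 8s and 16s is that 8s also fuses max_pool_3. The code is as follows: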
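One practical detail when training on your own images: the skip addition and the final upsampling only line up with the input if the height and width survive five halvings without rounding, which holds when both are multiples of 32. The model definition mentions padding the borders in the Generator; the sketch below shows one possible way to do that. Padding with zeros on the bottom and right is an assumption made for this example, and `pad_to_multiple` is a hypothetical helper, not code from the FCN-32s article.

```python
import numpy as np

def pad_to_multiple(image, multiple=32):
    """Zero-pad height and width up to the next multiple of `multiple`."""
    height, width = image.shape[:2]
    pad_h = (-height) % multiple
    pad_w = (-width) % multiple
    # Pad only the two spatial axes; leave channels (if any) untouched.
    pad_width = [(0, pad_h), (0, pad_w)] + [(0, 0)] * (image.ndim - 2)
    return np.pad(image, pad_width, mode="constant")

image = np.ones((330, 500, 3), dtype=np.float32)
padded = pad_to_multiple(image)
print(image.shape, "->", padded.shape)
```

The label mask has to be padded in exactly the same way so that it still aligns pixel for pixel with the network output.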

from tensorflow import keras  # assumed import; use the same one as in the FCN-32s article

project_name = "fcn_segment"
channels = 3
std_shape = (320, 320, channels) # input size, std_shape[0]: img_rows, std_shape[1]: img_cols
# Set this to match your images. If your images vary in size, set both
# img_rows and img_cols to None. If your Generator pads the borders when
# loading data, std_shape is the size after padding.

img_input = keras.layers.Input(shape = std_shape, name = "input")

conv_1 = keras.layers.Conv2D(32, kernel_size = (3, 3), activation = "relu",
                             padding = "same", name = "conv_1")(img_input)
max_pool_1 = keras.layers.MaxPool2D(pool_size = (2, 2), strides = (2, 2),
                                    name = "max_pool_1")(conv_1)

conv_2 = keras.layers.Conv2D(64, kernel_size = (3, 3), activation = "relu",
                             padding = "same", name = "conv_2")(max_pool_1)
max_pool_2 = keras.layers.MaxPool2D(pool_size = (2, 2), strides = (2, 2),
                                    name = "max_pool_2")(conv_2)

conv_3 = keras.layers.Conv2D(128, kernel_size = (3, 3), activation = "relu",
                             padding = "same", name = "conv_3")(max_pool_2)
max_pool_3 = keras.layers.MaxPool2D(pool_size = (2, 2), strides = (2, 2),
                                    name = "max_pool_3")(conv_3)

conv_4 = keras.layers.Conv2D(256, kernel_size = (3, 3), activation = "relu",
                             padding = "same", name = "conv_4")(max_pool_3)
max_pool_4 = keras.layers.MaxPool2D(pool_size = (2, 2), strides = (2, 2),
                                    name = "max_pool_4")(conv_4)

conv_5 = keras.layers.Conv2D(512, kernel_size = (3, 3), activation = "relu",
                             padding = "same", name = "conv_5")(max_pool_4)
max_pool_5 = keras.layers.MaxPool2D(pool_size = (2, 2), strides = (2, 2),
                                    name = "max_pool_5")(conv_5)

# Upsample max_pool_5 by 2 with a transposed convolution to match the size of max_pool_4
up6 = keras.layers.Conv2DTranspose(256, kernel_size = (3, 3),
                                   strides = (2, 2),
                                   padding = "same",
                                   kernel_initializer = "he_normal",
                                   name = "upsamping_6")(max_pool_5)

_16s = keras.layers.add([max_pool_4, up6])

# Upsample _16s by 2 with a transposed convolution to match the size of max_pool_3
up_16s = keras.layers.Conv2DTranspose(128, kernel_size = (3, 3),
                                      strides = (2, 2),
                                      padding = "same",
                                      kernel_initializer = "he_normal",
                                      name = "Conv2DTranspose_16s")(_16s)

_8s = keras.layers.add([max_pool_3, up_16s])

# Upsampling _8s by 8 gives the same size as the input
up7 = keras.layers.UpSampling2D(size = (8, 8), interpolation = "bilinear",
                                name = "upsamping_7")(_8s)

# The kernel here is also 3 x 3; it can be changed as in the FCN-32s article
conv_7 = keras.layers.Conv2D(1, kernel_size = (3, 3), activation = "sigmoid",
                             padding = "same", name = "conv_7")(up7)

model = keras.Model(img_input, conv_7, name = project_name)

model.compile(optimizer = "adam",
              loss = "binary_crossentropy",
              metrics = ["accuracy"])

model.summary()
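The three variants differ only in how many skip connections are fused before the final bilinear upsampling. The pure-Python sketch below summarises that pattern for the layer names used in this article. The function `fcn_plan` and its step descriptions are made up for this summary and are not part of Keras.

```python
def fcn_plan(variant, input_size=320):
    """Return (final upsampling factor, decoder steps) for an FCN variant."""
    # Downsampling factor of each pooling output relative to the input.
    strides = {"max_pool_3": 8, "max_pool_4": 16, "max_pool_5": 32}
    # Skip connections fused by each variant, deepest first.
    skips = {"32s": [], "16s": ["max_pool_4"], "8s": ["max_pool_4", "max_pool_3"]}
    if input_size % 32 != 0:
        raise ValueError("input_size should be a multiple of 32")

    size = input_size // strides["max_pool_5"]
    steps = []
    for layer in skips[variant]:
        size *= 2  # one x2 transposed convolution per fused layer
        if size != input_size // strides[layer]:
            raise ValueError(f"size mismatch when fusing {layer}")
        steps.append(f"x2 transposed conv + add {layer} -> {size} x {size}")
    factor = input_size // size
    steps.append(f"x{factor} bilinear upsampling -> {input_size} x {input_size}")
    return factor, steps

for variant in ("32s", "16s", "8s"):
    factor, steps = fcn_plan(variant)
    print(f"FCN-{variant}: " + "; ".join(steps))
```

Each additional skip connection halves the factor left for the parameter-free bilinear step, which is where the names 32s, 16s and 8s come from.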

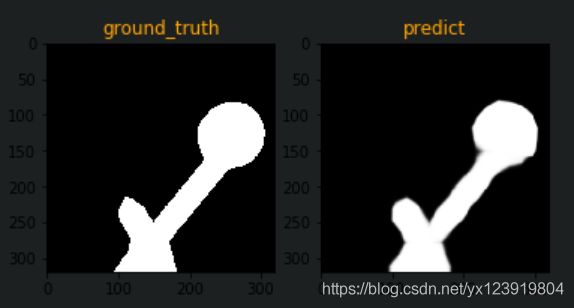

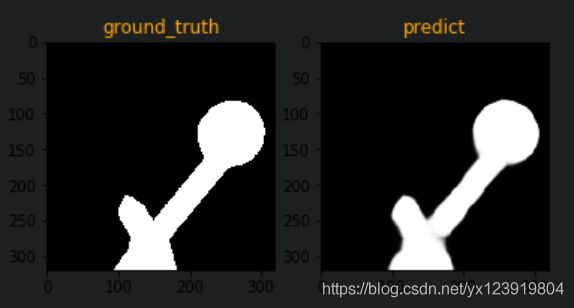

The final predictions have still not been binarized; once they are, they essentially match ground_truth.

4. Summary
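Binarization itself is a one-line threshold on the sigmoid output. The NumPy sketch below shows it on a tiny made-up probability map and, as one possible way to quantify "essentially match", computes the intersection over union against a made-up ground-truth mask. The threshold of 0.5, the IoU metric, and the helper names `binarize` and `iou` are assumptions for illustration and do not come from the article.

```python
import numpy as np

def binarize(prob_map, threshold=0.5):
    """Turn per-pixel probabilities into a 0/1 mask."""
    return (prob_map >= threshold).astype(np.uint8)

def iou(pred_mask, true_mask):
    """Intersection over union of two binary masks."""
    pred, true = pred_mask.astype(bool), true_mask.astype(bool)
    union = np.logical_or(pred, true).sum()
    if union == 0:
        return 1.0  # both masks are empty, so they agree completely
    return np.logical_and(pred, true).sum() / union

# Made-up 3 x 3 example standing in for a model prediction.
prob_map = np.array([[0.9, 0.6, 0.2],
                     [0.7, 0.4, 0.1],
                     [0.3, 0.2, 0.0]])
ground_truth = np.array([[1, 1, 0],
                         [1, 1, 0],
                         [0, 0, 0]])

mask = binarize(prob_map)
print(mask)
print(iou(mask, ground_truth))
```

For a real prediction, `prob_map` would be the model output for one image with the batch and channel axes removed.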

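That completes FCN semantic segmentation. The network structure does not have to match the paper exactly; it should follow from the characteristics of the task. Once you understand the principle (convolutions learn the features of the target, and those features are then used to restore a low-resolution map to the original image size, i.e. an Encoder-Decoder), you can vary the design however you like. Other segmentation networks are, after all, variations on FCN too. Try changing the network structure and training again to see whether the results improve.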

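Previous: Implementing FCN Semantic Segmentation in Keras and Training on Your Own Data: FCN-32s

Next: Implementing FCN Semantic Segmentation in Keras and Training on Your Own Data: Multi-class Segmentation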