[Relearning TensorFlow2] The TensorFlow2 Modeling Workflow: Titanic Survival Prediction

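The TensorFlow2 Modeling Workflow

- 1. The general workflow for implementing a neural network model with TensorFlow

- 2. The Titanic survival prediction problem

  - 2.1 Data preparation

  - 2.2 Defining the model

  - 2.3 Training the model

  - 2.4 Evaluating the model

  - 2.5 Using the model

  - 2.6 Saving the model

- References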

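In machine learning and deep learning, TensorFlow is widely used to implement models, especially neural networks. In principle, you define a neural network by building a computation graph out of tensors and then train it with TensorFlow's automatic differentiation mechanism. Since the release of TensorFlow2, the barrier to using TensorFlow has dropped considerably. For simplicity, we generally recommend implementing neural network models with TensorFlow's high-level Keras API.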

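1. The general workflow for implementing a neural network model with TensorFlow

- Prepare the data

- Define the model

- Train the model

- Evaluate the model

- Use the model

- Save the model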

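For beginners, the hardest part is usually data preparation. The data types encountered in practice include structured data, image data, text data, and time-series data. We demonstrate how to model each of these four types with TensorFlow through four examples: Titanic survival prediction (structured data), CIFAR2 image classification (images), IMDB movie-review classification (text), and predicting when the COVID-19 outbreak in China would end (time series).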

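2. The Titanic survival prediction problem

2.1 Data preparation

The goal with the Titanic dataset is to predict, from passenger information, whether each passenger survived after the Titanic struck an iceberg and sank. Structured data like this is usually preprocessed as a Pandas DataFrame. The historical data has already been split into a training set and a test set.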

Import the dependencies:

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import tensorflow as tf
from tensorflow.keras import models, layers
```

Load the data:

```python
dftrain_raw = pd.read_csv('../Data/Titanic/train.csv')
dftest_raw = pd.read_csv('../Data/Titanic/test.csv')
dftrain_raw.head(10)
```

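The columns, with the planned treatment of each in brackets:

- Survived: 0 means the passenger died, 1 means the passenger survived [label y]

- Pclass: ticket class, with three values (1, 2, 3) [one-hot encode]

- Name: passenger name [drop]

- Sex: passenger sex [convert to a boolean feature]

- Age: passenger age (has missing values) [numeric feature; add "Age is missing" as an auxiliary feature]

- SibSp: number of siblings/spouses aboard (integer) [numeric feature]

- Parch: number of parents/children aboard (integer) [numeric feature]

- Ticket: ticket number (string) [drop]

- Fare: ticket price (float, ranging from 0 to about 500) [numeric feature]

- Cabin: passenger cabin (has missing values) [add "Cabin is missing" as an auxiliary feature]

- Embarked: port of embarkation, S, C, or Q (has missing values) [one-hot encode into four dimensions: S, C, Q, nan]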
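The Embarked treatment relies on `pd.get_dummies` with `dummy_na=True`, which adds an extra indicator column for missing values. A minimal sketch on a hypothetical five-row column (not the real data) shows how each port, and the missing value, becomes a 4-dimensional one-hot vector:

```python
import numpy as np
import pandas as pd

# Hypothetical toy column mimicking Embarked: three ports plus one missing value
embarked = pd.Series(['S', 'C', 'Q', np.nan, 'S'])

# dummy_na=True adds an indicator column for NaN, so each row becomes a
# 4-dimensional one-hot vector over (C, Q, S, nan)
dummies = pd.get_dummies(embarked, dummy_na=True, dtype=int)
dummies.columns = ['Embarked_' + str(c) for c in dummies.columns]
print(dummies)
```

Categories are sorted (C, Q, S) and the NaN column comes last, which is why the preprocessing step produces `Embarked_C`, `Embarked_Q`, `Embarked_S`, `Embarked_nan`.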

Explore the data

```python
%matplotlib inline
%config InlineBackend.figure_format = 'png'
```

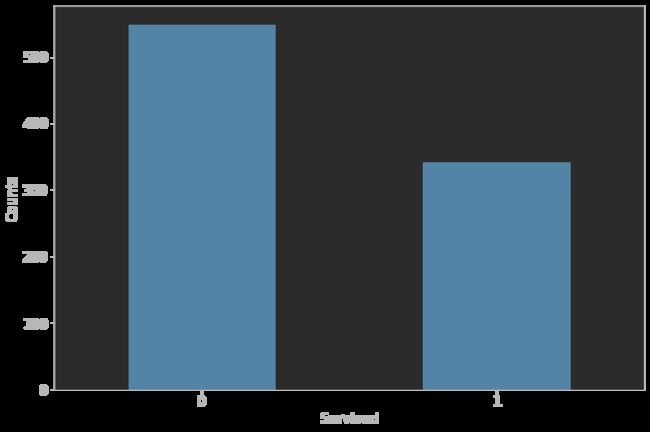

```python
# Distribution of the label
ax = dftrain_raw['Survived'].value_counts().plot(kind='bar', figsize=(12, 8), fontsize=15, rot=0)
ax.set_ylabel('Counts', fontsize=15)
ax.set_xlabel('Survived', fontsize=15)
plt.show()
```

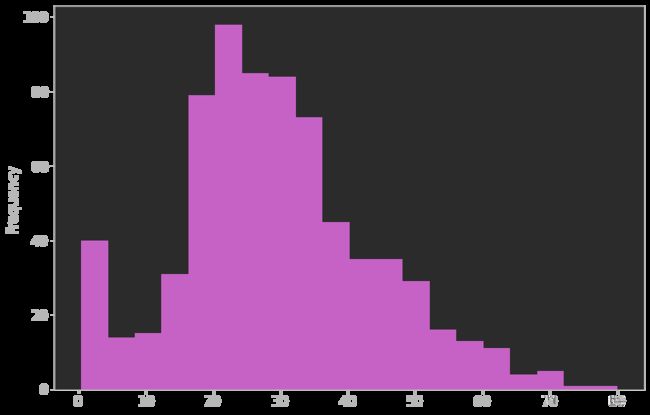

```python
%matplotlib inline
%config InlineBackend.figure_format = 'png'

# Distribution of age
ax = dftrain_raw['Age'].plot(kind='hist', bins=20, color='purple', figsize=(12, 8), fontsize=15)
ax.set_ylabel('Frequency', fontsize=15)
plt.show()
```

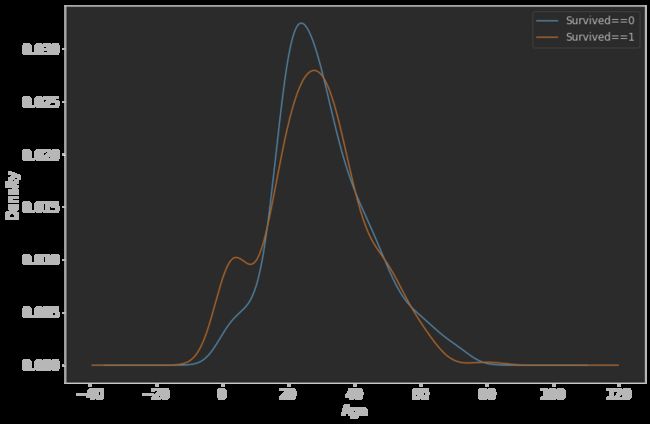

```python
%matplotlib inline
%config InlineBackend.figure_format = 'png'

# Relationship between age and the label: age density of non-survivors vs. survivors
ax = dftrain_raw.query('Survived == 0')['Age'].plot(kind='density', figsize=(12, 8), fontsize=15)
# Draw the second curve on the same axes
dftrain_raw.query('Survived == 1')['Age'].plot(kind='density', ax=ax, fontsize=15)
ax.legend(['Survived==0', 'Survived==1'], fontsize=12)
ax.set_ylabel('Density', fontsize=15)
ax.set_xlabel('Age', fontsize=15)
plt.show()
```
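The density plot compares the two age distributions visually. A numeric complement is the survival rate per age bucket: `pd.cut` assigns each age to an interval, and the mean of the 0/1 `Survived` label within an interval is that interval's survival rate. The sketch below uses hypothetical toy rows rather than the real training set, and the bucket edges are arbitrary choices for illustration; on the real data, rows with a missing Age fall outside every bucket and are dropped by the groupby.

```python
import pandas as pd

# Hypothetical toy rows; with the real data you would use dftrain_raw instead
df = pd.DataFrame({
    'Age':      [4, 8, 25, 30, 35, 45, 60, 70],
    'Survived': [1, 1, 0, 1, 0, 0, 0, 1],
})

# Assign each age to an interval (illustrative edges), then average the 0/1 label per interval
buckets = pd.cut(df['Age'], bins=[0, 12, 40, 80])
rates = df.groupby(buckets, observed=False)['Survived'].mean()
print(rates)
```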

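Define a preprocessing function that applies the treatment listed above to each column:

```python
def preprocessing(dfdata):
    dfresult = pd.DataFrame()

    # Pclass: ticket class with three values (1, 2, 3); one-hot encode
    # (dtype='int32' keeps the columns numeric; newer pandas returns bool dummies by default)
    dfPclass = pd.get_dummies(dfdata['Pclass'], dtype='int32')
    dfPclass.columns = ['Pclass_' + str(x) for x in dfPclass.columns]
    dfresult = pd.concat([dfresult, dfPclass], axis=1)

    # Sex: convert to boolean indicator columns ('female', 'male')
    dfSex = pd.get_dummies(dfdata['Sex'], dtype='int32')
    dfresult = pd.concat([dfresult, dfSex], axis=1)

    # Age: has missing values; numeric feature plus an "Age is missing" auxiliary feature
    dfresult['Age'] = dfdata['Age'].fillna(0)
    dfresult['Age_null'] = pd.isna(dfdata['Age']).astype('int32')

    # SibSp, Parch, Fare: number of siblings/spouses (integer), number of parents/children,
    # and ticket price (float, 0 to about 500); all numeric features
    dfresult['SibSp'] = dfdata['SibSp']
    dfresult['Parch'] = dfdata['Parch']
    # test.csv has a missing Fare value; fill it so predictions are not NaN
    dfresult['Fare'] = dfdata['Fare'].fillna(0)

    # Cabin: keep only a "Cabin is missing" auxiliary feature
    dfresult['Cabin_null'] = pd.isna(dfdata['Cabin']).astype('int32')

    # Embarked: port S, C, or Q (has missing values); one-hot encode into four dims S, C, Q, nan
    dfEmbarked = pd.get_dummies(dfdata['Embarked'], dummy_na=True, dtype='int32')
    dfEmbarked.columns = ['Embarked_' + str(x) for x in dfEmbarked.columns]
    dfresult = pd.concat([dfresult, dfEmbarked], axis=1)

    return dfresult
```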

Call the preprocessing function:

```python
x_train = preprocessing(dftrain_raw)
y_train = dftrain_raw['Survived'].values
x_test = preprocessing(dftest_raw)

print('x_train.shape =', x_train.shape)
print('x_test.shape =', x_test.shape)
```

Output:

```
x_train.shape = (891, 15)
x_test.shape = (418, 15)
```
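Both matrices have 15 columns because the training and test sets contain the same categories, and `dummy_na=True` creates the `Embarked_nan` column even for a split with no missing ports. If one split lacked a category, `get_dummies` would produce fewer columns for it and the model's 15-feature input would no longer match. One common safeguard, sketched here on hypothetical toy frames, is to reindex the test columns to the training columns:

```python
import pandas as pd

# Hypothetical toy frames: the "test" split happens to have no Pclass 2 passengers
train = pd.DataFrame({'Pclass': [1, 2, 3]})
test = pd.DataFrame({'Pclass': [1, 3]})

x_tr = pd.get_dummies(train['Pclass'], prefix='Pclass', dtype=int)
x_te = pd.get_dummies(test['Pclass'], prefix='Pclass', dtype=int)
print(list(x_te.columns))  # ['Pclass_1', 'Pclass_3']

# Align test columns to the training columns; a missing category becomes an all-zero column
x_te = x_te.reindex(columns=x_tr.columns, fill_value=0)
print(x_te)
```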

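2.2 Defining the model

There are three ways to build a model with the Keras API:

- Build the model layer by layer with Sequential

- Build a model of arbitrary structure with the functional API

- Build a custom model by subclassing the Model base class

Here we use the simplest option, Sequential, which stacks layers in order.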

```python
tf.keras.backend.clear_session()

model = models.Sequential()
model.add(layers.Dense(20, activation='relu', input_shape=(15,)))
model.add(layers.Dense(10, activation='relu'))
model.add(layers.Dense(1, activation='sigmoid'))

model.summary()
```

```
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense (Dense)                (None, 20)                320
_________________________________________________________________
dense_1 (Dense)              (None, 10)                210
_________________________________________________________________
dense_2 (Dense)              (None, 1)                 11
=================================================================
Total params: 541
Trainable params: 541
Non-trainable params: 0
_________________________________________________________________
```
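The Param # column can be checked by hand: a Dense layer holds a weight matrix of size inputs × units plus one bias per unit. A short sketch of that arithmetic for the 15 → 20 → 10 → 1 network above:

```python
def dense_params(n_in, n_out):
    # Weight matrix (n_in x n_out) plus one bias per output unit
    return n_in * n_out + n_out

# 15 input features, then the three Dense layers of the model
layer_sizes = [15, 20, 10, 1]
counts = [dense_params(a, b) for a, b in zip(layer_sizes[:-1], layer_sizes[1:])]
print(counts, sum(counts))  # [320, 210, 11] 541
```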

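2.3 Training the model

There are also three common ways to train a model: the built-in fit method, the built-in train_on_batch method, and a custom training loop. Here we use fit, the most common and simplest option.

```python
# Binary classification, so use the binary cross-entropy loss
model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['AUC'])
history = model.fit(x_train, y_train, batch_size=64, epochs=50, validation_split=0.2)
```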
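Binary cross-entropy averages `-(y*log(p) + (1-y)*log(1-p))` over the samples, where `y` is the 0/1 label and `p` is the sigmoid output. A small NumPy sketch on hypothetical labels and probabilities; the clipping epsilon of 1e-7 is an assumption chosen to mirror Keras's default fuzz factor, not a claim about its exact internals:

```python
import numpy as np

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    # Clip probabilities away from 0 and 1 to avoid log(0)
    y_pred = np.clip(y_pred, eps, 1 - eps)
    # Mean of -(y*log(p) + (1-y)*log(1-p)) over all samples
    return float(np.mean(-(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))))

y_true = np.array([1, 0, 1, 0])          # hypothetical labels
y_pred = np.array([0.9, 0.2, 0.6, 0.4])  # hypothetical predicted survival probabilities
loss = binary_crossentropy(y_true, y_pred)
print(loss)  # about 0.3375
```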

Training log (final epochs):

```
Epoch 46/50
12/12 [==============================] - 0s 2ms/step - loss: 0.4421 - auc: 0.8540 - val_loss: 0.3939 - val_auc: 0.8757
Epoch 47/50
12/12 [==============================] - 0s 2ms/step - loss: 0.4427 - auc: 0.8567 - val_loss: 0.4168 - val_auc: 0.8657
Epoch 48/50
12/12 [==============================] - 0s 2ms/step - loss: 0.4631 - auc: 0.8470 - val_loss: 0.4044 - val_auc: 0.8693
Epoch 49/50
12/12 [==============================] - 0s 2ms/step - loss: 0.4602 - auc: 0.8451 - val_loss: 0.3830 - val_auc: 0.8832
Epoch 50/50
12/12 [==============================] - 0s 2ms/step - loss: 0.4450 - auc: 0.8574 - val_loss: 0.3892 - val_auc: 0.8776
```
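The `12/12` in the log follows from the training settings. With `validation_split=0.2`, Keras holds out the last 20% of the rows (taken before shuffling) for validation and trains on the rest in batches of 64. The arithmetic below assumes the split index is computed by truncating `n * (1 - validation_split)`, which is consistent with the 12 steps per epoch shown:

```python
import math

n_samples = 891          # rows in x_train
validation_split = 0.2
batch_size = 64

# Assumed split rule: the first int(n * (1 - split)) rows train, the remaining rows validate
split_at = int(n_samples * (1 - validation_split))
n_train, n_val = split_at, n_samples - split_at

# The last batch is partial, hence the ceiling
steps_per_epoch = math.ceil(n_train / batch_size)
print(n_train, n_val, steps_per_epoch)  # 712 179 12
```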

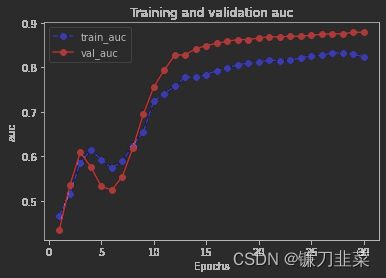

2.4 Evaluating the model

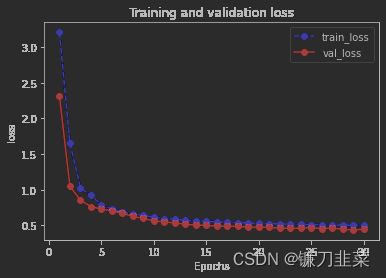

First, check how the model performs on the training and validation sets.

```python
%matplotlib inline
%config InlineBackend.figure_format = 'png'
import matplotlib.pyplot as plt

def plot_metric(history, metric):
    train_metrics = history.history[metric]
    val_metrics = history.history['val_' + metric]
    epochs = range(1, len(train_metrics) + 1)
    plt.plot(epochs, train_metrics, 'bo--')
    plt.plot(epochs, val_metrics, 'ro-')
    plt.title('Training and validation ' + metric)
    plt.xlabel('Epochs')
    plt.ylabel(metric)
    plt.legend(['train_' + metric, 'val_' + metric])
    plt.show()
```

Plot how the loss and the AUC change over the epochs:

```python
plot_metric(history, 'loss')
plot_metric(history, 'auc')
```

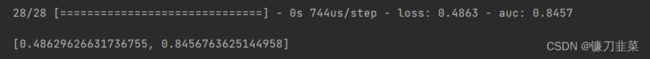

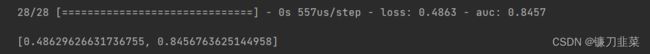

```python
model.evaluate(x=x_train, y=y_train)  # returns [loss, auc]: [0.48629626631736755, 0.8456763625144958]
```

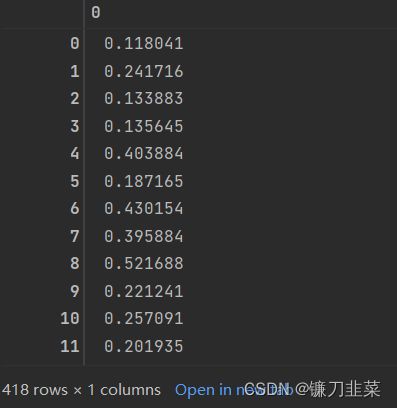

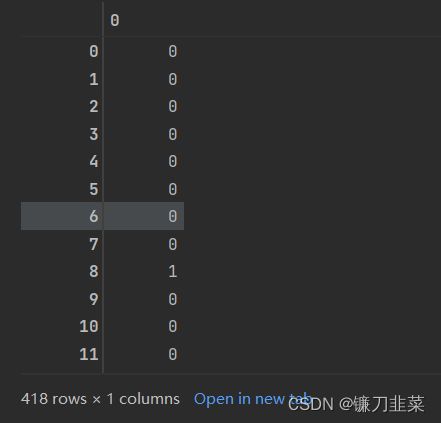
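The AUC reported by Keras (`metrics=['AUC']`) is an approximation: `tf.keras.metrics.AUC` discretizes the ROC curve with 200 thresholds by default. To sanity-check it, the exact ROC AUC can be computed as the fraction of (positive, negative) pairs in which the positive sample gets the higher score, counting ties as half. A small NumPy sketch on hypothetical labels and scores; with real data you would pass `y_train` and `model.predict(x_train).ravel()` and expect a value close to, but not necessarily identical to, the Keras metric:

```python
import numpy as np

def roc_auc(y_true, scores):
    # Exact ROC AUC: probability that a random positive outscores a random negative,
    # with ties counted as half, computed over every positive-negative pair
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    pos = scores[y_true == 1]
    neg = scores[y_true == 0]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))

y_true = [1, 0, 1, 0, 1]            # hypothetical labels
scores = [0.8, 0.3, 0.4, 0.5, 0.9]  # hypothetical predicted probabilities
auc = roc_auc(y_true, scores)
print(auc)  # 5 of the 6 positive-negative pairs are ranked correctly -> 0.8333...
```

The pairwise comparison is O(positives × negatives) in memory, which is fine at Titanic scale (891 rows); a rank-based formula scales better for large datasets.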

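2.5 Using the model

Predict probabilities:

```python
model.predict(x_test)
```

Predict classes:

```python
# Sequential.predict_classes was removed in TensorFlow 2.6;
# for a single sigmoid output, threshold the probabilities at 0.5 instead
(model.predict(x_test) > 0.5).astype('int32')
```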
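For this single sigmoid output, `model.predict` returns an array of shape `(n, 1)`. A minimal sketch of turning such probabilities into flat 0/1 labels paired with passenger ids, using hypothetical stand-in values rather than real model output:

```python
import numpy as np
import pandas as pd

# Hypothetical stand-ins for model.predict(x_test) (shape (n, 1)) and the test-set ids
probs = np.array([[0.12], [0.67], [0.50], [0.91]])
passenger_ids = pd.Series([892, 893, 894, 895], name='PassengerId')

# Flatten to 1-D and threshold at 0.5, as predict_classes did for single-unit outputs
labels = (probs.ravel() > 0.5).astype('int32')

result = pd.DataFrame({'PassengerId': passenger_ids, 'Survived': labels})
print(result)
```

Because the comparison is strictly greater-than, a probability of exactly 0.5 is labeled 0.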

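2.6 Saving the model

You can save the model either in the Keras format or in TensorFlow's native format. The former only allows the model to be restored in a Python environment, while the latter supports cross-platform model deployment. The latter is recommended.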

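- Saving in the Keras format

```python
# Save the model architecture and weights
model.save('../Output/keras_model_titanic.h5')
```

Delete the current model and load the saved one:

```python
del model  # delete the current model
model = models.load_model('../Output/keras_model_titanic.h5')
model.evaluate(x_train, y_train)
```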

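- Saving in TensorFlow's native format

Save only the weight tensors:

```python
# Save the weights; this stores only the weight tensors
model.save_weights('../Output/tf_model_titanic_weights.ckpt', save_format='tf')
```

Save the architecture and parameters:

```python
# Save the model architecture and parameters; a model saved this way is portable across platforms and easy to deploy
model.save('../Output/tf_model_titanic_saved', save_format='tf')
print('export saved model.')
```

Load the model:

```python
model_loaded = tf.keras.models.load_model('../Output/tf_model_titanic_saved')
model_loaded.evaluate(x_train, y_train)
```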

References

- 30天吃掉那只 TensorFlow2 (Eat TensorFlow2 in 30 Days): [Structured data modeling workflow example]