Unity Intelligent Voice Assistant

Unity Intelligent Voice Chatbot

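In this article I use Baidu's speech recognition, speech synthesis, and UNIT intelligent dialogue services to build a simple chatbot. Before starting, I pinned down the core features to implement: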

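(1) Text chat between the user and the robot

(2) Voice chat between the user and the robot

(3) Playback of voice chat history

(4) Switching between text chat and voice chat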
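Features (2) and (3) depend on turning the robot's text reply into audio with Baidu speech synthesis, but the post does not show that request. The following is a minimal sketch, assuming Baidu's short-text REST TTS endpoint `https://tsn.baidu.com/text2audio` and its query parameters (`tex`, `tok`, `cuid`, `ctp`, `lan`, `spd`, `pit`, `vol`, `per`, `aue`) as I understand them from Baidu's documentation. The class name `BaiduTtsUrl` is hypothetical and the parameter values should be checked against the current docs. It is plain C# so it can be tested outside Unity.

```csharp
using System;
using System.Text;

// Hypothetical helper (not the author's code): builds a GET URL for Baidu's REST TTS.
public static class BaiduTtsUrl
{
    // Assumed endpoint of Baidu short-text speech synthesis
    const string Endpoint = "https://tsn.baidu.com/text2audio";

    // Assumed meaning of aue: 3 = mp3, 4 = pcm-16k, 5 = pcm-8k, 6 = wav.
    // person selects the voice; speed/pitch/volume use the service's 0-15 style scales.
    public static string Build(string text, string accessToken, string cuid,
                               int speed = 5, int pitch = 5, int volume = 5,
                               int person = 0, int aue = 6)
    {
        // The text is URL-encoded twice so characters such as '+' survive
        // (this follows Baidu's docs as I understand them).
        string tex = Uri.EscapeDataString(Uri.EscapeDataString(text));

        var sb = new StringBuilder(Endpoint);
        sb.Append("?tex=").Append(tex);
        sb.Append("&tok=").Append(Uri.EscapeDataString(accessToken));
        sb.Append("&cuid=").Append(Uri.EscapeDataString(cuid)); // any stable client id
        sb.Append("&ctp=1&lan=zh");                             // web client, Chinese
        sb.Append("&spd=").Append(speed);
        sb.Append("&pit=").Append(pitch);
        sb.Append("&vol=").Append(volume);
        sb.Append("&per=").Append(person);
        sb.Append("&aue=").Append(aue);
        return sb.ToString();
    }
}
```

In Unity, the URL could then be loaded inside a coroutine with `UnityWebRequestMultimedia.GetAudioClip(url, AudioType.WAV)` and read with `DownloadHandlerAudioClip.GetContent(request)`; asking for WAV (`aue=6`, assumed) avoids depending on MP3 decoding support. Baidu's docs describe a JSON error body instead of audio when the request fails, so checking the response content type before decoding is worthwhile.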

Creating the UI

Core code

1. Text chat with the robot

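```csharp
// When the user finishes typing, show the message and ask UNIT for a reply
chatDialog.onEndEdit.AddListener(delegate
{
    // Ignore empty or whitespace-only input
    if (chatDialog == null || string.IsNullOrWhiteSpace(chatDialog.text))
    {
        return;
    }
    // Show the user's own message on the right side of the chat UI
    chat.AddChatMessage(ChatUI.enumChatMessageType.MessageRight, chatDialog.text);
    // Unit_NLP is expected to store the raw UNIT response in `result`;
    // JsonDecode(result) only sees it if Unit_NLP is synchronous
    Unit_NLP(chatDialog.text);
    JsonDecode(result);
});
```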
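`Unit_NLP` and `JsonDecode` are not shown in the post. Note that the listener calls `JsonDecode(result)` right after `Unit_NLP`, which is only correct if `Unit_NLP` blocks until `result` is filled; with a coroutine, the parsing has to move into its completion step. The sketch below shows what the two halves might look like, assuming the UNIT v2 chat API as I understand it: endpoint `https://aip.baidubce.com/rpc/2.0/unit/service/chat`, request fields `version`, `service_id`, `log_id`, `session_id`, `request.query`, `request.user_id`, and the reply text in `result.response_list[0].action_list[0].say`. `UnitChatSketch` is a hypothetical name, and it uses `System.Text.Json` so it runs outside Unity; in the project, the article's LitJson would play that role.

```csharp
using System;
using System.Text.Json;

// Hypothetical helper mirroring what Unit_NLP / JsonDecode might do (not the author's code).
public static class UnitChatSketch
{
    // Assumed UNIT v2 "service" endpoint; append ?access_token=... before POSTing.
    public const string ChatUrl = "https://aip.baidubce.com/rpc/2.0/unit/service/chat";

    // Builds the JSON body for one chat turn; sessionId is "" on the first turn.
    public static string BuildRequestBody(string serviceId, string sessionId,
                                          string userId, string query)
    {
        var body = new
        {
            version = "2.0",
            service_id = serviceId,
            log_id = Guid.NewGuid().ToString("N"), // unique id per request
            session_id = sessionId,
            request = new { query, user_id = userId }
        };
        return JsonSerializer.Serialize(body);
    }

    // Returns the first "say" text, or null on an error code or an empty reply.
    public static string ExtractReply(string responseJson)
    {
        using JsonDocument doc = JsonDocument.Parse(responseJson);
        JsonElement root = doc.RootElement;

        if (root.TryGetProperty("error_code", out JsonElement code) && code.GetInt32() != 0)
            return null;
        if (!root.TryGetProperty("result", out JsonElement result) ||
            !result.TryGetProperty("response_list", out JsonElement responses) ||
            responses.GetArrayLength() == 0)
            return null;
        if (!responses[0].TryGetProperty("action_list", out JsonElement actions) ||
            actions.GetArrayLength() == 0)
            return null;

        return actions[0].TryGetProperty("say", out JsonElement say) ? say.GetString() : null;
    }
}
```

Sending back the `session_id` from each reply in the next request is, as far as I know, what lets UNIT keep multi-turn context; the sketch only extracts the reply text.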

2. Voice chat with the robot
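The post gives no code for the voice step. A typical Unity flow would be: record with `Microphone.Start(currentDeviceName, false, recordMaxTime, recordFrequency)`, read `Microphone.GetPosition(currentDeviceName)` before calling `Microphone.End`, copy the samples with `saveAudioClip.GetData(samples, 0)`, send them to Baidu speech recognition, and pass the recognized text to UNIT as in the text chat. The sketch below covers only the Unity-independent middle part. Unity returns float samples in [-1, 1], while the REST ASR API, as I understand it, expects raw 16-bit little-endian PCM, base64-encoded in a JSON body posted to `https://vop.baidu.com/server_api`, and answers with a `result` array when `err_no` is 0. `AsrSketch` and its methods are hypothetical names; since the article records at 8000 Hz, check which sample rates your chosen recognition model accepts.

```csharp
using System;
using System.Text.Json;

// Hypothetical helpers for the voice path (not the author's code).
public static class AsrSketch
{
    // Converts float samples in [-1, 1] to 16-bit signed little-endian PCM.
    // count = number of samples actually recorded (e.g. Microphone.GetPosition).
    public static byte[] ToPcm16(float[] samples, int count)
    {
        var bytes = new byte[count * 2];
        for (int i = 0; i < count; i++)
        {
            float clamped = Math.Clamp(samples[i], -1f, 1f);
            short value = (short)(clamped * short.MaxValue);
            bytes[2 * i] = (byte)(value & 0xFF);            // low byte first
            bytes[2 * i + 1] = (byte)((value >> 8) & 0xFF); // then high byte
        }
        return bytes;
    }

    // Builds the JSON body for the (assumed) REST ASR endpoint, mono audio.
    public static string BuildRequestBody(byte[] pcm, int rate, string token, string cuid)
    {
        var body = new
        {
            format = "pcm",
            rate,
            channel = 1,
            cuid,
            token,
            speech = Convert.ToBase64String(pcm),
            len = pcm.Length // raw audio length in bytes, before base64
        };
        return JsonSerializer.Serialize(body);
    }

    // Returns the first recognition result, or null when err_no != 0.
    public static string ExtractText(string responseJson)
    {
        using JsonDocument doc = JsonDocument.Parse(responseJson);
        JsonElement root = doc.RootElement;
        if (!root.TryGetProperty("err_no", out JsonElement err) || err.GetInt32() != 0)
            return null;
        if (!root.TryGetProperty("result", out JsonElement results) || results.GetArrayLength() == 0)
            return null;
        return results[0].GetString();
    }
}
```

Passing `count` from the microphone position instead of the full buffer length drops the unused tail of the `recordMaxTime` buffer, which would otherwise be sent as silence. Inside Unity, `Mathf.Clamp` can replace `Math.Clamp` if the scripting runtime lacks it.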

3. Replaying voice chat history

```csharp
// Clips the user sent, keyed by message index
public int myindex = 0;
public Dictionary<int, AudioClip> myclipDic = new Dictionary<int, AudioClip>();

// Clips received from the robot, keyed by message index
public int robotindex = 0;
public Dictionary<int, AudioClip> robotclipDic = new Dictionary<int, AudioClip>();
```

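```csharp
// On each chat-bubble prefab: the fields used below
public string MessageType;   // "myself" or "robot", set when the bubble is created
int index;                   // counter value captured when the bubble is created
AudioSource source;
Button Litsen_btn;

void Start()
{
    // Read the counter that matches this bubble's speaker; assuming it was
    // incremented right after the clip was stored, the clip's key is index - 1
    index = MessageType.Equals("robot") ? UnitManager.instance.robotindex
                                        : UnitManager.instance.myindex;
    source = GameObject.Find("Canvas/robot").GetComponent<AudioSource>();
    Litsen_btn = transform.GetComponent<Button>();
    Litsen_btn.onClick.AddListener(() =>
    {
        if (MessageType.Equals("myself"))
        {
            GetValueByKey(UnitManager.instance.myclipDic, index - 1);
        }
        else if (MessageType.Equals("robot"))
        {
            GetValueByKey(UnitManager.instance.robotclipDic, index - 1);
        }
    });
}

public void GetValueByKey(Dictionary<int, AudioClip> dic, int key)
{
    // Play only if a clip was actually stored under this key
    if (dic.TryGetValue(key, out AudioClip clip) && clip != null)
    {
        source.clip = clip;
        source.Play();
    }
}
```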
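The playback code recovers each bubble's clip as `index - 1`, which only works if the counter is incremented right after the clip is stored and before the bubble reads it. A less fragile variant, sketched here as an alternative rather than the author's design, is to let the store hand back the key when a clip is added and give that key to the bubble when it is created. `ClipHistory<TClip>` is a hypothetical name; it is generic only so it can be exercised without Unity (in the project `TClip` would be `AudioClip`), and one instance per speaker would replace `myclipDic`/`robotclipDic`.

```csharp
using System.Collections.Generic;

// Hypothetical per-speaker clip store: Add() returns the key to keep on the bubble.
public class ClipHistory<TClip> where TClip : class
{
    readonly Dictionary<int, TClip> clips = new Dictionary<int, TClip>();
    int nextIndex = 0;

    // Stores the clip and returns the key it was stored under.
    public int Add(TClip clip)
    {
        int key = nextIndex++;
        clips[key] = clip;
        return key;
    }

    // Looks up a clip by the key returned from Add().
    public bool TryGet(int key, out TClip clip) => clips.TryGetValue(key, out clip);

    public int Count => clips.Count;
}
```

In Unity the bubble's button handler would then be `if (history.TryGet(key, out AudioClip clip)) { source.clip = clip; source.Play(); }`, with no arithmetic on a shared counter.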

Full code

```csharp
using LitJson;
using System;
using System.Collections;
using System.Collections.Generic;
using System.IO;
using System.Net;
using System.Text;
using System.Text.RegularExpressions;
using UnityEngine;
using UnityEngine.Networking;
using UnityEngine.UI;

public class UnitManager : MonoBehaviour
{
    // Simple singleton so chat bubbles can reach the clip dictionaries
    public static UnitManager instance;

    private void Awake()
    {
        instance = this;
    }

    // Baidu AI credentials, filled in through the Inspector
    public string api_key;
    public string secret_key;
    string accessToken = string.Empty;

    InputField chatDialog;
    Button speechToggle;                 // switches the input mode (voice or text)
    public ChatUI chat;                  // chat UI
    public bool isChooseSpeech = false;  // true while voice input is selected

    // Speech recognition
    string resulrStr = string.Empty;
    int recordFrequency = 8000;
    AudioClip saveAudioClip;                  // recorded audio
    string currentDeviceName = string.Empty;  // name of the current recording device
    AudioSource source;
    int recordMaxTime = 20;
    public Sprite[] _sp;
    public GameObject speechButton;

    // NLP
    AudioSource tts_source;
    string result = string.Empty;

    // Clips the user sent, keyed by message index
    public int myindex = 0;
    public Dictionary<int, AudioClip> myclipDic = new Dictionary<int, AudioClip>();

    // Clips received from the robot, keyed by message index
    public int robotindex = 0;
    public Dictionary<int, AudioClip> robotclipDic = new Dictionary<int, AudioClip>();

    void Start()
    {
        // Fetch the Baidu access token (runs asynchronously)
        StartCoroutine(_GetAccessToken());

        chat = GameObject.Find("Canvas/ChatUI").GetComponent<ChatUI>();
        chatDialog = GameObject.Find("Canvas/ChatUI/InputArea/InputField").GetComponent<InputField>();
        speechToggle = GameObject.Find("Canvas/ChatUI/speechToggle").GetComponent<Button>();
        tts_source = GameObject.Find("Canvas/ChatUI/speechToggle").GetComponent<AudioSource>();
        source = transform.GetComponent<AudioSource>();

        chatDialog.onEndEdit.AddListener(delegate
        {
            if (chatDialog == null || string.IsNullOrWhiteSpace(chatDialog.text))
            {
                return;
            }
            // Show the user's own message on the right side of the chat UI
            chat.AddChatMessage(ChatUI.enumChatMessageType.MessageRight, chatDialog.text);
            Unit_NLP(chatDialog.text);
            JsonDecode(result);
        });

        speechToggle.onClick.AddListener(ToChangeSpeechToggle);
    }

    // _GetAccessToken, Unit_NLP, JsonDecode and ToChangeSpeechToggle are not shown in the original post.
}
```
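`_GetAccessToken` is started in `Start()` but not shown. Baidu's AI services authenticate with an OAuth client-credentials token: the API key and secret key are exchanged at `https://aip.baidubce.com/oauth/2.0/token`, and the JSON reply carries `access_token` and `expires_in` (in seconds). The sketch below builds that URL and parses the reply; `BaiduAuthSketch` is a hypothetical name, and `System.Text.Json` stands in for LitJson so it runs outside Unity. In the coroutine, the URL would be requested with `UnityWebRequest` (whether Baidu expects GET or POST here is worth checking in its current docs) and `accessToken` set from the parsed value.

```csharp
using System;
using System.Text.Json;

// Hypothetical helper for the token step (not the author's code).
public static class BaiduAuthSketch
{
    const string TokenEndpoint = "https://aip.baidubce.com/oauth/2.0/token";

    // client_id = API key, client_secret = secret key
    public static string BuildTokenUrl(string apiKey, string secretKey) =>
        TokenEndpoint + "?grant_type=client_credentials" +
        "&client_id=" + Uri.EscapeDataString(apiKey) +
        "&client_secret=" + Uri.EscapeDataString(secretKey);

    // Returns the token and its lifetime, or (null, 0) if no token is present.
    public static (string Token, TimeSpan Lifetime) ParseToken(string json)
    {
        using JsonDocument doc = JsonDocument.Parse(json);
        JsonElement root = doc.RootElement;
        if (!root.TryGetProperty("access_token", out JsonElement token))
            return (null, TimeSpan.Zero);

        long seconds = root.TryGetProperty("expires_in", out JsonElement exp) ? exp.GetInt64() : 0;
        return (token.GetString(), TimeSpan.FromSeconds(seconds));
    }
}
```

Because the coroutine runs asynchronously, a message typed right after startup can reach `Unit_NLP` before `accessToken` is set; checking for an empty token before sending avoids a confusing error reply.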

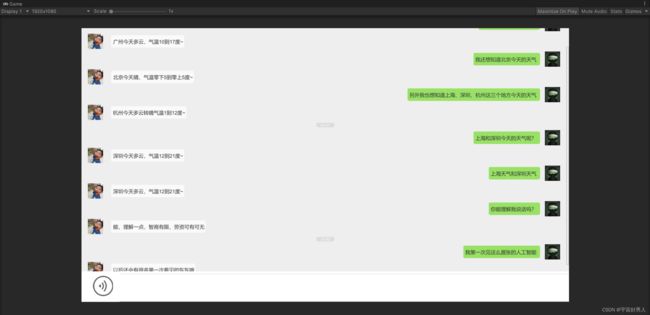

Demo

Sending text:

Sending voice:

(Audio playback can't be demonstrated here, so this is just a sample.)

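To wrap up: I'd be glad to discuss this with anyone interested in the topic, and anyone who needs the source code for a project is welcome to message me privately. My skills are limited; so far this is a simple intelligent voice chatbot that implements the basic features on Windows, and many details still need polishing. That's the end of this post; here's to a better tomorrow.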