Reduction Algorithm: Computing the Inner Product


- 0.Introduction

- 1.code

- 2.result

0.Introduction

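Some sources write the Chinese name of this technique as 归约算法 instead of 规约算法; both refer to the reduction algorithm.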

Inner product: $d=\langle x, y\rangle$

$$\mathrm{v}_{1}=\left(\mathrm{a}_{1}, \mathrm{a}_{2}, \mathrm{a}_{3}, \ldots, \mathrm{a}_{\mathrm{n}}\right)$$

$$\mathrm{v}_{2}=\left(\mathrm{b}_{1}, \mathrm{b}_{2}, \mathrm{b}_{3}, \ldots, \mathrm{b}_{\mathrm{n}}\right)$$

$$\mathrm{v}_{1} \cdot \mathrm{v}_{2}=\mathrm{a}_{1} \mathrm{b}_{1}+\mathrm{a}_{2} \mathrm{b}_{2}+\mathrm{a}_{3} \mathrm{b}_{3}+\ldots+\mathrm{a}_{\mathrm{n}} \mathrm{b}_{\mathrm{n}}$$

Several other problems can be solved with the same idea as the inner product:

- Sum

- Product

- Logical operations, e.g. and, or, xor…

- Extrema, e.g. max, min.
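
Each operation in the list above fits one pattern: an associative binary operator together with its identity element. The following is a minimal sequential C++ sketch of that pattern, not the original CUDA code; the names `reduce_with`, `dot` and `max_of` are hypothetical helpers introduced only for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <limits>
#include <vector>

// Hypothetical helper: folds `data` with an associative binary operator `op`,
// starting from that operator's identity element.
template <typename T, typename Op>
T reduce_with(const std::vector<T>& data, T identity, Op op) {
    T acc = identity;
    for (const T& v : data) {
        acc = op(acc, v);
    }
    return acc;
}

// Inner product written as "multiply element-wise, then sum-reduce".
double dot(const std::vector<double>& x, const std::vector<double>& y) {
    assert(x.size() == y.size());
    std::vector<double> prod(x.size());
    for (std::size_t i = 0; i < x.size(); ++i) {
        prod[i] = x[i] * y[i];
    }
    return reduce_with(prod, 0.0, std::plus<double>());
}

// Maximum as a reduction: the identity is the lowest representable value.
int max_of(const std::vector<int>& v) {
    return reduce_with(v, std::numeric_limits<int>::lowest(),
                       [](int a, int b) { return std::max(a, b); });
}
```

Swapping the operator and identity gives the other cases, for example `reduce_with(v, 1, std::multiplies<int>())` for a product or `reduce_with(v, 0, std::bit_xor<int>())` for xor.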

$$V=\left\{v_{0}=\left(\begin{array}{c}{e_{0}^{0}} \\ {\vdots} \\ {e_{0}^{m-1}}\end{array}\right), v_{1}=\left(\begin{array}{c}{e_{1}^{0}} \\ {\vdots} \\ {e_{1}^{m-1}}\end{array}\right), \ldots, v_{p-1}=\left(\begin{array}{c}{e_{p-1}^{0}} \\ {\vdots} \\ {e_{p-1}^{m-1}}\end{array}\right)\right\}$$

$$r=\left(\begin{array}{c}{e_{0}^{0} \oplus e_{1}^{0} \oplus \cdots \oplus e_{p-1}^{0}} \\ {\vdots} \\ {e_{0}^{m-1} \oplus e_{1}^{m-1} \oplus \cdots \oplus e_{p-1}^{m-1}}\end{array}\right)=\left(\begin{array}{c}{\oplus_{i=0}^{p-1} e_{i}^{0}} \\ {\vdots} \\ {\oplus_{i=0}^{p-1} e_{i}^{m-1}}\end{array}\right)$$
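
The definition above can be read as "combine the $p$ vectors component by component with the operator $\oplus$". A small C++ sketch of that reading follows; `reduce_columns` is a hypothetical name, and the code is a sequential illustration under the assumption that all vectors have the same length $m$, not a parallel implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical helper: combines p vectors of length m element by element,
// so that r[j] = V[0][j] (+) V[1][j] (+) ... (+) V[p-1][j].
// `op` plays the role of the operator (+) and must be associative.
template <typename T, typename Op>
std::vector<T> reduce_columns(const std::vector<std::vector<T>>& V, Op op) {
    assert(!V.empty());
    std::vector<T> r = V[0];                      // start from v_0
    for (std::size_t i = 1; i < V.size(); ++i) {
        assert(V[i].size() == r.size());          // every v_i has m components
        for (std::size_t j = 0; j < r.size(); ++j) {
            r[j] = op(r[j], V[i][j]);             // fold e_i^j into component j
        }
    }
    return r;
}
```

For instance, with `V = {{1, 2, 3}, {4, 5, 6}, {7, 8, 9}}` and `std::plus<int>()` as the operator, the call `reduce_columns(V, std::plus<int>())` yields the component-wise sums `{12, 15, 18}`.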

1.code

The explanation is given in the code comments:

/* dot product of two vectors: d = <x, y> */

#include "reduction_aux.h"

#include

The overall idea is split into three stages:

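- First pass, block size 256: each block reduces 256 elements to one partial sum, so the array length shrinks by a factor of 256. For a large array the result is still long, e.g. 2.56 million elements shrink to 10,000;

- Second pass: apply the same algorithm again to the partial sums;

- Third pass: use a single block to reduce what remains to the final result.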
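
The three passes above can be sketched on the CPU as follows. This is only an illustration of how the array shrinks per pass, under the assumption that each block sums `block_size` consecutive elements; `block_partial_sums` and `multi_pass_sum` are hypothetical names, and on a GPU the blocks of one pass would run in parallel rather than in a loop.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One pass: every "block" of `block_size` consecutive elements is summed into
// a single partial sum, so the output has ceil(n / block_size) elements.
std::vector<double> block_partial_sums(const std::vector<double>& in,
                                       std::size_t block_size) {
    assert(block_size > 0);
    std::vector<double> out((in.size() + block_size - 1) / block_size, 0.0);
    for (std::size_t i = 0; i < in.size(); ++i) {
        out[i / block_size] += in[i];
    }
    return out;
}

// Repeats the pass until a single block can hold what is left, then lets
// that one block produce the final value.
double multi_pass_sum(std::vector<double> data, std::size_t block_size = 256) {
    while (data.size() > block_size) {
        data = block_partial_sums(data, block_size);
    }
    std::vector<double> last = block_partial_sums(data, block_size);
    return last.empty() ? 0.0 : last[0];
}
```

With 2,560,000 elements and a block size of 256, the lengths after each pass are 10,000, then 40, then 1, which matches the three stages.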

As sketched on scratch paper:

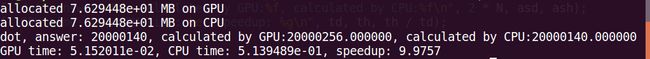

2.result

Test: comment out the one statement in the else branch:

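    /* load data to shared mem: load the inputs and store their products in shared memory */
    if (idx < N) {
        sdata[tid] = x[idx] * y[idx];
    }
    else {
        //sdata[tid] = 0; // more threads are launched than there are elements (N); try commenting this line out, the result will surely be garbage.
    }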

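I expected garbage output. Surprisingly it was not garbage, but the result was still wrong. Execution proceeds block by block, so the extra threads still run and their shared-memory slots still take part in the reduction. The slots belonging to those unused threads must therefore be set to 0, otherwise the result is wrong!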
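
The effect can be reproduced deterministically with a CPU emulation of a single block. This is a sketch under stated assumptions, not the original kernel: `block_dot` is a hypothetical function, the vector `sdata` stands in for shared memory, and its stale contents are chosen by the caller to imitate memory that was never initialised.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// CPU emulation of one block's work. `sdata` arrives holding whatever a
// previous use left behind. Its size is the block size and is assumed to be
// a power of two.
double block_dot(const std::vector<double>& x, const std::vector<double>& y,
                 std::size_t block_start, std::vector<double>& sdata,
                 bool zero_pad) {
    assert(x.size() == y.size());
    const std::size_t block_size = sdata.size();
    const std::size_t n = x.size();
    for (std::size_t tid = 0; tid < block_size; ++tid) {
        const std::size_t idx = block_start + tid;
        if (idx < n) {
            sdata[tid] = x[idx] * y[idx];
        } else if (zero_pad) {
            sdata[tid] = 0.0;  // the statement under test
        }
    }
    // Tree reduction: halve the active range until sdata[0] holds the sum.
    for (std::size_t s = block_size / 2; s > 0; s /= 2) {
        for (std::size_t tid = 0; tid < s; ++tid) {
            sdata[tid] += sdata[tid + s];
        }
    }
    return sdata[0];
}
```

With `x = (1, 2, 3)`, `y = (4, 5, 6)`, a block of 4 slots and every slot pre-filled with the stale value 100, the call with `zero_pad = true` gives 32, while `zero_pad = false` gives 132: a plausible-looking number rather than garbage, but wrong, because the fourth slot was added into the sum.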