图像分类篇-2:AlexNet

目录

前言

AlexNet网络介绍

使用Pytorch搭建AlexNet并训练

model.py

train.py

predict.py

课后:如何训练自己的数据集

前言

这个是按照B站up主的教程学习这方面知识的时候自己做的的笔记和总结,可能有点乱,主要是按照我自己的记录习惯

参考内容来自:

- up主的b站链接:霹雳吧啦Wz视频专辑-霹雳吧啦Wz视频合集-哔哩哔哩视频

- up主将代码和ppt都放在了github:https://github.com/WZMIAOMIAO

- up主的csdn博客:深度学习在图像处理中的应用(tensorflow2.4以及pytorch1.10实现)_太阳花的小绿豆的博客-CSDN博客_深度学习图像处理需要哪些软件

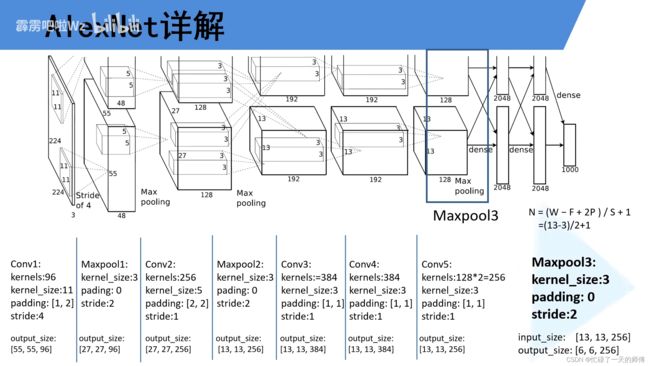

AlexNet网络介绍

本次训练使用数据集——花数据集

下载链接:https://storage.googleapis.com/download.tensorflow.org/example_images/flower_photos.tgz

up主给的存放步骤如下:

因为我是用conda里面自己创建的虚拟环境中的python运行的,所以我自己的实际操作如下:

使用Pytorch搭建AlexNet并训练

项目目录如下:

|-data_set

|-flower_data

|-flower_photos # 最开始通过链接下载的数据集

|-train # 最开始通过链接下载的数据集通过split_data分成训练集和验证集

|-val

|-split_data.py

|-imageprocessing

|-AlexNet

|-class_indices.json

|-model.py

|-predict.py

|-train.py

|-tulip.jpg # 郁金香图片,预测使用的

model.py

import torch.nn as nn

import torch

class AlexNet(nn.Module): # 创建一个类型AlexNet,继承nn.Module这个父类

def __init__(self, num_classes=1000, init_weights=False):

super(AlexNet, self).__init__()

self.features = nn.Sequential(

nn.Conv2d(3, 48, kernel_size=11, stride=4, padding=2), # input[3, 224, 224] output[48, 55, 55]

nn.ReLU(inplace=True), # inplace 可以理解为pytorch增加计算量降低内存使用量的一种方法,即通过该方法在内存中载入更大的模型

nn.MaxPool2d(kernel_size=3, stride=2), # output[48, 27, 27]

nn.Conv2d(48, 128, kernel_size=5, padding=2), # output[128, 27, 27]

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=3, stride=2), # output[128, 13, 13]

nn.Conv2d(128, 192, kernel_size=3, padding=1), # output[192, 13, 13]

nn.ReLU(inplace=True),

nn.Conv2d(192, 192, kernel_size=3, padding=1), # output[192, 13, 13]

nn.ReLU(inplace=True),

nn.Conv2d(192, 128, kernel_size=3, padding=1), # output[128, 13, 13]

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=3, stride=2), # output[128, 6, 6]

)

# nn.Sequential可以将一些系列结构打包 组成一个新的结构(网络层次多时采用,可以精简代码)

# 这里的features为专门提取图像特征的一个结构

self.classifier = nn.Sequential(

nn.Dropout(p=0.5), # p代表随机失活的一个比例,一般默认为0.5,这里只是列出来方便看

nn.Linear(128 * 6 * 6, 2048),

nn.ReLU(inplace=True),

nn.Dropout(p=0.5),

nn.Linear(2048, 2048),

nn.ReLU(inplace=True),

nn.Linear(2048, num_classes),

) # classifier 包含了最后三层全连接层,这里是一个分类器

if init_weights: # 初始化权重

self._initialize_weights()

def forward(self, x):

x = self.features(x)

x = torch.flatten(x, start_dim=1) # 展平处理

x = self.classifier(x) # 展平后得到输出

return x # 这个就是分类输出

def _initialize_weights(self):

for m in self.modules(): # 首先遍历self.modules这个模块,这个模块继承父类nn.module

if isinstance(m, nn.Conv2d): # 判断层结构是否为给定的这个类型

nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu') #kaiming_normal_凯明初始化 何凯明提出来的

if m.bias is not None:

nn.init.constant_(m.bias, 0)

elif isinstance(m, nn.Linear):

nn.init.normal_(m.weight, 0, 0.01) # 均值0, 方差0.01

nn.init.constant_(m.bias, 0) # bias初始化为0

train.py

import os

import sys

import json

import time

import torch

import torch.nn as nn

from torchvision import transforms, datasets, utils

import matplotlib.pyplot as plt

import numpy as np

import torch.optim as optim

from tqdm import tqdm

from model import AlexNet

def main():

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# 如果当前有可以使用的gpu设备就默认使用硬件设备上第一块gpu设备

print("using {} device.".format(device))

data_transform = {

# 训练集

"train": transforms.Compose([transforms.RandomResizedCrop(224), # 随机裁剪 224* 224

transforms.RandomHorizontalFlip(), # 随机翻转

transforms.ToTensor(), # 转换成tensor

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]), # 标准化处理

# 验证集

"val": transforms.Compose([transforms.Resize((224, 224)), # cannot 224, must (224, 224)

transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])}

# os.getcwd()获取当前文件所在的目录// os.path.join将两个路径连接在一起// ..代表返回上一层目录// ../..代表返回上上层目录

data_root = os.path.abspath(os.path.join(os.getcwd(), "../..")) # get data root path

image_path = os.path.join(data_root, "data_set", "flower_data") # flower data set path

assert os.path.exists(image_path), "{} path does not exist.".format(image_path)

train_dataset = datasets.ImageFolder(root=os.path.join(image_path, "train"), # 载入的是训练集的数据

transform=data_transform["train"]) # 所采用的数据预处理是刚刚定义的data_transform,传入train,会返回训练集对应的预处理方式

# datasets.ImageFolder 加载数据集

train_num = len(train_dataset)

# {'daisy':0, 'dandelion':1, 'roses':2, 'sunflower':3, 'tulips':4}

flower_list = train_dataset.class_to_idx #获取分类名称对应的索引

cla_dict = dict((val, key) for key, val in flower_list.items()) # 遍历刚刚所获得的字典,将key和val返回

# write dict into json file

json_str = json.dumps(cla_dict, indent=4) # 将cla_dict进行编码,编码成json的格式

with open('class_indices.json', 'w') as json_file: # 打开class_indices.json文件(索引与类别名称的对应关系),保存到一个json文件当中,方便预测的时候读取信息

json_file.write(json_str)

batch_size = 32

nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8]) # number of workers

print('Using {} dataloader workers every process'.format(nw))

train_loader = torch.utils.data.DataLoader(train_dataset,

batch_size=batch_size, shuffle=True,

num_workers=0) # num_workers加载过程中所使用的线程数,windows下为0,现在好像windows系统可以不一定要0了看电脑设备

validate_dataset = datasets.ImageFolder(root=os.path.join(image_path, "val"),

transform=data_transform["val"]) # ImageFolder载入测试集及预处理函数

val_num = len(validate_dataset)

validate_loader = torch.utils.data.DataLoader(validate_dataset,

batch_size=batch_size, shuffle=False,

num_workers=nw)

# batch_size=4 只查看其中4张图片

print("using {} images for training, {} images for validation.".format(train_num,

val_num))

# 下面是之前LeNet讲过的如何去查看数据集的代码,想运行尝试需要将val中batch-size改成4,shuffle改为ture

# test_data_iter = iter(validate_loader)

# test_image, test_label = test_data_iter.next()

#

# def imshow(img):

# img = img / 2 + 0.5 # unnormalize

# npimg = img.numpy()

# plt.imshow(np.transpose(npimg, (1, 2, 0)))

# plt.show()

#

# print(' '.join('%5s' % cla_dict[test_label[j].item()] for j in range(4)))

# imshow(utils.make_grid(test_image))

net = AlexNet(num_classes=5, init_weights=True) # 传入参数类别5,花分类5个类别

net.to(device) # 分配到刚刚指定的设备上

loss_function = nn.CrossEntropyLoss() # 损失函数 交叉熵

# pata = list(net.parameters()) # 之前用来调试查看模型参数的,这里用不到

optimizer = optim.Adam(net.parameters(), lr=0.0002) # Adam优化器,优化网络中所有参数

epochs = 10

save_path = './AlexNet.pth' # 保存权重的路径

best_acc = 0.0

train_steps = len(train_loader)

for epoch in range(epochs): # 迭代10次

# train

net.train() # 我们想使用dropout,但是不希望一直使用,所以用net.train() net.eval()来管理,这两句也可以管理后面的BN层

# 调用net.train()就会使用dropout方法

# 调用net.eval()就会关闭dropout方法

running_loss = 0.0

train_bar = tqdm(train_loader, file=sys.stdout)

t1 = time.perf_counter() # 用来测试gpu cpu时间的

for step, data in enumerate(train_bar):

images, labels = data # 数据分为图像和对应的标签

optimizer.zero_grad() # 清空之前的梯度信息

outputs = net(images.to(device)) # 训练图像指定到设备当中

loss = loss_function(outputs, labels.to(device)) # loss_function计算预测值和真实值之间的损失,将labels指定到设备当中

loss.backward() # 损失反向传播

optimizer.step() # 更新每一个节点的参数

# print statistics

running_loss += loss.item()

# 打印训练进度

train_bar.desc = "train epoch[{}/{}] loss:{:.3f}".format(epoch + 1,

epochs,

loss)

print()

print(time.perf_counter()-t1) # 通过时间差 测试gpu cpu时间的

# 训练完成进行验证

# validate

net.eval() # 关闭dropout方法

acc = 0.0 # accumulate accurate number / epoch

with torch.no_grad(): # 验证过程中不计算损失梯度

val_bar = tqdm(validate_loader, file=sys.stdout)

for val_data in val_bar:

val_images, val_labels = val_data

outputs = net(val_images.to(device))

predict_y = torch.max(outputs, dim=1)[1] # torch.max求出输出中预测最有可能的类别

acc += torch.eq(predict_y, val_labels.to(device)).sum().item() # 预测与真实标签进行对比

val_accurate = acc / val_num

print('[epoch %d] train_loss: %.3f val_accuracy: %.3f' %

(epoch + 1, running_loss / train_steps, val_accurate))

if val_accurate > best_acc: # 当前准确率大于历史最优

best_acc = val_accurate

torch.save(net.state_dict(), save_path) # 保存当前权重

print('Finished Training')

if __name__ == '__main__':

main()

训练结果如下

D:\qiaochensoftware\minicondapython38\minicondainstall\envs\qc\python.exe E:/imageprocessing/AlexNet/train.py

using cuda:0 device.

Using 8 dataloader workers every process

using 3306 images for training, 364 images for validation.

train epoch[1/10] loss:1.121: 100%|██████████| 104/104 [00:13<00:00, 7.44it/s]13.985368

100%|██████████| 12/12 [00:14<00:00, 1.17s/it]

[epoch 1] train_loss: 1.396 val_accuracy: 0.396

train epoch[2/10] loss:1.049: 100%|██████████| 104/104 [00:11<00:00, 9.39it/s]11.076557300000001

100%|██████████| 12/12 [00:14<00:00, 1.17s/it]

[epoch 2] train_loss: 1.216 val_accuracy: 0.503

train epoch[3/10] loss:0.906: 100%|██████████| 104/104 [00:11<00:00, 9.22it/s]11.279007500000006

100%|██████████| 12/12 [00:14<00:00, 1.19s/it]

[epoch 3] train_loss: 1.114 val_accuracy: 0.530

train epoch[4/10] loss:0.981: 100%|██████████| 104/104 [00:11<00:00, 9.17it/s]11.343309899999994

100%|██████████| 12/12 [00:14<00:00, 1.17s/it]

[epoch 4] train_loss: 1.059 val_accuracy: 0.577

train epoch[5/10] loss:0.934: 100%|██████████| 104/104 [00:11<00:00, 9.37it/s]11.094776199999998

100%|██████████| 12/12 [00:13<00:00, 1.16s/it]

[epoch 5] train_loss: 1.000 val_accuracy: 0.615

train epoch[6/10] loss:0.715: 100%|██████████| 104/104 [00:11<00:00, 9.37it/s]11.093809300000004

100%|██████████| 12/12 [00:14<00:00, 1.17s/it]

[epoch 6] train_loss: 0.950 val_accuracy: 0.665

train epoch[7/10] loss:1.282: 100%|██████████| 104/104 [00:11<00:00, 9.34it/s]11.128479199999987

100%|██████████| 12/12 [00:14<00:00, 1.18s/it]

[epoch 7] train_loss: 0.912 val_accuracy: 0.665

train epoch[8/10] loss:0.880: 100%|██████████| 104/104 [00:11<00:00, 9.45it/s]11.011119999999977

100%|██████████| 12/12 [00:13<00:00, 1.15s/it]

[epoch 8] train_loss: 0.883 val_accuracy: 0.659

train epoch[9/10] loss:0.382: 100%|██████████| 104/104 [00:11<00:00, 9.29it/s]11.191952900000018

100%|██████████| 12/12 [00:13<00:00, 1.15s/it]

[epoch 9] train_loss: 0.855 val_accuracy: 0.687

train epoch[10/10] loss:0.704: 100%|██████████| 104/104 [00:11<00:00, 9.27it/s]11.221249999999998

100%|██████████| 12/12 [00:14<00:00, 1.18s/it]

[epoch 10] train_loss: 0.805 val_accuracy: 0.695

Finished TrainingProcess finished with exit code 0

训练完成会保存一个准确率最高的模型参数

predict.py

import os

import json

import torch

from PIL import Image

from torchvision import transforms

import matplotlib.pyplot as plt

from model import AlexNet

def main():

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# 首先定义一个预处理函数,对载入图片进行预处理

data_transform = transforms.Compose(

[transforms.Resize((224, 224)),

transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

# load image

img_path = "tulip.jpg" # 直接载入一张图像,就和当前py文件一个目录下哈

assert os.path.exists(img_path), "file: '{}' dose not exist.".format(img_path)

img = Image.open(img_path)

plt.imshow(img) # 展示一下这张图片

# [N, C, H, W]

img = data_transform(img) # 对图片进行预处理

# expand batch dimension

img = torch.unsqueeze(img, dim=0) # 扩充一个维度,因为所有图片只要[高度,宽度,深度]三个维度,增加一个batch维度,并将该维度提到最前面

# read class_indict

json_path = './class_indices.json'

assert os.path.exists(json_path), "file: '{}' dose not exist.".format(json_path)

with open(json_path, "r") as f: # 读取索引对应的类别名称

class_indict = json.load(f)

# create model

model = AlexNet(num_classes=5).to(device) # 初始化网络

# load model weights

weights_path = "./AlexNet.pth"

assert os.path.exists(weights_path), "file: '{}' dose not exist.".format(weights_path)

model.load_state_dict(torch.load(weights_path))

model.eval() # 进入eval模型,即关闭dropout

with torch.no_grad(): # 不跟踪损失梯度

# predict class

output = torch.squeeze(model(img.to(device))).cpu()

predict = torch.softmax(output, dim=0) # softmax处理变成概率分布

predict_cla = torch.argmax(predict).numpy() # argmax获取概率最大处的索引值

print_res = "class: {} prob: {:.3}".format(class_indict[str(predict_cla)],

predict[predict_cla].numpy())

plt.title(print_res)

for i in range(len(predict)):

print("class: {:10} prob: {:.3}".format(class_indict[str(i)],

predict[i].numpy()))

plt.show()

if __name__ == '__main__':

main()

预测结果

课后:如何训练自己的数据集

up主视频最后教的,本人懒得实验了,但是记录下来以后用

本来这个项目最后的目录如下

|-data_set

|-flower_data

|-flower_photos # 最开始通过链接下载的数据集

|-train # 最开始通过链接下载的数据集通过split_data分成训练集和验证集

|-val

|-split_data.py

|-imageprocessing

|-AlexNet

|-class_indices.json

|-model.py

|-predict.py

|-train.py

|-tulip.jpg # 郁金香图片,预测使用的

直接进入flower_data文件夹下把文件删除,放自己的,按照这个花的一样摆放好,然后调用split_data.py就好了,后续需要修改参数网络train.py中的num_classes,这个是数据集类别。predict.py中num_classes也需要更改