卷积神经网络的猫狗识别

文章目录

- 一、准备工作

- 二、猫狗识别

-

- 2.1、下载数据集

-

- 2.1.1、 图片分类

- 2.1.2、图片数量统计

- 2.2、卷积神经网络CNN

-

- 2.2.1、网络模型搭建

- 2.2.2、图像生成器读取文件中数据

- 2.2.3、训练

- 2.2.4、保存模型

- 2.2.5、结果可视化

- 2.3、对模型进行调整

-

- 2.3.1、图像增强方法

- 2.3.2、模型调整

- 2.3.3、保存模型

- 2.3.4、绘制结果

一、准备工作

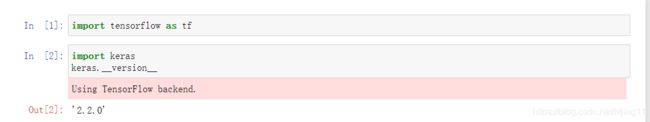

由于anaconda3中keras,tensorflow和Python版本对应的问题,如果不提前解决好的话后面会不停报错,然后就得重新再做,所以最好一开始就先把这些问题解决。

①首先,打开anaconda先新建一个环境,选择python3.6

②打开anaconda prompt,先激活刚才新建的环境

activate 名字

③下载相关库tensorflow(默认下载的一般都是2.X,后面就会各种报错,版本不支持,所以这里指定下载版本,我下的是1.10.0)

conda install --channel https://conda.anaconda.org/anaconda tensorflow=1.10.0

④下载keras(可以自己去找一下对应版本,tensorflow1.10对应keras2.2.0)

pip install keras==2.2.0

⑤下载完之后可以测试一下

注意:这里可以再看一下numpy的版本,如果版本过高后面也会报错,所以提前看一下如果版本高于1.16可以先卸载重新安装符合要求的版本。

(还有些东西可以中途报错之后再去装,装完之后重新执行那一步就行了)

二、猫狗识别

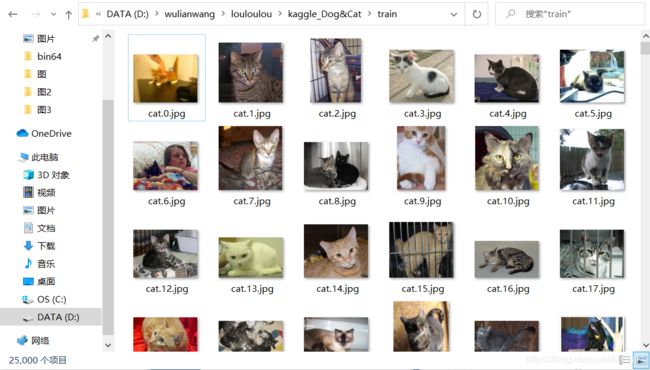

2.1、下载数据集

(这里使用的数据集是从Kaggle网站上下载的)

这是分类之后的目录:

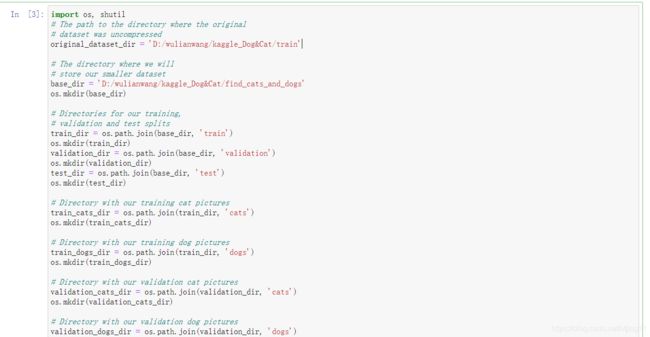

2.1.1、 图片分类

import os, shutil

# The path to the directory where the original

# dataset was uncompressed

original_dataset_dir = 'D:/wulianwang/louloulou/kaggle_Dog&Cat/train'

# The directory where we will

# store our smaller dataset

base_dir = 'D:/wulianwang/louloulou/kaggle_Dog&Cat/find_cats_and_dogs'

os.mkdir(base_dir)

# Directories for our training,

# validation and test splits

train_dir = os.path.join(base_dir, 'train')

os.mkdir(train_dir)

validation_dir = os.path.join(base_dir, 'validation')

os.mkdir(validation_dir)

test_dir = os.path.join(base_dir, 'test')

os.mkdir(test_dir)

# Directory with our training cat pictures

train_cats_dir = os.path.join(train_dir, 'cats')

os.mkdir(train_cats_dir)

# Directory with our training dog pictures

train_dogs_dir = os.path.join(train_dir, 'dogs')

os.mkdir(train_dogs_dir)

# Directory with our validation cat pictures

validation_cats_dir = os.path.join(validation_dir, 'cats')

os.mkdir(validation_cats_dir)

# Directory with our validation dog pictures

validation_dogs_dir = os.path.join(validation_dir, 'dogs')

os.mkdir(validation_dogs_dir)

# Directory with our validation cat pictures

test_cats_dir = os.path.join(test_dir, 'cats')

os.mkdir(test_cats_dir)

# Directory with our validation dog pictures

test_dogs_dir = os.path.join(test_dir, 'dogs')

os.mkdir(test_dogs_dir)

# Copy first 1000 cat images to train_cats_dir

fnames = ['cat.{}.jpg'.format(i) for i in range(1000)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(train_cats_dir, fname)

shutil.copyfile(src, dst)

# Copy next 500 cat images to validation_cats_dir

fnames = ['cat.{}.jpg'.format(i) for i in range(1000, 1500)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(validation_cats_dir, fname)

shutil.copyfile(src, dst)

# Copy next 500 cat images to test_cats_dir

fnames = ['cat.{}.jpg'.format(i) for i in range(1500, 2000)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(test_cats_dir, fname)

shutil.copyfile(src, dst)

# Copy first 1000 dog images to train_dogs_dir

fnames = ['dog.{}.jpg'.format(i) for i in range(1000)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(train_dogs_dir, fname)

shutil.copyfile(src, dst)

# Copy next 500 dog images to validation_dogs_dir

fnames = ['dog.{}.jpg'.format(i) for i in range(1000, 1500)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(validation_dogs_dir, fname)

shutil.copyfile(src, dst)

# Copy next 500 dog images to test_dogs_dir

fnames = ['dog.{}.jpg'.format(i) for i in range(1500, 2000)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(test_dogs_dir, fname)

shutil.copyfile(src, dst)

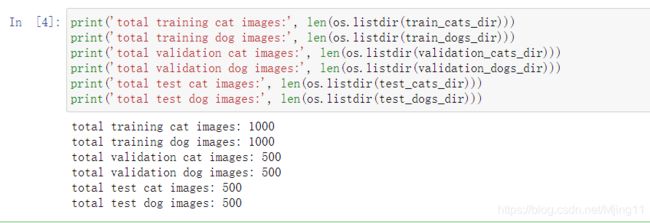

2.1.2、图片数量统计

print('total training cat images:', len(os.listdir(train_cats_dir)))

print('total training dog images:', len(os.listdir(train_dogs_dir)))

print('total validation cat images:', len(os.listdir(validation_cats_dir)))

print('total validation dog images:', len(os.listdir(validation_dogs_dir)))

print('total test cat images:', len(os.listdir(test_cats_dir)))

print('total test dog images:', len(os.listdir(test_dogs_dir)))

猫狗训练图片各1000张,验证图片各500张,测试图片各500张。

2.2、卷积神经网络CNN

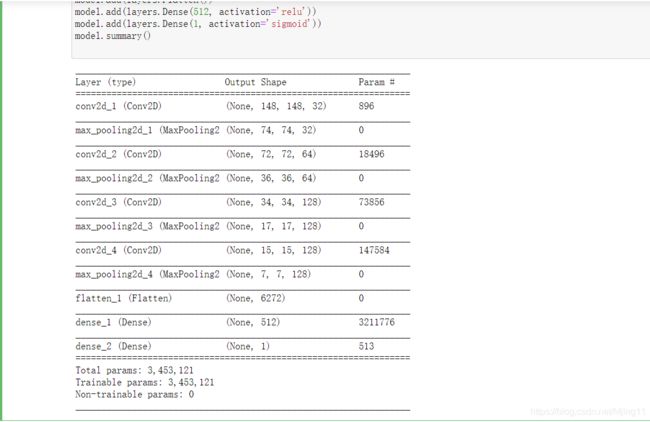

2.2.1、网络模型搭建

from keras import layers

from keras import models

model = models.Sequential()

model.add(layers.Conv2D(32, (3, 3), activation='relu',

input_shape=(150, 150, 3)))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(64, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Flatten())

model.add(layers.Dense(512, activation='relu'))

model.add(layers.Dense(1, activation='sigmoid'))

model.summary()

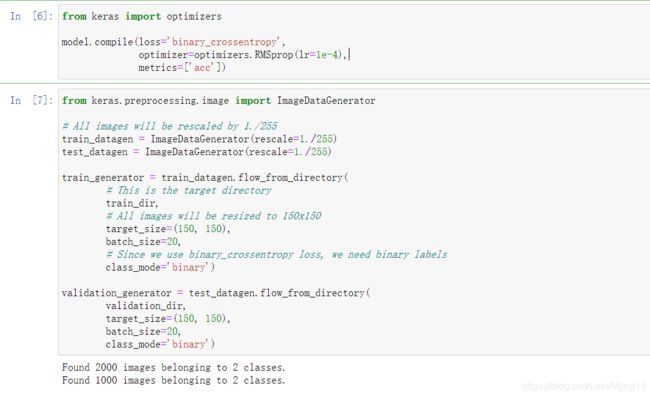

2.2.2、图像生成器读取文件中数据

from keras import optimizers

model.compile(loss='binary_crossentropy',

optimizer=optimizers.RMSprop(lr=1e-4),

metrics=['acc'])

from keras.preprocessing.image import ImageDataGenerator

# All images will be rescaled by 1./255

train_datagen = ImageDataGenerator(rescale=1./255)

test_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(

# This is the target directory

train_dir,

# All images will be resized to 150x150

target_size=(150, 150),

batch_size=20,

# Since we use binary_crossentropy loss, we need binary labels

class_mode='binary')

validation_generator = test_datagen.flow_from_directory(

validation_dir,

target_size=(150, 150),

batch_size=20,

class_mode='binary')

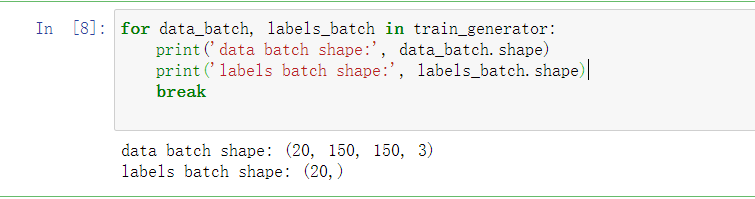

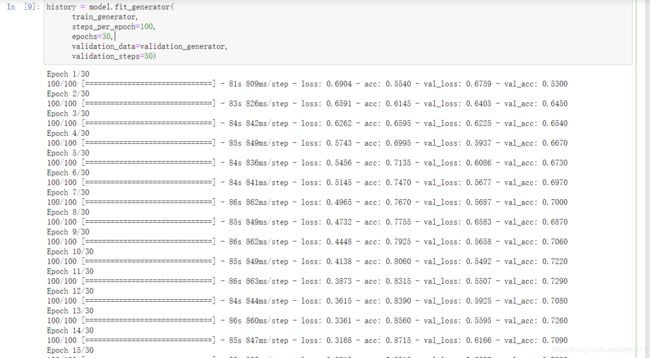

2.2.3、训练

for data_batch, labels_batch in train_generator:

print('data batch shape:', data_batch.shape)

print('labels batch shape:', labels_batch.shape)

break

history = model.fit_generator(

train_generator,

steps_per_epoch=100,

epochs=30,

validation_data=validation_generator,

validation_steps=50)

2.2.4、保存模型

model.save('cats_and_dogs_small_1.h5')

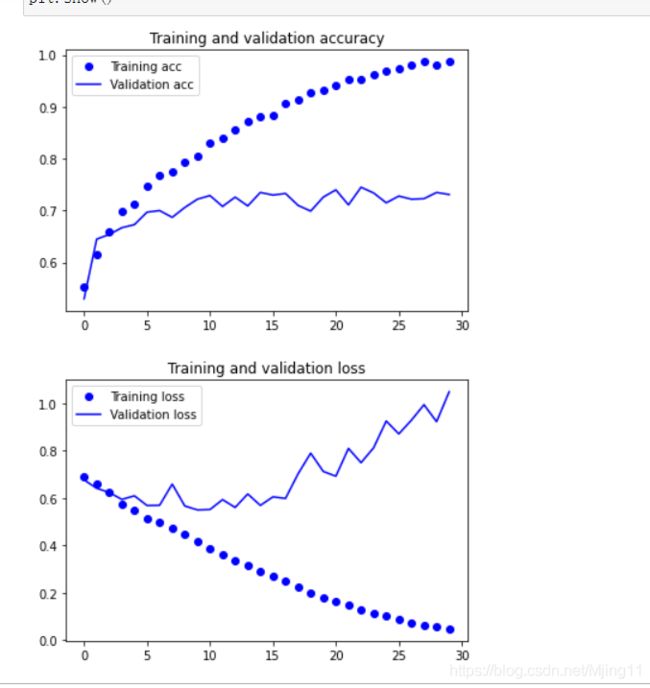

2.2.5、结果可视化

import matplotlib.pyplot as plt

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

2.3、对模型进行调整

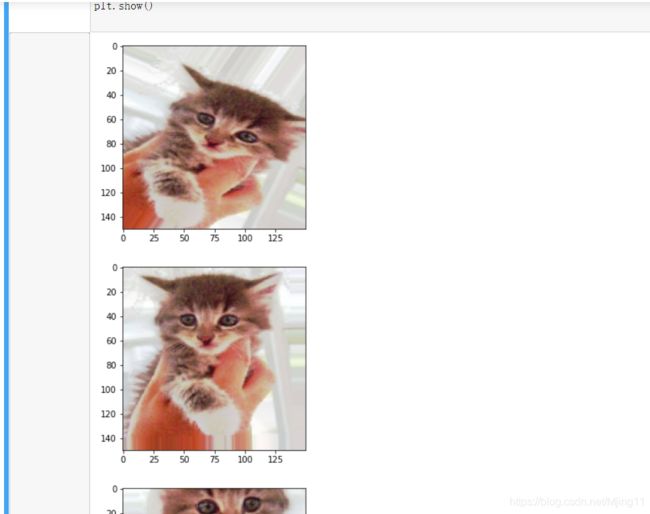

2.3.1、图像增强方法

datagen = ImageDataGenerator(

rotation_range=40,

width_shift_range=0.2,

height_shift_range=0.2,

shear_range=0.2,

zoom_range=0.2,

horizontal_flip=True,

fill_mode='nearest')

# This is module with image preprocessing utilities

from keras.preprocessing import image

fnames = [os.path.join(train_cats_dir, fname) for fname in os.listdir(train_cats_dir)]

# We pick one image to "augment"

img_path = fnames[3]

# Read the image and resize it

img = image.load_img(img_path, target_size=(150, 150))

# Convert it to a Numpy array with shape (150, 150, 3)

x = image.img_to_array(img)

# Reshape it to (1, 150, 150, 3)

x = x.reshape((1,) + x.shape)

# The .flow() command below generates batches of randomly transformed images.

# It will loop indefinitely, so we need to `break` the loop at some point!

i = 0

for batch in datagen.flow(x, batch_size=1):

plt.figure(i)

imgplot = plt.imshow(image.array_to_img(batch[0]))

i += 1

if i % 4 == 0:

break

plt.show()

2.3.2、模型调整

model = models.Sequential()

model.add(layers.Conv2D(32, (3, 3), activation='relu',

input_shape=(150, 150, 3)))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(64, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Flatten())

model.add(layers.Dropout(0.5))

model.add(layers.Dense(512, activation='relu'))

model.add(layers.Dense(1, activation='sigmoid'))

model.compile(loss='binary_crossentropy',

optimizer=optimizers.RMSprop(lr=1e-4),

metrics=['acc'])

train_datagen = ImageDataGenerator(

rescale=1./255,

rotation_range=40,

width_shift_range=0.2,

height_shift_range=0.2,

shear_range=0.2,

zoom_range=0.2,

horizontal_flip=True,)

# Note that the validation data should not be augmented!

test_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(

# This is the target directory

train_dir,

# All images will be resized to 150x150

target_size=(150, 150),

batch_size=32,

# Since we use binary_crossentropy loss, we need binary labels

class_mode='binary')

validation_generator = test_datagen.flow_from_directory(

validation_dir,

target_size=(150, 150),

batch_size=32,

class_mode='binary')

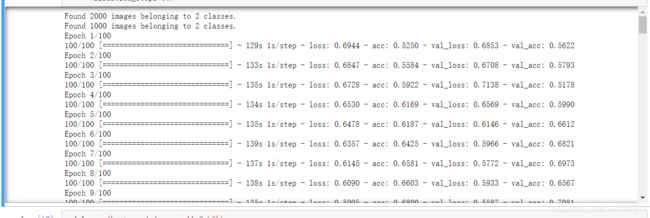

history = model.fit_generator(

train_generator,

steps_per_epoch=100,

epochs=100,

validation_data=validation_generator,

validation_steps=50)

2.3.3、保存模型

model.save('cats_and_dogs_small_2.h5')

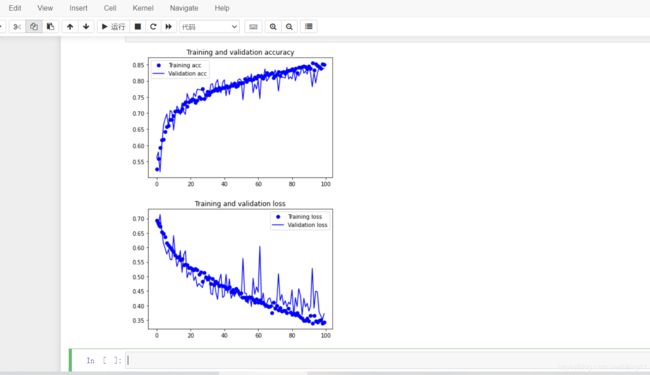

2.3.4、绘制结果

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()