Redis Cluster

I. Redis Overview

1. Three ways to build a Redis cluster

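First: Redis master-slave replication. Its advantage is that it is very easy to set up, and every piece of data is stored on several Redis instances, which keeps the data safe. Its drawback: when the master goes down, the slaves do not take over its work automatically, so manual intervention is required.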

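Second: Redis Sentinel. Sentinel runs as a separate process that monitors the state of the Redis deployment. After Redis is compiled and installed, the source directory contains a sentinel.conf file, which is Sentinel's configuration file. A Sentinel deployment looks like master-slave replication: one server is the master, all writes go to the master and are then replicated to the slaves. Sentinel is smarter, though: when the master fails, Sentinel automatically promotes one of the slaves to be the new master, so no manual intervention is needed and the system runs more reliably.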

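Third: Redis Cluster. Master-slave replication and Sentinel share one problem: all write requests are handled by a single server, the master, so if the request volume or concurrency is too high, that server becomes the bottleneck. Redis 3.0 introduced Redis Cluster, which shards the data by hash slots and distributes it across multiple servers.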

2. Why Redis Cluster

1. Master-slave replication alone does not provide high availability.

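2. As the company grows, the number of users and the concurrency keep increasing and the business needs a higher QPS, which a single master in a master-slave setup may not be able to deliver.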

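3. Data volume: when the servers' memory can no longer hold the business data and adding memory to a single server is not enough, the data has to be distributed across several servers.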

4. Network traffic: when the traffic exceeds the capacity of a server's network card, a distributed deployment can spread the load.

5. Other needs, such as offline computation that requires an intermediate buffering layer.

3. Redis data partitioning

Data is usually distributed with either hash partitioning or range partitioning (this article focuses on hash partitioning).

Hash partitioning: good dispersion, distribution independent of the business data, no sequential access. Used by Redis Cluster, Cassandra, Dynamo.

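Range (sequential) partitioning: the distribution is easily skewed and depends on the business data, but sequential access is possible. Used by BigTable, HBase, Hypertable. Redis Cluster uses hash partitioning.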

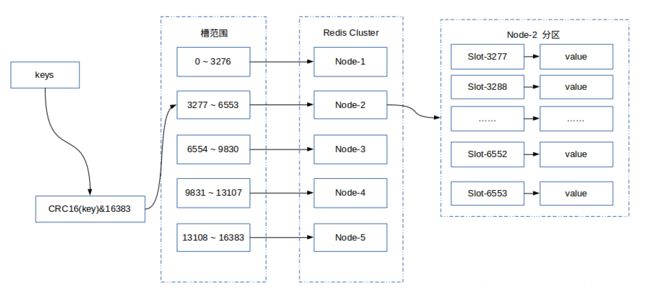

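Redis Cluster uses virtual hash slot partitioning: every key is mapped by a hash function to an integer slot in the range 0 ~ 16383, using slot = CRC16(key) & 16383 (the same as CRC16(key) mod 16384, because 16384 is a power of two). Each node is responsible for a subset of the slots and for the key-value data mapped to them.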
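The slot formula can be reproduced in a few lines. This is a sketch, assuming the CRC16 variant is CRC-16/XMODEM (polynomial 0x1021, initial value 0), which is the variant the Redis Cluster specification uses; it also includes the hash-tag rule (`{...}` in a key), which the text above does not mention. The function names are my own.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC-16/XMODEM: polynomial 0x1021, initial value 0, no bit reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Map a key to one of the 16384 hash slots: CRC16(key) & 16383."""
    raw = key.encode("utf-8")
    # Hash tag: if the key contains "{...}" with a non-empty body, only the
    # bytes between the first "{" and the next "}" are hashed.
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        if end > start + 1:
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) & 16383


if __name__ == "__main__":
    # The key used in the cluster test later in this article.
    print("qvq ->", key_slot("qvq"))
```

Running it prints `qvq -> 9073`, the same slot that shows up in the `MOVED 9073` reply in the cluster test below. Hash tags are what let keys such as `foo{user1}` and `bar{user1}` land in the same slot.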

Characteristics of Redis virtual slot partitioning:

1. It decouples data from nodes, which makes adding and removing nodes easier.

2. The nodes themselves maintain the slot mapping, so no client or proxy has to maintain the partition metadata.

3. It supports lookups between nodes, slots and keys, which are used for data routing and online cluster scaling.

In the figure, node 1 owns slots 0 ~ 3276, node 2 owns 3277 ~ 6553, node 3 owns 6554 ~ 9830, node 4 owns 9831 ~ 13107, and node 5 owns 13108 ~ 16383.
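A slot-to-node lookup of the kind described in point 3 can be as simple as a sorted table of ranges plus a binary search. A minimal sketch using the five ranges from the figure; the names `node1` ... `node5` are placeholders (real nodes are identified by host:port and node ID), and the coverage check is my own addition.

```python
from bisect import bisect_right

# Slot ranges from the figure: (first_slot, last_slot, node).
SLOT_RANGES = [
    (0, 3276, "node1"),
    (3277, 6553, "node2"),
    (6554, 9830, "node3"),
    (9831, 13107, "node4"),
    (13108, 16383, "node5"),
]


def check_coverage(ranges) -> bool:
    """True if the ranges are contiguous and cover slots 0..16383 exactly once."""
    expected_next = 0
    for first, last, _ in sorted(ranges):
        if first != expected_next or last < first:
            return False
        expected_next = last + 1
    return expected_next == 16384


# Start slots in ascending order, so a binary search finds the owning range.
_STARTS = [first for first, _, _ in SLOT_RANGES]


def owner_of(slot: int) -> str:
    if not 0 <= slot <= 16383:
        raise ValueError(f"slot out of range: {slot}")
    return SLOT_RANGES[bisect_right(_STARTS, slot) - 1][2]


if __name__ == "__main__":
    print(check_coverage(SLOT_RANGES), owner_of(9073))
```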

4. How Redis Cluster elects a new master

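When a slave sees that its master has entered the FAIL state, it tries to fail over and become the new master. Because the failed master may have several slaves, more than one slave may compete to become master.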

The process:

1. The slave detects that its master is in the FAIL state.

2. It increments its recorded cluster currentEpoch by 1 and broadcasts a FAILOVER_AUTH_REQUEST message.

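3. All other nodes receive the message, but only masters respond: each master checks whether the requester is legitimate and replies with FAILOVER_AUTH_ACK. A master sends at most one ack per epoch.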

4. The slave attempting the failover collects the FAILOVER_AUTH_ACK replies from the masters.

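5. Once the slave has acks from more than half of the masters, it becomes the new master. (This is why a cluster needs at least three masters: with only two, if one fails, the single remaining master is not a majority, so no election can succeed.)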

6. The new master broadcasts a PONG message to inform the other nodes in the cluster.
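The majority rule in step 5 is what produces the three-master minimum. A sketch, assuming (as the text says) that the quorum is counted over all masters, including the failed one, while only surviving masters can vote; in Redis this count is the number of masters that serve slots. The function names are illustrative.

```python
def needed_quorum(masters_with_slots: int) -> int:
    """Votes a slave needs: a strict majority of the masters that serve slots."""
    return masters_with_slots // 2 + 1


def failover_can_succeed(masters_with_slots: int, failed_masters: int) -> bool:
    """Only surviving masters vote, and each votes at most once per epoch."""
    voters = masters_with_slots - failed_masters
    return voters >= needed_quorum(masters_with_slots)


if __name__ == "__main__":
    for masters in (2, 3, 5):
        print(masters, "masters, 1 failed ->", failover_can_succeed(masters, 1))
```

With two masters and one failure, the single survivor is one vote short of the quorum of 2; with three masters, the two survivors are exactly enough.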

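A slave does not start an election the moment its master enters the FAIL state; it waits for a short delay first. The delay gives the FAIL state time to propagate through the cluster: if the slave started an election immediately, other masters might not yet know about the failure and could refuse to vote.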

The delay is computed as: DELAY = 500ms + random(0 ~ 500ms) + SLAVE_RANK * 1000ms

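SLAVE_RANK is this slave's rank by the amount of data it has replicated from the master. A lower rank means more up-to-date data, so the slave holding the most recent data starts its election first.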
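The delay formula and the rank can be sketched as follows. The slave names and replication offsets are made up, and the rank is computed here simply as the number of sibling slaves with a larger replication offset; the random part is drawn from [0, 500) ms.

```python
import random
from typing import Sequence


def slave_rank(my_offset: int, sibling_offsets: Sequence[int]) -> int:
    """Rank 0 = most up-to-date slave; every sibling that has replicated more
    data (a larger replication offset) pushes this slave down one rank."""
    return sum(1 for offset in sibling_offsets if offset > my_offset)


def election_delay_ms(rank: int, rng: random.Random) -> int:
    """DELAY = 500ms + random(0 ~ 500ms) + SLAVE_RANK * 1000ms."""
    return 500 + rng.randrange(500) + rank * 1000


if __name__ == "__main__":
    # Hypothetical replication offsets of three slaves of the failed master.
    offsets = {"slave-a": 1000, "slave-b": 3000, "slave-c": 2000}
    rng = random.Random(42)
    for name, offset in offsets.items():
        siblings = [o for other, o in offsets.items() if other != name]
        rank = slave_rank(offset, siblings)
        print(f"{name}: rank {rank}, waits {election_delay_ms(rank, rng)} ms")
```

Because each rank adds a full second while the random part is under half a second, a higher-ranked slave can never fire before a lower-ranked one.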

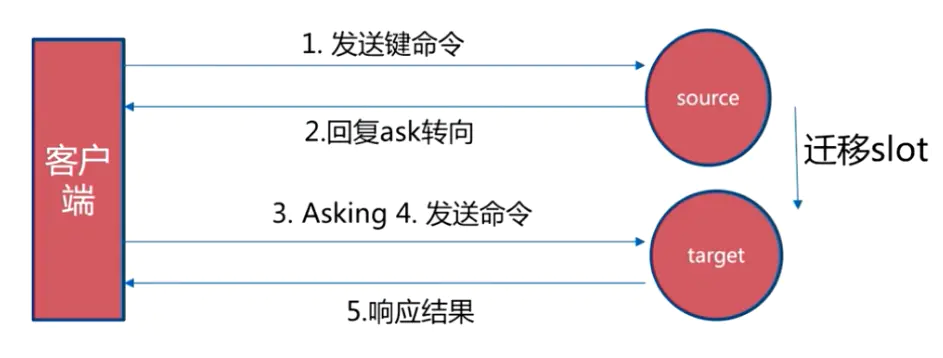

5. Key redirection

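When a client sends a command to the wrong node (for example, one whose slots have been migrated away), that node finds that the key's slot is not served by itself and replies with a redirection carrying the address of the correct node, telling the client to go there. There are two kinds. MOVED means the slot now belongs to the other node: the client retries on the correct node and also updates its locally cached slot table, so all later keys use the corrected table. ASK is sent only while a slot is being migrated: the client retries just that command on the target node (sending ASKING first) and does not update its slot table.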
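A sketch of that client-side rule, assuming the error text has the form `MOVED <slot> <host>:<port>` (as in the cluster test later in this article) or `ASK <slot> <host>:<port>`. The function names and the plain dict used as the slot cache are illustrative, not any client library's API.

```python
from typing import Dict, Optional, Tuple


def parse_redirect(error: str) -> Optional[Tuple[str, int, str, int]]:
    """Parse 'MOVED <slot> <host>:<port>' or 'ASK <slot> <host>:<port>'."""
    parts = error.split()
    if len(parts) != 3 or parts[0] not in ("MOVED", "ASK"):
        return None
    host, _, port = parts[2].rpartition(":")
    return parts[0], int(parts[1]), host, int(port)


def handle_redirect(error: str, slot_cache: Dict[int, str]) -> Optional[str]:
    """Return the address to retry against, updating the cache only for MOVED."""
    parsed = parse_redirect(error)
    if parsed is None:
        return None
    kind, slot, host, port = parsed
    address = f"{host}:{port}"
    if kind == "MOVED":
        # The slot has a new owner: correct the local slot table.
        slot_cache[slot] = address
    # For ASK the client retries once on `address` (after sending ASKING)
    # but keeps its old mapping, because the migration is still in progress.
    return address


if __name__ == "__main__":
    cache = {9073: "192.168.2.5:6379"}
    print(handle_redirect("MOVED 9073 192.168.2.6:6379", cache), cache)
```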

II. Building a Redis Cluster (pseudo-cluster)

| OS | IP | Hostname | Roles | Ports |
|---|---|---|---|---|
| CentOS 7.4 | 192.168.2.5 | MS1 | master, slave | 6379, 6380 |
| CentOS 7.4 | 192.168.2.6 | MS2 | master, slave | 6379, 6380 |
| CentOS 7.4 | 192.168.2.7 | MS3 | master, slave | 6379, 6380 |

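(1) Environment setup: follow the earlier article "Redis database persistence and master-slave replication" (install two instances per machine).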

Note: do not configure master-slave replication on these instances; otherwise they fail to start in cluster mode.

On all three machines, map the IP addresses to host names:

echo '
192.168.2.5 MS1
192.168.2.6 MS2
192.168.2.7 MS3' >> /etc/hosts

1. Set up the configuration files on MS1, MS2 and MS3 (MS1 is shown as an example)

[root@MS1 ~]# mkdir -p /usr/local/redis/data/{6379,6380} # create the working directories

[root@MS1 ~]# ls /usr/local/redis/data/

6379 6380

[root@MS1 ~]# mkdir -p /usr/local/redis/logs # create the log directory

[root@MS1 ~]# vim /usr/local/redis/redis.conf

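...

logfile "/usr/local/redis/logs/6379.log" # change the log file path

...

dir "/usr/local/redis/data/6379" # change the data directory

...

appendonly yes # enable the AOF log; every write operation is recorded

...

cluster-enabled yes # enable cluster mode (remove the leading #)

...

cluster-config-file nodes-6379.conf # cluster state file, generated automatically on first start

...

cluster-node-timeout 15000 # node timeout: 15 s

...

The # notes above are explanations only; redis.conf expects comments on lines of their own, so do not copy them after the values.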

——————————————————————————————————————————————————————

[root@MS1 ~]# vim /usr/local/redis/redis2.conf

...

logfile "/usr/local/redis/logs/6380.log"

...

dir "/usr/local/redis/data/6380"

...

appendonly yes

...

cluster-enabled yes

...

cluster-config-file nodes-6380.conf

...

cluster-node-timeout 15000

...
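Since the two files differ only in the port-specific values, they can be generated from one template. A sketch that renders just the directives shown above; the `port` line is my assumption (the excerpt does not show it, but two instances on one host need different ports), and the output is not a complete redis.conf. It also shows where the 16379/16380 listeners in the netstat output below come from: by default the cluster bus port is the client port + 10000.

```python
BASE_DIR = "/usr/local/redis"


def cluster_conf(port: int) -> str:
    """Render the per-instance cluster directives used in this article."""
    lines = [
        f"port {port}",  # assumption: not shown in the article's excerpt
        f'logfile "{BASE_DIR}/logs/{port}.log"',
        f'dir "{BASE_DIR}/data/{port}"',
        "appendonly yes",
        "cluster-enabled yes",
        f"cluster-config-file nodes-{port}.conf",
        "cluster-node-timeout 15000",
    ]
    return "\n".join(lines) + "\n"


def cluster_bus_port(port: int) -> int:
    """Default cluster bus port: client port + 10000."""
    return port + 10000


if __name__ == "__main__":
    for port in (6379, 6380):
        print(cluster_conf(port))
        print("cluster bus port:", cluster_bus_port(port))
```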

[root@MS1 ~]# systemctl restart redis2

[root@MS1 ~]# systemctl restart redis

[root@MS1 ~]# netstat -auptn |grep redis

tcp 0 0 192.168.2.5:6379 0.0.0.0:* LISTEN 63850/redis-server

tcp 0 0 192.168.2.5:6380 0.0.0.0:* LISTEN 70838/redis-server

tcp 0 0 192.168.2.5:16379 0.0.0.0:* LISTEN 63850/redis-server

tcp 0 0 192.168.2.5:16380 0.0.0.0:* LISTEN 70838/redis-server

2. Check the processes (they are tagged [cluster])

[root@MS1 ~]# ps -ef | grep redis

root 63850 1 0 23:37 ? 00:00:00 /usr/local/redis/redis-server 192.168.2.5:6379 [cluster]

root 70838 1 0 23:41 ? 00:00:00 /usr/local/redis/redis-server 192.168.2.5:6380 [cluster]

(2) Create the cluster

1. Create the cluster

[root@MS1 ~]# cd redis-6.2.6/src/

[root@MS1 src]# ./redis-cli --cluster create --cluster-replicas 1 192.168.2.5:6379 192.168.2.6:6379 192.168.2.7:6379 192.168.2.5:6380 192.168.2.6:6380 192.168.2.7:6380

[ERR] Node 192.168.2.7:6379 NOAUTH Authentication required.

The command fails with [ERR] Node 192.168.2.7:6379 NOAUTH Authentication required., because a password is set on the nodes.

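2. Fix: this experiment does not use a password, so comment out the password line(s) containing 123.com in the configuration files and restart Redis (here all machines were simply rebooted with init 6). Either of the following works:

Option 1:

sed -i 's/^[^#].*123.com/#&/' /usr/local/redis/redis.conf # prefix '#' to every line that does not start with '#' and contains '123.com'

Option 2:

sed -i -e '901 s/^/#/' /usr/local/redis/redis.conf # prefix '#' to line 901

Note: do this on every node and for every instance's configuration file (redis.conf and redis2.conf). If you do keep a password, add -a <password> to every redis-cli command.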
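If you prefer to script the edit for both files on each host, here is a Python equivalent of Option 1. It is a sketch: the script name in the usage comment is hypothetical, and like the sed command it comments out any matching line (a masterauth line containing 123.com would be commented too).

```python
import re
import sys

# Python equivalent of: sed -i 's/^[^#].*123.com/#&/' <file>
# Prefix "#" to every line that does not start with "#" and contains "123.com".
# As in the sed version, the "." in 123.com matches any character.
PASSWORD_LINE = re.compile(r"^[^#].*123.com")


def comment_out_password(config_text: str) -> str:
    lines = config_text.splitlines(keepends=True)
    return "".join("#" + line if PASSWORD_LINE.match(line) else line for line in lines)


if __name__ == "__main__":
    # Usage: python3 comment_pw.py /usr/local/redis/redis.conf /usr/local/redis/redis2.conf
    for path in sys.argv[1:]:
        with open(path, encoding="utf-8") as f:
            text = f.read()
        with open(path, "w", encoding="utf-8") as f:
            f.write(comment_out_password(text))
```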

3. Create the cluster again

[root@MS1 ~]# cd redis-6.2.6/src/

[root@MS1 src]# ./redis-cli --cluster create --cluster-replicas 1 192.168.2.5:6379 192.168.2.6:6379 192.168.2.7:6379 192.168.2.5:6380 192.168.2.6:6380 192.168.2.7:6380

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 192.168.2.6:6380 to 192.168.2.5:6379

Adding replica 192.168.2.7:6380 to 192.168.2.6:6379

Adding replica 192.168.2.5:6380 to 192.168.2.7:6379

M: beb1eccdbb03af22e32b5d8bf0b42bf221ff453b 192.168.2.5:6379

slots:[0-5460] (5461 slots) master

M: f6ac2c7709166ff1b02bb67954765efa151ab957 192.168.2.6:6379

slots:[5461-10922] (5462 slots) master

M: 9f682a198dd1cc11ae4a0d7aef86d4e4f7b7371b 192.168.2.7:6379

slots:[10923-16383] (5461 slots) master

S: b035a7bd973bd671092b4e30154e662e54167d95 192.168.2.5:6380

replicates 9f682a198dd1cc11ae4a0d7aef86d4e4f7b7371b

S: 6e88437b8e7f8282d3ea42c57b1d310cae481302 192.168.2.6:6380

replicates beb1eccdbb03af22e32b5d8bf0b42bf221ff453b

S: dfcf3e8b52d711e774b456307773c889eec73e5c 192.168.2.7:6380

replicates f6ac2c7709166ff1b02bb67954765efa151ab957

Can I set the above configuration? (type 'yes' to accept): yes # type yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

.

>>> Performing Cluster Check (using node 192.168.2.5:6379)

M: beb1eccdbb03af22e32b5d8bf0b42bf221ff453b 192.168.2.5:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 6e88437b8e7f8282d3ea42c57b1d310cae481302 192.168.2.6:6380

slots: (0 slots) slave

replicates beb1eccdbb03af22e32b5d8bf0b42bf221ff453b

M: 9f682a198dd1cc11ae4a0d7aef86d4e4f7b7371b 192.168.2.7:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: f6ac2c7709166ff1b02bb67954765efa151ab957 192.168.2.6:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: b035a7bd973bd671092b4e30154e662e54167d95 192.168.2.5:6380

slots: (0 slots) slave

replicates 9f682a198dd1cc11ae4a0d7aef86d4e4f7b7371b

S: dfcf3e8b52d711e774b456307773c889eec73e5c 192.168.2.7:6380

slots: (0 slots) slave

replicates f6ac2c7709166ff1b02bb67954765efa151ab957

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered. # success

--cluster-replicas sets how many slaves each master gets; with 1, every master has exactly one slave.
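The numbers in the creation output can be checked with a little arithmetic: six nodes with one replica each give three masters, and 16384 slots split three ways give the ranges printed above. A sketch; the even-split rule below is my reconstruction, checked only against the output in this article, and the three-master minimum mirrors the election rule in Part I.

```python
from typing import List, Tuple

TOTAL_SLOTS = 16384


def master_count(node_count: int, replicas: int) -> int:
    """With --cluster-replicas R, each master needs R slaves: N // (R + 1) masters."""
    masters = node_count // (replicas + 1)
    if masters < 3:
        raise ValueError("Redis Cluster needs at least 3 masters")
    return masters


def split_slots(masters: int) -> List[Tuple[int, int]]:
    """Split slots 0..16383 into contiguous, nearly equal ranges."""
    per_node = TOTAL_SLOTS / masters
    ranges, first, cursor = [], 0, 0.0
    for i in range(masters):
        last = round(cursor + per_node - 1)
        if i == masters - 1:
            last = TOTAL_SLOTS - 1  # the last master takes whatever is left
        ranges.append((first, last))
        first = last + 1
        cursor += per_node
    return ranges


if __name__ == "__main__":
    print(split_slots(master_count(6, 1)))
```

For six nodes this prints `[(0, 5460), (5461, 10922), (10923, 16383)]`, matching Master[0] to Master[2] above.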

4. Check the cluster state

[root@MS1 src]# ./redis-cli --cluster check 192.168.2.5:6379

192.168.2.5:6379 (beb1eccd...) -> 0 keys | 5461 slots | 1 slaves.

192.168.2.7:6379 (9f682a19...) -> 0 keys | 5461 slots | 1 slaves.

192.168.2.6:6379 (f6ac2c77...) -> 0 keys | 5462 slots | 1 slaves.

[OK] 0 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 192.168.2.5:6379)

M: beb1eccdbb03af22e32b5d8bf0b42bf221ff453b 192.168.2.5:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 6e88437b8e7f8282d3ea42c57b1d310cae481302 192.168.2.6:6380

slots: (0 slots) slave

replicates beb1eccdbb03af22e32b5d8bf0b42bf221ff453b

M: 9f682a198dd1cc11ae4a0d7aef86d4e4f7b7371b 192.168.2.7:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: f6ac2c7709166ff1b02bb67954765efa151ab957 192.168.2.6:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: b035a7bd973bd671092b4e30154e662e54167d95 192.168.2.5:6380

slots: (0 slots) slave

replicates 9f682a198dd1cc11ae4a0d7aef86d4e4f7b7371b

S: dfcf3e8b52d711e774b456307773c889eec73e5c 192.168.2.7:6380

slots: (0 slots) slave

replicates f6ac2c7709166ff1b02bb67954765efa151ab957

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

5. Test the cluster

[root@MS1 src]# ./redis-cli -h 192.168.2.5 -p 6379 # without -c

192.168.2.5:6379> set qvq aaa

(error) MOVED 9073 192.168.2.6:6379 # error: the key belongs to another node

192.168.2.5:6379> quit

[root@MS1 src]# ./redis-cli -h 192.168.2.5 -p 6379 -c # with -c (cluster mode)

192.168.2.5:6379> set qvq aaa # succeeds

-> Redirected to slot [9073] located at 192.168.2.6:6379

OK

Read the key from another node:

[root@MS3 ~]# redis -h 192.168.2.7 -p 6379 -c

192.168.2.7:6379> get qvq

-> Redirected to slot [9073] located at 192.168.2.6:6379

"aaa"

192.168.2.6:6379>

The client is told that the data lives on 192.168.2.6:6379 and jumps to that host automatically.

(3) Scale out the Redis Cluster

First set up a new Redis instance; here I simply copied an existing instance on 192.168.2.7 to create one on port 6381.

[root@MS3 logs]# netstat -auptn |grep 81

tcp 0 0 192.168.2.7:6381 0.0.0.0:* LISTEN 87180/redis-server

tcp 0 0 192.168.2.7:16381 0.0.0.0:* LISTEN 87180/redis-server

1. Warnings when checking the cluster state

[root@MS1 src]# ./redis-cli --cluster check 192.168.2.5:6379

192.168.2.5:6379 (beb1eccd...) -> 0 keys | 5461 slots | 1 slaves.

192.168.2.7:6379 (9f682a19...) -> 1 keys | 5461 slots | 1 slaves.

192.168.2.6:6379 (f6ac2c77...) -> 1 keys | 5462 slots | 1 slaves.

[OK] 2 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 192.168.2.5:6379)

M: beb1eccdbb03af22e32b5d8bf0b42bf221ff453b 192.168.2.5:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 9f682a198dd1cc11ae4a0d7aef86d4e4f7b7371b 192.168.2.7:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: f6ac2c7709166ff1b02bb67954765efa151ab957 192.168.2.6:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 6e88437b8e7f8282d3ea42c57b1d310cae481302 192.168.2.6:6380

slots: (0 slots) slave

replicates beb1eccdbb03af22e32b5d8bf0b42bf221ff453b

S: dfcf3e8b52d711e774b456307773c889eec73e5c 192.168.2.7:6380

slots: (0 slots) slave

replicates f6ac2c7709166ff1b02bb67954765efa151ab957

S: b035a7bd973bd671092b4e30154e662e54167d95 192.168.2.5:6380

slots: (0 slots) slave

replicates 9f682a198dd1cc11ae4a0d7aef86d4e4f7b7371b

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

[WARNING] Node 192.168.2.7:6379 has slots in importing state 9073.

[WARNING] The following slots are open: 9073.

>>> Check slots coverage...

[OK] All 16384 slots covered.

The warnings:

[WARNING] Node 192.168.2.7:6379 has slots in importing state 9073.

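[WARNING] The following slots are open: 9073.

Cause:

Slot 9073 (a slot number, not a node) was left in the importing state on 192.168.2.7:6379, probably because the slot holds a lot of data and its migration was slow or did not finish.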

Fix:

Log in to 192.168.2.7:6379 and cancel the slot migration with a Redis command (9073 is the slot number):

[root@MS3 ~]# redis -h 192.168.2.7 -p 6379 -c

192.168.2.7:6379> cluster setslot 9073 stable

OK
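To see which slots are still open on a node, you can read its CLUSTER NODES output, where an importing slot appears as `[<slot>-<-<node-id>]` and a migrating slot as `[<slot>->-<node-id>]`. A small parser sketch; the sample line in the test is illustrative (its epoch and peer node ID are made up).

```python
import re
from typing import List, Optional, Tuple

# Open-slot fields in CLUSTER NODES output:
#   [<slot>->-<node-id>]  this node is MIGRATING the slot to <node-id>
#   [<slot>-<-<node-id>]  this node is IMPORTING the slot from <node-id>
OPEN_SLOT = re.compile(r"^\[(\d+)-([<>])-([0-9a-f]+)\]$")


def parse_open_slot(field: str) -> Optional[Tuple[str, int, str]]:
    """Return (state, slot, peer node ID) for an open-slot field, else None."""
    match = OPEN_SLOT.match(field)
    if match is None:
        return None  # an ordinary slot or range such as "10923-16383"
    slot, arrow, peer = match.groups()
    state = "importing" if arrow == "<" else "migrating"
    return state, int(slot), peer


def open_slots(nodes_line: str) -> List[Tuple[str, int, str]]:
    """Scan one CLUSTER NODES line; slot fields start at the 9th column."""
    fields = nodes_line.split()
    return [p for p in (parse_open_slot(f) for f in fields[8:]) if p]
```

An empty result for every node means there is nothing left for CLUSTER SETSLOT ... STABLE to clean up.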

2. Add 192.168.2.7:6381 to the cluster

[root@MS1 src]# ./redis-cli --cluster add-node 192.168.2.7:6381 192.168.2.5:6379

>>> Adding node 192.168.2.7:6381 to cluster 192.168.2.5:6379

>>> Performing Cluster Check (using node 192.168.2.5:6379)

M: beb1eccdbb03af22e32b5d8bf0b42bf221ff453b 192.168.2.5:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 9f682a198dd1cc11ae4a0d7aef86d4e4f7b7371b 192.168.2.7:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: f6ac2c7709166ff1b02bb67954765efa151ab957 192.168.2.6:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 6e88437b8e7f8282d3ea42c57b1d310cae481302 192.168.2.6:6380

slots: (0 slots) slave

replicates beb1eccdbb03af22e32b5d8bf0b42bf221ff453b

S: dfcf3e8b52d711e774b456307773c889eec73e5c 192.168.2.7:6380

slots: (0 slots) slave

replicates f6ac2c7709166ff1b02bb67954765efa151ab957

S: b035a7bd973bd671092b4e30154e662e54167d95 192.168.2.5:6380

slots: (0 slots) slave

replicates 9f682a198dd1cc11ae4a0d7aef86d4e4f7b7371b

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 192.168.2.7:6381 to make it join the cluster.

[OK] New node added correctly.

Check the cluster state:

[root@MS1 src]# ./redis-cli --cluster check 192.168.2.5:6379

192.168.2.5:6379 (beb1eccd...) -> 0 keys | 5461 slots | 1 slaves.

192.168.2.7:6379 (9f682a19...) -> 1 keys | 5461 slots | 1 slaves.

192.168.2.6:6379 (f6ac2c77...) -> 1 keys | 5462 slots | 1 slaves.

192.168.2.7:6381 (1180cee4...) -> 0 keys | 0 slots | 0 slaves. # added successfully, but it owns no slots yet

[OK] 2 keys in 4 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 192.168.2.5:6379)

...

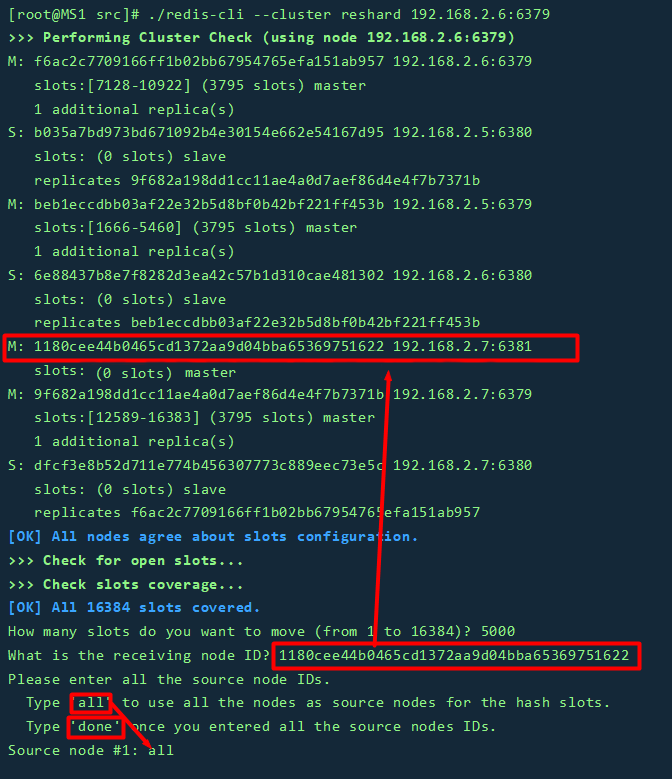

3. Assign data slots to the new master 2.7:6381 by resharding. The reshard can be run against any master in the cluster, because only masters own slots.

[root@MS1 src]# ./redis-cli --cluster reshard 192.168.2.6:6379

>>> Performing Cluster Check (using node 192.168.2.6:6379)

M: f6ac2c7709166ff1b02bb67954765efa151ab957 192.168.2.6:6379

slots:[7128-10922] (3795 slots) master

1 additional replica(s)

S: b035a7bd973bd671092b4e30154e662e54167d95 192.168.2.5:6380

slots: (0 slots) slave

replicates 9f682a198dd1cc11ae4a0d7aef86d4e4f7b7371b

M: beb1eccdbb03af22e32b5d8bf0b42bf221ff453b 192.168.2.5:6379

slots:[1666-5460] (3795 slots) master

1 additional replica(s)

S: 6e88437b8e7f8282d3ea42c57b1d310cae481302 192.168.2.6:6380

slots: (0 slots) slave

replicates beb1eccdbb03af22e32b5d8bf0b42bf221ff453b

M: 1180cee44b0465cd1372aa9d04bba65369751622 192.168.2.7:6381

slots:(0 slots) master

M: 9f682a198dd1cc11ae4a0d7aef86d4e4f7b7371b 192.168.2.7:6379

slots:[12589-16383] (3795 slots) master

1 additional replica(s)

S: dfcf3e8b52d711e774b456307773c889eec73e5c 192.168.2.7:6380

slots: (0 slots) slave

replicates f6ac2c7709166ff1b02bb67954765efa151ab957

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 5000 # number of slots to give to 2.7:6381

What is the receiving node ID? 1180cee44b0465cd1372aa9d04bba65369751622 # node ID of 2.7:6381

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: all # type all

...

Check the cluster state:

[root@MS1 src]# ./redis-cli --cluster check 192.168.2.5:6379

192.168.2.5:6379 (beb1eccd...) -> 0 keys | 3795 slots | 1 slaves.

192.168.2.7:6379 (9f682a19...) -> 1 keys | 3795 slots | 1 slaves.

192.168.2.6:6379 (f6ac2c77...) -> 1 keys | 3795 slots | 1 slaves.

192.168.2.7:6381 (1180cee4...) -> 0 keys | 4999 slots | 0 slaves.

[OK] 2 keys in 4 masters.
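Why did 2.7:6381 receive 4999 slots instead of the 5000 requested? With `all`, each source gives up a share proportional to its slot count. A sketch that reproduces the numbers above; the rounding rule (largest source rounded up, the others rounded down) is my assumption about redis-cli's behaviour, chosen because it matches this output, not a copy of its code.

```python
import math
from typing import Dict


def reshard_plan(source_slots: Dict[str, int], to_move: int) -> Dict[str, int]:
    """Slots each source gives up when all masters are used as sources.

    Sources are visited from largest to smallest; each gives a share
    proportional to its slot count, rounded up for the first source and
    down for the rest, so the total can fall slightly short of to_move.
    """
    total = sum(source_slots.values())
    ordered = sorted(source_slots.items(), key=lambda item: item[1], reverse=True)
    plan = {}
    for index, (node, slots) in enumerate(ordered):
        share = to_move / total * slots
        plan[node] = math.ceil(share) if index == 0 else math.floor(share)
    return plan


if __name__ == "__main__":
    before = {"192.168.2.5:6379": 5461, "192.168.2.6:6379": 5462, "192.168.2.7:6379": 5461}
    plan = reshard_plan(before, 5000)
    print(plan, "total moved:", sum(plan.values()))
```

The shares come out as 1667 + 1666 + 1666 = 4999, and each source keeps 3795 slots, as the check shows.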

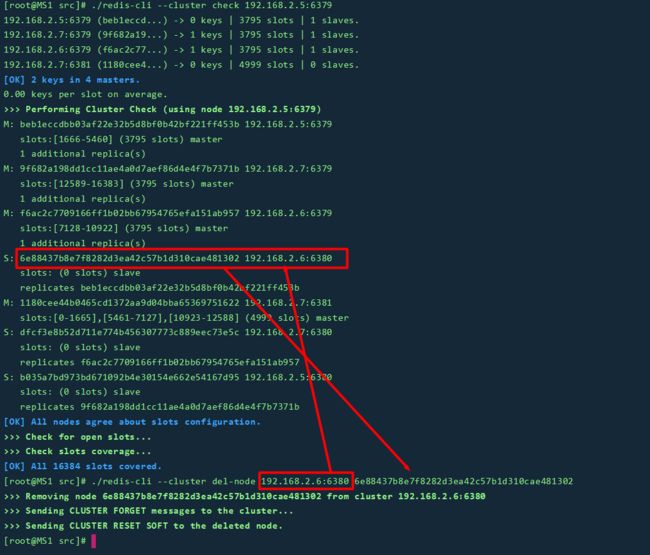

4. Scale in

Removal order: delete the slave nodes first, then the master nodes.

[root@MS1 src]# ./redis-cli --cluster del-node 192.168.2.6:6380 6e88437b8e7f8282d3ea42c57b1d310cae481302

>>> Removing node 6e88437b8e7f8282d3ea42c57b1d310cae481302 from cluster 192.168.2.6:6380

>>> Sending CLUSTER FORGET messages to the cluster...

>>> Sending CLUSTER RESET SOFT to the deleted node.

Syntax: ./redis-cli --cluster del-node <slave IP>:<port> <slave node ID>

5. Remove the master 2.6:6379 online

To remove a master without losing data, its slots must first be migrated to other masters; only then can the master be deleted.

5.1 Reshard: move the slots of the master being removed to another master

[root@MS1 src]# ./redis-cli --cluster reshard 192.168.2.6:6379 --cluster-from f6ac2c7709166ff1b02bb67954765efa151ab957 --cluster-to beb1eccdbb03af22e32b5d8bf0b42bf221ff453b --cluster-slots 3795 --cluster-yes

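...

| Option | Meaning |
|---|---|
| --cluster-from | ID of the node the slots are moved away from |
| --cluster-to | ID of the node the slots are moved to |
| --cluster-slots 3795 | number of hash slots to move |
| --cluster-yes | accept the proposed plan without prompting |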

5.2 Delete the master 192.168.2.6:6379

Arguments: IP address:port, then the corresponding node ID

[root@MS1 src]# ./redis-cli --cluster del-node 192.168.2.6:6379 f6ac2c7709166ff1b02bb67954765efa151ab957

>>> Removing node f6ac2c7709166ff1b02bb67954765efa151ab957 from cluster 192.168.2.6:6379

>>> Sending CLUSTER FORGET messages to the cluster...

>>> Sending CLUSTER RESET SOFT to the deleted node.

5.3 Fix the uneven slot distribution

[root@MS1 src]# ./redis-cli --cluster rebalance --cluster-use-empty-masters 192.168.2.5:6379

>>> Performing Cluster Check (using node 192.168.2.5:6379)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Rebalancing across 3 nodes. Total weight = 3.00

Moving 1667 slots from 192.168.2.5:6379 to 192.168.2.7:6379

...

[root@MS1 src]# ./redis-cli --cluster check 192.168.2.5:6379

192.168.2.5:6379 (beb1eccd...) -> 1 keys | 5461 slots | 1 slaves.

192.168.2.7:6379 (9f682a19...) -> 1 keys | 5462 slots | 1 slaves.

192.168.2.7:6381 (1180cee4...) -> 0 keys | 5461 slots | 0 slaves.

[OK] 2 keys in 3 masters.

0.00 keys per slot on average.
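The rebalance result can be reproduced with a simple greedy plan. This is a sketch under stated assumptions: equal weights, slot counts that add up to 16384, the rounding remainder handed out in input order, and no minimum-imbalance threshold (redis-cli also has one, omitted here). The starting counts are derived from the steps above: 2.5:6379 held 3795 slots plus the 3795 it received from 2.6:6379.

```python
from typing import Dict, List, Tuple

TOTAL_SLOTS = 16384


def rebalance_moves(slots: Dict[str, int]) -> List[Tuple[str, str, int]]:
    """Plan (source, target, count) moves so equally weighted masters end up
    with about 16384 / N slots each. Assumes the counts add up to 16384."""
    target = TOTAL_SLOTS // len(slots)
    # Positive balance: slots to give away; negative: slots to receive.
    balance = {node: count - target for node, count in slots.items()}
    # Rounding the target down leaves a few slots over; hand them out one at
    # a time, in input order, to nodes that are not over-full.
    leftover = sum(balance.values())
    while leftover > 0:
        for node in slots:
            if leftover > 0 and balance[node] <= 0:
                balance[node] -= 1
                leftover -= 1
    # Pair the neediest receiver with the biggest giver until both settle.
    order = sorted(balance, key=balance.get)
    low, high = 0, len(order) - 1
    moves = []
    while low < high:
        dst, src = order[low], order[high]
        count = min(-balance[dst], balance[src])
        if count > 0:
            moves.append((src, dst, count))
            balance[dst] += count
            balance[src] -= count
        if balance[dst] == 0:
            low += 1
        if balance[src] == 0:
            high -= 1
    return moves


if __name__ == "__main__":
    before = {"192.168.2.5:6379": 7590, "192.168.2.7:6379": 3795, "192.168.2.7:6381": 4999}
    after = dict(before)
    for src, dst, count in rebalance_moves(before):
        print(f"Moving {count} slots from {src} to {dst}")
        after[src] -= count
        after[dst] += count
    print(after)
```

The first planned move is the "Moving 1667 slots from 192.168.2.5:6379 to 192.168.2.7:6379" line in the output above, and the final counts (5461, 5462, 5461) match the check.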

...

6. Add a slave to 192.168.2.7:6381

Add 192.168.2.6:6379 back to the cluster:

[root@MS1 src]# ./redis-cli --cluster add-node 192.168.2.6:6379 192.168.2.5:6379

>>> Adding node 192.168.2.6:6379 to cluster 192.168.2.5:6379

>>> Performing Cluster Check (using node 192.168.2.5:6379)

...

[root@MS1 src]# ./redis-cli --cluster check 192.168.2.5:6379

192.168.2.5:6379 (beb1eccd...) -> 1 keys | 5461 slots | 1 slaves.

192.168.2.6:6379 (f6ac2c77...) -> 0 keys | 0 slots | 0 slaves.

192.168.2.7:6379 (9f682a19...) -> 1 keys | 5462 slots | 1 slaves.

192.168.2.7:6381 (1180cee4...) -> 0 keys | 5461 slots | 0 slaves.

[OK] 2 keys in 4 masters.

...

6.1 Make 2.6:6379 a slave of 2.7:6381

Run the following on 2.6:6379:

[root@MS2 ~]# redis -h 192.168.2.6 -p 6379 -c

192.168.2.6:6379> cluster replicate 1180cee44b0465cd1372aa9d04bba65369751622 # node ID of 2.7:6381

OK

Check the cluster state:

[root@MS1 src]# ./redis-cli --cluster check 192.168.2.5:6379

192.168.2.5:6379 (beb1eccd...) -> 1 keys | 5461 slots | 1 slaves.

192.168.2.7:6379 (9f682a19...) -> 1 keys | 5462 slots | 1 slaves.

192.168.2.7:6381 (1180cee4...) -> 0 keys | 5461 slots | 1 slaves.

[OK] 2 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 192.168.2.5:6379)

M: beb1eccdbb03af22e32b5d8bf0b42bf221ff453b 192.168.2.5:6379

slots:[3795-5460],[7128-10922] (5461 slots) master

1 additional replica(s)

S: f6ac2c7709166ff1b02bb67954765efa151ab957 192.168.2.6:6379

slots: (0 slots) slave

replicates 1180cee44b0465cd1372aa9d04bba65369751622 # ID of master 2.7:6381

M: 9f682a198dd1cc11ae4a0d7aef86d4e4f7b7371b 192.168.2.7:6379

slots:[1666-3332],[12589-16383] (5462 slots) master

1 additional replica(s)

M: 1180cee44b0465cd1372aa9d04bba65369751622 192.168.2.7:6381 # master 2.7:6381

slots:[0-1665],[3333-3794],[5461-7127],[10923-12588] (5461 slots) master

1 additional replica(s)