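Flink Series 03: Learning FlinkCEP from the Source Code: PatternStream and Executing Pattern Matching (with Code Examples)

Recap: the previous two installments looked at how to define individual patterns and pattern groups, including their configuration and how they are chained. This installment looks at how matching is actually executed.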

Reposts must credit the original author: xiaozoom

Original blog: xiaozoom's CSDN blog


How pattern matching is executed

The official documentation explains it like this:

    DataStream<Event> input = ...
    Pattern<Event, ?> pattern = ...
    EventComparator<Event> comparator = ... // optional

    PatternStream<Event> patternStream = CEP.pattern(input, pattern, comparator);

This requires:

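- an event source, input

- a fully defined pattern (either an individual pattern or a pattern group)

- an optional EventComparator, used to order events that carry the same timestamp

- the entry point that ties them together: CEP.pattern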
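
To make the comparator's role concrete, here is a minimal plain-Java sketch. It does not use the Flink API: Event and TieBreakDemo are hypothetical names introduced only for this illustration. It assumes events are ordered by timestamp first and that the extra comparator only decides between events whose timestamps are equal, which is the job the list above assigns to EventComparator.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Plain-Java illustration only; Event and TieBreakDemo are hypothetical, not Flink classes. */
final class TieBreakDemo {

    /** Hypothetical event: a timestamp plus a name that is used to break ties. */
    static final class Event {
        final long timestamp;
        final String name;

        Event(long timestamp, String name) {
            this.timestamp = timestamp;
            this.name = name;
        }
    }

    /** Sorts by timestamp first; the tie-breaker only decides between equal timestamps. */
    static List<String> order(List<Event> events, Comparator<Event> tieBreaker) {
        List<Event> sorted = new ArrayList<>(events);
        sorted.sort(Comparator.<Event>comparingLong(e -> e.timestamp).thenComparing(tieBreaker));
        List<String> names = new ArrayList<>();
        for (Event e : sorted) {
            names.add(e.name);
        }
        return names;
    }

    public static void main(String[] args) {
        List<Event> events = new ArrayList<>();
        events.add(new Event(2L, "c"));
        events.add(new Event(1L, "b"));
        events.add(new Event(1L, "a"));
        // "b" and "a" share timestamp 1, so the name comparator decides their order.
        System.out.println(order(events, Comparator.comparing((Event e) -> e.name)));
    }
}
```

Without a tie-breaker, the relative order of "a" and "b" would depend on arrival order, which matters for patterns that are sensitive to contiguity.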

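The input stream can be either keyed or non-keyed. Because matching has to verify contiguity relationships between events, a pattern applied to a non-keyed stream is naturally forced to run with parallelism 1, which can turn it into a performance bottleneck.

Therefore, whenever possible, partition the stream by key before applying CEP.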
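
The effect of keying can be sketched in plain Java without Flink. KeyedSplitDemo and its Event class are hypothetical names used only here; in a real job the split would normally be done with keyBy on the DataStream before calling CEP.pattern. The sketch assumes a toy "a immediately followed by b" check and shows that each key gets its own independent sequence, which is what lets the work be spread across parallel instances.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Plain-Java illustration only; not Flink code. */
final class KeyedSplitDemo {

    /** Hypothetical event carrying a partition key and an event type. */
    static final class Event {
        final String key;
        final String type;

        Event(String key, String type) {
            this.key = key;
            this.type = type;
        }
    }

    /** Groups event types by key, preserving arrival order inside each key. */
    static Map<String, List<String>> splitByKey(List<Event> events) {
        Map<String, List<String>> perKey = new LinkedHashMap<>();
        for (Event e : events) {
            perKey.computeIfAbsent(e.key, k -> new ArrayList<>()).add(e.type);
        }
        return perKey;
    }

    /** Counts positions where "a" is immediately followed by "b". */
    static int countAThenB(List<String> types) {
        int count = 0;
        for (int i = 0; i + 1 < types.size(); i++) {
            if (types.get(i).equals("a") && types.get(i + 1).equals("b")) {
                count++;
            }
        }
        return count;
    }

    public static void main(String[] args) {
        List<Event> events = new ArrayList<>();
        events.add(new Event("user1", "a"));
        events.add(new Event("user2", "a"));
        events.add(new Event("user1", "b"));
        events.add(new Event("user2", "b"));
        // Each key is checked on its own, independent sequence.
        for (Map.Entry<String, List<String>> entry : splitByKey(events).entrySet()) {
            System.out.println(entry.getKey() + " -> " + countAThenB(entry.getValue()));
        }
    }
}
```

Note that on the interleaved, unkeyed sequence a, a, b, b the same check pairs user2's "a" with user1's "b", so keying affects which events are considered neighbours as well as how the work is distributed.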

The main classes covered in this installment are:

- org.apache.flink.cep.CEP

- org.apache.flink.cep.PatternStream

    public class CEP {
        /**
         * Creates a {@link PatternStream} from an input data stream and a pattern.
         *
         * @param input DataStream containing the input events
         * @param pattern Pattern specification which shall be detected
         * @param <T> Type of the input events
         * @return Resulting pattern stream
         */
        public static <T> PatternStream<T> pattern(DataStream<T> input, Pattern<T, ?> pattern) {
            return new PatternStream<>(input, pattern);
        }

        /**
         * Creates a {@link PatternStream} from an input data stream and a pattern.
         *
         * @param input DataStream containing the input events
         * @param pattern Pattern specification which shall be detected
         * @param comparator Comparator to sort events with equal timestamps
         * @param <T> Type of the input events
         * @return Resulting pattern stream
         */
        public static <T> PatternStream<T> pattern(
                DataStream<T> input, Pattern<T, ?> pattern, EventComparator<T> comparator) {
            final PatternStream<T> stream = new PatternStream<>(input, pattern);
            return stream.withComparator(comparator);
        }
    }

From this short piece of source it is easy to see that CEP is only a predefined entry class. The class that really needs studying is PatternStream.

The PatternStream class does not extend any superclass, which suggests that it sits at a fairly low level of the code hierarchy.

Basic definitions:

    private final PatternStreamBuilder<T> builder;

    private PatternStream(final PatternStreamBuilder<T> builder) {
        this.builder = checkNotNull(builder);
    }

    PatternStream(final DataStream<T> inputStream, final Pattern<T, ?> pattern) {
        this(PatternStreamBuilder.forStreamAndPattern(inputStream, pattern));
    }

    PatternStream<T> withComparator(final EventComparator<T> comparator) {
        return new PatternStream<>(builder.withComparator(comparator));
    }

    public PatternStream<T> sideOutputLateData(OutputTag<T> lateDataOutputTag) {
        return new PatternStream<>(builder.withLateDataOutputTag(lateDataOutputTag));
    }

Time semantics settings:

    /** Sets the time characteristic to processing time. */
    public PatternStream<T> inProcessingTime() {
        return new PatternStream<>(builder.inProcessingTime());
    }

    /** Sets the time characteristic to event time. */
    public PatternStream<T> inEventTime() {
        return new PatternStream<>(builder.inEventTime());
    }

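By default, when neither event time nor processing time is set explicitly, event time is used. This is why many people get no output at all during test runs. If your own example code produces no results, try calling inProcessingTime().

The reason is that in event-time mode FlinkCEP is driven by watermarks. If the job never assigns timestamps and watermarks, the watermark never advances, so no events are ever matched.

See this StackOverflow answer: Flink CEP not Working in event time but working in Processing Time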
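
The following plain-Java sketch is a simplified model of that behaviour, not Flink's actual operator code. WatermarkBufferDemo is a hypothetical class: it assumes that event-time elements are parked in a buffer ordered by timestamp and are only handed on once a watermark at or beyond their timestamp arrives. With no watermark, nothing ever leaves the buffer.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Simplified model, not Flink code: events wait until the watermark passes their timestamp. */
final class WatermarkBufferDemo {
    // Buffered events, sorted by timestamp.
    private final TreeMap<Long, List<String>> buffer = new TreeMap<>();
    // Events that have been handed on for matching, in timestamp order.
    private final List<String> released = new ArrayList<>();

    /** An arriving event is only buffered; nothing is matched yet. */
    void onEvent(long timestamp, String event) {
        buffer.computeIfAbsent(timestamp, t -> new ArrayList<>()).add(event);
    }

    /** A watermark releases every buffered event with timestamp <= watermark. */
    void onWatermark(long watermark) {
        Map<Long, List<String>> ready = buffer.headMap(watermark, true);
        for (List<String> events : ready.values()) {
            released.addAll(events);
        }
        ready.clear(); // clearing the view also removes the entries from the buffer
    }

    List<String> released() {
        return new ArrayList<>(released);
    }

    public static void main(String[] args) {
        WatermarkBufferDemo demo = new WatermarkBufferDemo();
        demo.onEvent(3L, "c");
        demo.onEvent(1L, "a");
        demo.onEvent(2L, "b");
        System.out.println("before watermark: " + demo.released());
        demo.onWatermark(2L);
        System.out.println("after watermark 2: " + demo.released());
    }
}
```

In a real event-time job the watermark would come from a timestamp and watermark assigner on the input stream (for example assignTimestampsAndWatermarks with a WatermarkStrategy); switching to inProcessingTime() sidesteps the buffering altogether.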

The source code confirms that event time is the default. See the static factory method in the last part of PatternStreamBuilder:

    @Internal
    final class PatternStreamBuilder<IN> {
        ...
        private final TimeBehaviour timeBehaviour;

        /**
         * The time behaviour enum defines how the system determines time for time-dependent order and
         * operations that depend on time.
         */
        enum TimeBehaviour {
            ProcessingTime,
            EventTime
        }

        static <IN> PatternStreamBuilder<IN> forStreamAndPattern(
                final DataStream<IN> inputStream, final Pattern<IN, ?> pattern) {
            // EventTime is passed here, so it is the default time behaviour
            return new PatternStreamBuilder<>(
                    inputStream, pattern, TimeBehaviour.EventTime, null, null);
        }
    }
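
The snippets above suggest that PatternStream behaves like an immutable value: its only field is final, and inProcessingTime(), inEventTime() and withComparator() each return a new PatternStream rather than modifying the current one. Here is a minimal plain-Java sketch of that style. StreamConfig is a hypothetical stand-in, not a Flink class, and it only models the time behaviour and its EventTime default.

```java
/** Plain-Java illustration only; StreamConfig is a hypothetical stand-in for PatternStream. */
final class ImmutableConfigDemo {

    enum TimeBehaviour {
        ProcessingTime,
        EventTime
    }

    static final class StreamConfig {
        private final TimeBehaviour timeBehaviour;

        private StreamConfig(TimeBehaviour timeBehaviour) {
            this.timeBehaviour = timeBehaviour;
        }

        /** Mirrors the default seen in forStreamAndPattern: event time. */
        static StreamConfig create() {
            return new StreamConfig(TimeBehaviour.EventTime);
        }

        /** Returns a new instance; the receiver is left unchanged. */
        StreamConfig inProcessingTime() {
            return new StreamConfig(TimeBehaviour.ProcessingTime);
        }

        /** Returns a new instance; the receiver is left unchanged. */
        StreamConfig inEventTime() {
            return new StreamConfig(TimeBehaviour.EventTime);
        }

        TimeBehaviour timeBehaviour() {
            return timeBehaviour;
        }
    }

    public static void main(String[] args) {
        StreamConfig base = StreamConfig.create();
        StreamConfig processing = base.inProcessingTime();
        System.out.println(base.timeBehaviour());
        System.out.println(processing.timeBehaviour());
    }
}
```

The practical consequence is that the return value has to be used: calling inProcessingTime() on a PatternStream and then continuing to work with the original reference leaves the event-time default in place.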

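Functional methods:

- the overloaded select methods (according to the documentation this is the older API; internally it delegates to the newer process method)

- the overloaded flatSelect methods (according to the documentation this is the older API; internally it delegates to the newer process method)

- the overloaded process methods (based on PatternProcessFunction, introduced in Flink 1.8; recommended)

The processMatch method of PatternProcessFunction is invoked once for every matching event sequence. The keys of the match argument are the names of the individual patterns (for example start, middle, end), and each value is a List of the events accepted by that pattern, ordered by timestamp. The official sample code is shown below (as usual, rather cryptic):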

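    class MyPatternProcessFunction<IN, OUT> extends PatternProcessFunction<IN, OUT> {
        @Override
        public void processMatch(Map<String, List<IN>> match, Context ctx, Collector<OUT> out) throws Exception {
            IN startEvent = match.get("start").get(0);
            IN endEvent = match.get("end").get(0);
            // OUT(...) is pseudocode from the docs: build an output record from the two events
            out.collect(OUT(startEvent, endEvent));
        }
    }

In addition, the context ctx gives access to the current processing time and to the timestamp of the current match (the timestamp of the last element assigned to the match). It can also be used to send elements to a side output.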

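A few points need to be confirmed here:

Is the function really applied once per event sequence? In other words, if the three matches a b1 c, a b2 c and a b1 b2 c are all retained, does each of them produce its own result, so that the pattern a b+ c combined with a single PatternProcessFunction emits three result records?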

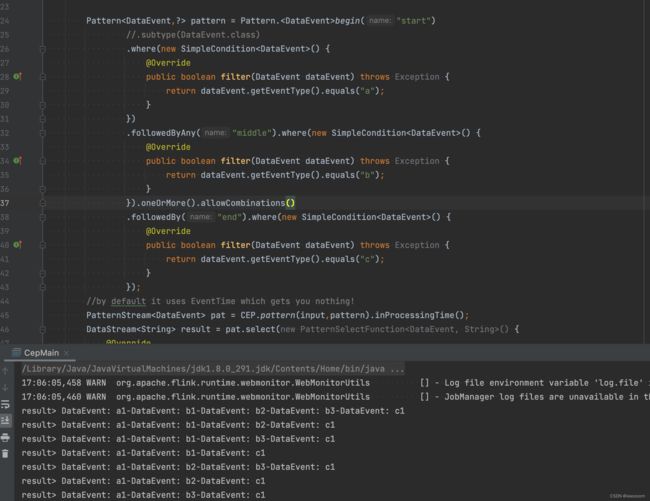

Enough talk. Here is some hands-on material (boilerplate code), based on Flink 1.13.6:

    package com.xiaozoom.cep.event;

    import org.apache.flink.streaming.api.functions.source.SourceFunction;

    public class EventSource implements SourceFunction<DataEvent> {
        private static final long serialVersionUID = 1L;

        // volatile because cancel() is called from a different thread than run()
        private volatile boolean isRunning = true;

        @Override
        public void run(SourceContext<DataEvent> sourceContext) throws Exception {
            while (isRunning) {
                // emits a, b1, d1, b2, d2, b3, c once per minute
                sourceContext.collect(new DataEvent("a", 1));
                sourceContext.collect(new DataEvent("b", 1));
                sourceContext.collect(new DataEvent("d", 1));
                sourceContext.collect(new DataEvent("b", 2));
                sourceContext.collect(new DataEvent("d", 2));
                sourceContext.collect(new DataEvent("b", 3));
                sourceContext.collect(new DataEvent("c", 1));
                Thread.sleep(60000);
            }
        }

        @Override
        public void cancel() {
            isRunning = false;
        }
    }

    package com.xiaozoom.cep.event;

    /**
     * basic pojo
     * @author xiaozoom
     */
    public class DataEvent {
        public String EventType;
        public Integer EventValue;

        public DataEvent() {
        }

        public DataEvent(String eventType, Integer eventValue) {
            EventType = eventType;
            EventValue = eventValue;
        }

        public String getEventType() {
            return EventType;
        }

        public void setEventType(String eventType) {
            EventType = eventType;
        }

        public Integer getEventValue() {
            return EventValue;
        }

        public void setEventValue(Integer eventValue) {
            EventValue = eventValue;
        }

        @Override
        public String toString() {
            return "DataEvent: " + getEventType() + getEventValue();
        }

        @Override
        public int hashCode() {
            return getEventType().hashCode() + getEventValue();
        }

        @Override
        public boolean equals(Object other) {
            if (other instanceof DataEvent) {
                DataEvent otherE = (DataEvent) other;
                return otherE.getEventType().equals(getEventType())
                        && otherE.getEventValue().equals(getEventValue());
            } else {
                return false;
            }
        }
    }

    package com.xiaozoom.cep;

    import com.xiaozoom.cep.event.DataEvent;
    import com.xiaozoom.cep.event.EventSource;
    import org.apache.flink.cep.CEP;
    import org.apache.flink.cep.PatternSelectFunction;
    import org.apache.flink.cep.PatternStream;
    import org.apache.flink.cep.pattern.Pattern;
    import org.apache.flink.cep.pattern.conditions.SimpleCondition;
    import org.apache.flink.streaming.api.datastream.DataStream;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

    import java.util.List;
    import java.util.Map;

    public class CepMain {
        public static void main(String[] args) throws Exception {
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
            env.setParallelism(1);

            DataStream<DataEvent> input = env.addSource(new EventSource());

            Pattern<DataEvent, DataEvent> pattern = Pattern.<DataEvent>begin("start")
                    //.subtype(DataEvent.class)
                    .where(new SimpleCondition<DataEvent>() {
                        @Override
                        public boolean filter(DataEvent dataEvent) throws Exception {
                            return dataEvent.getEventType().equals("a");
                        }
                    })
                    .followedByAny("middle").where(new SimpleCondition<DataEvent>() {
                        @Override
                        public boolean filter(DataEvent dataEvent) throws Exception {
                            return dataEvent.getEventType().equals("b");
                        }
                    }).oneOrMore().consecutive()
                    .followedBy("end").where(new SimpleCondition<DataEvent>() {
                        @Override
                        public boolean filter(DataEvent dataEvent) throws Exception {
                            return dataEvent.getEventType().equals("c");
                        }
                    });

            // Event time is the default; without watermarks it yields nothing,
            // so switch to processing time explicitly.
            PatternStream<DataEvent> pat = CEP.pattern(input, pattern).inProcessingTime();

            DataStream<String> result = pat.select(new PatternSelectFunction<DataEvent, String>() {
                @Override
                public String select(Map<String, List<DataEvent>> map) throws Exception {
                    // Concatenate the events of each stage, separated by "-"
                    StringBuilder result = new StringBuilder();
                    map.get("start").forEach(x -> {
                        result.append(x.toString()).append("-");
                    });
                    map.get("middle").forEach(x -> {
                        result.append(x.toString()).append("-");
                    });
                    map.get("end").forEach(x -> {
                        result.append(x.toString()).append("-");
                    });
                    // drop the trailing "-"
                    result.deleteCharAt(result.length() - 1);
                    return result.toString();
                }
            });

            result.print("result");
            env.execute();
        }
    }

This is a standard a => skip-till-any => b+ (oneOrMore, looping, with strict next between the b events) => skip-till-next => c pattern.

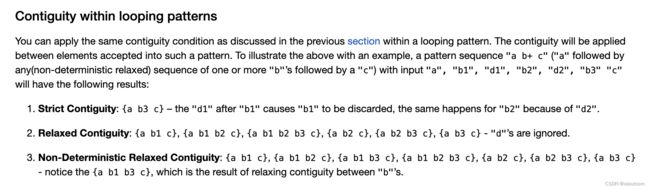

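According to the official description:

The first case may be rather laborious to verify: + appears to be greedy by default, which the documentation does not state, and when it is used with non-strict contiguity it has to be removed. Otherwise the result under relaxed contiguity differs, and non-deterministic relaxed contiguity throws an error.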

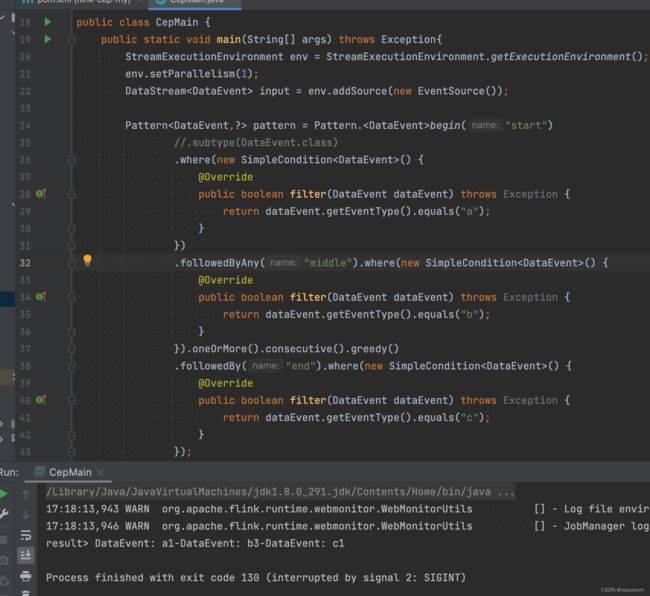

Verifying the second case:

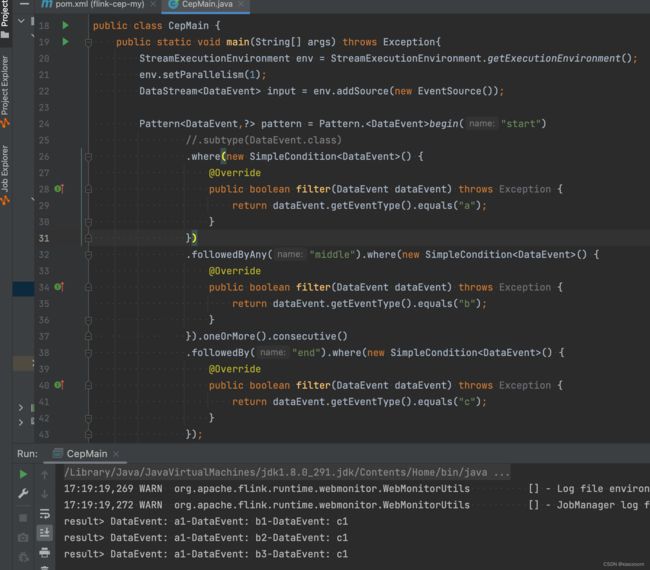

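Verifying the third case:

According to the official documentation, the process method can handle not only complete matches but also partial matches that were discarded because their time ran out (that is, they exceeded the window set with within). All that is needed is to additionally implement the TimedOutPartialMatchHandler interface: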

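    class MyPatternProcessFunction<IN, OUT> extends PatternProcessFunction<IN, OUT> implements TimedOutPartialMatchHandler<IN> {
        @Override
        public void processMatch(Map<String, List<IN>> match, Context ctx, Collector<OUT> out) throws Exception {
            ...
        }

        @Override
        public void processTimedOutMatch(Map<String, List<IN>> match, Context ctx) throws Exception {
            IN startEvent = match.get("start").get(0);
            // T(...) is pseudocode from the docs: build the side-output record
            ctx.output(outputTag, T(startEvent));
        }
    }

Note that processTimedOutMatch has no access to the main output stream (it receives no Collector). Data can only be sent to a side output through the context ctx.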

Why is that? The process method of PatternStream never calls processTimedOutMatch itself:

    public <R> SingleOutputStreamOperator<R> process(
            final PatternProcessFunction<T, R> patternProcessFunction) {
        final TypeInformation<R> returnType =
                TypeExtractor.getUnaryOperatorReturnType(
                        patternProcessFunction,
                        PatternProcessFunction.class,
                        0,
                        1,
                        TypeExtractor.NO_INDEX,
                        builder.getInputType(),
                        null,
                        false);

        return process(patternProcessFunction, returnType);
    }

    /**
     * Applies a process function to the detected pattern sequence. For each pattern sequence the
     * provided {@link PatternProcessFunction} is called. In order to process timed out partial
     * matches as well one can use {@link TimedOutPartialMatchHandler} as additional interface.
     *
     * @param patternProcessFunction The pattern process function which is called for each detected
     *     pattern sequence.
     * @param <R> Type of the resulting elements
     * @param outTypeInfo Explicit specification of output type.
     * @return {@link DataStream} which contains the resulting elements from the pattern process
     *     function.
     */
    public <R> SingleOutputStreamOperator<R> process(
            final PatternProcessFunction<T, R> patternProcessFunction,
            final TypeInformation<R> outTypeInfo) {
        return builder.build(outTypeInfo, builder.clean(patternProcessFunction));
    }

It is worth mentioning that the documentation does not describe this overload, which accepts a TypeInformation directly. It can be called manually when the situation calls for it, for instance if automatic type extraction fails.

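The builder.clean call can be traced straight back to the execution environment, which runs the closure cleaner on the function. So when does the function actually take effect? The answer is in CepOperator (in Flink everything is an operator, including sinks and sources):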

    private void advanceTime(NFAState nfaState, long timestamp) throws Exception {
        try (SharedBufferAccessor<IN> sharedBufferAccessor = partialMatches.getAccessor()) {
            Tuple2<
                            Collection<Map<String, List<IN>>>,
                            Collection<Tuple2<Map<String, List<IN>>, Long>>>
                    pendingMatchesAndTimeout =
                            nfa.advanceTime(sharedBufferAccessor, nfaState, timestamp);

            Collection<Map<String, List<IN>>> pendingMatches = pendingMatchesAndTimeout.f0;
            Collection<Tuple2<Map<String, List<IN>>, Long>> timedOut = pendingMatchesAndTimeout.f1;

            if (!pendingMatches.isEmpty()) {
                processMatchedSequences(pendingMatches, timestamp);
            }
            if (!timedOut.isEmpty()) {
                processTimedOutSequences(timedOut);
            }
        }
    }

    private void processMatchedSequences(
            Iterable<Map<String, List<IN>>> matchingSequences, long timestamp) throws Exception {
        PatternProcessFunction<IN, OUT> function = getUserFunction();
        setTimestamp(timestamp);
        // processMatch is called once for every complete match
        for (Map<String, List<IN>> matchingSequence : matchingSequences) {
            function.processMatch(matchingSequence, context, collector);
        }
    }

    private void processTimedOutSequences(
            Collection<Tuple2<Map<String, List<IN>>, Long>> timedOutSequences) throws Exception {
        PatternProcessFunction<IN, OUT> function = getUserFunction();
        // timed-out partial matches are only handled if the user function opts in
        if (function instanceof TimedOutPartialMatchHandler) {

            @SuppressWarnings("unchecked")
            TimedOutPartialMatchHandler<IN> timeoutHandler =
                    (TimedOutPartialMatchHandler<IN>) function;

            for (Tuple2<Map<String, List<IN>>, Long> matchingSequence : timedOutSequences) {
                setTimestamp(matchingSequence.f1);
                timeoutHandler.processTimedOutMatch(matchingSequence.f0, context);
            }
        }
    }

When the operator's time advances and some partial matches have timed out, processTimedOutSequences is always called. If the user's PatternProcessFunction also implements the TimedOutPartialMatchHandler interface, the operator casts it and calls processTimedOutMatch for each timed-out sequence. If it does not, nothing is done and the timed-out partial matches are simply dropped.
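
The dispatch logic read from CepOperator above can be condensed into a small plain-Java sketch. It does not use Flink: MatchFunction and TimeoutHandler are hypothetical stand-ins for PatternProcessFunction and TimedOutPartialMatchHandler, matches are plain strings, and lists stand in for the collector and the side output. It shows the two behaviours visible in the source: the function is called once per complete match, and timed-out partial matches are only delivered when the function also implements the timeout interface.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Plain-Java illustration only; the interfaces are hypothetical stand-ins, not Flink types. */
final class TimeoutDispatchDemo {

    /** Stand-in for PatternProcessFunction: handles complete matches. */
    interface MatchFunction {
        void processMatch(String match, List<String> out);
    }

    /** Stand-in for TimedOutPartialMatchHandler: optional extra interface. */
    interface TimeoutHandler {
        void processTimedOutMatch(String partialMatch, List<String> sideOutput);
    }

    /** Mirrors the structure of processMatchedSequences and processTimedOutSequences. */
    static void dispatch(
            MatchFunction function,
            List<String> matches,
            List<String> timedOut,
            List<String> out,
            List<String> sideOutput) {
        for (String match : matches) {
            function.processMatch(match, out); // once per complete match
        }
        if (function instanceof TimeoutHandler) {
            TimeoutHandler handler = (TimeoutHandler) function;
            for (String partial : timedOut) {
                handler.processTimedOutMatch(partial, sideOutput);
            }
        }
        // Otherwise the timed-out partial matches are dropped.
    }

    static final class PlainFunction implements MatchFunction {
        @Override
        public void processMatch(String match, List<String> out) {
            out.add("match: " + match);
        }
    }

    static final class TimeoutAwareFunction implements MatchFunction, TimeoutHandler {
        @Override
        public void processMatch(String match, List<String> out) {
            out.add("match: " + match);
        }

        @Override
        public void processTimedOutMatch(String partialMatch, List<String> sideOutput) {
            sideOutput.add("timeout: " + partialMatch);
        }
    }

    public static void main(String[] args) {
        List<String> matches = Arrays.asList("a b1 c", "a b2 c", "a b1 b2 c");
        List<String> timedOut = Arrays.asList("a b3");
        List<String> out = new ArrayList<>();
        List<String> side = new ArrayList<>();
        dispatch(new TimeoutAwareFunction(), matches, timedOut, out, side);
        System.out.println(out);
        System.out.println(side);
    }
}
```

Under these assumptions, three complete matches lead to three calls and three output records, which is consistent with the for loop in processMatchedSequences.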

(To be continued. Bookmarks and corrections are welcome.)