- MATLAB MLE optimization: MLE+: Matlab Toolbox for Integrated Modeling, Control and Optimization for Buildings...

Simon Zhong

matlab, mle, optimization

Abstract: Following unilateral optic nerve section in adult PVG hooded rat, the axon guidance cue ephrin-A2 is up-regulated in caudal but not rostral superior colliculus (SC) and the EphA5 receptor is down-regulated in axotomised retinal gan

- [Swift] LeetCode 767. Reorganize String

weixin_30591551

swift, runtime

➤ WeChat official account: 山青咏芝 (shanqingyongzhi) ➤ Cnblogs: 山青咏芝 (https://www.cnblogs.com/strengthen/) ➤ GitHub: https://github.com/strengthen/LeetCode ➤ Original article: https://www.cnblogs.com/streng

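- Generative cartography

Bwywb_3

deep learning, machine learning, generative adversarial networks

Generative cartography is a technique that uses generative algorithms and artificial intelligence to create maps automatically. It combines traditional geographic information system (GIS) technology with modern generative models (such as deep learning and GANs) to produce maps that meet given requirements from input data. The approach has potential applications in urban planning, virtual environment design, game development, and other fields. Key features: Automated generation: using algorithms and models, the system generates maps from input geographic or spatial data, without manual one-by-one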

- Differences between Ubuntu Juju and Ansible

xidianjiapei001

#Kubernetes, ubuntu, ansible, linux, cloud native, Juju

Juju and Ansible are both powerful tools used for managing and orchestrating IT infrastructure and applications, but they have different approaches and use cases. Here's a breakdown of the key differences between them: 1. Conceptual Fo

- 2005 Gaokao English, Beijing paper: Reading Comprehension C

让文字更美

How could we possibly think that keeping animals in cages in unnatural environments - mostly for entertainment purposes - is fair and respectful? Zoo officials say they are concerned ab

- ComfyUI AnimateDiff-Lightning tutorial

jayli517

ComfyUI, AIGC

Introduction. Project page: https://huggingface.co/ByteDance/AnimateDiff-Lightning Online demo (blocked in mainland China): https://huggingface.co/spaces/ByteDance/AnimateDiff-Lightning Mirror for mainland China: https://hf-mirror.com/ByteDance/AnimateDiff-Lightning AnimateDiff

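- VITS source code walkthrough 2: model overview

迪三

#NN_Audio, audio, artificial intelligence

VITS is one of the most popular techniques for text-to-speech (TTS). Its approach is to fuse text and speech information into the HiFiGAN latent space, so that text controls the HiFiGAN generator and the output is speech carrying the semantics of the text. VITS is trained mainly as a GAN: its generator G is SynthesizerTrn and its discriminator D is MPD. The VITS discriminator is almost identical to HiFiGAN's, while the generator combines three kinds of models: text, temporal, and audio. 1. File overview: the model part contains three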

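- Fixing the bert-base-chinese BERT model error (automatic download over the network fails)

搬砖修狗

bert, artificial intelligence, deep learning, python

1. The download problem. Hugging Face is the original site for accessing BERT models, but it is currently unreachable from many parts of China, so any code that pulls a model from that site raises an error. This article takes the bert-base-chinese error as an example and provides a way to download the model locally to solve the problem. 2. The site. google-bert (BERT community): This organization is maintained by the transformers tea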

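- Creating an SDC file in Quartus II

cattao1989

verilog

Tutorial on writing an SDC file in Quartus II. Step 1: open the TimeQuest Timing Analyzer; you can also click the icon marked 1 in the figure. Step 2: click Netlist, then click Create Timing Netlist. Step 3: select the options as shown in the figure below.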

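- Quartus SDC settings in the UI (part 2)

落雨无风

IC design, fpga, FPGA development

Quartus SDC settings. The previous article, on a simple Quartus synthesis configuration flow (part 1), listed the categories you need to cover when writing an SDC file by hand; this one explains how to configure SDC through the UI. 1. In Quartus, after importing the Verilog design, open Tools/TimeQuest Timing Analyzer. The window is roughly split into an upper and a lower half: the upper half shows Report and Tasks on the left and the welcome screen on the right; the lower half shows Console and History. Figure missing here,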

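- Deep-learning-based crop disease detection

SEU-WYL

deep learning, dnn, artificial intelligence

Deep-learning-based crop disease detection uses convolutional neural networks (CNNs), generative adversarial networks (GANs), Transformers, and other deep learning techniques to identify and classify crop diseases automatically, helping agricultural workers manage crops more efficiently and reduce losses. 1. Challenges in crop disease detection. Many disease types: crop diseases are diverse; different diseases present very differently on the same crop, and the same disease may show different symptoms at different growth stages. Environmental effects: weather, light, humidity, and other external factors affect how crops look, making disease detec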

- Deep learning: generative adversarial networks (GAN, Generative Adversarial Network)

Ambition_LAO

deep learning, generative adversarial networks

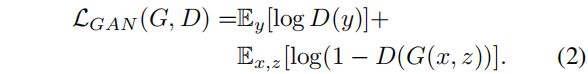

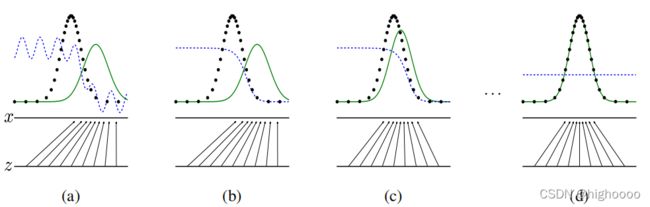

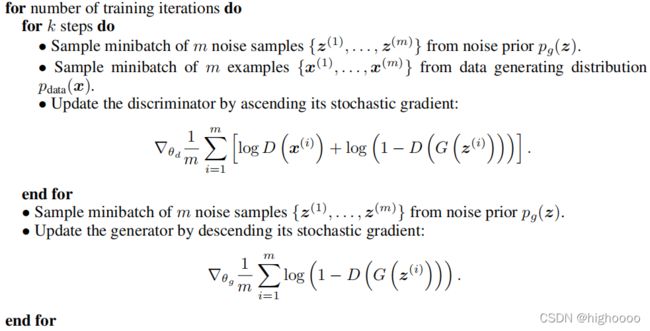

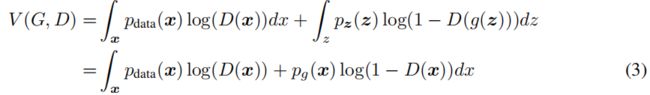

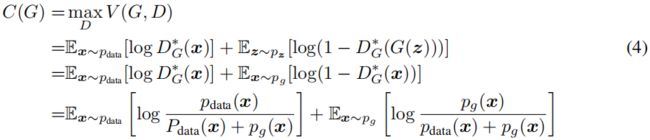

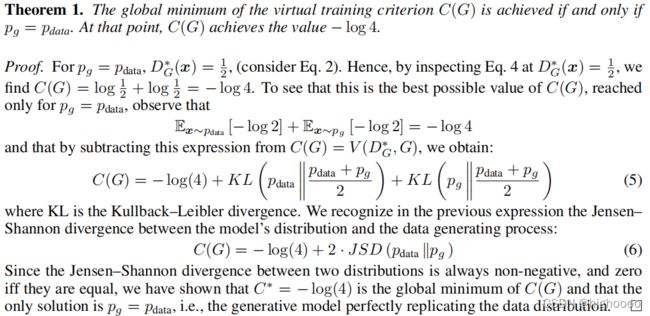

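A generative adversarial network (GAN) is a deep learning model proposed by Ian Goodfellow et al. in 2014. GANs are mainly used to generate data: two neural networks compete with each other to produce new data realistic enough to pass for real. The following covers GANs in detail, including the concept, purpose, key points, implementation process, code, and use cases. 1. Concept. A GAN consists of two neural networks: a generator (Generator) and a discriminator (Discrimina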

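- A long read on the architecture of Web3

Keegan小钢

web3, architecture, blockchain

This article was first published on the WeChat official account Keegan小钢. Web3 has developed to the point where its ecosystem has begun to take shape. If we abstract the current Web3 ecosystem into a bird's-eye view, it divides bottom-up into four layers: the blockchain network layer, the middleware layer, the application layer, and the access layer. Let's look at what each layer contains. This chapter also mentions many project names; for reasons of space they are not introduced one by one, and interested readers can look up further material. Blockchain network layer: the bottom layer is the "blockchain network layer", the foundation of Web3, which mainly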

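- [Bilingual news] AGI safety and alignment: recent work at DeepMind

曲奇人工智能安全

agi, safety, llama, artificial intelligence

We want to share a summary of our recent work with the AF community. Below are some details about what we are doing, why we are doing it, and what we think its significance is. We hope this helps people build on our work and understand how their work relates to ours. by Rohin Shah, Seb Farquhar, Anca Dragan 21st Aug 2024 AI Alignment Forum We wanted to share a recap of our recent output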

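- DHTMLX Gantt tutorial: how to implement persistent UI state

界面开发小八哥

gantt chart, ui, DHTMLX, project management, javascript

DHTMLX Gantt is a full-featured Gantt chart for cross-browser and cross-platform applications. It covers the needs of project management applications and is described as the most complete Gantt chart library. In modern web applications, preserving the state of UI elements across page reloads is essential for a smooth user experience. This tutorial walks you through a simple implementation of persistent UI in DHTMLX Gantt, focusing on a small set of features: the expanded or collapsed state of task branches, and the selected Gantt zoom level. You will learn how to store these settings in the brow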

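- Coding Interviews (剑指 Offer), problem 05: replace spaces

Hubhub

Problem description: LeetCode link. Code: class Solution { public: string replaceSpace(string s) { string ans = ""; for (auto e : s) { if (e == ' ') { ans += "%20"; } else { ans += e; } } return ans; } };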
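The same character-by-character idea can be sketched in Python. This is a minimal illustration rather than the author's solution: the function name `replace_space` is hypothetical, and it assumes only single ASCII spaces need to become `%20`.

```python
def replace_space(s: str) -> str:
    """Return a copy of s with every space character replaced by "%20"."""
    parts = []
    for ch in s:
        # Mirror the loop in the C++ snippet: emit "%20" for a space,
        # otherwise copy the character unchanged.
        parts.append("%20" if ch == " " else ch)
    # Joining once at the end avoids repeated string concatenation.
    return "".join(parts)


if __name__ == "__main__":
    print(replace_space("We are happy."))
```

In everyday Python, `s.replace(" ", "%20")` does the same job; the explicit loop is kept only to match the structure of the snippet.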

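- Differences between Python and Java

周作业

miscellany

For more on decorators, see https://wiki.python.org/moin/PythonDecorators Source: my.oschina.net/taogang/blog/264351 Basic concepts: Python and JavaScript are both scripting languages, so they share many traits: both need an interpreter to run, both are dynamically typed, both support automatic memory management, both can call eval() to execute scripts, and so on. However, they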

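- Exploring the mysteries of deep learning: a fantastical journey from theory to practice

小周不想卷

deep learning

Contents. Introduction: through the fog of intelligence. 1. The fantastical origins of deep learning: from the perceptron to neural networks. 1.1 The perceptron's beginnings. 1.2 The birth and evolution of neural networks. 1.3 The rise of deep learning. 2. The core magic of deep learning: neural network architectures. 2.1 Feedforward neural network (FNN). 2.2 Convolutional neural network (CNN). 2.3 Recurrent neural network (RNN) and its variants (LSTM, GRU). 2.4 Generative adversarial network (GAN). 3. The spellbook of deep learning: algorithms and training. 3.1 Loss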

- OpenAI gym: How to get complete list of ATARI environments

营赢盈英

AI, ai, deep learning, openai gym, reinforcement learning

Question: OpenAI Gym: how to get the complete list of ATARI environments. Background: I have installed OpenAI gym and the ATARI environments. I know that I can find all the ATARI games in the documentation but is there a way to do this in Python, without printing any other environments (e

- Studying CycleGAN: Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks, 2017.

屎山搬运工

deep learning, CycleGAN, GAN, style transfer

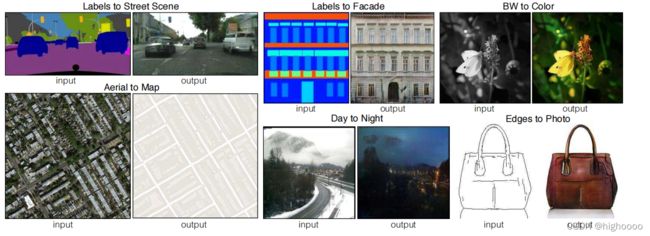

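[Overview] Image-to-image translation generally requires large amounts of paired data, which is extremely time-consuming and laborious to collect. This article therefore introduces CycleGAN, an image translation technique that works without paired examples. It has five parts, covering: the image translation problem; how CycleGAN performs unpaired translation; the CycleGAN architecture; applications of CycleGAN; and caveats. Image-to-image translation involves generating a new synthetic version of a given image with a specific modification, such as turning a summer landscape into a winter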

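- Java basics: inheritance

Absinthe_苦艾酒

java, programming languages

1. A subclass can have only one direct parent class (a parent class can have multiple subclasses). 2. private members are not inherited, and package-private (default access) members are not inherited by subclasses in a different package. The code is as follows. Parent class:

package began;

// Define a parent class

public class Pet01 {

// Field

public String name;

// Method

public void run(String name) { System.out.println(name + "running"); }

}

Subclass: package be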

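- Hagia Sophia becomes a mosque: what does it mean?

茶与酒

Hagia Sophia in Istanbul, Turkey, is one of the world's greatest monuments. It has a history of more than 1,500 years and is on UNESCO's World Heritage List. Standing where Asia and Europe meet, it is not only a blend of Eastern and Western cultures but also a witness to the confrontation and fusion of Christianity and Islam. It has changed identity several times in its history: Christian church, mosque, museum... Recently, Turkey announced that its status will change once again, drawing wide international attention. Main text: Erdogan signs decree allowing H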

- Great news: HashiCorp Vagrant 2.2.0 released!

HashiCorpChina

OCT 17 2018 BRIAN CAIN We are pleased to announce the release of Vagrant 2.2.0. Vagrant is a tool for building and distributing development environments. The highlight of this release is the introduction of Vagrant Cloud command line tool

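- iOS 7: notes on swipe-gesture switching between ViewControllers

wxcswd

ios

In the handleGesture function, note that dismissing the ViewController should go in case UIGestureRecognizerStateBegan. After the swipe transition dismisses it, you must add a check in the handler that presents that ViewController: if(!self.presentedViewController) // presentedViewController is documented in its header file as read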

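- GaN HEMT: the future of power semiconductors

David WangYang

hardware engineering

Silicon-based metal oxide. Since the 1960s, silicon-based metal-oxide-semiconductor field-effect transistors (MOSFETs) have been the standard for power electronics applications. Nevertheless, advances across many technologies (especially in automotive and consumer electronics) pose new challenges for developers seeking higher efficiency and greater power density in ever-smaller form factors. From large data centers and wall-plug AC adapters to automotive on-board charging stations, power supplies of all kinds need high voltage while taking up as little valuable board space as possible. Autonomous vehicles also need more efficient energy distribution in order to run more and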

- 2018-11-13

hongmei_yoyo

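1) This book is mainly about the interesting similarities between traditional publishing and digital publishing. The book draws interesting parallels between traditional publishing and digital publishing. 2) Scenario: the cities of Suzhou and Hangzhou have much in common. Sentence: When I visited Hangzhou, I saw many striking parallels between Hangzhou and Suzho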

- Java interview questions: Spring Boot interview questions, part 2 (Spring Boot is the best Java framework for microservices)

Liberty-895

JavaWeb, advanced Java, interview questions

Question 1: How are path="users" and collectionResourceRel="users" used with Spring Data Rest? @RepositoryRestResource(collectionResourceRel = "users", path = "users") public interface UserRestRepository extends PagingAndSortingRepository

- pwiz, a model generator

weixin_33861800

python, database, shell

Documentation link. pwiz is a little script that ships with peewee and is capable of introspecting an existing database and generating model code suitable for interacting with the underlying data. If you have a database already, pwiz can give you a nice bo

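- Nowcoder Weekly Contest Round 58 (part 2)

筱姌

algorithms

Problem: "It can be done, right?" Problem description: Log in - Nowcoder, a platform for IT written-test and interview preparation. Code: #include#include using namespace std; string findMax(string s) { int n = s.length(); string ans = s; for(inti=0;ians) ans = s; swap(s[i], s[j]); } } return ans; } int main() { int t; cin >> t; while (t--)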

- Network security L1: Introduction to Security

h08.14

network security, web security, security

Information security 1. The process of preventing and detecting unauthorised use of your information. 2. The science of guarding information systems and assets against malicious behaviours of intelligent adversaries. 3. Security vs

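- ASM series, part 4: dynamically injecting method logic with the Method component

lijingyao8206

bytecode, jvm, AOP, dynamic proxy, ASM

This post continues with examples to look more closely at how the Method component dynamically changes a method's bytecode. From the previous post we know that ClassVisitor's visitMethod() method can return a MethodVisitor instance. So, just as ClassVisitor changes class members, when MethodVisitor needs to change method members and inject logic, it can also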

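- Thinking in Java: inner classes

百合不是茶

java, inner classes, anonymous inner classes

Inner classes: an inner class knows about its outer class and can communicate with it, and code written with inner classes is cleaner and more elegant.

1. Creating an inner class: an inner class is declared inside another class.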

package com.wj.InsideClass;

/*

* Creating an inner class

*/

public class CreateInsideClass {

public CreateInsideClass(

- web.xml error

crabdave

web.xml

web.xml error

The content of element type "web-app" must match "(icon?,display-name?,description?,distributable?,context-param*,filter*,filter-mapping*,listener*,servlet*,s

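- Defining your own generic class

麦田的设计者

java, android, generics

Why define a generic class? When the reference type that a class operates on is not known in advance,

a generic class lets the class be extended to cover it.

For example, there is a student class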

Student{

Student(){

System.out.println("I'm a student.....");

}

}

and there is a teacher class

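- Four ways to clear floats in CSS

IT独行者

JavaScript, UI, css

Clearing floats is something every front-end developer knows all too well. I'm new to this, so I'm taking notes to build up experience and work toward the experts' level. Search online and you will find countless ways to clear floats in CSS; I have used a few and will share my impressions.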

1. Add an empty div with clear:both at the end

.div1 { background: #000080; border: 1px s

- Configuring Cygwin to use the Windows JDK

_wy_

jdk, windows, cygwin

1.[vim /etc/profile]

JAVA_HOME="/cygdrive/d/Java/jdk1.6.0_43" (the JDK path on Windows is D:\Java\jdk1.6.0_43)

PATH="$JAVA_HOME/bin:${PATH}"

CLAS

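- Installing Maven on Linux

无量

maven, linux, installation

Installing Maven on Linux (reposted). 1. First go to the official Maven site

and download the installation file. The latest version is currently 3.0.3, and the file to download is

apache-maven-3.0.3-bin.tar.gz; you can use the wget command to download it.

2. Go to the download folder, find the downloaded file, and run the following command to unpack it

tar -xvf apache-maven-3.0.3-bin.tar.gz

The unpacked folder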

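- Tomcat HTTPS configuration and syslog-ng configuration

aichenglong

tomcat, http-to-https redirect, syslog-ng configuration, syslog configuration

1) Configuring HTTPS in Tomcat, with automatic redirect from HTTP to HTTPS

1) Generate a key in the TOMCAT_HOME directory (keytool is a command that ships with the JDK)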

keytool -genkey -alias tomcat -keyalg RSA -keypass changeit -storepass changeit

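- Summary of a number-claiming campaign

alafqq

campaign

Summary of a lottery campaign

Requirement: every user who opens the campaign page claims one number, one out of 1000;

Campaign requirements

1. Randomness: the numbers must be random;

2. Bounded winning probability: if 3,200 entries are issued, at most 4 of them can win

3. Efficiency (generating a fresh random number for every visitor is not efficient enough);

4. Survive power loss (resume from the next number) and service restarts (a database would be overkill, so the state must not be kept in a database)

Solution

1. Generate 1000 random numbers in advance, and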
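The original solution is cut off, so the following is only one possible reading of the requirements, not the author's implementation. It assumes a pool of the numbers 1 to `size` shuffled once, handed out in order, and cycled when exhausted, with the pool and a cursor kept in a small JSON file so that a restart resumes at the next number. The class name `NumberPool`, the file layout, and the cycling behaviour are all hypothetical.

```python
import json
import os
import random
import tempfile


class NumberPool:
    """Hands out pre-shuffled numbers in order and persists its position."""

    def __init__(self, path, size=1000, seed=None):
        self.path = path
        if os.path.exists(path):
            # Resume after a restart: reload the shuffled pool and the cursor.
            with open(path, "r", encoding="utf-8") as f:
                state = json.load(f)
            self.numbers = state["numbers"]
            self.cursor = state["cursor"]
        else:
            # Shuffle once up front instead of drawing a random number per user.
            self.numbers = list(range(1, size + 1))
            random.Random(seed).shuffle(self.numbers)
            self.cursor = 0
            self._save()

    def _save(self):
        # Write to a temporary file and rename it over the old one, so a
        # crash mid-write leaves the previous state file intact.
        directory = os.path.dirname(os.path.abspath(self.path))
        fd, tmp = tempfile.mkstemp(dir=directory)
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            json.dump({"numbers": self.numbers, "cursor": self.cursor}, f)
        os.replace(tmp, self.path)

    def claim(self):
        """Return the next number, cycling through the pool when it runs out."""
        number = self.numbers[self.cursor % len(self.numbers)]
        self.cursor += 1
        self._save()
        return number
```

Because the pool is cycled in a fixed order, 3,200 claims over 1,000 numbers give each number out at most 4 times, which is one way to meet the cap in requirement 2. Rewriting the whole file on every claim is simple but not fast; storing only the cursor separately would be a natural refinement.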

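- Java data structures: traversal and sorting with bubble sort

百合不是茶

java

Bubble sort in Java is a simple sorting method

How bubble sort works:

Compare each pair of adjacent numbers; the first pass puts the largest number in first position, the second pass settles the second position, and so on;

Repeat these steps for all elements except the last one

Example: take int array[]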
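The pass-by-pass procedure described above can be sketched as follows. This is the textbook formulation rather than the article's own code, which is cut off: each pass compares adjacent pairs and settles one more element at the end of the list. The function name `bubble_sort` and the `descending` flag are illustrative assumptions.

```python
def bubble_sort(values, descending=False):
    """Return a sorted copy of values using bubble sort."""
    items = list(values)  # work on a copy so the caller's list is untouched
    n = len(items)
    for done in range(n - 1):
        swapped = False
        # After `done` passes, the last `done` elements are already in place.
        for i in range(n - 1 - done):
            if descending:
                out_of_order = items[i] < items[i + 1]
            else:
                out_of_order = items[i] > items[i + 1]
            if out_of_order:
                items[i], items[i + 1] = items[i + 1], items[i]
                swapped = True
        if not swapped:
            # No swaps in a full pass means the list is already sorted.
            break
    return items


if __name__ == "__main__":
    print(bubble_sort([5, 1, 4, 2, 8]))
    print(bubble_sort([5, 1, 4, 2, 8], descending=True))
```

With `descending=True` the largest value ends up first, matching the order the description mentions.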

- One way to check in JS whether an input box contains a number

bijian1013

js

Here is one way to check in JS whether the value typed into an input box is a number:

<form method=post target="_blank">

数字:<input type="text" name=num onkeypress="checkNum(this.form)"><br>

</form>

- The two attributes of the Test annotation: expected and timeout

bijian1013

java, JUnit, expected, timeout

From the JUnit 4 Test documentation:

The Test annotation supports two optional parameters.

The first, expected, declares that a test method should throw an exception.

If it doesn't throw an exception or if it

- [Gson, part 2] Deserializing POJOs with inheritance

bit1129

POJO

Parent class

package inheritance.test2;

import java.util.Map;

public class Model {

private String field1;

private String field2;

private Map<String, String> infoMap

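- [Spark 84] Assorted Spark notes

bit1129

spark

1. Where is a ShuffleMapTask's shuffle data recorded in the MapOutputTracker?

ShuffleMapTask's runTask method is responsible for writing data to the shuffle map files. When the task completes successfully, DAGScheduler is notified, and the recording into MapOutputTracker is done in DAGScheduler's handleTaskCompletion method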

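- A roundup of WAS scripts and what they do

ronin47

WAS, scripts

http://www.ibm.com/developerworks/cn/websphere/library/samples/SampleScripts.html

I happened upon this list of scripts and their purposes on the WAS site; it looks very useful, so I'm sharing it here first

Download

These sample jacl and Jython scripts can be used in different versions of WebSphere Application Server to auto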

- java-12. Compute 1+2+3+...+n without multiplication or division, and without for, while, if, else, switch, case, or conditional expressions

bylijinnan

switch

Borrowing an approach found online, implemented in Java:

public class NoIfWhile {

/**

* @param args

*

* find x=1+2+3+....n

*/

public static void main(String[] args) {

int n=10;

int re=find(n);

System.o
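The Java listing is cut off before `find` is shown, so the author's approach is not known. One common trick for this puzzle, sketched here in Python as an assumption, is to let short-circuit evaluation stop the recursion instead of an `if`. The name `sum_to` is hypothetical, and the sketch assumes `n` is a non-negative integer small enough to stay within Python's recursion limit.

```python
def sum_to(n: int) -> int:
    """Return 1 + 2 + ... + n without loops, if/else, or multiplication."""
    # `n and ...` short-circuits: when n is 0 the right-hand side is never
    # evaluated, so the recursion stops and 0 is returned.
    return n and n + sum_to(n - 1)


if __name__ == "__main__":
    print(sum_to(10))
```

A negative `n` would never reach 0, so callers need to rule that out themselves.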

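- Netty source study: ObjectEncoder and ObjectDecoder

bylijinnan

java, netty

The idea behind passing objects in Netty is straightforward:

Data transfer in Netty is based on ChannelBuffer (that is, byte[]);

so serializing an object into a byte stream lets you pass the object through Netty

Correspondingly, recovering the object from a ChannelBuffer is the deserialization step

Netty already provides ObjectEncoder and ObjectDecoder

First, ObjectEncoder

ObjectEncoder is for sending outward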

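- Meaning of the cronExpression in Spring scheduled tasks

chicony

cronExpression

A cron expression has 6 required fields and one optional field, separated by spaces. From left to right, the meaning of each field is shown in the table below:

Meaning | Required | Allowed values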
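The table itself is truncated, so as a rough illustration the sketch below splits an expression into named fields. It assumes the Quartz-style order (seconds, minutes, hours, day of month, month, day of week, optional year); the field names and the helper `split_cron` are illustrative, and no validation of the individual values is attempted.

```python
from typing import Dict

# Assumed Quartz-style field order; the seventh field (year) is optional.
FIELD_NAMES = (
    "seconds",
    "minutes",
    "hours",
    "day_of_month",
    "month",
    "day_of_week",
    "year",
)


def split_cron(expression: str) -> Dict[str, str]:
    """Split a space-separated cron expression into named fields."""
    parts = expression.split()
    if len(parts) not in (6, 7):
        raise ValueError(
            "expected 6 or 7 space-separated fields, got %d" % len(parts)
        )
    # zip stops at the shorter sequence, so "year" is simply absent
    # when only the 6 required fields are given.
    return dict(zip(FIELD_NAMES, parts))


if __name__ == "__main__":
    print(split_cron("0 0 12 * * ?"))
```

Under the assumed field order, `"0 0 12 * * ?"` reads as second 0, minute 0, hour 12, on every day of every month.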

- Configuring JNDI in Nutz

ctrain

JNDI

1. Use JNDI to obtain a given resource:

var ioc = {

dao : {

type :"org.nutz.dao.impl.NutDao",

args : [ {jndi :"jdbc/dataSource"} ]

}

}

With this approach, you only need to configure the data source in the container and inject it into NutDao.

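- Fixing /bin/sh^M: bad interpreter: No such file or directory

daizj

shell

Running a .sh script on Linux fails with /bin/sh^M: bad interpreter: No such file or directory.

Analysis: this is caused by different file formats on different systems. A .sh file edited on Windows may contain invisible characters (the ^M is the carriage return from Windows CRLF line endings), so running it on Linux produces the error above.

Fix:

1) Convert on Windows:

Use an editor such as UltraEdit or EditPlus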
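As an alternative to converting in an editor, the line endings can be rewritten with a short script. This is a minimal sketch, assuming the only problem is CRLF line endings; the helper names `crlf_to_lf` and `fix_script` are hypothetical.

```python
from pathlib import Path


def crlf_to_lf(data: bytes) -> bytes:
    """Replace Windows CRLF line endings with Unix LF."""
    return data.replace(b"\r\n", b"\n")


def fix_script(path: str) -> bool:
    """Rewrite the file in place with LF endings; return True if it changed."""
    script = Path(path)
    original = script.read_bytes()
    converted = crlf_to_lf(original)
    if converted == original:
        return False  # nothing to do, leave the file untouched
    script.write_bytes(converted)
    return True
```

Working on bytes rather than text avoids any guess about the file's character encoding. Calling `fix_script` on the affected script once should be enough for the shebang line to resolve to `/bin/sh` again.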

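- [Repost] Why hate the for loop?

dcj3sjt126com

programmers, reading

Closures for Java have recently become a hot topic. Some prominent figures are drafting a proposal to add closures to a future version of Java. However, the proposed closure syntax and this extension of the language have drawn fierce criticism from many Java programmers.

Not long ago, Elliotte Rusty Harold, a prolific author with dozens of programming books to his name, questioned the value of closures in Java. In particular, he asked, "Why hate the for loop?" [http://ju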

- Handy Android tips

dcj3sjt126com

android

1. Remove the title bar from all Activity screens

Edit AndroidManifest.xml and add android:theme="@android:style/Theme.NoTitleBar" to the application tag

2. Remove the TitleBar and StatusBar from all Activity screens

Edit AndroidManifes

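- Oracle review notes: sequences

eksliang

Oracle, sequences, sequence, Oracle sequence

When reposting, please cite the source: http://eksliang.iteye.com/blog/2098859

1. What sequences are for

A sequence is an object used to generate unique, consecutive numbers

Sequences are generally used to supply primary key values for database tables

2. The syntax for creating a sequence is as follows:

create sequence s_emp

start with 1 -- start value

increment by 1 -- increment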

maxval

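- Programmers with "quality"

gongmeitao

work

10 qualities of the perfect programmer

Each quality of the perfect programmer has a range, and that range depends on the specific problem and context. No perfect programmer can solve every problem (at least not on this planet), and for a particular problem the perfect programmer should have the following qualities:

1. Exceptional intelligence: able to understand problems, to translate and express ideas in clear, readable code, good at analysis, and strong in logical thinking

(Range: solving complex problems in simple ways)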

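- Paged queries with the KeleyiSQLHelper class

hvt

sql, .net, C#, asp.net, hovertree

This article applies to paged queries on SQL Server tables or views with a single-column primary key, and supports sorting by multiple columns. For the latest code of the KeleyiSQLHelper class, download the full solution source from http://hovertree.codeplex.com/SourceControl/latest, or view the class online: http://hovertree.codeplex.com/SourceControl/latest#HoverTree.D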

- SVG tutorial (3): circles, ellipses, lines

天梯梦

svg

SVG circle: <circle>

The <circle> tag creates a circle:

Here is the SVG code:

<svg xmlns="http://www.w3.org/2000/svg" version="1.1">

<circle cx="100" c

- Linked-list stack

luyulong

java, data structures

public class Node {

private Object object;

private Node next;

public Node() {

this.next = null;

this.object = null;

}

public Object getObject() {

return object;

}

public

- Basic data structures and algorithms, part 10: 2-3 search tree

sunwinner

Algorithm, 2-3 search tree

Binary search trees work well for a wide variety of applications, but they have poor worst-case performance. Now we introduce a type of binary search tree where costs are guaranteed to be loga

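- Configuring scheduled tasks in Spring

stunizhengjia

spring, timer

For work I recently needed Spring's scheduled-task feature. Spring turns out to be quite convenient here: you only need to set up a configuration file. I'm recording it here for future reference:

//------------------------ Method invoked by the scheduled task ------------------------------

/**

* Stored-procedure timer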

*/

publi

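- ITeye August technical book trial-read contest: winners announced

ITeye管理员

campaign

The August technical book trial-read contest, run by ITeye together with Broadview (博文视点), has concluded successfully. Many thanks to all users for following and taking part in the event.

Recap of the August trial-read event:

http://webmaster.iteye.com/blog/2102830

The merit-award winners of this trial-read event and their entries are listed below (there were many excellent articles but only limited places; not winning does not mean an entry was not excellent):

Cross-Device Web (《跨终端Web》)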

gleams:http