【ViT 论文笔记】AN IMAGE IS WORTH 16X16 WORDS: TRANSFORMERS FOR IMAGE RECOGNITION AT SCALE

“We show that this reliance on CNNs is not necessary and a pure transformer applied directly to sequences of image patches can perform very well on image classification tasks.” ——完全不依赖CNN

参考:Vision Transformer详解_太阳花的小绿豆的博客-CSDN博客_vision transformer

目录

“INTRODUCTION”

“METHOD”

embedding层结构

Transformer Encoder详解

编辑

MLP Head详解

“Inductive bias”

“Hybrid Architecture.”

详细结构

VIT代码

“HEAD TYPE AND CLASS TOKEN”

“POSITIONAL EMBEDDING”

“3.2 FINE-TUNING AND HIGHER RESOLUTION”

“CONCLUSION”

总结

展望

“INTRODUCTION”

直接将transformer应用于视觉,不做过多的修改

- split an image into patches,每个patch是16x16,因此224x224的图片变成14x14的序列

- 将每一个patch通过一个fc layer,获得一个linear embedding (将patch看成序列中的单词)

- 有监督的训练方式

比resnet弱一点,因为缺少归纳偏置

归纳偏置:

- locality:假设相邻的区域有相邻的特征

- translation equivariance:平移不变性

“METHOD”

首先输入图片分为很多 patch,论文中为 16。

- 将 patch 输入一个 Linear Projection of Flattened Patches 这个 Embedding 层,就会得到一个个向量,通常就称作 tokens。tokens包含position信息以及图像信息。

- 此外还需要加上位置的信息(position embedding),对应着 0~9。

- 紧接着在一系列 token 的前面加上加上一个新的 token,叫做class token,学习其他token的信息(类别token,有点像输入给 Transformer Decoder 的 START,就是对应着 * 那个位置)。class token也是其他所有token做全局平均池化,效果一样。

- 然后输入到 Transformer Encoder 中,对应着右边的图,将 block 重复堆叠 L 次。Transformer Encoder 有多少个输入就有多少个输出。

embedding层结构

对于图像数据而言,其数据格式为[H, W, C]是三维矩阵明显不是Transformer想要的。所以需要先通过一个Embedding层来对数据做个变换。

- 如下图所示,首先将一张图片按给定大小分成一堆Patches。以ViT-B/16为例,将输入图片(224x224)按照16x16大小的Patch进行划分,划分后会得到(224/16)^2=196个Patches。

- 接着通过线性映射将每个Patch映射到一维向量中,以ViT-B/16为例,每个Patche数据shape为[16, 16, 3]通过映射得到一个长度为768的向量(后面都直接称为token)。

[16, 16, 3] -> [768]

在代码实现中,直接通过一个卷积层来实现。 以ViT-B/16为例,直接使用一个卷积核大小为16x16,步距为16,卷积核个数为768的卷积来实现。通过卷积[224, 224, 3] -> [14, 14, 768],然后把H以及W两个维度展平即可[14, 14, 768] -> [196, 768],此时正好变成了一个二维矩阵,正是Transformer想要的。

3. 在输入Transformer Encoder之前注意需要加上[class]token以及Position Embedding。Cat([1, 768], [196, 768]) -> [197, 768]。Position Embedding采用的是一个可训练的参数(1D Pos. Emb.),是直接叠加在tokens上的(add),所以shape一样。

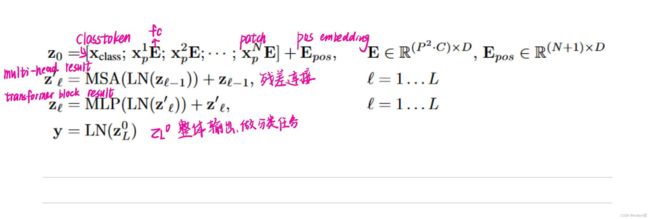

Transformer Encoder详解

Transformer Encoder其实就是重复堆叠Encoder Block L次,下图是我自己绘制的Encoder Block,主要由以下几部分组成:

- Layer Norm,这种Normalization方法主要是针对NLP领域提出的,这里是对每个token进行Norm处理。

- Multi-Head Attention

- Dropout/DropPath,在原论文的代码中是直接使用的Dropout层,在但rwightman实现的代码中使用的是DropPath(stochastic depth),可能后者会更好一点。

- MLP Block,就是全连接+GELU激活函数+Dropout组成也非常简单,需要注意的是第一个全连接层会把输入节点个数翻4倍[197, 768] -> [197, 3072],第二个全连接层会还原回原节点个数[197, 3072] -> [197, 768]

MLP Head详解

这里我们只是需要分类的信息,所以我们只需要提取出[class]token生成的对应结果就行,即[197, 768]中抽取出[class]token对应的[1, 768]。接着我们通过MLP Head得到我们最终的分类结果。MLP Head原论文中说在训练ImageNet21K时是由Linear+tanh激活函数+Linear组成。但是迁移到ImageNet1K上或者你自己的数据上时,只用一个Linear即可。

“Inductive bias”

“In ViT, only MLP layers are local and translationally equivariant, while the self-attention layers are global.” [1]

“Hybrid Architecture.”

“In this hybrid model, the patch embedding projection E (Eq. 1) is applied to patches extracted from a CNN feature map. As a special case, the patches can have spatial size 1x1, which means that the input sequence is obtained by simply flattening the spatial dimensions of the feature map and projecting to the Transformer dimension. The classification input embedding and position embeddings are added as described above.” [2]

224X224->CNN->14X14->transformer

详细结构

VIT代码

“HEAD TYPE AND CLASS TOKEN”

“Comparison of class-token and global average pooling classifiers. Both work similarly well, but require different learning-rates.

----

类标记和全局平均池化分类器的比较。两者工作都很好,但需要不同的学习率。” [3]

“POSITIONAL EMBEDDING”

“1-dimensional positional embedding: Considering the inputs as a sequence of patches in the raster order (default across all other experiments in this paper).” [4]

“3.2 FINE-TUNING AND HIGHER RESOLUTION”

“The Vision Transformer can handle arbitrary sequence lengths (up to memory constraints), however, the pre-trained position embeddings may no longer be meaningful.” [5] Vision Transformer可以处理任意序列长度的(直至内存约束),但是,预训练的位置嵌入可能不再有意义。

“We therefore perform 2D interpolation of the pre-trained position embeddings, according to their location in the original image.” [6] 因此,我们根据它们在原始图像中的位置,对预训练的位置嵌入进行2D插值。

“CONCLUSION”

总结

“We have explored the direct application of Transformers to image recognition.” [7] 我们探索了Transformers在图像识别中的直接应用。

“we interpret an image as a sequence of patches and process it by a standard Transformer encoder as used in NLP.” [8] 我们将一幅图像解释为一系列补丁,并使用NLP中使用的标准Transformer编码器对其进行处理。

展望

1. “One is to apply ViT to other computer vision tasks, such as detection and segmentation.” [9] 一种是将ViT应用于其他计算机视觉任务,如检测和分割。

2. “Another challenge is to continue exploring selfsupervised pre-training methods.” [10] 另一个挑战是继续探索自我监督的预训练方法。